Learning Lucene

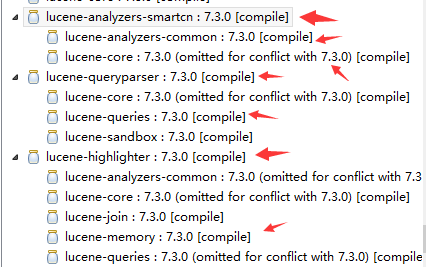

使用版本7.3.0,导入的jar包信息,如下图:

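The demo code below covers CRUD and related operations. Note that the API differs between Lucene versions: some of the code I found online failed with errors when ported to 7.3.0.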

    package com.grand.environment;

    import java.nio.file.Path;
    import java.nio.file.Paths;
    import java.util.Arrays;

    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.CharArraySet;
    import org.apache.lucene.analysis.TokenStream;
    import org.apache.lucene.analysis.cn.smart.SmartChineseAnalyzer;
    import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
    import org.apache.lucene.document.Document;
    import org.apache.lucene.document.Field.Store;
    import org.apache.lucene.document.StringField;
    import org.apache.lucene.document.TextField;
    import org.apache.lucene.index.DirectoryReader;
    import org.apache.lucene.index.IndexReader;
    import org.apache.lucene.index.IndexWriter;
    import org.apache.lucene.index.IndexWriterConfig;
    import org.apache.lucene.index.IndexWriterConfig.OpenMode;
    import org.apache.lucene.index.Term;
    import org.apache.lucene.queryparser.classic.MultiFieldQueryParser;
    import org.apache.lucene.queryparser.classic.QueryParser;
    import org.apache.lucene.search.BooleanClause.Occur;
    import org.apache.lucene.search.BooleanQuery;
    import org.apache.lucene.search.IndexSearcher;
    import org.apache.lucene.search.MatchAllDocsQuery;
    import org.apache.lucene.search.Query;
    import org.apache.lucene.search.ScoreDoc;
    import org.apache.lucene.search.TermQuery;
    import org.apache.lucene.search.TopDocs;
    import org.apache.lucene.search.highlight.Formatter;
    import org.apache.lucene.search.highlight.Highlighter;
    import org.apache.lucene.search.highlight.QueryScorer;
    import org.apache.lucene.search.highlight.Scorer;
    import org.apache.lucene.search.highlight.SimpleFragmenter;
    import org.apache.lucene.search.highlight.SimpleHTMLFormatter;
    import org.apache.lucene.store.Directory;
    import org.apache.lucene.store.FSDirectory;
    import org.junit.Test;

    public class LuceneTest {

        /** Index location shared by all tests. */
        private static final Path INDEX_PATH = Paths.get("D:\\common\\lucene_db\\test_tb");

        /** Builds a document: code and type are indexed verbatim, title and content are analyzed. */
        private static Document newDocument(String code, String type, String title, String content) {
            Document doc = new Document();
            doc.add(new StringField("code", code, Store.YES));
            doc.add(new StringField("type", type, Store.YES));
            doc.add(new TextField("title", title, Store.YES));
            doc.add(new TextField("content", content, Store.YES));
            return doc;
        }

        /**
         * Add documents and build the index.
         */
        @Test
        public void addDocumentAndIndex() throws Exception {
            Analyzer analyzer = new SmartChineseAnalyzer();
            // If no analyzer is configured, StandardAnalyzer is used by default
            IndexWriterConfig conf = new IndexWriterConfig(analyzer);
            // CREATE_OR_APPEND: re-running this test appends the same documents again
            conf.setOpenMode(OpenMode.CREATE_OR_APPEND);
            try (Directory d = FSDirectory.open(INDEX_PATH);
                 IndexWriter indexWriter = new IndexWriter(d, conf)) {
                indexWriter.addDocument(newDocument("0001", "武侠小说", "笑傲江湖",
                        "金庸武侠小说改编电视剧,讲述令狐冲、东方不败,五岳剑派与魔教..."));
                indexWriter.addDocument(newDocument("0002", "武侠小说", "大笑江湖",
                        "小山羊出演的武侠搞笑电影,无相神功等高深武功"));
                indexWriter.addDocument(newDocument("0003", "武侠小说", "笑功震武林",
                        "影片故事发生在民国初年,东北地区形势复杂,土匪势力交错,各占地盘,民不聊生。军阀林国栋与七大退隐江湖的武林高手隐居于此,他们把土匪消灭得干干净净,保了镇民平安。"));
                indexWriter.addDocument(newDocument("0011", "科幻电影", "钢铁侠",
                        "托尼·史塔克(Tony Stark)即钢铁侠(Iron Man),是美国漫威漫画旗下超级英雄,初次登场于《悬疑故事》(Tales of Suspense)第39期(1963年3月),由斯坦·李、赖瑞·理柏、唐·赫克以及杰克·科比联合创造。全名安东尼·爱德华·“托尼”·斯塔克(Anthony Edward “Tony” Stark),是斯塔克工业(STARK INDUSTRIES)的董事长,因于一场阴谋绑架中,胸部遭弹片穿入,生命危在旦夕,为了挽救自己的生命,在同被绑架的物理学家殷森(Yin Sen)的协助下托尼造出了防止弹片侵入心脏的方舟反应炉从而逃过一劫,后又用方舟反应炉作为能量运转的来源,暗中制造了一套高科技战衣杀出重围后逃脱,后参与创立复仇者联盟。"));
                indexWriter.addDocument(newDocument("0012", "科幻电影", "复仇者联盟",
                        "主要讲述的是“复仇者联盟”应运而生。他们各显神通,团结一心,终于战胜了邪恶势力,保证了地球的安全。"));
                indexWriter.addDocument(newDocument("0013", "科幻电影", "银河护卫队",
                        "影片剧情讲述因偷走神秘球体而被疯狂追杀的“星爵”彼得·奎尔被迫结盟四个格格不入的乌合之众——卡魔拉、火箭浣熊、树人格鲁特和毁灭者德拉克斯,他们必须破釜沉舟决一死战,才可能拯救整个银河系."));
                indexWriter.addDocument(newDocument("0014", "科幻电影", "异形",
                        "影片讲述了一艘飞船在执行救援任务时不慎将异形怪物带上船后,船员们与异形搏斗的故事。"));
                indexWriter.commit();
            }
        }

        /**
         * Update a document together with its index entries.
         */
        @Test
        public void updateDocumentAndIndex() throws Exception {
            Analyzer analyzer = new SmartChineseAnalyzer();
            // If no analyzer is configured, StandardAnalyzer is used by default
            IndexWriterConfig conf = new IndexWriterConfig(analyzer);
            conf.setOpenMode(OpenMode.CREATE_OR_APPEND);
            try (Directory d = FSDirectory.open(INDEX_PATH);
                 IndexWriter indexWriter = new IndexWriter(d, conf)) {
                // updateDocument deletes every document matching the term, then adds the new one;
                // fields left out of the new document (here "type") are not carried over
                Term term = new Term("code", "0001");
                Document doc = new Document();
                doc.add(new StringField("code", "0001", Store.YES));
                doc.add(new TextField("title", "笑傲江湖2", Store.YES));
                doc.add(new TextField("content", "金庸武侠小说改编电视剧,讲述令狐冲、东方不败,五岳剑派与魔教...", Store.YES));
                indexWriter.updateDocument(term, doc);
                indexWriter.commit();
            }
        }

        /**
         * Delete a document together with its index entries.
         */
        @Test
        public void removeDocumentAndIndex() throws Exception {
            // If no analyzer is configured, StandardAnalyzer is used by default
            IndexWriterConfig conf = new IndexWriterConfig();
            conf.setOpenMode(OpenMode.CREATE_OR_APPEND);
            try (Directory d = FSDirectory.open(INDEX_PATH);
                 IndexWriter indexWriter = new IndexWriter(d, conf)) {
                // Deleting by a StringField term does not need an analyzer
                Term term = new Term("code", "0001");
                indexWriter.deleteDocuments(term);
                System.out.println("remove ok");
                indexWriter.commit();
            }
        }

        /**
         * Search for documents matching a condition. Shows how different analyzers
         * can be involved and how several queries are combined into one.
         */
        @Test
        public void search() throws Exception {
            CharArraySet stopWords = new CharArraySet(Arrays.asList("啊", "呀", "的", "了", "哟"), true);
            try (Directory d = FSDirectory.open(INDEX_PATH);
                 IndexReader r = DirectoryReader.open(d);
                 Analyzer analyzer = new SmartChineseAnalyzer(stopWords)) {
                IndexSearcher indexSearcher = new IndexSearcher(r);

                // Match against several fields
                QueryParser queryParser = new MultiFieldQueryParser(new String[] {"title", "content"}, analyzer);

                // Option 1: query with logical operators; every clause uses the same analyzer
                // Query query = queryParser.parse("影片讲述 AND type:武侠小说");
                // Query query = queryParser.parse("影片讲述 AND type:科幻电影");

                // Option 2: combine several queries; each query may use a different analyzer
                Query query1 = queryParser.parse("影片讲述");
                // BooleanQuery is now built through BooleanQuery.Builder, unlike in older versions
                BooleanQuery.Builder builder = new BooleanQuery.Builder();
                builder.add(query1, Occur.MUST); // MUST: the clause is required (like AND)
                Query query2 = new TermQuery(new Term("type", "科幻电影"));
                builder.add(query2, Occur.MUST); // also required; MUST_NOT would exclude matches instead
                BooleanQuery query = builder.build();

                // Highlighting setup
                Formatter formatter = new SimpleHTMLFormatter("<font color='red'>", "</font>");
                Scorer fragmentScorer = new QueryScorer(query);
                Highlighter highlighter = new Highlighter(formatter, fragmentScorer);
                highlighter.setTextFragmenter(new SimpleFragmenter(30));

                TopDocs topDocs = indexSearcher.search(query, 10); // top N matching documents
                System.out.println("totalHits = " + topDocs.totalHits); // total number of matches
                ScoreDoc[] scoreDocs = topDocs.scoreDocs;
                // For paging, compute the start and end positions from pageNum and pageSize
                for (int i = 0; i < scoreDocs.length; i++) {
                    int docID = scoreDocs[i].doc;
                    float score = scoreDocs[i].score;
                    Document doc = indexSearcher.doc(docID);
                    System.out.println(scoreDocs[i] + " " + doc.get("code"));

                    // Highlight the matched terms in the stored content
                    String content = doc.get("content");
                    TokenStream tokenStream = analyzer.tokenStream("content", content);
                    // getBestFragment returns null when no fragment matches the query
                    String highLightText = highlighter.getBestFragment(tokenStream, content);
                    System.out.println("score:" + score + ",title:" + doc.get("title")
                            + ",type:" + doc.get("type") + ",content:" + doc.get("content"));
                    System.out.println("highlighted content: " + highLightText + "\n");
                }
            }
        }

        /**
         * Search for all documents.
         */
        @Test
        public void searchAll() throws Exception {
            try (Directory d = FSDirectory.open(INDEX_PATH);
                 IndexReader r = DirectoryReader.open(d)) {
                IndexSearcher indexSearcher = new IndexSearcher(r);

                // MatchAllDocsQuery matches every document
                Query query = new MatchAllDocsQuery();
                TopDocs topDocs = indexSearcher.search(query, 10);
                System.out.println("totalHits = " + topDocs.totalHits);
                ScoreDoc[] scoreDocs = topDocs.scoreDocs;
                for (int i = 0; i < scoreDocs.length; i++) {
                    int docID = scoreDocs[i].doc;
                    float score = scoreDocs[i].score;
                    Document doc = indexSearcher.doc(docID);
                    System.out.println(scoreDocs[i] + " " + doc.get("code"));
                    System.out.println("score:" + score + ",title:" + doc.get("title")
                            + ",content:" + doc.get("content"));
                }
            }
        }

        /**
         * Try out the analyzer.
         */
        @Test
        public void analyzer() throws Exception {
            // Stop words
            CharArraySet stopWords = new CharArraySet(Arrays.asList("啊", "呀"), false);
            String content = "科幻电影";
            // The field name is arbitrary here; the original passed null
            try (Analyzer analyzer = new SmartChineseAnalyzer(stopWords);
                 TokenStream tokenStream = analyzer.tokenStream("content", content)) {
                CharTermAttribute charTermAttribute = tokenStream.addAttribute(CharTermAttribute.class);
                // reset() must be called before incrementToken(); otherwise the error below
                // is thrown (see the TokenStream API documentation):
                // java.lang.IllegalStateException: TokenStream contract violation: reset()/close()
                // call missing, reset() called multiple times, or subclass does not call super.reset().
                // Please see Javadocs of TokenStream class for more information about the correct
                // consuming workflow.
                tokenStream.reset();
                System.out.println("Result:");
                while (tokenStream.incrementToken()) {
                    System.out.println(charTermAttribute.toString());
                }
                tokenStream.end();
            }
        }
    }
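
The comment in the `search()` loop mentions paging with `pageNum` and `pageSize` but does not show the arithmetic. Below is a minimal sketch of that calculation in plain Java. `PageWindow` and its `of` method are hypothetical names introduced here for illustration, not part of Lucene, and the sketch assumes `pageNum` is 1-based and `availableHits` is the length of the `scoreDocs` array.

```java
/** Hypothetical helper (not a Lucene class): turns a 1-based page number into [start, end) offsets. */
final class PageWindow {
    final int start; // index of the first hit on the page (inclusive)
    final int end;   // index just past the last hit on the page (exclusive)

    private PageWindow(int start, int end) {
        this.start = start;
        this.end = end;
    }

    /**
     * @param pageNum       1-based page number (assumption of this sketch)
     * @param pageSize      number of hits per page
     * @param availableHits number of hits actually returned, e.g. scoreDocs.length
     */
    static PageWindow of(int pageNum, int pageSize, int availableHits) {
        if (pageNum < 1 || pageSize < 1 || availableHits < 0) {
            throw new IllegalArgumentException("pageNum and pageSize must be >= 1, availableHits >= 0");
        }
        // Use long arithmetic so a large pageNum * pageSize cannot overflow int
        long rawStart = (long) (pageNum - 1) * pageSize;
        // Clamp both ends so a page past the last hit becomes an empty window
        int start = (int) Math.min(rawStart, availableHits);
        int end = (int) Math.min(rawStart + pageSize, availableHits);
        return new PageWindow(start, end);
    }

    public static void main(String[] args) {
        PageWindow page = PageWindow.of(2, 3, 7);
        System.out.println(page.start + " " + page.end); // prints "3 6"
    }
}
```

With something like this, the search would request at least `pageNum * pageSize` hits from `indexSearcher.search(query, n)` and then loop over `scoreDocs` from `start` up to, but not including, `end`.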