Building a Kubernetes 1.24 cluster with kube-vip

1. Planning

Server plan:

| OS | Kernel | IP | Hostname | Role |
| --- | --- | --- | --- | --- |
| Ubuntu 22.04.1 LTS | 5.15.0-46-generic | 172.16.19.171 | k8s-master01 | control-plane |
| Ubuntu 22.04.1 LTS | 5.15.0-46-generic | 172.16.19.172 | k8s-master02 | control-plane |
| Ubuntu 22.04.1 LTS | 5.15.0-46-generic | 172.16.19.173 | k8s-master03 | control-plane |
| Ubuntu 22.04.1 LTS | 5.15.0-46-generic | 172.16.19.174 | k8s-node01 | worker |
| Ubuntu 22.04.1 LTS | 5.15.0-46-generic | 172.16.19.175 | k8s-node02 | worker |
| Ubuntu 22.04.1 LTS | 5.15.0-46-generic | 172.16.19.176 | k8s-node03 | worker |

Control-plane virtual IP (managed by kube-vip): 172.16.19.170
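
The plan does not say how the nodes resolve each other's hostnames. If there is no internal DNS for these names, static `/etc/hosts` entries on every node are a common choice. Below is a minimal sketch that prints those entries from the table above. The function name `k8s_hosts_entries` is hypothetical.

```bash
# Hypothetical helper: print /etc/hosts entries for the six nodes in the server plan.
# Assumes there is no internal DNS for these hostnames.
k8s_hosts_entries() {
  cat <<'EOF'
172.16.19.171 k8s-master01
172.16.19.172 k8s-master02
172.16.19.173 k8s-master03
172.16.19.174 k8s-node01
172.16.19.175 k8s-node02
172.16.19.176 k8s-node03
EOF
}
```

Usage: run `k8s_hosts_entries | sudo tee -a /etc/hosts` once on each node. Running it twice appends duplicate lines.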

2. Server initialization

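```bash
# Set the hostname: run on every node with its own name from the table (k8s-master01, k8s-node01, ...)
hostnamectl set-hostname k8s-master01

apt-get install -y openssh-server

# Allow root login over SSH: set the following line in /etc/ssh/sshd_config, then restart sshd
vim /etc/ssh/sshd_config
#   PermitRootLogin yes
systemctl restart ssh

apt-get update && apt-get install -y apt-transport-https
# dig is provided by the dnsutils package
apt install -y net-tools tcpdump ntp bridge-utils tree zip wget iftop ethtool dnsutils network-manager
```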

```bash
# Add the Kubernetes apt repository (Aliyun mirror)
wget https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg.asc
cat apt-key.gpg.asc | gpg --dearmor > /usr/share/keyrings/kubernetes-archive-keyring.gpg
echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" \
  > /etc/apt/sources.list.d/kubernetes.list
apt-get update
apt upgrade -y
```

```bash
# Disable swap (by default the kubelet refuses to start while swap is on)
sed -ri 's/.*swap.*/#&/' /etc/fstab
swapoff -a
```
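
The steps above do not load kernel modules or set sysctls. kubeadm's preflight checks, however, typically expect IP forwarding and bridge netfilter to be enabled. Below is a minimal sketch, assuming the usual settings from the Kubernetes container-runtime prerequisites. The function name `write_k8s_prereqs` is hypothetical. It only writes files under an optional root directory, so you can preview the output in a scratch directory first.

```bash
# Hypothetical helper: write the module and sysctl files commonly required by kubeadm.
# ROOT defaults to "" (i.e. the real /etc); pass a scratch directory to preview.
write_k8s_prereqs() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d" || return 1
  # Load overlay (containerd snapshotter) and br_netfilter (bridged traffic) at boot
  printf '%s\n' overlay br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  # Let bridged traffic pass through iptables and enable IPv4 forwarding
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}
```

Usage: run `write_k8s_prereqs` as root, then apply the settings without a reboot using `modprobe overlay && modprobe br_netfilter && sysctl --system`.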

```bash
# Install kubeadm, kubectl and kubelet pinned to 1.24.3
apt install -y kubeadm=1.24.3-00 kubectl=1.24.3-00 kubelet=1.24.3-00
```

3. Install containerd

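```bash
# Download the cri-containerd-cni bundle (containerd, runc, crictl, CNI plugins and the systemd unit)
wget https://github.com/containerd/containerd/releases/download/v1.6.6/cri-containerd-cni-1.6.6-linux-amd64.tar.gz
tar -zxf cri-containerd-cni-1.6.6-linux-amd64.tar.gz -C /

# Generate the default configuration, then edit it
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
vim /etc/containerd/config.toml
```

In the file, make three changes:

- add the private registry at 172.16.91.251,
- replace the base (pause) image `sandbox_image`,
- set `SystemdCgroup = true` for runc.

The complete file: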

```toml
disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2

[cgroup]
  path = ""

[debug]
  address = ""
  format = ""
  gid = 0
  level = ""
  uid = 0

[grpc]
  address = "/run/containerd/containerd.sock"
  gid = 0
  max_recv_message_size = 16777216
  max_send_message_size = 16777216
  tcp_address = ""
  tcp_tls_ca = ""
  tcp_tls_cert = ""
  tcp_tls_key = ""
  uid = 0

[metrics]
  address = ""
  grpc_histogram = false

[plugins]

  [plugins."io.containerd.gc.v1.scheduler"]
    deletion_threshold = 0
    mutation_threshold = 100
    pause_threshold = 0.02
    schedule_delay = "0s"
    startup_delay = "100ms"

  [plugins."io.containerd.grpc.v1.cri"]
    device_ownership_from_security_context = false
    disable_apparmor = false
    disable_cgroup = false
    disable_hugetlb_controller = true
    disable_proc_mount = false
    disable_tcp_service = true
    enable_selinux = false
    enable_tls_streaming = false
    enable_unprivileged_icmp = false
    enable_unprivileged_ports = false
    ignore_image_defined_volumes = false
    max_concurrent_downloads = 3
    max_container_log_line_size = 16384
    netns_mounts_under_state_dir = false
    restrict_oom_score_adj = false
    #sandbox_image = "k8s.gcr.io/pause:3.6"
    sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7"
    selinux_category_range = 1024
    stats_collect_period = 10
    stream_idle_timeout = "4h0m0s"
    stream_server_address = "127.0.0.1"
    stream_server_port = "0"
    systemd_cgroup = false
    tolerate_missing_hugetlb_controller = true
    unset_seccomp_profile = ""

    [plugins."io.containerd.grpc.v1.cri".cni]
      bin_dir = "/opt/cni/bin"
      conf_dir = "/etc/cni/net.d"
      conf_template = ""
      ip_pref = ""
      max_conf_num = 1

    [plugins."io.containerd.grpc.v1.cri".containerd]
      default_runtime_name = "runc"
      disable_snapshot_annotations = true
      discard_unpacked_layers = false
      ignore_rdt_not_enabled_errors = false
      no_pivot = false
      snapshotter = "overlayfs"

      [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
        base_runtime_spec = ""
        cni_conf_dir = ""
        cni_max_conf_num = 0
        container_annotations = []
        pod_annotations = []
        privileged_without_host_devices = false
        runtime_engine = ""
        runtime_path = ""
        runtime_root = ""
        runtime_type = ""

        [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

        [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
          base_runtime_spec = ""
          cni_conf_dir = ""
          cni_max_conf_num = 0
          container_annotations = []
          pod_annotations = []
          privileged_without_host_devices = false
          runtime_engine = ""
          runtime_path = ""
          runtime_root = ""
          runtime_type = "io.containerd.runc.v2"

          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
            BinaryName = ""
            CriuImagePath = ""
            CriuPath = ""
            CriuWorkPath = ""
            IoGid = 0
            IoUid = 0
            NoNewKeyring = false
            NoPivotRoot = false
            Root = ""
            ShimCgroup = ""
            SystemdCgroup = true  # must match the kubelet's cgroupDriver: systemd

      [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]
        base_runtime_spec = ""
        cni_conf_dir = ""
        cni_max_conf_num = 0
        container_annotations = []
        pod_annotations = []
        privileged_without_host_devices = false
        runtime_engine = ""
        runtime_path = ""
        runtime_root = ""
        runtime_type = ""

        [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

    [plugins."io.containerd.grpc.v1.cri".image_decryption]
      key_model = "node"

    [plugins."io.containerd.grpc.v1.cri".registry]

      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]

        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
          endpoint = ["https://registry-1.docker.io"]  # up to here: generated by default; the entries below are added

        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."172.16.91.251"]
          endpoint = ["http://172.16.91.251"]

      [plugins."io.containerd.grpc.v1.cri".registry.configs]

        [plugins."io.containerd.grpc.v1.cri".registry.configs."172.16.91.251".tls]
          insecure_skip_verify = true

        [plugins."io.containerd.grpc.v1.cri".registry.configs."172.16.91.251".auth]
          username = "admin"
          password = "Harbor12345"

    # Original default registry section, commented out and replaced by the one above
    #[plugins."io.containerd.grpc.v1.cri".registry]
    #  config_path = ""
    #  [plugins."io.containerd.grpc.v1.cri".registry.auths]
    #  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    #  [plugins."io.containerd.grpc.v1.cri".registry.headers]
    #  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]

    #[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
    #  tls_cert_file = ""
    #  tls_key_file = ""

  [plugins."io.containerd.internal.v1.opt"]
    path = "/opt/containerd"

  [plugins."io.containerd.internal.v1.restart"]
    interval = "10s"

  [plugins."io.containerd.internal.v1.tracing"]
    sampling_ratio = 1.0
    service_name = "containerd"

  [plugins."io.containerd.metadata.v1.bolt"]
    content_sharing_policy = "shared"

  [plugins."io.containerd.monitor.v1.cgroups"]
    no_prometheus = false

  [plugins."io.containerd.runtime.v1.linux"]
    no_shim = false
    runtime = "runc"
    runtime_root = ""
    shim = "containerd-shim"
    shim_debug = false

  [plugins."io.containerd.runtime.v2.task"]
    platforms = ["linux/amd64"]
    sched_core = false

  [plugins."io.containerd.service.v1.diff-service"]
    default = ["walking"]

  [plugins."io.containerd.service.v1.tasks-service"]
    rdt_config_file = ""

  [plugins."io.containerd.snapshotter.v1.aufs"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.btrfs"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.devmapper"]
    async_remove = false
    base_image_size = ""
    discard_blocks = false
    fs_options = ""
    fs_type = ""
    pool_name = ""
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.native"]
    root_path = ""

  [plugins."io.containerd.snapshotter.v1.overlayfs"]
    root_path = ""
    upperdir_label = false

  [plugins."io.containerd.snapshotter.v1.zfs"]
    root_path = ""

  [plugins."io.containerd.tracing.processor.v1.otlp"]
    endpoint = ""
    insecure = false
    protocol = ""

[proxy_plugins]

[stream_processors]

  [stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]
    accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]
    args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
    env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
    path = "ctd-decoder"
    returns = "application/vnd.oci.image.layer.v1.tar"

  [stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]
    accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]
    args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]
    env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]
    path = "ctd-decoder"
    returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]
  "io.containerd.timeout.bolt.open" = "0s"
  "io.containerd.timeout.shim.cleanup" = "5s"
  "io.containerd.timeout.shim.load" = "5s"
  "io.containerd.timeout.shim.shutdown" = "3s"
  "io.containerd.timeout.task.state" = "2s"

[ttrpc]
  address = ""
  gid = 0
  uid = 0
```

Start containerd:

```bash
systemctl daemon-reload
systemctl enable containerd --now
systemctl status containerd
```
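
Two of the hand edits to config.toml matter most for Kubernetes: `sandbox_image` and `SystemdCgroup`. When preparing six nodes, it can help to script them. Below is a minimal sketch. It assumes the file was produced by `containerd config default` for containerd 1.6 and that GNU `sed -i` is available. The function name `patch_containerd_config` is hypothetical. The registry entries still have to be added by hand.

```bash
# Hypothetical helper: apply the sandbox_image and SystemdCgroup edits with sed.
# Arguments: [config file] [pause image]; defaults follow this guide.
patch_containerd_config() {
  local file="${1:-/etc/containerd/config.toml}"
  local pause="${2:-registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7}"
  # Replace the active sandbox_image line (commented lines start with '#' and are skipped)
  sed -i "s#^\([[:space:]]*\)sandbox_image = .*#\1sandbox_image = \"$pause\"#" "$file" || return 1
  # Switch runc to the systemd cgroup driver, matching kubelet's cgroupDriver: systemd
  sed -i 's#^\([[:space:]]*\)SystemdCgroup = false#\1SystemdCgroup = true#' "$file"
}
```

Usage: run `patch_containerd_config && systemctl restart containerd` on each node.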

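Install nerdctl:

```bash
wget https://github.com/containerd/nerdctl/releases/download/v0.22.2/nerdctl-0.22.2-linux-amd64.tar.gz
# Extract into a directory on PATH (the file name must match the version downloaded above)
tar -zxf nerdctl-0.22.2-linux-amd64.tar.gz -C /usr/local/bin

# Quick test: run nginx in the background, then list containers
nerdctl run -d -p 80:80 --name=web --restart=always nginx:latest
nerdctl container ls
nerdctl ps
```

4. Install kube-vip, kubeadm, kubelet and kubectl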

```bash
# Set the VIP address and the NIC that will carry it
export VIP=172.16.19.170
export INTERFACE=ens160

ctr -n k8s.io image pull docker.io/plndr/kube-vip:v0.5.0
ctr -n k8s.io run --rm --net-host docker.io/plndr/kube-vip:v0.5.0 vip \
  /kube-vip manifest pod \
  --interface "$INTERFACE" \
  --vip "$VIP" \
  --controlplane \
  --services \
  --arp \
  --leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml
```

Every control-plane node needs this kube-vip.yaml file in /etc/kubernetes/manifests.

Reference: https://kube-vip.io/docs/installation/static/#kube-vip-as-ha-load-balancer-or-both
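
Once the control plane is up, the current kube-vip leader should hold the VIP as an extra address on `$INTERFACE`. Below is a minimal sketch for checking that. It assumes the one-line-per-address format of `ip -o -4 addr show`. The function name `has_ipv4` is hypothetical.

```bash
# Hypothetical helper: succeed if an IPv4 address appears in `ip -o -4 addr show`
# output read from stdin.
has_ipv4() {
  # Field 4 of each record is ADDRESS/PREFIX; drop the prefix and compare exactly
  awk '{ print $4 }' | cut -d/ -f1 | grep -qxF "$1"
}
```

Usage: `ip -o -4 addr show dev "$INTERFACE" | has_ipv4 "$VIP" && echo "VIP is on this node"`. Exactly one control-plane node is expected to report it at any time.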

Contents of kubeadm.yaml:

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.19.171 # internal IP of this node
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock # containerd's Unix socket
  imagePullPolicy: IfNotPresent
  name: k8s-master01
  taints: # taint the master so that application pods are not scheduled on it
  - effect: "NoSchedule"
    key: "node-role.kubernetes.io/master"
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs # kube-proxy mode (has no effect when the kube-proxy addon phase is skipped)
---
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.24.3
controlPlaneEndpoint: 172.16.19.170:6443 # control-plane endpoint: the kube-vip VIP
apiServer:
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
  certSANs: # extra names/IPs for the API server certificate: the VIP and the other masters
  - 127.0.0.1
  - 172.16.19.170
  - k8s-master01
  - k8s-master02
  - k8s-master03
  - 192.168.31.31 # not in the server plan above; adjust to your environment
  - 192.168.31.32
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16 # pod subnet
scheduler: {}
---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
cgroupDriver: systemd # cgroup driver; must match SystemdCgroup = true in containerd
logging: {}
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
```

Initialize Kubernetes without kube-proxy (Cilium replaces it in the next step):

```bash
kubeadm init --skip-phases=addon/kube-proxy --upload-certs --config kubeadm.yaml
```

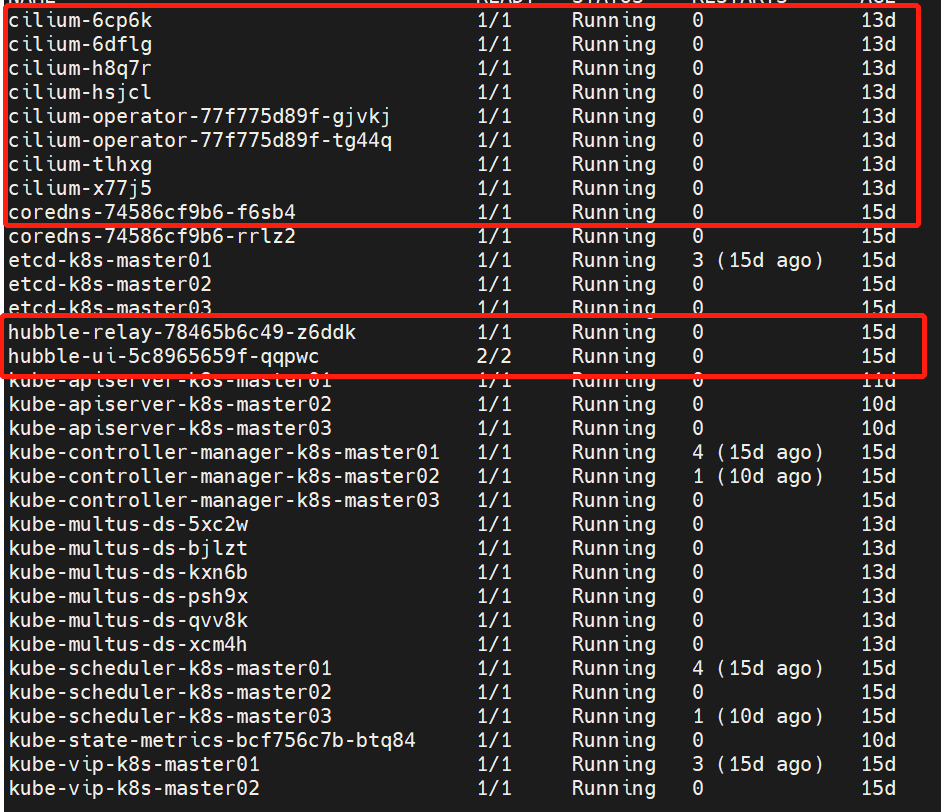
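
`kubeadm init` prints ready-made `kubeadm join` commands, as in the screenshot above. If they are lost, the kubeadm join documentation describes how to recompute the `--discovery-token-ca-cert-hash` value from the cluster CA. Below is a minimal sketch of that pipeline. It assumes an RSA CA key, which is what kubeadm generates by default. The function name `ca_cert_hash` is hypothetical.

```bash
# Hypothetical helper: compute the discovery hash for `kubeadm join`
# (SHA-256 of the DER-encoded public key of the cluster CA certificate).
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Usage:

1. On k8s-master01, pass `sha256:$(ca_cert_hash)` as `--discovery-token-ca-cert-hash` to `kubeadm join 172.16.19.170:6443 --token abcdef.0123456789abcdef`.
2. On k8s-master02 and k8s-master03, also add `--control-plane --certificate-key <key>`, where `<key>` is the key printed by `--upload-certs`.

The bootstrap token above expires after 24h, and the uploaded certificates after two hours. To issue fresh ones, use `kubeadm token create --print-join-command` and `kubeadm init phase upload-certs --upload-certs`.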

5. Install Cilium (no kube-proxy)

Install Helm 3:

```bash
curl https://baltocdn.com/helm/signing.asc | sudo apt-key add -
echo "deb https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list
sudo apt-get update && sudo apt-get install -y helm
helm repo add cilium https://helm.cilium.io/
helm repo update
```

Reference: https://docs.cilium.io/en/stable/gettingstarted/kubeproxy-free/

Install Cilium:

```bash
# Without kube-proxy, Cilium must reach the API server directly, so point it at the kube-vip VIP
API_SERVER_IP=172.16.19.170
API_SERVER_PORT=6443
helm template cilium cilium/cilium --version 1.12.1 \
  --namespace kube-system \
  --set kubeProxyReplacement=strict \
  --set k8sServiceHost=${API_SERVER_IP} \
  --set k8sServicePort=${API_SERVER_PORT} \
  --set hubble.relay.enabled=true \
  --set hubble.ui.enabled=true \
  --set bpf.masquerade=true \
  --set prometheus.enabled=true \
  --set operator.prometheus.enabled=true \
  --set hubble.enabled=true \
  --set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,http}" \
  > cilium-1.12.1.yaml
kubectl apply -f cilium-1.12.1.yaml
```

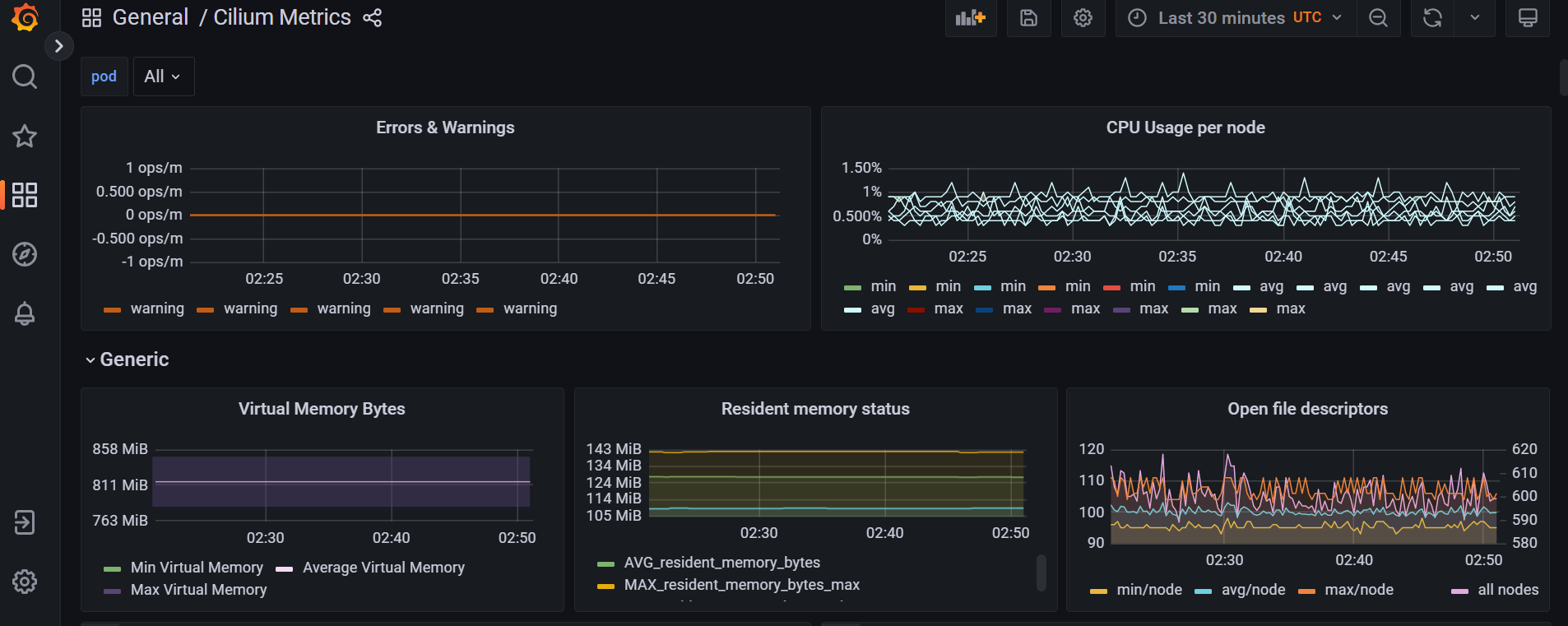
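
The Cilium kube-proxy-free guide checks the result with `kubectl -n kube-system exec ds/cilium -- cilium status | grep KubeProxyReplacement`. Below is a minimal sketch that extracts just the mode. It assumes the 1.12-style status line `KubeProxyReplacement:   Strict   [...]`. The function name `kpr_mode` is hypothetical.

```bash
# Hypothetical helper: print the KubeProxyReplacement mode from `cilium status`
# output read from stdin (e.g. "Strict" when kube-proxy is fully replaced).
kpr_mode() {
  awk -F': *' '/^KubeProxyReplacement:/ { split($2, f, /[ \t]+/); print f[1]; exit }'
}
```

Usage: `kubectl -n kube-system exec ds/cilium -- cilium status | kpr_mode` should print `Strict` with the Helm values above.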

Check Prometheus and Grafana:

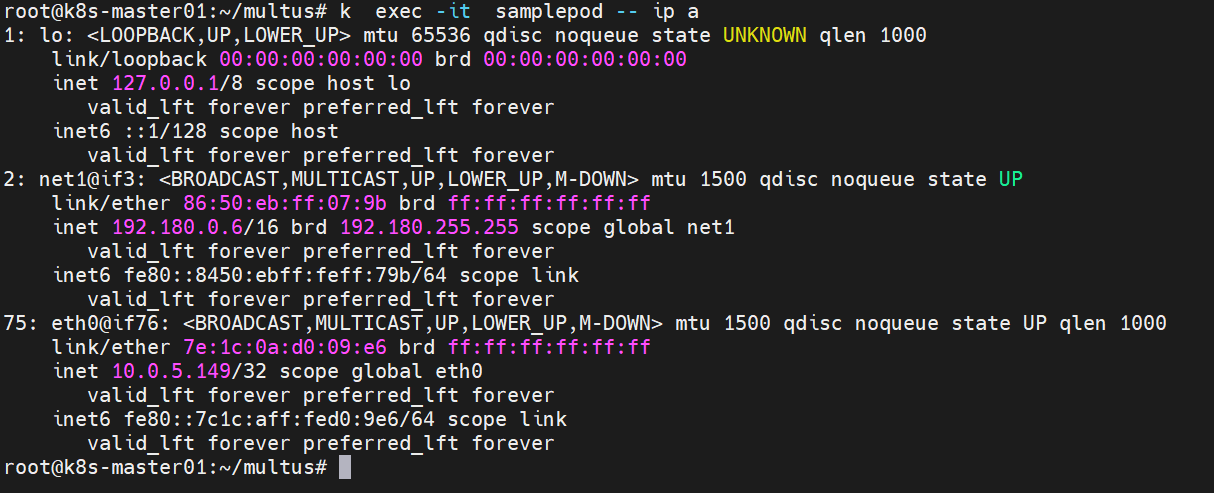

6. Multiple NICs with Multus

config.yaml, a NetworkAttachmentDefinition that adds a macvlan interface on the host NIC ens192:

```yaml
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: macvlan-conf
spec:
  config: '{
      "cniVersion": "0.3.0",
      "type": "macvlan",
      "master": "ens192",
      "mode": "bridge",
      "ipam": {
        "type": "host-local",
        "subnet": "192.180.0.0/16",
        "rangeStart": "192.180.0.1",
        "rangeEnd": "192.180.255.255",
        "routes": [
          { "dst": "0.0.0.0/0" }
        ],
        "gateway": "192.180.0.1"
      }
    }'
```

multus-daemonset.yml (the Multus quickstart manifest):

```yaml
# Note:
#   This deployment file is designed for 'quickstart' of multus, easy installation to test it,
#   hence this deployment yaml does not care about following things intentionally.
#     - various configuration options
#     - minor deployment scenario
#     - upgrade/update/uninstall scenario
#   Multus team understand users deployment scenarios are diverse, hence we do not cover
#   comprehensive deployment scenario. We expect that it is covered by each platform deployment.
---
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: network-attachment-definitions.k8s.cni.cncf.io
spec:
  group: k8s.cni.cncf.io
  scope: Namespaced
  names:
    plural: network-attachment-definitions
    singular: network-attachment-definition
    kind: NetworkAttachmentDefinition
    shortNames:
    - net-attach-def
  versions:
    - name: v1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          description: 'NetworkAttachmentDefinition is a CRD schema specified by the Network Plumbing
            Working Group to express the intent for attaching pods to one or more logical or physical
            networks. More information available at: https://github.com/k8snetworkplumbingwg/multi-net-spec'
          type: object
          properties:
            apiVersion:
              description: 'APIVersion defines the versioned schema of this representation
                of an object. Servers should convert recognized schemas to the
                latest internal value, and may reject unrecognized values. More info:
                https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources'
              type: string
            kind:
              description: 'Kind is a string value representing the REST resource this
                object represents. Servers may infer this from the endpoint the client
                submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds'
              type: string
            metadata:
              type: object
            spec:
              description: 'NetworkAttachmentDefinition spec defines the desired state of a network attachment'
              type: object
              properties:
                config:
                  description: 'NetworkAttachmentDefinition config is a JSON-formatted CNI configuration'
                  type: string
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: multus
rules:
  - apiGroups: ["k8s.cni.cncf.io"]
    resources:
      - '*'
    verbs:
      - '*'
  - apiGroups:
      - ""
    resources:
      - pods
      - pods/status
    verbs:
      - get
      - update
  - apiGroups:
      - ""
      - events.k8s.io
    resources:
      - events
    verbs:
      - create
      - patch
      - update
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: multus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: multus
subjects:
- kind: ServiceAccount
  name: multus
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: multus
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: multus-cni-config
  namespace: kube-system
  labels:
    tier: node
    app: multus
data:
  # NOTE: If you'd prefer to manually apply a configuration file, you may create one here.
  # In the case you'd like to customize the Multus installation, you should change the arguments to the Multus pod
  # change the "args" line below from
  # - "--multus-conf-file=auto"
  # to:
  # "--multus-conf-file=/tmp/multus-conf/70-multus.conf"
  # Additionally -- you should ensure that the name "70-multus.conf" is the alphabetically first name in the
  # /etc/cni/net.d/ directory on each node, otherwise, it will not be used by the Kubelet.
  cni-conf.json: |
    {
      "name": "multus-cni-network",
      "type": "multus",
      "capabilities": {
        "portMappings": true
      },
      "delegates": [
        {
          "cniVersion": "0.3.1",
          "name": "default-cni-network",
          "plugins": [
            {
              "type": "flannel",
              "name": "flannel.1",
              "delegate": {
                "isDefaultGateway": true,
                "hairpinMode": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      ],
      "kubeconfig": "/etc/cni/net.d/multus.d/multus.kubeconfig"
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-multus-ds
  namespace: kube-system
  labels:
    tier: node
    app: multus
    name: multus
spec:
  selector:
    matchLabels:
      name: multus
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        tier: node
        app: multus
        name: multus
    spec:
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      - operator: Exists
        effect: NoExecute
      serviceAccountName: multus
      containers:
      - name: kube-multus
        image: ghcr.io/k8snetworkplumbingwg/multus-cni:stable
        command: ["/entrypoint.sh"]
        args:
        - "--multus-conf-file=auto"
        - "--cni-version=0.3.1"
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        volumeMounts:
        - name: cni
          mountPath: /host/etc/cni/net.d
        - name: cnibin
          mountPath: /host/opt/cni/bin
        - name: multus-cfg
          mountPath: /tmp/multus-conf
      initContainers:
        - name: install-multus-binary
          image: ghcr.io/k8snetworkplumbingwg/multus-cni:stable
          command:
            - "cp"
            - "/usr/src/multus-cni/bin/multus"
            - "/host/opt/cni/bin/multus"
          resources:
            requests:
              cpu: "10m"
              memory: "15Mi"
          securityContext:
            privileged: true
          volumeMounts:
            - name: cnibin
              mountPath: /host/opt/cni/bin
              mountPropagation: Bidirectional
      terminationGracePeriodSeconds: 10
      volumes:
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: cnibin
          hostPath:
            path: /opt/cni/bin
        - name: multus-cfg
          configMap:
            name: multus-cni-config
            items:
            - key: cni-conf.json
              path: 70-multus.conf
```

With `--multus-conf-file=auto`, Multus builds its configuration from the CNI config already present in /etc/cni/net.d (Cilium's, in this cluster). The flannel delegate in the ConfigMap is therefore used only if you switch to the file-based option described in the ConfigMap comment.

test.yaml, a test Pod that requests the macvlan-conf network through an annotation:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: samplepod
  annotations:
    k8s.v1.cni.cncf.io/networks: macvlan-conf
spec:
  containers:
  - name: samplepod
    command: ["/bin/ash", "-c", "trap : TERM INT; sleep infinity & wait"]
    image: alpine
```
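
The three manifests depend on each other. The CRD in multus-daemonset.yml must exist before config.yaml (a NetworkAttachmentDefinition) can be created, and the pod needs both. Below is a minimal sketch of that order. The function name `apply_multus_demo` and the `KUBECTL` override are hypothetical; the override makes a dry run possible.

```bash
# Hypothetical helper: apply the Multus demo manifests in dependency order.
# Set KUBECTL=echo to print the commands instead of running them.
apply_multus_demo() {
  local kubectl_bin="${KUBECTL:-kubectl}"
  # CRD, RBAC, ConfigMap and DaemonSet
  "$kubectl_bin" apply -f multus-daemonset.yml || return 1
  # Wait for the Multus DaemonSet to be ready on every node
  "$kubectl_bin" rollout status ds/kube-multus-ds -n kube-system --timeout=180s || return 1
  # The NetworkAttachmentDefinition needs the CRD created above
  "$kubectl_bin" apply -f config.yaml || return 1
  "$kubectl_bin" apply -f test.yaml
}
```

Once samplepod is running, `kubectl exec samplepod -- ip addr show net1` should show the macvlan interface. Multus names the first additional interface `net1` by default.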

Zhejiang Public Security Bureau record No. 33010602011771
