hadoop分布式搭建

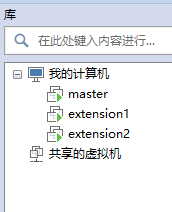

1、新建三台机器,分别为:

hadoop分布式搭建至少需要三台机器:

- master

- extension1

- extension2

本文利用在VMware Workstation下安装Linux centOS,安装教程请看:

2、编辑ip

用ifconfig查看本机ip:

[root@master ~]# ifconfig

eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.204.128 netmask 255.255.255.0 broadcast 192.168.204.255

inet6 fe80::20c:29ff:fe43:53ea prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:43:53:ea txqueuelen 1000 (Ethernet)

RX packets 86219 bytes 123262936 (117.5 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 22010 bytes 1501252 (1.4 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 0 (Local Loopback)

RX packets 188 bytes 33400 (32.6 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 188 bytes 33400 (32.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255

ether 00:00:00:00:00:00 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

extension1 和 extension2 同样如此,可以得到三台机器的ip分别为:

master:192.168.204.128

extension1:192.168.204.129

extension2:192.168.204.130

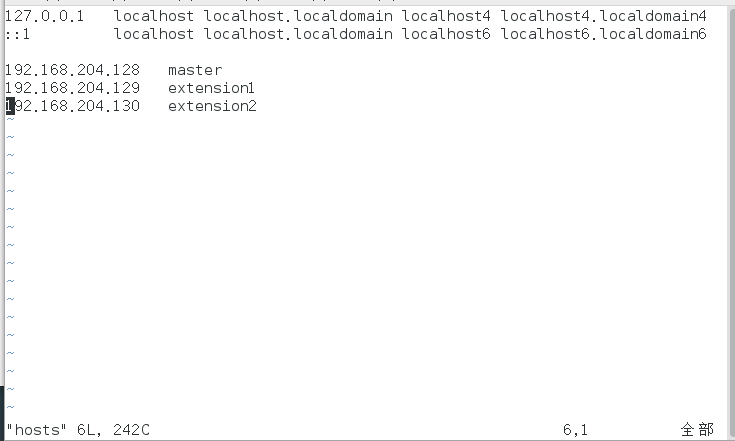

切换到/etc/hosts修改配置,隔一行在后面加上:

192.168.204.128 master

192.168.204.129 extension1

192.168.204.130 extension2

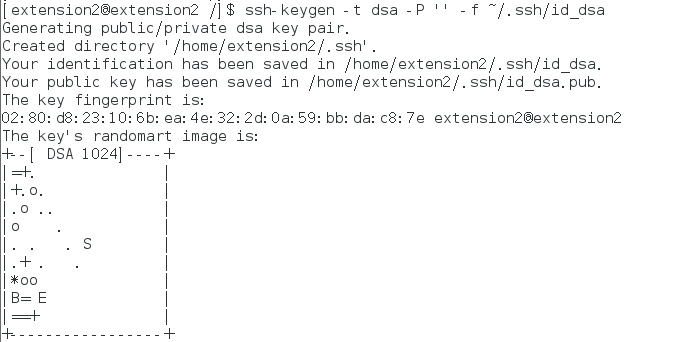

3、创建SSH密匙

创建密匙命令:

[master@master root]$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

得到样式如下:

extension1 和 extension2 同样操作

切换到密匙文件夹:/home/master/.ssh/

4、复制密匙成新文件

输入命令:

[master@master .ssh]$ cat id_dsa.pub >> authorized_keys

会在当前生成新的文件:

authorized_keys

extension1 和 extension2 同样操作

5、测试密匙

测试密匙能否使用:

- ssh localhost

- yes

- 输入密码

- exit

extension1 和 extension2 同样操作

6、extension复制master密匙

extension复制master密匙达到免密码登陆,在三台机器里面都输入下面命令:

[extension1@extension1 .ssh]$ scp master@master:~/.ssh/id_dsa.pub ./master_dsa.pub

cat master_dsa.pub >> authorized_keys

chmod 600 authorized_keys

extension1 和 extension2 同样操作

7、实现免密匙登陆

master能对master、extension1、extension2免密匙登陆:

ssh master@master

ssh extension1@extension1

ssh extension2@extension2

extension1 和 extension2 同样操作

8、下载解压安装包

查看电脑位数: getconf LONG_BIT

java地址(jdk1.7.0_09x64.tar.gz):

http://pan.baidu.com/s/1hs2uX1q

hadoop地址(hadoop-0.20.2.tar.gz):

http://vdisk.weibo.com/s/zNZl3

新建文件夹:

- 切换到 root

- 新建文件夹: mkdir /usr/program

- 放入安装包

- 解压

解压命令:

tar -zxvf hadoop-0.20.2.tar.gz

tar xvf jdk1.7.0_09x64.tar.gz

extension1 和 extension2 同样操作

9、java环境配置

打开 /etc/profile 配置文件,在最末尾加入:

# set java environment

exportJAVA_HOME=/usr/program/jdk1.7.0_09x64

exportJRE_HOME=/usr/program/jdk1.7.0_09x64/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

export PATH=/usr/program/jdk1.7.0_09x64/bin

保存退出后,跟新配置文件,让配置文件生效:

source /etc/profile

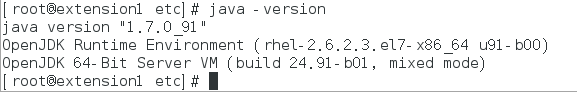

查看环境是否配置成功:

java -version

extension1 和 extension2 同样操作

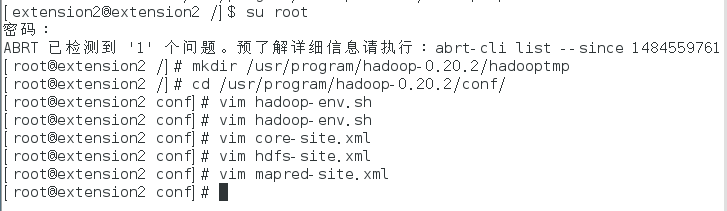

10、hadoop环境配置

创建一个文件夹:

mkdir /usr/program/hadoop-0.20.2/hadooptmp

进入文件夹:

/usr/program/hadoop-0.20.2/conf/

hadoop-env.sh:

export JAVA_HOME=/usr/program/jdk1.7.0_09x64

core-site.xml:

打开文件:

vim core-site.xml

里面的代码改成:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://master:9000/</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/program/hadoop-0.20.2/hadooptmp</value>

</property>

</configuration>

hdfs-site.xml:

打开文件:

vim hdfs-site.xml

写入以下代码:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

mapred-site.xml:

打开文件:

vim mapred-site.xml

写入以下代码:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>master:9001</value>

</property>

</configuration>

extension1 和 extension2 同样操作

11、环境配置

打开文件:

/etc/profile

在最后面加入:

#set hadoop

export HADOOP_HOME=/usr/program/hadoop-0.20.2

export PATH=$HADOOP_HOME/bin:$PATH

使配置文件生效:

source /etc/profile

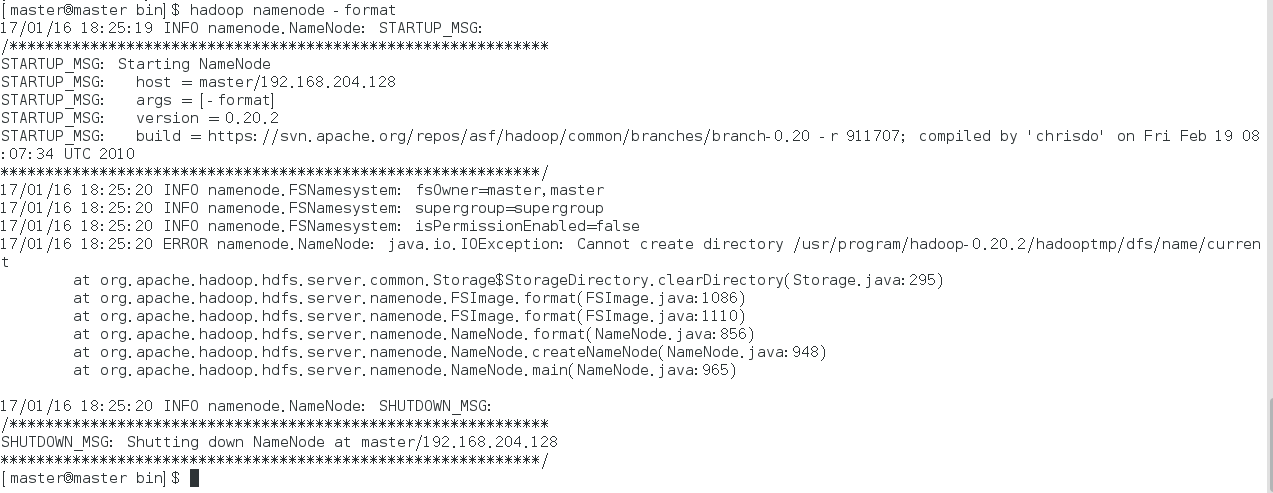

12、启动hadoop

进入文件夹:

/usr/program/hadoop-0.20.2/bin

格式化namenode:

hadoop namenode -format

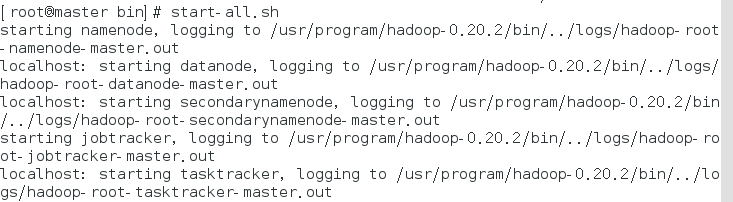

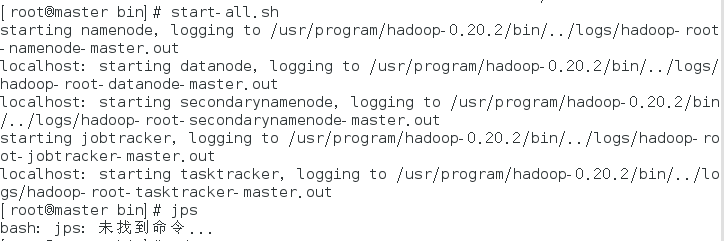

打开hadoop:

start-all.sh

输入 jps :

但是,如果输入口输入 jps 出现:

bash: jps: 未找到命令...

方法一:

查看java目录:

which java

删除这个指引:

rm /bin/java

建立新的指引:

ln -s /usr/program/jdk1.7.0_25/bin/java /bin/java

方法二:

经过排查发现是:

jps 命令是在java解压包中的 /bin/ 文件夹里面,是一个可执行文件,但是可以用另一个方法来看是否完成启动:

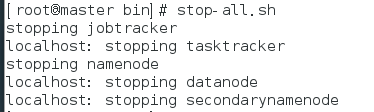

stop-all.sh

但是用另一个方法查看也行,浏览器输入:

192.168.204.128:50030

浏览器输入:

192.168.204.128:50070

该完成的时候还是会完成的,切记java下载的版本下载为:

- jdk1.8.0_101

- jdk1.7.0_09

- jdk1.6.0_13

- jdk1.7.0_21

oracle地址:

http://www.oracle.com/technetwork/cn/java/javase/downloads/jdk8-downloads-2133151-zhs.html

检查安装的JAVA包:

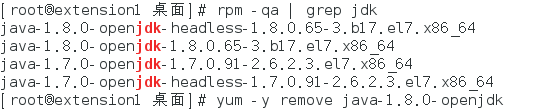

rpm -qa | grep jdk

卸载相应的包:

yum -y remove java-1.8.0-openjdk-headless.x86_64

浙公网安备 33010602011771号

浙公网安备 33010602011771号