Installing the IK Analyzer for Elasticsearch

Installing the IK analyzer

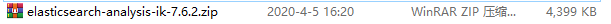

Installation

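Extract the archive into the `plugins` directory of your Elasticsearch installation. The plugin version must match your Elasticsearch version exactly (7.6.2 in this example). From inside the `plugins` directory, unzip it with:

```shell
unzip elasticsearch-analysis-ik-7.6.2.zip -d ik-analyzer
```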

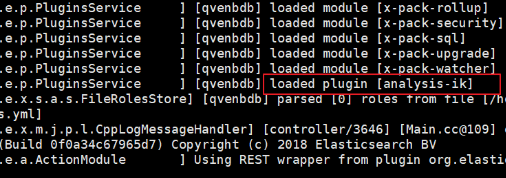

Then restart Elasticsearch.
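To confirm that the node actually loaded the plugin after the restart, you can query the `_cat/plugins` API. The Python sketch below uses only the standard library and rests on two assumptions you should check against your own setup: a local node at http://localhost:9200 with security disabled, and the IK plugin reporting its component name as `analysis-ik`. The helper names `fetch_cat_plugins` and `ik_plugin_loaded` are hypothetical.

```python
import json
import urllib.request

# Assumption: a local node with security disabled; adjust for your cluster.
ES_URL = "http://localhost:9200"


def fetch_cat_plugins(base_url=ES_URL):
    """Return the parsed output of GET _cat/plugins?format=json.

    The response is a list with one entry per (node, plugin) pair.
    """
    with urllib.request.urlopen(f"{base_url}/_cat/plugins?format=json") as resp:
        return json.load(resp)


def ik_plugin_loaded(cat_plugins, component="analysis-ik"):
    """True if any node lists the given plugin component.

    The default component name "analysis-ik" is an assumption.
    """
    return any(entry.get("component") == component for entry in cat_plugins)
```

Usage: `print(ik_plugin_loaded(fetch_cat_plugins()))`. Keeping the HTTP call separate from the check makes the check easy to run against saved output.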

Testing

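Don't worry about the syntax for now; let's just run a quick test.

Enter the following request in the Kibana console. It runs the ik_max_word analyzer on the sentence 我是中国人 ("I am Chinese"):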

```
POST _analyze
{
  "analyzer": "ik_max_word",
  "text": "我是中国人"
}
```
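If you would rather send this request from a script than from Kibana, a minimal Python sketch using only the standard library could look like the following. It assumes a local node at http://localhost:9200 with security disabled; the function names `build_analyze_request` and `analyze` are hypothetical.

```python
import json
import urllib.request


def build_analyze_request(text, analyzer="ik_max_word", base_url="http://localhost:9200"):
    """Build a POST _analyze request equivalent to the Kibana console call."""
    body = json.dumps({"analyzer": analyzer, "text": text}, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/_analyze",
        data=body,
        # Elasticsearch 6+ rejects request bodies without a JSON Content-Type.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def analyze(text, analyzer="ik_max_word", base_url="http://localhost:9200"):
    """Send the request and return the parsed JSON response (needs a running node)."""
    with urllib.request.urlopen(build_analyze_request(text, analyzer, base_url)) as resp:
        return json.load(resp)
```

Usage: `analyze("我是中国人")`. Passing `analyzer="ik_smart"` selects IK's coarse-grained mode instead.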

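Running it returns the result below. Because ik_max_word splits text at the finest granularity, the output contains overlapping words: 中国人 ("Chinese people") as well as 中国 ("China") and 国人 ("compatriots").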

```json
{
  "tokens": [
    { "token": "我", "start_offset": 0, "end_offset": 1, "type": "CN_CHAR", "position": 0 },
    { "token": "是", "start_offset": 1, "end_offset": 2, "type": "CN_CHAR", "position": 1 },
    { "token": "中国人", "start_offset": 2, "end_offset": 5, "type": "CN_WORD", "position": 2 },
    { "token": "中国", "start_offset": 2, "end_offset": 4, "type": "CN_WORD", "position": 3 },
    { "token": "国人", "start_offset": 3, "end_offset": 5, "type": "CN_WORD", "position": 4 }
  ]
}
```
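To see how the offsets relate to the input, the Python sketch below loads the response shown above, slices the original sentence with each token's `start_offset`/`end_offset`, and lists the tokens whose character ranges overlap. The helper names `token_spans` and `overlapping_tokens` are hypothetical.

```python
import json

# The _analyze response shown above (ik_max_word applied to "我是中国人").
RESPONSE = json.loads("""
{"tokens": [
  {"token": "我", "start_offset": 0, "end_offset": 1, "type": "CN_CHAR", "position": 0},
  {"token": "是", "start_offset": 1, "end_offset": 2, "type": "CN_CHAR", "position": 1},
  {"token": "中国人", "start_offset": 2, "end_offset": 5, "type": "CN_WORD", "position": 2},
  {"token": "中国", "start_offset": 2, "end_offset": 4, "type": "CN_WORD", "position": 3},
  {"token": "国人", "start_offset": 3, "end_offset": 5, "type": "CN_WORD", "position": 4}
]}
""")


def token_spans(response, text):
    """Pair each token with the slice of the input that its offsets point to.

    For characters in the Basic Multilingual Plane, like these, the offsets
    line up with Python string indices.
    """
    return [(t["token"], text[t["start_offset"]:t["end_offset"]]) for t in response["tokens"]]


def overlapping_tokens(response):
    """Return pairs of tokens whose [start, end) character ranges overlap."""
    toks = response["tokens"]
    pairs = []
    for i, a in enumerate(toks):
        for b in toks[i + 1:]:
            if a["start_offset"] < b["end_offset"] and b["start_offset"] < a["end_offset"]:
                pairs.append((a["token"], b["token"]))
    return pairs
```

Here every token matches its slice of 我是中国人, and `overlapping_tokens(RESPONSE)` returns `[("中国人", "中国"), ("中国人", "国人"), ("中国", "国人")]`, which is exactly the fine-grained overlap that ik_max_word produces.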

This article is from cnblogs. Author: BaldHead. If you repost it, please credit the original link: https://www.cnblogs.com/strict/p/12642242.html

Zhejiang Public Security Network Filing No. 33010602011771