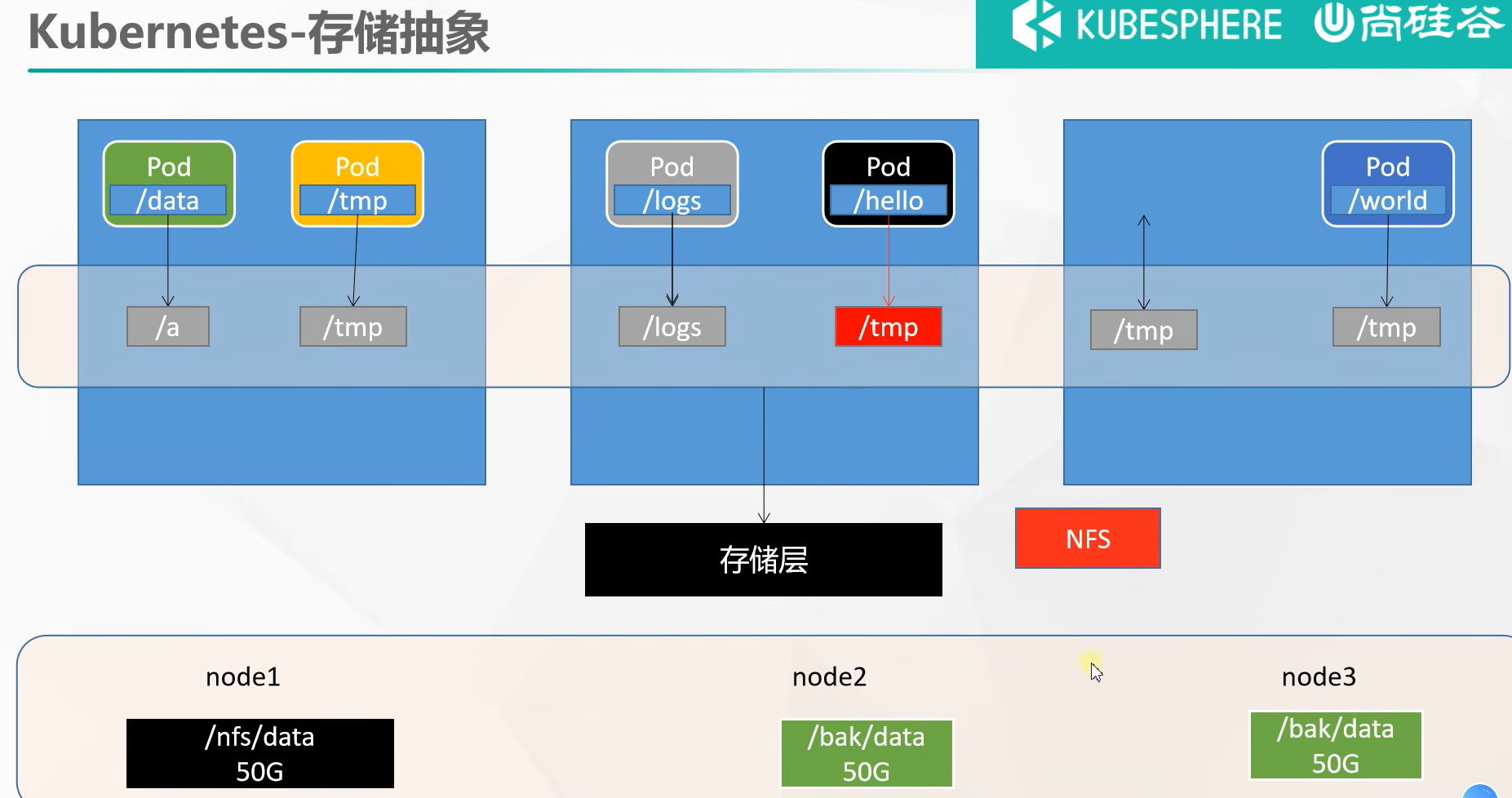

Kubernetes: Storage Abstraction

Architecture diagram:

File systems:

- GlusterFS

- NFS

- CephFS

Here we use NFS.

Workflow:

- Pods mount a shared directory that is the same on every node

- After a Pod restarts, it mounts the same directory again, so its data survives

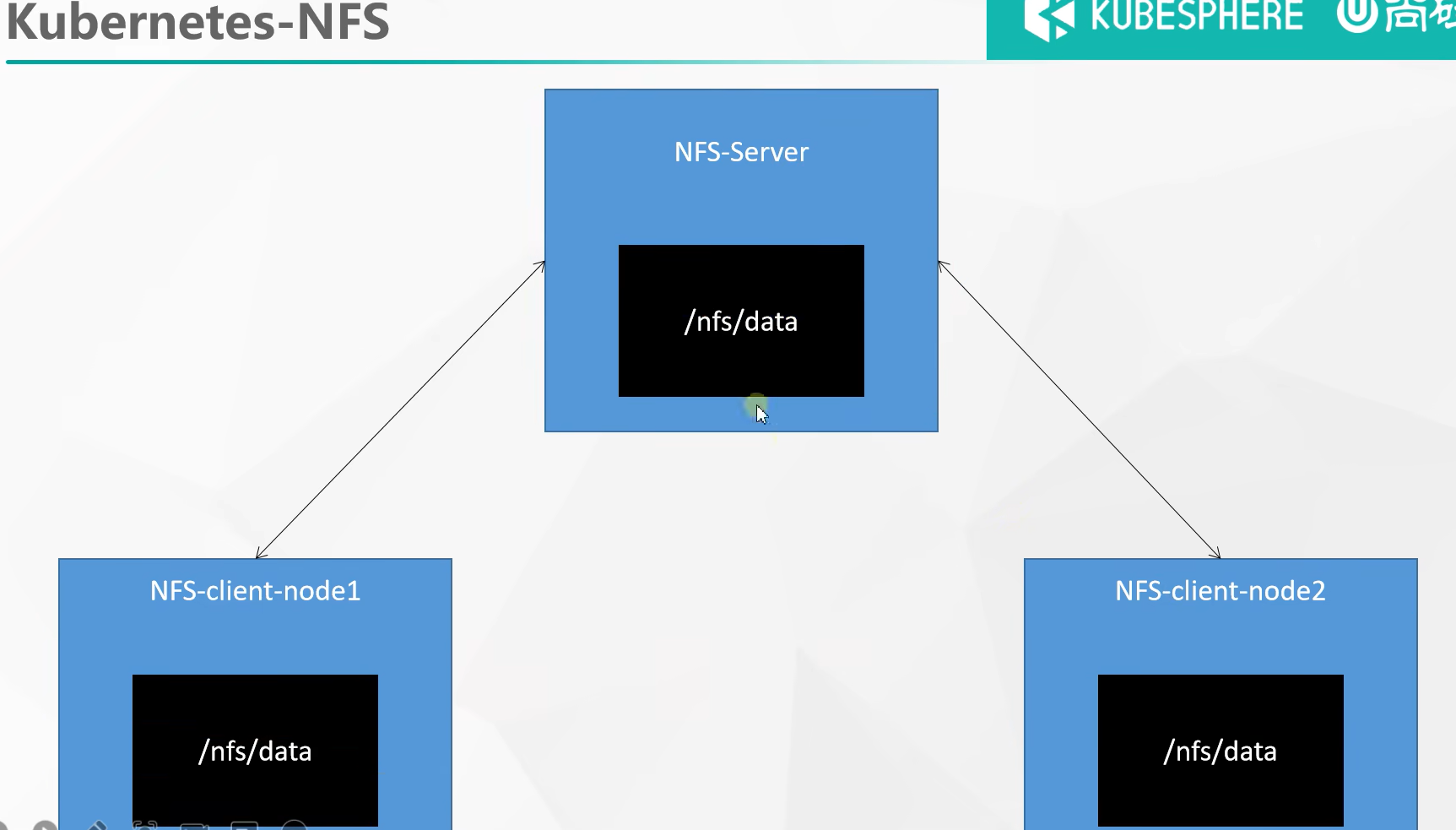

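File system architecture:

The master node shares its directory with node1 and node2. When a file in that directory is modified on the master node, node1 and node2 see the change.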

Installation:

Install NFS

```bash
# Install on all machines
yum install -y nfs-utils
```

Master node

```bash
# On the NFS master node: export the directory
echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

mkdir -p /nfs/data
systemctl enable rpcbind --now
systemctl enable nfs-server --now
# Apply the configuration
exportfs -r
# Confirm that /nfs/data is exported
exportfs
```
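To see what the single line written to /etc/exports actually says, the sketch below splits it into path, client and options and annotates each option. It assumes the simple one-client `path client(opts)` form used here. The real exports(5) syntax also allows multiple clients and has defaults that this sketch ignores. `parse_export_line` and `OPTION_NOTES` are hypothetical helper names.

```python
# Minimal sketch: split one /etc/exports line into path, client and options.
# Assumes the simple "path client(opt,opt,...)" form used above; the real
# exports(5) syntax (multiple clients, quoting, defaults) is richer.
import re

# Meaning of each option in the example line (summarised from exports(5)).
OPTION_NOTES = {
    "insecure": "accept requests from client ports above 1024",
    "rw": "allow both reads and writes",
    "sync": "reply only after changes are committed to stable storage",
    "no_root_squash": "do not map remote root to the anonymous user",
}


def parse_export_line(line: str) -> dict:
    """Return {'path', 'client', 'options'} for a single-client export line."""
    path, spec = line.split(maxsplit=1)
    match = re.fullmatch(r"(\S+?)\(([^)]*)\)", spec.strip())
    if match is None:
        raise ValueError(f"unsupported export spec: {spec!r}")
    client, opts = match.groups()
    return {"path": path, "client": client, "options": opts.split(",")}


if __name__ == "__main__":
    entry = parse_export_line("/nfs/data/ *(insecure,rw,sync,no_root_squash)")
    print(entry["path"], entry["client"])
    for opt in entry["options"]:
        print(f"  {opt}: {OPTION_NOTES.get(opt, '?')}")
```

The `*` client means any host may mount the export. That is convenient for a lab cluster, but you may want to restrict it to the cluster's subnet.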

Worker nodes

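```bash
# Confirm the master node's exported directory (172.31.0.4 is the master node's private IP)
showmount -e 172.31.0.4

# Mount the NFS server's shared directory onto the local path /nfs/data
mkdir -p /nfs/data
# <master IP>:<exported directory> <local directory on this worker>
mount -t nfs 172.31.0.4:/nfs/data /nfs/data
# Verify: write a test file here, then check it from the other nodes
echo "hello nfs server" > /nfs/data/test.txt
```

From now on, the /nfs/data/ directory is shared between the master and worker nodes.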
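One way to confirm that /nfs/data on a worker really is the NFS share, and not just an empty local directory, is to look it up in the Linux mount table. The sketch below parses text in the /proc/mounts format. The sample table and the helper names `find_mount` and `is_nfs_mount` are hypothetical. On a real node you would read /proc/mounts instead.

```python
# Minimal sketch: check whether a directory is NFS-mounted by reading the
# mount table (the /proc/mounts format on Linux). SAMPLE is hypothetical;
# on a real worker node you would pass open("/proc/mounts").read() instead.
from typing import Optional


def find_mount(mount_table: str, mount_point: str) -> Optional[dict]:
    """Return source/fstype for mount_point, or None if it is not mounted."""
    found = None
    for line in mount_table.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        source, target, fstype = fields[0], fields[1], fields[2]
        if target == mount_point:
            # A later mount on the same point hides earlier ones: last wins.
            found = {"source": source, "fstype": fstype}
    return found


def is_nfs_mount(mount_table: str, mount_point: str) -> bool:
    entry = find_mount(mount_table, mount_point)
    # `mount -t nfs` may negotiate NFSv4, which is listed as "nfs4".
    return entry is not None and entry["fstype"] in ("nfs", "nfs4")


SAMPLE = """\
/dev/vda1 / ext4 rw,relatime 0 0
172.31.0.4:/nfs/data /nfs/data nfs4 rw,relatime,vers=4.2 0 0
"""

if __name__ == "__main__":
    print(find_mount(SAMPLE, "/nfs/data"))
    print(is_nfs_mount(SAMPLE, "/nfs/data"))  # True for the sample above
```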

Mounting data the native way

- First, create the mount directory /nfs/data/nginx-pv on the master node (`mkdir -p /nfs/data/nginx-pv`)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-pv-demo
  name: nginx-pv-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-pv-demo
  template:
    metadata:
      labels:
        app: nginx-pv-demo
    spec:
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html
          nfs:
            server: 172.31.0.4
            path: /nfs/data/nginx-pv
```

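Notes:

```yaml
spec:
  containers:
    - image: nginx                           # image to use
      name: nginx
      volumeMounts:                          # mount points
        - name: html                         # volume name
          mountPath: /usr/share/nginx/html   # mount directory inside the container
  volumes:                                   # volumes
    - name: html                             # volume name (matches volumeMounts.name)
      nfs:                                   # file system type
        server: 172.31.0.4                   # NFS server IP (the master node)
        path: /nfs/data/nginx-pv             # directory exported by the NFS server
```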

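Note: as with Docker, once the mount succeeds, the mounted directory hides whatever the image had at that path. The container's /usr/share/nginx/html shows the contents of /nfs/data/nginx-pv (empty at first), not nginx's default files.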

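Problems with the native mount:

- The mount directory must be created manually

- After the Pod is deleted, the data in the mounted directory is still kept and has to be cleaned up by hand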

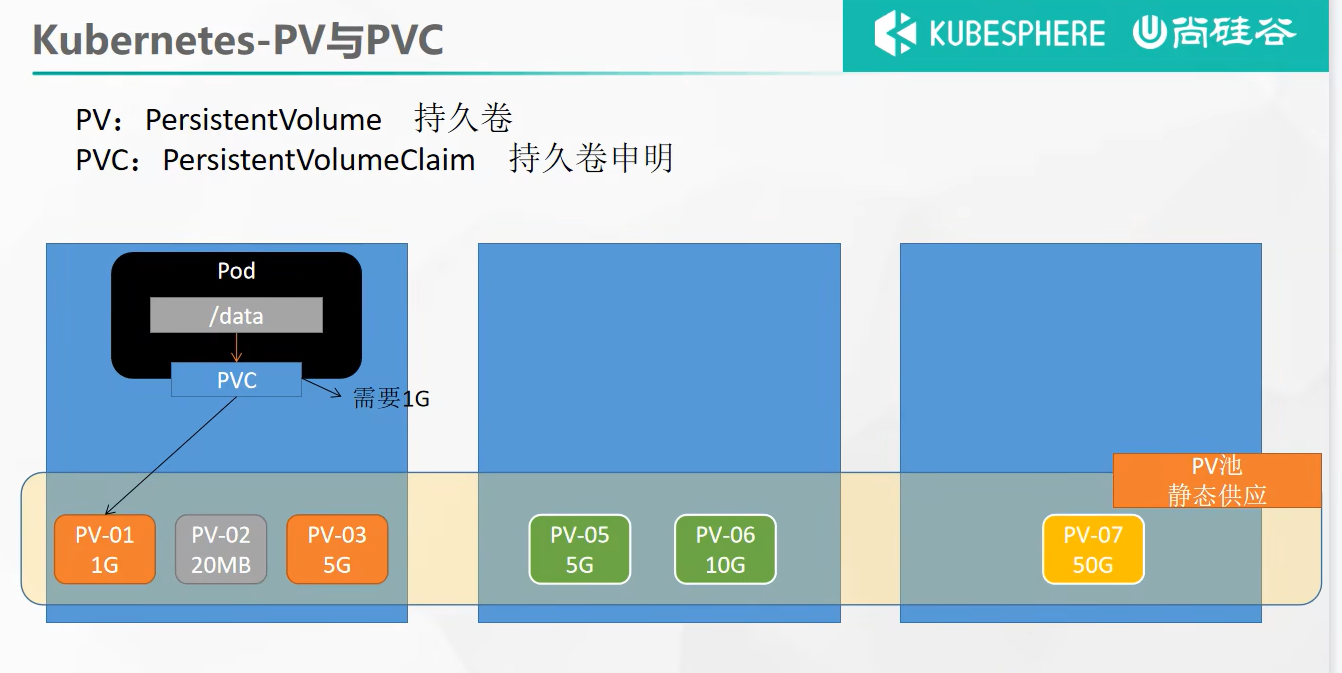

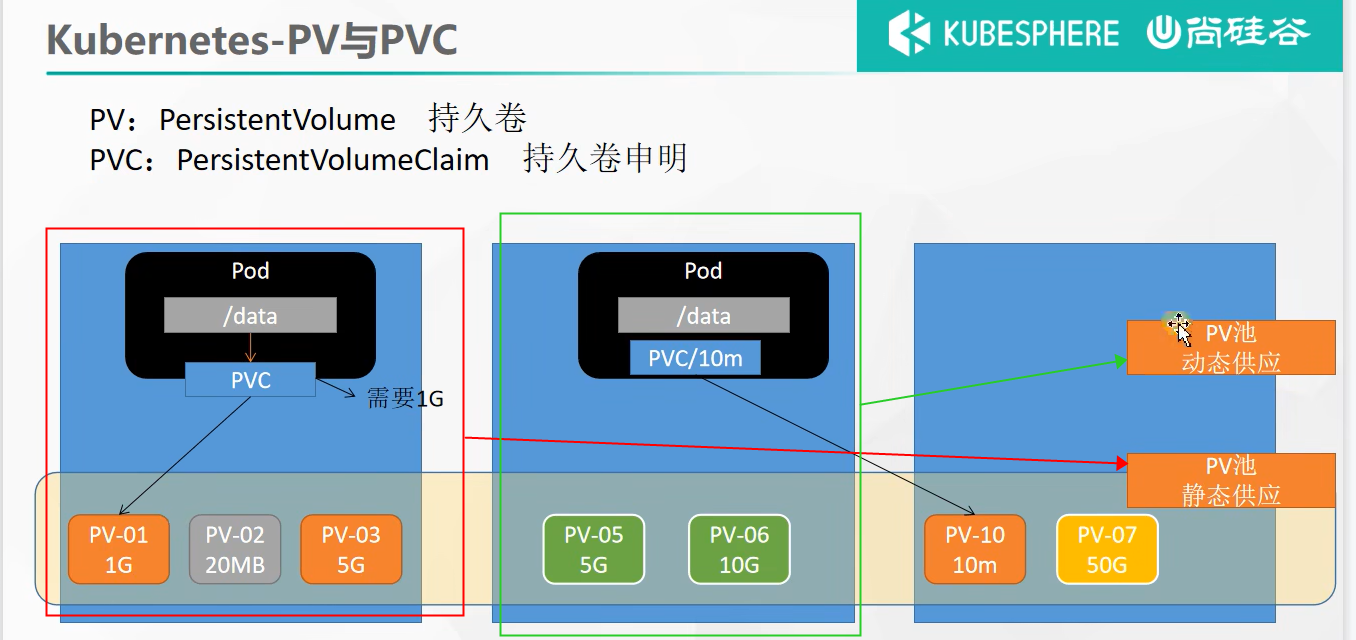

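PV & PVC

PV: Persistent Volume. It stores the data an application needs to persist at a specified location.

PVC: Persistent Volume Claim. It declares the specification of the persistent volume the application needs.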

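Test (the following demonstrates static provisioning):

Create pv.yaml

Change `server` to your master node's IP. Create the directories /nfs/data/01, /nfs/data/02 and /nfs/data/03 on the NFS server first, because NFS volumes do not create their paths.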

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01-10m
spec:
  capacity:
    storage: 10M
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/01
    server: 172.31.0.4
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02-1gi
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/02
    server: 172.31.0.4
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv03-3gi
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/03
    server: 172.31.0.4
```
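The three PVs mix decimal and binary size suffixes. In Kubernetes quantities, `M` means 10^6 and `Mi` means 2^20, so `10M` is 10,000,000 bytes while a `200Mi` request is 209,715,200 bytes. The worked conversion below is a simplified sketch that handles only the suffixes used in this article. It is not the full Kubernetes quantity parser, and `to_bytes` is a hypothetical name.

```python
# Worked arithmetic for Kubernetes quantity suffixes: decimal (k, M, G)
# versus binary (Ki, Mi, Gi). Only the suffixes used in this article are
# handled; this is a simplified sketch, not a full quantity parser.
import re

SUFFIXES = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9,        # decimal (SI) suffixes
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30,    # binary (IEC) suffixes
}


def to_bytes(quantity: str) -> int:
    """Convert e.g. '10M', '200Mi', '1Gi' to a byte count."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|k|M|G)?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * SUFFIXES[suffix or ""]


if __name__ == "__main__":
    for q in ["10M", "200Mi", "1Gi", "3Gi"]:
        print(f"{q:>6} = {to_bytes(q):>13,} bytes")
```

This shows that pv01-10m is too small for a 200Mi claim, while pv02-1gi and pv03-3gi are both large enough.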

View:

```bash
kubectl get persistentvolume
# Short form
kubectl get pv
```

Create the PVC

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs
```
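Which of the three PVs does `nginx-pvc` get? The sketch below is a simplified model of static binding. A candidate PV must be unbound, have the same `storageClassName`, offer every requested access mode and have enough capacity. Among the candidates, the model picks the smallest. The "smallest fitting PV" rule reflects my understanding of the in-tree PV controller. The real controller also checks selectors, volumeMode and node affinity, which are left out here. `PV` and `find_best_match` are hypothetical names.

```python
# Simplified model of static PV/PVC binding: among PVs that are unbound,
# share the claim's storageClassName and offer every requested access mode,
# pick the smallest one whose capacity covers the request. The real PV
# controller checks more (selectors, volumeMode, node affinity, ...).
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PV:
    name: str
    capacity: int          # bytes
    storage_class: str
    access_modes: frozenset
    bound: bool = False


def find_best_match(pvs: List[PV], request: int, storage_class: str,
                    access_modes: set) -> Optional[PV]:
    candidates = [
        pv for pv in pvs
        if not pv.bound
        and pv.storage_class == storage_class
        and access_modes <= pv.access_modes
        and pv.capacity >= request
    ]
    return min(candidates, key=lambda pv: pv.capacity, default=None)


RWX = frozenset({"ReadWriteMany"})
PVS = [
    PV("pv01-10m", 10 * 10**6, "nfs", RWX),  # 10M = 10,000,000 bytes
    PV("pv02-1gi", 1 * 2**30, "nfs", RWX),   # 1Gi
    PV("pv03-3gi", 3 * 2**30, "nfs", RWX),   # 3Gi
]

if __name__ == "__main__":
    # nginx-pvc: 200Mi, storageClassName "nfs", ReadWriteMany
    match = find_best_match(PVS, 200 * 2**20, "nfs", {"ReadWriteMany"})
    print(match.name if match else "Pending (no matching PV)")
```

In this model the claim lands on pv02-1gi. You can compare that with the `CLAIM` column of `kubectl get pv` on your own cluster.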

Create a Pod (via a Deployment) bound to the PVC

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-pvc
  name: nginx-deploy-pvc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-pvc
  template:
    metadata:
      labels:
        app: nginx-deploy-pvc
    spec:
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html
          persistentVolumeClaim:
            claimName: nginx-pvc
```

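Verify:

- Modify the content of the bound PV's directory on the master node (`kubectl get pv` shows which PV the claim is bound to), then check it inside the container of a Pod running on a worker node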

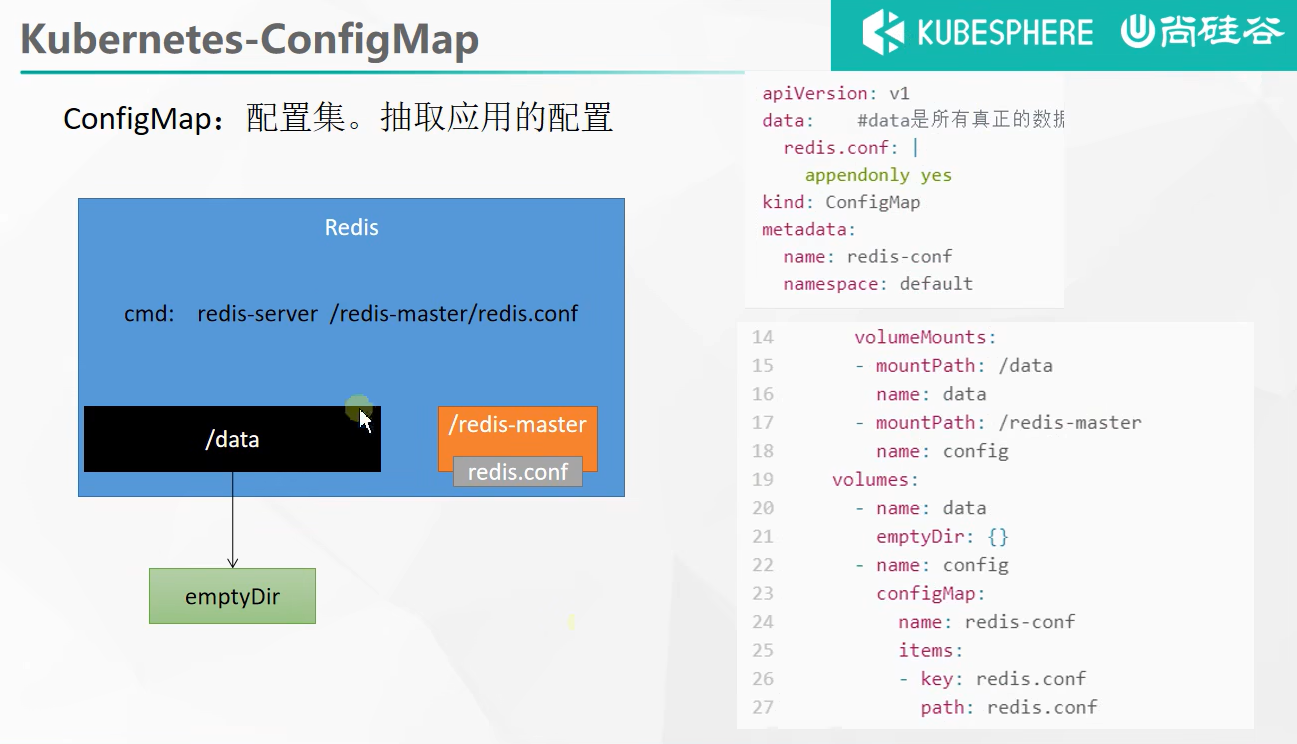

ConfigMap

Extracts application configuration, and can be updated automatically.

How to create it:

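```bash
# Create the config (redis.conf in the current directory); it is saved in the cluster's etcd
kubectl create cm redis-conf --from-file=redis.conf

# View
kubectl get cm

# Edit. ConfigMaps support hot update: a change is synced into the Pods' containers
# after a while (for volume mounts; not for subPath mounts or environment variables)
kubectl edit cm redis-conf
```

View the ConfigMap's contents with `kubectl get cm redis-conf -o yaml`. The output below keeps only the relevant fields: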

```yaml
apiVersion: v1
data:    # data holds the actual content; key: the file name by default, value: the file content
  redis.conf: |
    appendonly yes
kind: ConfigMap
metadata:
  name: redis-conf
  namespace: default
```
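The shape of `data` above comes from `--from-file`: the key defaults to the file's base name and the value is the file's full text. The sketch below rebuilds only that resulting structure in Python. It is not how kubectl is implemented: kubectl also validates keys, stores non-UTF-8 files under `binaryData`, and supports `--from-file=<key>=<path>`. `configmap_from_files` is a hypothetical name.

```python
# Sketch of the structure `kubectl create cm <name> --from-file=<file>`
# produces: key = the file's basename, value = its full text. This mimics
# the result only; kubectl itself does more (key validation, binaryData
# for non-UTF-8 files, --from-file=key=path, ...).
import os
import tempfile


def configmap_from_files(name: str, paths: list, namespace: str = "default") -> dict:
    data = {}
    for path in paths:
        with open(path, encoding="utf-8") as f:
            data[os.path.basename(path)] = f.read()
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": data,
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        conf = os.path.join(tmp, "redis.conf")
        with open(conf, "w", encoding="utf-8") as f:
            f.write("appendonly yes\n")
        cm = configmap_from_files("redis-conf", [conf])
        print(cm["data"])  # {'redis.conf': 'appendonly yes\n'}
```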

Create a test Pod

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
    - name: redis
      image: redis
      command:
        - redis-server
        - "/redis-master/redis.conf"  # path inside the redis container
      ports:
        - containerPort: 6379
      volumeMounts:
        - mountPath: /data
          name: data
        - mountPath: /redis-master
          name: config
  volumes:
    - name: data
      emptyDir: {}
    - name: config
      configMap:
        name: redis-conf
        items:
          - key: redis.conf
            path: redis.conf
```

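Reference relationships

- As the ConfigMap above shows, the entries under `data` are stored as key: value pairs. The key (`redis.conf`) is the Redis config file name we defined, and the value is the file content

- `metadata`:

  - `name` names the ConfigMap (config set) to be referenced; existing names can be listed with `kubectl get cm`

- `volumeMounts` defines two mount points:

  - the container's data volume `data` is mounted at /data

  - the configuration is mounted at /redis-master

- `volumes`:

  - `name: data` declares the data volume; `emptyDir: {}` means Kubernetes creates a temporary directory for the mount (it is deleted together with the Pod)

  - `name: config` declares the config volume referenced above:

    - `name` specifies which ConfigMap to use

    - `items` selects entries from the ConfigMap:

      - `key` is the key in the ConfigMap (the Redis config file name is the key)

      - `path` means the content is written to redis.conf under /redis-master in the container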
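The `items` mapping above can be read as a small function from ConfigMap data to files. Each selected key is written to `<mountPath>/<path>`. Keys not listed are skipped. Without `items`, every key becomes a file named after the key. The sketch below models only that mapping and ignores file modes, optional keys and the symlink layout the kubelet actually uses. `project_configmap` is a hypothetical name.

```python
# Sketch of how a configMap volume with `items` turns ConfigMap data into
# files: each selected key is written to <mountPath>/<path>. Keys not listed
# in `items` are left out; without `items`, every key becomes a file named
# after the key. (Simplified: ignores mode bits, optional keys, symlinks.)
import posixpath


def project_configmap(data: dict, mount_path: str, items=None) -> dict:
    """Return {absolute file path in container: file content}."""
    if items is None:
        items = [{"key": k, "path": k} for k in data]
    files = {}
    for item in items:
        if item["key"] not in data:
            raise KeyError(f"key {item['key']!r} not found in ConfigMap")
        files[posixpath.join(mount_path, item["path"])] = data[item["key"]]
    return files


if __name__ == "__main__":
    data = {"redis.conf": "appendonly yes\n"}
    files = project_configmap(data, "/redis-master",
                              [{"key": "redis.conf", "path": "redis.conf"}])
    print(files)  # {'/redis-master/redis.conf': 'appendonly yes\n'}
```

This is why the Pod's `command` can point at "/redis-master/redis.conf": the mount path and the item's `path` together produce exactly that file.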

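Check whether the configuration has been updated (for example, after adding `maxmemory` and `maxmemory-policy` settings with `kubectl edit cm redis-conf`):

```bash
kubectl exec -it redis -- redis-cli

127.0.0.1:6379> CONFIG GET maxmemory
127.0.0.1:6379> CONFIG GET maxmemory-policy
```

Check whether the mounted file's content has been updated, for example with `kubectl exec redis -- cat /redis-master/redis.conf`.

After the ConfigMap is modified, the config file inside the Pod changes too (after a short sync delay).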

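PS:

The config values did not change, because the Pod must be restarted to pick up the updated values from the associated ConfigMap.

Reason: the middleware running in our Pod (Redis) has no hot-reload capability of its own. It reads redis.conf only at startup.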
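The PS comes down to who re-reads the file. The ConfigMap volume updates the file on disk, but a process only sees new values if it reads that file again. The hypothetical classes below contrast a startup-only reader, which behaves like Redis in this example, with one that re-reads whenever the file content changes. They are illustrations only and not part of Redis or Kubernetes. The `maxmemory 2mb` line is a made-up update.

```python
# Hypothetical illustration (not part of Redis or Kubernetes): the ConfigMap
# volume updates the file on disk, but a process only sees new values if it
# re-reads that file. StartupOnlyConfig reads once, like Redis does with
# redis.conf; ReloadingConfig re-reads whenever the file content changes.
import hashlib
import os
import tempfile


def read_settings(path: str) -> dict:
    """Parse simple 'key value' lines, as used in redis.conf."""
    settings = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line and not line.startswith("#"):
                key, _, value = line.partition(" ")
                settings[key] = value.strip()
    return settings


class StartupOnlyConfig:
    def __init__(self, path: str):
        self.settings = read_settings(path)  # read once at startup, never again

    def get(self, key: str):
        return self.settings.get(key)


class ReloadingConfig:
    def __init__(self, path: str):
        self.path = path
        self._digest = None
        self.settings = {}
        self.refresh()

    def refresh(self) -> bool:
        """Re-read the file if its content changed; return True if reloaded."""
        with open(self.path, "rb") as f:
            digest = hashlib.sha256(f.read()).hexdigest()
        if digest == self._digest:
            return False
        self._digest = digest
        self.settings = read_settings(self.path)
        return True

    def get(self, key: str):
        self.refresh()  # check for an updated file on every lookup
        return self.settings.get(key)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        conf = os.path.join(tmp, "redis.conf")
        with open(conf, "w", encoding="utf-8") as f:
            f.write("appendonly yes\n")
        static, live = StartupOnlyConfig(conf), ReloadingConfig(conf)
        # Simulate a ConfigMap update (the maxmemory value is made up).
        with open(conf, "a", encoding="utf-8") as f:
            f.write("maxmemory 2mb\n")
        print(static.get("maxmemory"), live.get("maxmemory"))  # None 2mb
```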

Summary

1. Use a PVC to mount data directories

2. Use a ConfigMap to mount configuration files

Dynamic provisioning

Configure a default StorageClass for dynamic provisioning

```yaml
## Create a StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to back up (archive) the PV's contents when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #    limits:
          #      cpu: 10m
          #    requests:
          #      cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 172.31.0.4  ## your own NFS server address
            - name: NFS_PATH
              value: /nfs/data  ## directory exported by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.31.0.4
            path: /nfs/data
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

Note: change both IPs (the `NFS_SERVER` value and `volumes.nfs.server`) to your own NFS server's address.

```bash
# Confirm the configuration took effect
kubectl get sc
# sc is short for storageclass
```

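Verify:

```yaml
# Create a PVC (no PV is created by hand)
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-200m
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200M
  # no storageClassName: the default StorageClass (nfs-storage) is used
```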
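A claim that omits `storageClassName` is assigned the StorageClass annotated with `storageclass.kubernetes.io/is-default-class: "true"` (the DefaultStorageClass admission plugin does this). An explicit value, even the empty string, is kept as is. The sketch below models just that decision. It assumes at most one default class and simply takes the first one, whereas the real plugin has its own rules when several classes are marked default. `default_class` and `resolve_class` are hypothetical names.

```python
# Simplified model of how a PVC without storageClassName gets a class: the
# StorageClass annotated is-default-class: "true" is filled in. Sketch only;
# it takes the first default and ignores the real plugin's edge cases.
from typing import List, Optional

DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_class(storage_classes: List[dict]) -> Optional[str]:
    defaults = [
        sc["metadata"]["name"]
        for sc in storage_classes
        if sc["metadata"].get("annotations", {}).get(DEFAULT_ANNOTATION) == "true"
    ]
    return defaults[0] if defaults else None


def resolve_class(pvc: dict, storage_classes: List[dict]) -> Optional[str]:
    explicit = pvc["spec"].get("storageClassName")
    if explicit is not None:
        return explicit  # an explicit class (even "", meaning "no class") is kept
    return default_class(storage_classes)


if __name__ == "__main__":
    classes = [{"metadata": {"name": "nfs-storage",
                             "annotations": {DEFAULT_ANNOTATION: "true"}}}]
    pvc = {"metadata": {"name": "pv-200m"},
           "spec": {"accessModes": ["ReadWriteMany"],
                    "resources": {"requests": {"storage": "200M"}}}}
    print(resolve_class(pvc, classes))  # nfs-storage
```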

View:

```bash
kubectl get pvc

# View the PVs: the bound PV was never created by hand; it was provisioned dynamically
kubectl get pv
```
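Where does the dynamically provisioned data end up on the NFS share? As I understand nfs-subdir-external-provisioner's defaults, each PV gets a subdirectory named `${namespace}-${pvcName}-${pvName}` under `NFS_PATH`. With `archiveOnDelete: "true"`, that directory is renamed to `archived-<dirname>` when the PV is deleted, instead of being removed. The sketch below encodes that assumption. The PV name `pvc-1234` is made up, since real names are generated as `pvc-<uid>`, and the helper names are hypothetical.

```python
# Sketch of where nfs-subdir-external-provisioner (as I understand its
# defaults) stores data: <NFS_PATH>/<namespace>-<pvcName>-<pvName>, renamed
# to archived-<dirname> on PV deletion when archiveOnDelete is "true".
import posixpath
from typing import Optional

NFS_PATH = "/nfs/data"  # NFS_PATH env value of the provisioner Deployment


def provisioned_dir(namespace: str, pvc_name: str, pv_name: str) -> str:
    """Subdirectory created on the share for one dynamically provisioned PV."""
    return posixpath.join(NFS_PATH, "-".join([namespace, pvc_name, pv_name]))


def path_after_delete(path: str, archive_on_delete: bool) -> Optional[str]:
    """Where the data ends up after the PV is deleted (None means removed)."""
    if not archive_on_delete:
        return None
    parent, name = posixpath.split(path)
    return posixpath.join(parent, "archived-" + name)


if __name__ == "__main__":
    pv_dir = provisioned_dir("default", "pv-200m", "pvc-1234")  # made-up PV name
    print(pv_dir)                           # /nfs/data/default-pv-200m-pvc-1234
    print(path_after_delete(pv_dir, True))  # /nfs/data/archived-default-pv-200m-pvc-1234
```

Running `ls /nfs/data` on the master node after creating the claim is a quick way to check whether your provisioner version follows this naming.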

浙公网安备 33010602011771号
