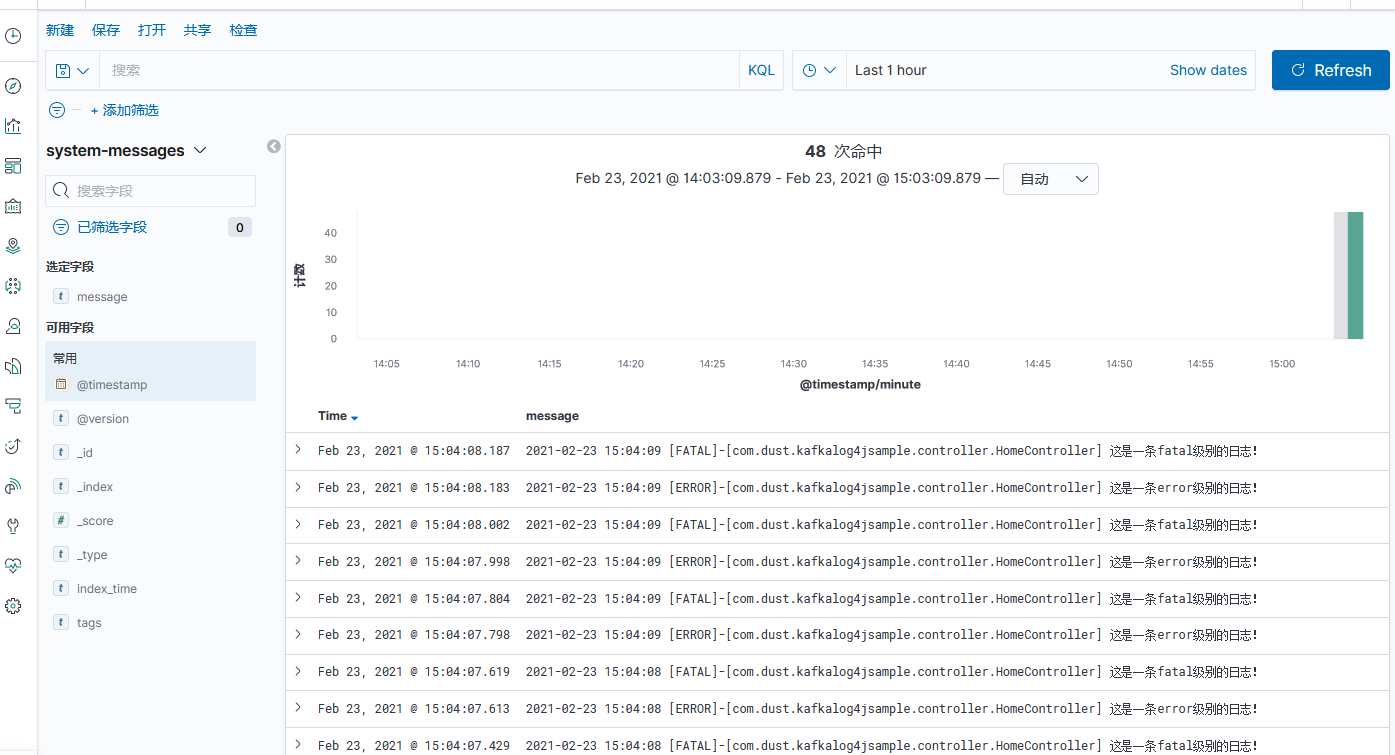

ELK + Kafka + log4j + Spring Boot: hands-on log monitoring

ELK installation: see the separate ELK installation guide.

Install Kafka

```shell
wget https://archive.apache.org/dist/kafka/2.2.0/kafka_2.11-2.2.0.tgz
tar -zxvf kafka_2.11-2.2.0.tgz
mv kafka_2.11-2.2.0 /usr/local/kafka
```

Edit the broker configuration with vi /usr/local/kafka/config/server.properties:

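```properties
# Address the broker listens on; replace the IP with your own server's address
listeners=PLAINTEXT://172.17.173.56:9092
# Directory where Kafka stores its data (log segments)
log.dirs=/usr/local/kafka/kafka-data
```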

Add the environment variables with vi /etc/profile.d/kafka.sh:

```shell
KAFKA_HOME=/usr/local/kafka
export PATH=$KAFKA_HOME/bin:$PATH
```

Press Esc, type :wq to save and quit, then load the variables with source /etc/profile.d/kafka.sh.

Start ZooKeeper first, then the Kafka broker (both run as daemons):

```shell
zookeeper-server-start.sh -daemon /usr/local/kafka/config/zookeeper.properties
kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties
```
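Because `listeners` binds the broker to 172.17.173.56, it is worth confirming that port 9092 is reachable from the machine that runs the Spring Boot application before going further. The following is a minimal Java sketch; the class name `BrokerPortCheck` is hypothetical, and it only tests that a TCP connection can be opened, not that the Kafka protocol works.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Minimal TCP reachability check for the listener in server.properties
// (listeners=PLAINTEXT://172.17.173.56:9092). It only proves that something
// accepts TCP connections on host:port; it does not speak the Kafka protocol.
final class BrokerPortCheck {

    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, unknown host, ...
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "172.17.173.56";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9092;
        boolean ok = isReachable(host, port, 3000);
        System.out.println(host + ":" + port + (ok ? " is reachable" : " is NOT reachable"));
    }
}
```

With Java 11 or later it can be run directly from source, for example `java BrokerPortCheck.java 172.17.173.56 9092`.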

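Add a Logstash pipeline configuration file under /etc/logstash/conf.d/ (the start command below loads /etc/logstash/conf.d/*.conf):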

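```conf
input {
  ## Subscribe to every Kafka topic whose name starts with system-log-
  kafka {
    ## Topic naming convention: system-log-<service name>
    topics_pattern => "system-log-.*"
    bootstrap_servers => "172.17.173.56:9092"
    ## The PatternLayout in log4j.properties sends plain text, not JSON
    codec => plain
    consumer_threads => 1 ## number of parallel consumer threads; increase it for more parallelism
    ## Adds Kafka metadata (topic, partition, offset, ...) under [@metadata][kafka]
    decorate_events => true
    # auto_offset_reset => "latest"
    group_id => "system-log-group"
  }
}

## Filter the data after it is received
filter {
  ## Time zone conversion: @timestamp is in UTC, so store the local-time date (yyyy.MM.dd) in index_time
  ruby {
    code => "event.set('index_time',event.timestamp.time.localtime.strftime('%Y.%m.%d'))"
  }

  ## Only parse events that came from a system-log-* topic
  if "system-log" in [@metadata][kafka][topic] {
    grok {
      ## Expression: nine [..] fields, then "## " and either '' or a quoted throwable;
      ## it must match the layout the application actually sends
      match => ["message", "\[%{NOTSPACE:currentDateTime}\] \[%{NOTSPACE:level}\] \[%{NOTSPACE:thread-id}\] \[%{NOTSPACE:class}\] \[%{NOTSPACE:hostName}\] \[%{NOTSPACE:ip}\] \[%{NOTSPACE:applicationName}\] \[%{NOTSPACE:location}\] \[%{NOTSPACE:messageInfo}\] ## (\'\'|%{QUOTEDSTRING:throwable})"]
    }
  }
}

output {
  elasticsearch {
    hosts => ["127.0.0.1:9200"]
    index => "system-messages"
  }
}
```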
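To check the input and filter settings offline, the sketch below reproduces three of their steps in plain Java: the `topics_pattern` subscription, the `index_time` value computed by the ruby filter, and the grok expression. It is an approximation under stated assumptions, not Logstash itself: `NOTSPACE` is treated as `\S+`, `QUOTEDSTRING` is simplified to a single- or double-quoted string without escape handling, and the class name `PipelineSketch`, the time zone and the sample lines are hypothetical.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Plain-Java approximation of three pipeline steps (not Logstash itself).
final class PipelineSketch {

    // topics_pattern => "system-log-.*"; Kafka matches it against the whole topic name.
    private static final Pattern TOPICS = Pattern.compile("system-log-.*");

    // ruby filter: strftime('%Y.%m.%d') on the local-time view of @timestamp.
    private static final DateTimeFormatter INDEX_DATE = DateTimeFormatter.ofPattern("yyyy.MM.dd");

    // Field names from the grok expression, in order.
    private static final String[] FIELDS = {
        "currentDateTime", "level", "thread-id", "class", "hostName",
        "ip", "applicationName", "location", "messageInfo"
    };

    // Nine "[NOTSPACE]" groups joined by spaces, then " ## " and '' or a quoted throwable.
    // Assumption: NOTSPACE ~ \S+, QUOTEDSTRING ~ '...' or "..." without escapes.
    private static final Pattern LINE = Pattern.compile(
        String.join(" ", Collections.nCopies(FIELDS.length, "\\[(\\S+)\\]"))
            + " ## (''|'[^']*'|\"[^\"]*\")");

    static boolean subscribed(String topic) {
        return TOPICS.matcher(topic).matches();
    }

    static String indexTime(Instant timestamp, ZoneId zone) {
        // @timestamp is UTC; convert to the given zone before taking the date.
        return INDEX_DATE.withZone(zone).format(timestamp);
    }

    static Map<String, String> parse(String message) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher m = LINE.matcher(message);
        if (!m.find()) {       // grok is unanchored, so search instead of matching the whole line
            return fields;     // empty: Logstash would tag the event _grokparsefailure
        }
        for (int i = 0; i < FIELDS.length; i++) {
            fields.put(FIELDS[i], m.group(i + 1));
        }
        String tail = m.group(FIELDS.length + 1);
        if (!"''".equals(tail)) { // the '' branch sets no throwable field
            fields.put("throwable", tail);
        }
        return fields;
    }

    public static void main(String[] args) {
        System.out.println(subscribed("system-log-test"));
        System.out.println(indexTime(Instant.parse("2019-05-19T17:30:00Z"), ZoneId.of("Asia/Shanghai")));
        // Hypothetical line in the nine-bracket format the grok expression expects.
        System.out.println(parse("[2019-05-20T10:15:30] [ERROR] [main] [HomeController] [host1] "
            + "[10.0.0.1] [demo] [HomeController.java:25] [boom] ## ''"));
        // A line in the log4j.properties layout used in this article.
        System.out.println(parse("2019-05-20 10:15:30 [ERROR]-[com.dust.kafkalog4jsample.controller.HomeController] boom"));
    }
}
```

The last call prints `{}`: a line in the log4j.properties layout used in this article has none of the nine bracketed fields, so in Logstash it would be tagged `_grokparsefailure`. The grok expression and the application's layout have to agree.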

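Start Logstash in the background (quoting the glob lets Logstash expand it instead of the shell):

```shell
/usr/share/logstash/bin/logstash -f '/etc/logstash/conf.d/*.conf' &
```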

In the Spring Boot project, add to pom.xml:

```xml
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-log4j-appender</artifactId>
    <version>1.0.1</version>
</dependency>
```

Add a log4j.properties file to the classpath (for example under src/main/resources):

```properties
log4j.rootLogger=info,stdout,kafka0

# stdout: console appender
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%-d{yyyy-MM-dd HH\:mm\:ss} [%p]-[%c] %m%n

# kafka0: Kafka appender
# Define an appender named kafka0
log4j.appender.kafka0=org.apache.kafka.log4jappender.KafkaLog4jAppender
# Kafka topic the logs are written to (matched by topics_pattern system-log-.* in Logstash)
log4j.appender.kafka0.topic=system-log-test
# Kafka broker address
log4j.appender.kafka0.brokerList=172.17.173.56:9092
# Compression type; defaults to none
log4j.appender.kafka0.compressionType=none
# Whether the producer sends synchronously; the default, false, sends asynchronously
log4j.appender.kafka0.syncSend=true
# Only events at this level or above are sent to Kafka
log4j.appender.kafka0.Threshold=ERROR
log4j.appender.kafka0.layout=org.apache.log4j.PatternLayout
log4j.appender.kafka0.layout.ConversionPattern=%-d{yyyy-MM-dd HH\:mm\:ss} [%p]-[%c] %m%n
```
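Each Kafka record produced by `kafka0` is simply the text rendered by its `ConversionPattern`. The hypothetical sketch below (`Log4jLayoutSketch`) renders a line by hand in the same shape, without using log4j, and splits it again with a regular expression. Such a line has none of the nine bracketed fields that the grok expression in the Logstash configuration expects; if you keep this layout, a grok pattern along the lines of `%{TIMESTAMP_ISO8601:currentDateTime} \[%{LOGLEVEL:level}\]-\[%{JAVACLASS:class}\] %{GREEDYDATA:messageInfo}` would be needed instead (an untested assumption, not part of the original setup).

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hand-written rendering of the layout %-d{yyyy-MM-dd HH:mm:ss} [%p]-[%c] %m%n
// (illustration only; log4j's PatternLayout does the real work).
// The '-' flag only left-justifies within a minimum width, so without a width it changes nothing.
final class Log4jLayoutSketch {

    private static final DateTimeFormatter DATE = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // date, [level]-[logger], then the rest of the line as the message
    private static final Pattern LINE = Pattern.compile(
        "(\\d{4}-\\d{2}-\\d{2} \\d{2}:\\d{2}:\\d{2}) \\[([A-Z]+)\\]-\\[([\\w.$]+)\\] (.*)");

    static String format(LocalDateTime time, String level, String logger, String message) {
        // log4j's %n is the platform line separator; "\n" keeps this sketch predictable.
        return DATE.format(time) + " [" + level + "]-[" + logger + "] " + message + "\n";
    }

    // Returns {date, level, logger, message}, or null if the line does not have this shape.
    static String[] parse(String line) {
        Matcher m = LINE.matcher(line.trim());
        if (!m.matches()) {
            return null;
        }
        return new String[] {m.group(1), m.group(2), m.group(3), m.group(4)};
    }

    public static void main(String[] args) {
        String line = format(LocalDateTime.of(2019, 5, 20, 10, 15, 30), "ERROR",
            "com.dust.kafkalog4jsample.controller.HomeController", "This is an error-level log message!");
        System.out.print(line);
        System.out.println(String.join(" | ", parse(line)));
    }
}
```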

Controller

```java
package com.dust.kafkalog4jsample.controller;

import org.apache.log4j.Level;
import org.apache.log4j.Logger;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class HomeController {

    // log4j 1.x logger; events propagate to the root logger's stdout and kafka0 appenders
    private static final Logger LOG = Logger.getLogger(HomeController.class);

    // GET /Logs writes one message at each of four levels
    @GetMapping("/Logs")
    public String logs() {
        // With the logger at INFO, the debug message below is discarded
        LOG.setLevel(Level.INFO);
        LOG.debug("This is a debug-level log message!");
        LOG.info("This is an info-level log message!");
        // kafka0 has an ERROR threshold, so only the next two messages reach Kafka
        LOG.error("This is an error-level log message!");
        LOG.fatal("This is a fatal-level log message!");
        return "ok";
    }
}
```
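Which of these four calls actually reach Kafka follows from the levels configured above. The sketch below is a hand-written simulation of that routing, not log4j itself; `LogRoutingSketch` is a hypothetical name, it models only the five levels involved, and it assumes this article's settings (logger level INFO, no threshold on `stdout`, `Threshold=ERROR` on `kafka0`).

```java
import java.util.ArrayList;
import java.util.List;

// Simulates which appenders receive each call, given a logger level and the
// kafka0 threshold. stdout has no threshold, so it accepts whatever the logger lets through.
final class LogRoutingSketch {

    // Subset of log4j 1.x levels, lowest to highest.
    enum Level { DEBUG, INFO, WARN, ERROR, FATAL }

    static List<String> route(Level loggerLevel, Level kafkaThreshold, Level... calls) {
        List<String> out = new ArrayList<>();
        for (Level call : calls) {
            if (call.compareTo(loggerLevel) < 0) {
                out.add(call + " -> dropped");            // below the logger level
            } else if (call.compareTo(kafkaThreshold) >= 0) {
                out.add(call + " -> stdout, kafka0");     // passes the kafka0 threshold too
            } else {
                out.add(call + " -> stdout");
            }
        }
        return out;
    }

    public static void main(String[] args) {
        route(Level.INFO, Level.ERROR, Level.DEBUG, Level.INFO, Level.ERROR, Level.FATAL)
            .forEach(System.out::println);
    }
}
```

Running `main` prints `DEBUG -> dropped`, `INFO -> stdout`, `ERROR -> stdout, kafka0` and `FATAL -> stdout, kafka0`, so each request to /Logs should put two records (ERROR and FATAL) on the system-log-test topic.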

浙公网安备 33010602011771号
