JDBC批量插入大量数据(高性能)

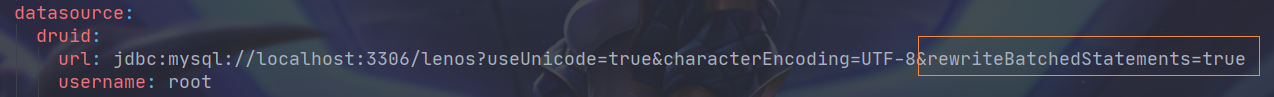

rewriteBatchedStatements

使用jdbc批量插入功能,rewriteBatchedStatements参数必不可少。对插入而言,所谓的 rewrite 其实就是将一批插入拼接成 insert into xxx values (a),(b),(c)...这样一条语句的形式然后执行,这样一来跟拼接 sql 的效果是一样的。但是此参数默认false,即便使用批处理,性能依然很差。

原因是这样的:

- 如果批量语句中的某些语句失败,则默认重写会导致所有语句都失败。

- 批量语句的某些语句参数不一样,则默认重写会使得查询缓存未命中。

因此如果需要使用jdbc批量插入功能,则在数据库连接URL中添加此参数为true。

上代码

测试环境为springboot,mybatis,mysql5.7.23。

package test;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.util.Objects;

import org.apache.ibatis.session.SqlSession;

import org.apache.ibatis.session.SqlSessionFactory;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.mybatis.spring.SqlSessionTemplate;

import org.mybatis.spring.SqlSessionUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.jdbc.support.JdbcUtils;

import org.springframework.test.context.junit4.SpringRunner;

import org.springframework.util.StopWatch;

import com.len.LenApplication;

/**

* 测试jdbc批量插入性能。

*

* @author chenxing

*/

@RunWith(SpringRunner.class)

@SpringBootTest(classes = LenApplication.class, webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT)

public class JdbcBatchTest {

private static final String SAVE_BATCH = "insert into test_demo(a,b,c,d,e,f,g,h,i,j) values(?,?,?,?,?,?,?,?,?,?)";

private static final Logger log = LoggerFactory.getLogger(JdbcBatchTest.class);

@Autowired

SqlSessionTemplate sqlSessionTemplate;

@Test

public void testStartJob() {

SqlSessionFactory sqlSessionFactory = sqlSessionTemplate.getSqlSessionFactory();

SqlSession sqlSession = null;

Connection connection = null;

PreparedStatement statement = null;

try {

sqlSession = sqlSessionFactory.openSession(false);

connection = sqlSession.getConnection();

connection.setAutoCommit(false);

statement = connection.prepareStatement(SAVE_BATCH);

//模拟五万条数据

int max = 50000;

//每1000条分批

int batchSize = 1000;

int limit = Math.min(max, batchSize);

StopWatch stopWatch = new StopWatch();

stopWatch.start("JDBC save batch");

for (int i = 1; i <= max; i++) {

statement.setString(1, "a" + i);

statement.setString(2, "b" + i);

statement.setString(3, "c" + i);

statement.setString(4, "d" + i);

statement.setString(5, "e" + i);

statement.setString(6, "f" + i);

statement.setString(7, "g" + i);

statement.setString(8, "h" + i);

statement.setString(9, "i" + i);

statement.setString(10, "j" + i);

statement.addBatch();

if (i == limit) {

statement.executeBatch();

statement.clearBatch();

limit = Math.min(limit + batchSize, max);

}

}

connection.commit();

stopWatch.stop();

//实测五万数据(10个字段)插入6~7秒左右。

log.info(stopWatch.getLastTaskName(),":{}",stopWatch.getTotalTimeMillis());

} catch (SQLException e) {

e.printStackTrace();

log.error(e.getMessage());

} finally {

SqlSessionUtils.closeSqlSession(Objects.requireNonNull(sqlSession),sqlSessionFactory);

JdbcUtils.closeStatement(statement);

JdbcUtils.closeConnection(connection);

}

}

}

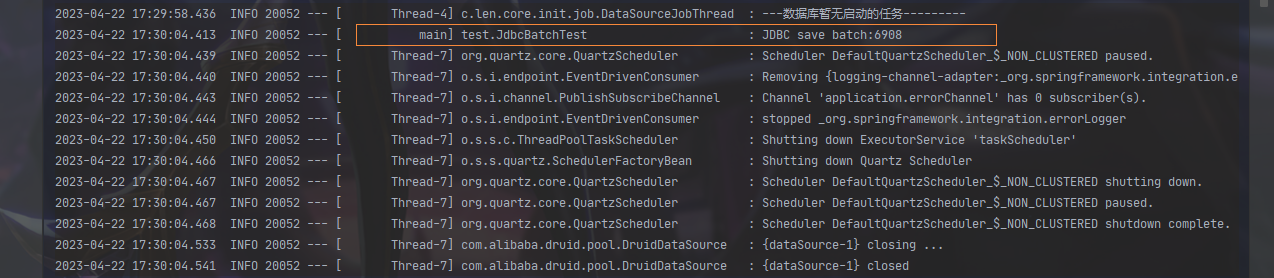

实测数据

此种方式是对比各种方式后得出的性能最佳,实测五万数据(10个字段)插入6~7秒左右。:

本文作者:_未来可期

本文链接:https://www.cnblogs.com/StarChen20/p/17343632.html

版权声明:本作品采用知识共享署名-非商业性使用-禁止演绎 2.5 中国大陆许可协议进行许可。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步