Next up is Hive.

Installing Hive is fairly simple.

Download the package, extract it, and configure hive-site.xml and hive-env.sh.

1. Download the Hive package

Download from this Apache mirror: http://mirror.bit.edu.cn/apache/hive/hive-2.3.3/

2. Extract into the hadoop directory (/opt/hadoop)

tar -zxvf apache-hive-2.3.3-bin.tar.gz # extract

mv apache-hive-2.3.3-bin hive2.3.3 # rename the directory for convenience

3. Configure the Hive environment variables

[hadoop@venn05 ~]$ more .bashrc
# .bashrc

# Source global definitions
if [ -f /etc/bashrc ]; then
    . /etc/bashrc
fi

# Uncomment the following line if you don't like systemctl's auto-paging feature:
# export SYSTEMD_PAGER=

# User specific aliases and functions

#jdk
export JAVA_HOME=/opt/hadoop/jdk1.8
export JRE_HOME=${JAVA_HOME}/jre
export CLASS_PATH=${JAVA_HOME}/lib:${JRE_HOME}/lib
export PATH=${JAVA_HOME}/bin:$PATH

#hadoop
export HADOOP_HOME=/opt/hadoop/hadoop3
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

#hive
export HIVE_HOME=/opt/hadoop/hive2.3.3
export HIVE_CONF_DIR=$HIVE_HOME/conf
export PATH=$HIVE_HOME/bin:$PATH
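After editing .bashrc, it helps to reload it and confirm the variables actually resolve. The following is a small sanity check rather than part of the original setup; the function name check_hive_env is made up for illustration.

```shell
# Hypothetical helper: report whether HIVE_HOME is set and its bin directory is on PATH.
check_hive_env() {
    if [ -z "${HIVE_HOME:-}" ]; then
        echo "HIVE_HOME is not set"
        return 1
    fi
    case ":$PATH:" in
        *":$HIVE_HOME/bin:"*) echo "ok: $HIVE_HOME/bin is on PATH" ;;
        *) echo "$HIVE_HOME/bin is missing from PATH"; return 1 ;;
    esac
}

# Typical use after editing ~/.bashrc:
#   source ~/.bashrc && check_hive_env
```

Wrapping PATH in colons before matching avoids false hits on directories that merely share a prefix with $HIVE_HOME/bin.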

4. Create the Hive directories on HDFS

Hive's working directories are configured as follows:

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp/hive</value>

<description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/<username> is created, with ${hive.scratch.dir.permission}.</description>

</property>

So create the following directories:

hadoop fs -mkdir -p /user/hive/warehouse # Hive warehouse location

hadoop fs -mkdir -p /tmp/hive/ # Hive scratch directory

# Grant permissions

hadoop fs -chmod -R 777 /user/hive/warehouse

hadoop fs -chmod -R 777 /tmp/hive

Note: the permissions must be granted, otherwise Hive fails with this error:

Logging initialized using configuration in jar:file:/opt/hadoop/hive2.3.3/lib/hive-common-2.3.3.jar!/hive-log4j2.properties Async: true
Exception in thread "main" java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwxr-xr-x
    at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:720)
    at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:650)
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:582)
    at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:549)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:750)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:239)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:153)
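The trace reports /tmp/hive at rwxr-xr-x (755), which gives group and others no write access. The hive.exec.scratchdir description mentions 733, so 777 is more than the minimum. The sketch below assumes Hive's check amounts to requiring every bit of 733 to be set; that is an inference from the error message and the property description, not something confirmed against the Hive source. The function name is hypothetical.

```shell
# Hypothetical helper: does an octal mode include every bit of 733?
# Assumption: the root scratch dir is accepted when (mode & 733) == 733.
# $1 = three-digit octal mode, e.g. 755
scratch_mode_ok() {
    [ $(( 0$1 & 0733 )) -eq $(( 0733 )) ]
}

for m in 755 733 777; do
    if scratch_mode_ok "$m"; then
        echo "$m: accepted"
    else
        echo "$m: rejected"
    fi
done
```

Under that assumption 755 is rejected while both 733 and 777 pass, which matches the error shown for rwxr-xr-x.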

5. Edit hive-site.xml

cp hive-default.xml.template hive-site.xml

vim hive-site.xml

Change 1: replace every "${system:java.io.tmpdir}" in hive-site.xml with a concrete directory, /opt/hadoop/hive2.3.3/tmp (4 occurrences).

Change 2: replace every "${system:user.name}" in hive-site.xml with a concrete user name, root (3 occurrences).

<property>

<name>hive.exec.local.scratchdir</name>

<value>${system:java.io.tmpdir}/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>${system:java.io.tmpdir}/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>${system:java.io.tmpdir}/${system:user.name}</value>

<description>Location of Hive run time structured log file</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>${system:java.io.tmpdir}/${system:user.name}/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>

Change them to:

<property>

<name>hive.exec.local.scratchdir</name>

<value>/opt/hadoop/hive2.3.3/tmp/root</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/opt/hadoop/hive2.3.3/tmp/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>/opt/hadoop/hive2.3.3/tmp/root</value>

<description>Location of Hive run time structured log file</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/opt/hadoop/hive2.3.3/tmp/root/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>
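The two substitutions can also be scripted instead of edited by hand. This is a minimal sketch using sed, with the same target directory and user name as in the text; the function name and the .bak backup are my additions, and it assumes the replacement values contain no "#" or "&" characters.

```shell
# Hypothetical helper: replace the two ${system:...} placeholders in a hive-site.xml.
# $1 = path to hive-site.xml, $2 = local tmp directory, $3 = user name
fix_system_placeholders() {
    cp "$1" "$1.bak" &&    # keep a backup of the unmodified file
    sed -e 's#[$]{system:java[.]io[.]tmpdir}#'"$2"'#g' \
        -e 's#[$]{system:user[.]name}#'"$3"'#g' "$1.bak" > "$1"
}

# Typical use (paths taken from the text above):
#   mkdir -p /opt/hadoop/hive2.3.3/tmp
#   fix_system_placeholders "$HIVE_CONF_DIR/hive-site.xml" /opt/hadoop/hive2.3.3/tmp root
```

Other placeholders such as ${hive.session.id} are left untouched, because only the two system: patterns are matched.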

Configure MySQL as the metastore database:

mysql> CREATE USER 'hive'@'%' IDENTIFIED BY 'hive'; # create the hive user
Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL ON *.* TO 'hive'@'%'; # grant privileges
Query OK, 0 rows affected (0.00 sec)

Update the database settings:

<!-- MySQL driver -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<!-- Connection URL -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://venn05:3306/hive?createDatabaseIfNotExist=true</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<!-- User name -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

<!-- Password -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

<description>password to use against metastore database</description>

</property>
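A hive-site.xml copied from the template is long, so it is easy to edit the wrong property. One quick way to confirm what a property is currently set to is sketched below. It assumes that <name> and <value> sit on separate lines, as they do in the template; a real XML parser would be more robust. The function name get_hive_prop is made up.

```shell
# Hypothetical helper: print the value of one property from a hive-site.xml.
# $1 = property name, $2 = path to hive-site.xml
get_hive_prop() {
    awk -v name="$1" '
        # Remember that the wanted <name> line has been seen.
        index($0, "<name>" name "</name>") { found = 1; next }
        # The first <value> line after it holds the setting.
        found && /<value>/ {
            sub(/.*<value>/, "")
            sub(/<\/value>.*/, "")
            print
            exit
        }
    ' "$2"
}

# Typical use:
#   get_hive_prop javax.jdo.option.ConnectionURL "$HIVE_CONF_DIR/hive-site.xml"
```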

6. Edit hive-env.sh

cp hive-env.sh.template hive-env.sh

vim hive-env.sh

Append the following at the end:

export HADOOP_HOME=/opt/hadoop/hadoop3

export HIVE_CONF_DIR=/opt/hadoop/hive2.3.3/conf

export HIVE_AUX_JARS_PATH=/opt/hadoop/hive2.3.3/lib
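If the setup is scripted or repeated, appending these lines blindly leaves duplicates in hive-env.sh. Here is a minimal sketch of an idempotent append; the helper name append_once is hypothetical.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not already there.
# $1 = line to add, $2 = target file
append_once() {
    grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# Typical use (values taken from the text above):
#   append_once 'export HADOOP_HOME=/opt/hadoop/hadoop3' hive-env.sh
#   append_once 'export HIVE_CONF_DIR=/opt/hadoop/hive2.3.3/conf' hive-env.sh
#   append_once 'export HIVE_AUX_JARS_PATH=/opt/hadoop/hive2.3.3/lib' hive-env.sh
```

The -x and -F options make grep compare whole lines literally, so a commented-out copy of the line does not count as already present.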

7. Upload the MySQL driver JAR

Upload it to: $HIVE_HOME/lib
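The driver class com.mysql.jdbc.Driver configured in hive-site.xml belongs to Connector/J 5.x; Connector/J 8.x renamed it to com.mysql.cj.jdbc.Driver, so the uploaded JAR should match the configured class. A quick check that a driver JAR is actually in place is sketched below, assuming the file name starts with mysql-connector (the exact name depends on the version downloaded). The function name is hypothetical.

```shell
# Hypothetical helper: succeed if a MySQL Connector/J JAR is present in Hive's lib directory.
# $1 = Hive install directory (defaults to $HIVE_HOME)
has_mysql_driver() {
    for jar in "${1:-$HIVE_HOME}"/lib/mysql-connector*.jar; do
        [ -e "$jar" ] && return 0
    done
    return 1
}

# Typical use:
#   has_mysql_driver || echo "MySQL driver JAR missing from $HIVE_HOME/lib"
```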

8. Initialize Hive

schematool -initSchema -dbType mysql

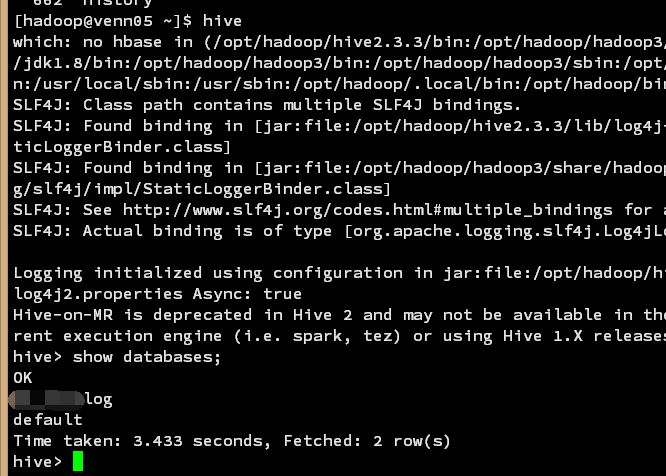

9. Start Hive

hive
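Besides opening the interactive shell, a one-off query makes a convenient smoke test that the CLI, HDFS and the MySQL metastore all work together. The sketch below uses the CLI's -e option to run a single statement; the wrapper function and its message are my additions.

```shell
# Hypothetical helper: run one trivial query through the Hive CLI as a smoke test.
hive_smoke_test() {
    if ! command -v hive >/dev/null 2>&1; then
        echo "hive not found on PATH"
        return 127
    fi
    # On a freshly initialized metastore this should list at least "default".
    hive -e 'show databases;'
}

# Typical use:
#   hive_smoke_test
```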

Done.

