Flink sql redis lookup source

flink 版本: 1.14.3

redis lookup source 实现已经有一段时间了,之前实现的只能查询 string/hash 两种类型的数据,查询方式和返回结果都比较死板(hash 只能查某个key/feild 的值),对应其他类型没有好的思路来兼容

今天在review 的时候,有了新的灵感,想到了可以兼容所有类型数据的方式,如下:

-

- 首先设计通用的 redis 临时表结构: key/filed/value, 分别对应 redis 结构中的 key、filed(hash 类型才有,其他类型值为 key )、value

-

- 如果key 不存在,返回 null

-

- 对于 string 类型,直接返回对应的 value

-

- 对于 list、set、zset 返回多条数据,每条数据对应数据结构中的一个 value

-

- 对于 hash 有两种查询方法

- 输入一个参数,把该参数作为 key,返回 key 对应的 所有 filed 和 value,每个组合是一条数据

- 输入两个参数,把输入参数作为 key 和 filed,返回 key/filed 对应的 value

输入输出表格如下:

| 类型 | 输入参数 | 输出结果 | 说明 |

|---|---|---|---|

| string | key | value | 输入 key, 返回 value |

| list | key | list | 输入 key,返回 key 对应的 list,对 flink 来说,相当于 1 对 N,返回多条数据 |

| set | key | list | 输入 key,返回 key 对应的 set, flink 来说,相当于 1 对 N,返回多条数据 |

| zset | key | list | 输入 key,返回 key 对应的 zset, flink 来说,相当于 1 对 N,返回多条数据 |

| hash | key | field and vlaue list | 输入 key,返回 key 对应的 hash, flink 来说,相当于 1 对 N,返回多条数据, 并且每条数据包含 对应的 filed 和 value |

| hash | key,field | value | 输入 key、filed, 返回 value |

设计完成

- 注: 还有一个设计思路,在 redis 表上新增一个参数,控制查询到的redis 结果的组织形式: 行式,列式

- 行式: 每个结果都是一条数据,如list包含多个结果,返回多条数据(默认)

- 列式: 基于key 查询的所有结果,组成一个数组返回 (未实现,懒得写了,各位同学有需要可以实现一下)

实现 redis lookup source

详细代码不再赘述,这里这贴出核心代码,就三部分

- 查缓存

- 查reids,解析结果

- 构造输出,放缓存

public void eval(Object... keys) {

RowData keyRow = GenericRowData.of(keys);

// get row from cache

if (cache != null) {

List<RowData> cachedRows = cache.getIfPresent(keyRow);

if (cachedRows != null) {

for (RowData cachedRow : cachedRows) {

collect(cachedRow);

}

return;

}

}

// 返回数据构造为 tuple2 类型,放 filed 和 value,如果没有 filed,把 key 放入 filed 中

List<Tuple2<String, String>> list = new ArrayList<>();

// 转换获取 key

String key = keys[0].toString();

// 查询 key 对应的 数据类型

String type = command.type(key);

// query redis, retry maxRetryTimes count

for (int retry = 0; retry <= maxRetryTimes; retry++) {

try {

// 对于不同类型key,分别处理,结果放到 list 中

switch (type) {

case "string":

String result = command.get(key);

list.add(new Tuple2<>(key, result));

break;

case "list":

// list 获取 key 的全部数据

List<String> result1 = command.lrange(key, 0, -1);

result1.forEach((String v) -> list.add(new Tuple2<>(key, v)));

break;

case "set":

// set 获取 key 的全部数据

Set<String> result5 = command.smembers(key);

for (int i = 0; i < result5.size(); i++) {

result5.forEach((String v) -> list.add(new Tuple2<>(key, v)));

}

break;

case "zset":

// zset 获取 key 的全部数据

List<String> result6 = command.zrange(key, 0, -1);

result6.forEach((String v) -> list.add(new Tuple2<>(key, v)));

break;

case "hash":

// hash 类型,根据输入参数的个数,判断返回全部 map 还是只获取一个 值

if (keys.length == 2) {

// 输入两个参数,第二个参数作为 filed

String filed = keys[1].toString();

String result3 = command.hget(key, filed);

list.add(new Tuple2<>(key, result3));

} else {

// 只有一个参数,作为 key,获取 key 对应的 map

Map<String, String> result4 = command.hgetall(key);

result4.entrySet().forEach((Map.Entry<String, String> item) -> list.add(new Tuple2<>(item.getKey(), item.getValue())));

}

break;

default:

LOG.debug("nothing");

break;

}

break;

} catch (Exception e) {

e.printStackTrace();

}

}

// 构造输出 数据,并放入 换存中

if (list.size() > 0) {

List<RowData> cacheList = new ArrayList<>();

for (Tuple2<String, String> item : list) {

GenericRowData row = new GenericRowData(3);

row.setField(0, StringData.fromString(key));

row.setField(1, StringData.fromString(item.f0));

row.setField(2, StringData.fromString(item.f1));

row.setRowKind(RowKind.INSERT);

// 输出

collect(row);

cacheList.add(row);

}

// 放入缓存

cache.put(keyRow, cacheList);

}

}

完整代码参见: github sqlSubmit RedisRowDataLookUpFunction

测试 sql

-- Lookup Source: Sync Mode

-- kafka source

CREATE TABLE user_log (

user_id STRING

,item_id STRING

,category_id STRING

,behavior STRING

,ts TIMESTAMP(3)

,process_time as proctime()

, WATERMARK FOR ts AS ts - INTERVAL '5' SECOND

) WITH (

'connector' = 'kafka'

,'topic' = 'user_log'

,'properties.bootstrap.servers' = 'localhost:9092'

,'properties.group.id' = 'user_log'

,'scan.startup.mode' = 'latest-offset'

,'format' = 'json'

);

CREATE TEMPORARY TABLE redis_table (

`key` STRING

,filed STRING

,`value` STRING

) WITH (

'connector' = 'cust-redis'

,'redis.url' = 'redis://localhost?timeout=3000' -- url with timeout

,'lookup.cache.max.size' = '28'

,'lookup.cache.expire.ms' = '3600000' -- ttl time 超过这么长时间无数据才行

,'pass' = '11' -- redis pass

);

---sinkTable

CREATE TABLE kakfa_join_redis_sink (

user_id STRING

,item_id STRING

,category_id STRING

,behavior STRING

,behavior_map STRING

,ts TIMESTAMP(3)

,primary key (user_id) not enforced

) WITH (

'connector' = 'print'

);

-- sting/list/set/zset/hash test sql

INSERT INTO kakfa_join_redis_sink(user_id, item_id, category_id, behavior, behavior_map, ts)

SELECT a.user_id, a.item_id, a.category_id, a.behavior, b.`value`, a.ts

FROM user_log a

left join redis_table FOR SYSTEM_TIME AS OF a.process_time AS b

ON a.behavior = b.`key`

where a.behavior is not null;

-- hash multiple input

-- INSERT INTO kakfa_join_redis_sink(user_id, item_id, category_id, behavior, behavior_map, ts)

-- SELECT a.user_id, a.item_id, a.category_id, a.behavior, b.`value`, a.ts

-- FROM user_log a

-- left join redis_table FOR SYSTEM_TIME AS OF a.process_time AS b

-- ON a.behavior = b.key and a.item = c.filed

-- where a.behavior is not null;

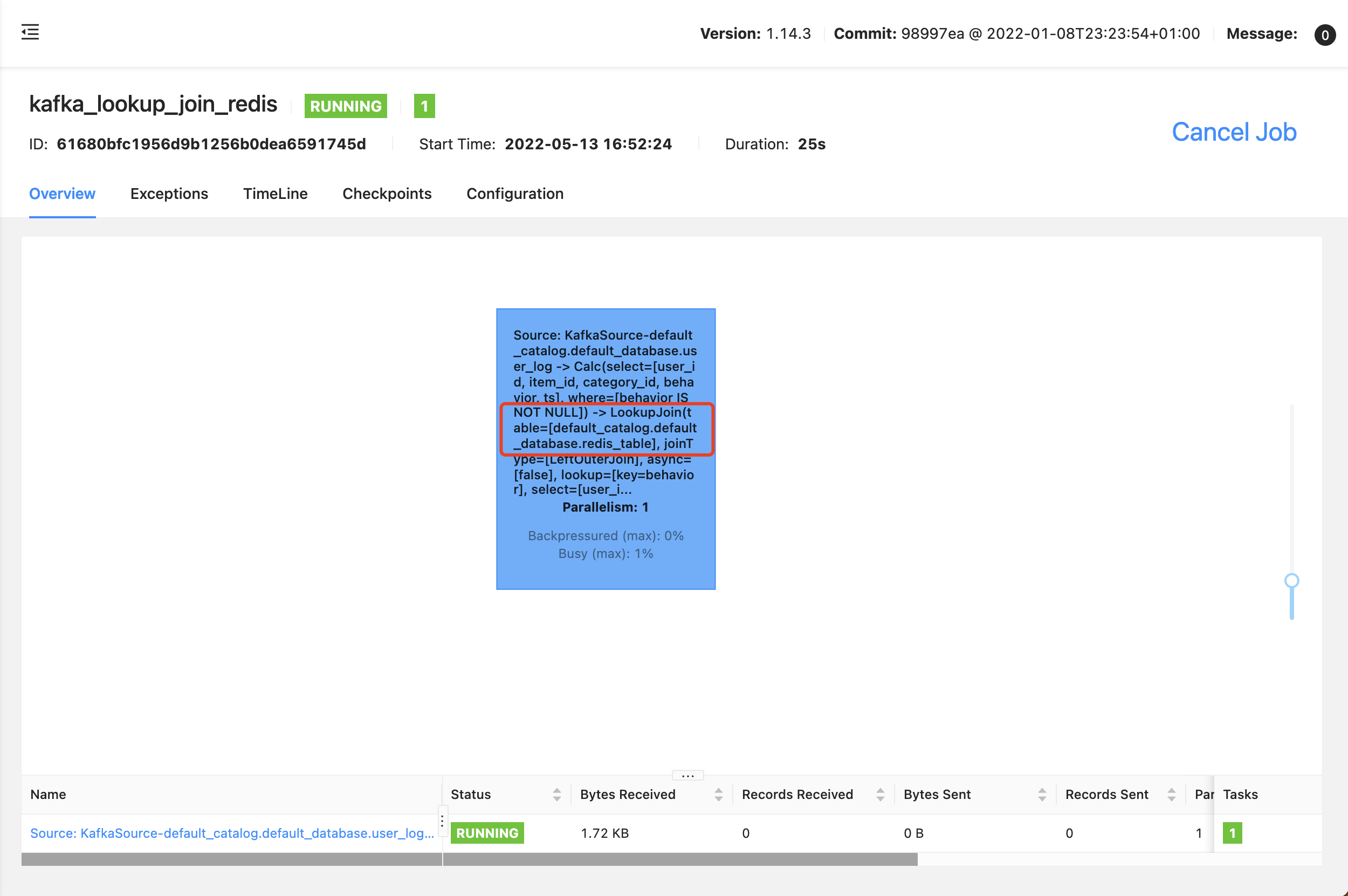

任务流图如下:

redis 测试数据集

# string, 查看数据: get ppv

set ppv ppv_value

set buy buy_value

set car car_value

## list, 查看数据: range ppv_list 0 -1

rpush ppv_list ppv_list_value_1 ppv_list_value_2 ppv_list_value_3

rpush buy_list buy_list_value_1 buy_list_value_2 buy_list_value_3

rpush car_list car_list_value_1 car_list_value_2 car_list_value_3

## set, 查看数据:smembers ppv_set

sadd ppv_set ppv_set_value_1 ppv_set_value_2 ppv_set_value_3

sadd buy_set buy_set_value_1 buy_set_value_2 buy_set_value_3

sadd car_set car_set_value_1 car_set_value_2 car_set_value_3

## zset,查看数据:zcard ppv_zset

zadd ppv_zset 0.1 ppv_zset_value_1 0.2 ppv_zset_value_2 0.3 ppv_zset_value_3

zadd buy_zset 0.1 buy_zset_value_1 0.2 buy_zset_value_2 0.3 buy_zset_value_3

zadd car_zset 0.1 car_zset_value_1 0.2 car_zset_value_2 0.3 car_zset_value_3

## hash, 查看数据: hgetall ppv_hash

hset ppv_hash ppv_hash_key_1 ppv_hash_value_1 ppv_hash_key_2 ppv_hash_value_2 ppv_hash_key_3 ppv_hash_value_3

hset buy_hash buy_hash_key_1 buy_hash_value_1 buy_hash_key_2 buy_hash_value_2 buy_hash_key_3 buy_hash_value_3

hset car_hash car_hash_key_1 car_hash_value_1 car_hash_key_2 car_hash_value_2 car_hash_key_3 car_hash_value_3

测试

string 类型

测试数据:

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"ppv","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"buy","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"car","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"fav","ts":"2022-05-13 17:00:08.815"}

输出数据:

+I[1498, item, 10, ppv, ppv_value, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy, buy_value, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car, car_value, 2022-05-13T17:00:08.815]

+I[1498, item, 10, fav, null, 2022-05-13T17:00:08.815]

list 类型

测试数据:

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"ppv_list","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"buy_list","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"car_list","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"fav_list","ts":"2022-05-13 17:00:08.815"}

输出数据:

+I[1498, item, 10, ppv_list, ppv_list_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, ppv_list, ppv_list_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, ppv_list, ppv_list_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_list, buy_list_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_list, buy_list_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_list, buy_list_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_list, car_list_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_list, car_list_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_list, car_list_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, fav_list, null, 2022-05-13T17:00:08.815]

set 类型

测试数据:

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"ppv_set","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"buy_set","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"car_set","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"fav_set","ts":"2022-05-13 17:00:08.815"}

输出数据:

+I[1498, item, 10, ppv_set, ppv_set_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, ppv_set, ppv_set_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, ppv_set, ppv_set_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_set, buy_set_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_set, buy_set_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_set, buy_set_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_set, car_set_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_set, car_set_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_set, car_set_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, fav_set, null, 2022-05-13T17:00:08.815]

zset 类型

测试数据:

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"ppv_zset","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"buy_zset","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"car_zset","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"fav_zset","ts":"2022-05-13 17:00:08.815"}

输出数据:

+I[1498, item, 10, ppv_zset, ppv_zset_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, ppv_zset, ppv_zset_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, ppv_zset, ppv_zset_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_zset, buy_zset_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_zset, buy_zset_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_zset, buy_zset_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_zset, car_zset_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_zset, car_zset_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_zset, car_zset_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, fav_zset, null, 2022-05-13T17:00:08.815

hash 类型,一个输入参数

CREATE TABLE kakfa_join_redis_sink_1 (

user_id STRING

,item_id STRING

,category_id STRING

,behavior STRING

,behavior_key STRING

,behavior_map STRING

,ts TIMESTAMP(3)

,primary key (user_id) not enforced

) WITH (

'connector' = 'print'

)

-- hash single input

INSERT INTO kakfa_join_redis_sink_1(user_id, item_id, category_id, behavior, behavior_key,behavior_map, ts)

SELECT a.user_id, a.item_id, a.category_id, a.behavior,b.filed, b.`value`, a.ts

FROM user_log a

left join redis_table FOR SYSTEM_TIME AS OF a.process_time AS b

ON a.behavior = b.key

where a.behavior is not null;

测试数据:

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"ppv_hash","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"buy_hash","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"car_hash","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"fav_hash","ts":"2022-05-13 17:00:08.815"}

输出数据:

+I[1498, item, 10, ppv_hash, ppv_hash_key_1, ppv_hash_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, ppv_hash, ppv_hash_key_2, ppv_hash_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, ppv_hash, ppv_hash_key_3, ppv_hash_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_hash, buy_hash_key_1, buy_hash_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_hash, buy_hash_key_2, buy_hash_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, buy_hash, buy_hash_key_3, buy_hash_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_hash, car_hash_key_1, car_hash_value_1, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_hash, car_hash_key_2, car_hash_value_2, 2022-05-13T17:00:08.815]

+I[1498, item, 10, car_hash, car_hash_key_3, car_hash_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, fav_hash, null, null, 2022-05-13T17:00:08.815]

hash 类型,两个输入参数

-- hash multiple input

INSERT INTO kakfa_join_redis_sink_1(user_id, item_id, category_id, behavior, behavior_key,behavior_map, ts)

SELECT a.user_id, a.item_id, a.category_id, a.behavior,b.filed, b.`value`, a.ts

FROM user_log a

left join redis_table FOR SYSTEM_TIME AS OF a.process_time AS b

ON a.behavior = b.key and a.item = b.filed

where a.behavior is not null;

测试数据:

{"category_id":10,"user_id":"1498","item_id":"ppv_hash_key_1","behavior":"ppv_hash","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","buy_hash_key_1":"buy_hash","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","car_hash_key_1":"car_hash","ts":"2022-05-13 17:00:08.815"}

{"category_id":10,"user_id":"1498","item_id":"item","behavior":"fav_hash","ts":"2022-05-13 17:00:08.815"}

输出数据:

+I[1498, ppv_hash_key_1, 10, ppv_hash, ppv_hash_key_1, ppv_hash_value_1, 2022-05-13T17:00:08.815]

+I[1498, ppv_hash_key_1, 10, ppv_hash, ppv_hash_key_2, ppv_hash_value_2, 2022-05-13T17:00:08.815]

+I[1498, ppv_hash_key_1, 10, ppv_hash, ppv_hash_key_3, ppv_hash_value_3, 2022-05-13T17:00:08.815]

+I[1498, item, 10, fav_hash, null, null, 2022-05-13T17:00:08.815]

搞定

- 注: pass 参数设计输入密码参数没有测试过

- 注:完整代码参见: github sqlSubmit kafka_lookup_join_redis.sql

欢迎关注Flink菜鸟公众号,会不定期更新Flink(开发技术)相关的推文

浙公网安备 33010602011771号

浙公网安备 33010602011771号