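Flink version: 1.14.3

Note: this post looks at how windows are triggered in the Flink SQL API and the Stream API.

A few days ago someone asked me about this: they were not clear on how Flink window triggering works, and did not know how many more times a window can fire after it has ended.

Let's split the question into two phases:

- Before the window ends

- After the window ends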

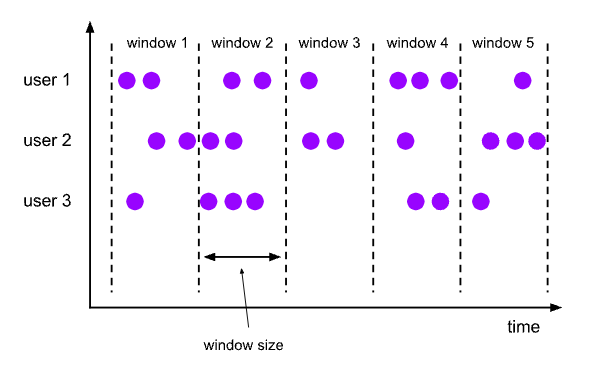

Let's set up a few window scenarios to illustrate the question.

Flink SQL

Common table DDL

CREATE TABLE user_log

(

user_id VARCHAR,

item_id VARCHAR,

category_id VARCHAR,

behavior VARCHAR,

ts TIMESTAMP(3),

WATERMARK FOR ts AS ts - INTERVAL '1' MINUTES

) WITH (

'connector' = 'kafka'

,'topic' = 'user_log'

,'properties.bootstrap.servers' = 'localhost:9092'

,'properties.group.id' = 'user_log'

,'scan.startup.mode' = 'earliest-offset'

,'format' = 'json'

);

CREATE TABLE user_log_sink

(

start_time timestamp(3),

end_time timestamp(3),

coun int

) WITH (

'connector' = 'print'

);

All examples use event time, so you can control the input data yourself.

Tumble window

First, an example

The SQL is as follows

insert into user_log_sink

select window_start, window_end, count(user_id)

from TABLE(

TUMBLE(TABLE user_log, DESCRIPTOR(ts), INTERVAL '10' MINUTES))

group by window_start, window_end;

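- Before the window ends: each window fires exactly once, when it ends. The window ends when the watermark reaches minute 10 (the event time that pushes it there is minute 11, because the watermark is the event time minus 1 minute, as defined in the DDL)

- After the window ends: it does not fire again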
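The tumbling-window behaviour above can be replayed outside Flink. The following is a minimal Java sketch, not Flink code. It assumes timestamps are milliseconds counted from 2022-04-22 00:00:00, that the watermark is simply the largest event time seen so far minus the 1-minute delay from the DDL, and that a window fires once the watermark covers its last millisecond. `TumbleFireSketch`, `windowStart` and `replay` are hypothetical names introduced only for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class TumbleFireSketch {
    static final long MINUTE = 60_000L;

    /** Start of the tumbling window containing ts (windows aligned to 0, no offset). */
    static long windowStart(long ts, long size) {
        return ts - Math.floorMod(ts, size);
    }

    /**
     * Replays events in arrival order with watermark = max(ts) - delay (an assumption
     * that mirrors the DDL). Returns one "arrivalIndex:windowStart:count" per firing.
     */
    static List<String> replay(long[] events, long size, long delay) {
        TreeMap<Long, Integer> open = new TreeMap<>(); // window start -> record count
        List<String> fired = new ArrayList<>();
        long watermark = Long.MIN_VALUE;
        for (int i = 0; i < events.length; i++) {
            long start = windowStart(events[i], size);
            // A record whose window has already fired is late and is dropped.
            if (start + size - 1 > watermark) {
                open.merge(start, 1, Integer::sum);
            }
            watermark = Math.max(watermark, events[i] - delay);
            // Fire every open window whose last millisecond is covered by the watermark.
            while (!open.isEmpty() && open.firstKey() + size - 1 <= watermark) {
                Map.Entry<Long, Integer> w = open.pollFirstEntry();
                fired.add(i + ":" + w.getKey() + ":" + w.getValue());
            }
        }
        return fired;
    }

    public static void main(String[] args) {
        // Same six timestamps as the Tumble test data, relative to midnight.
        long[] events = {1L, 10 * MINUTE + 1, 10 * MINUTE + 1,
                         9 * MINUTE + 30_001, 11 * MINUTE + 1, 10 * MINUTE + 30_001};
        System.out.println(replay(events, 10 * MINUTE, MINUTE));
    }
}
```

Under these assumptions the replay reports a single firing, caused by the record at arrival index 4 (the 00:11:00.001 record), for the window starting at 0 with a count of 2.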

Test data

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:00:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:10:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:10:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:30.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:11:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:10:30.001"}

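Here are some questions to think about. There are quite a few of them; jot down short answers, because doing so helps a lot in understanding window triggering:

Question 1: When does the window fire?

Question 2: What is the count of the 0 - 10 minute window?

HOP window

First, an example

The SQL is as follows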

insert into user_log_sink

select window_start, window_end, count(user_id)

from TABLE(

HOP(TABLE user_log, DESCRIPTOR(ts), INTERVAL '2' MINUTES, INTERVAL '10' MINUTES))

group by window_start, window_end;

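- Before the window ends: each window fires once, when the watermark reaches that window's end time. The only difference from a tumbling window is that the windows slide, so every record belongs to several windows (size / slide of them, which is 5 in this example)

- After the window ends: it does not fire again

Note: for HOP windows the window size must be an integer multiple of the slide, otherwise the job fails with an error like this: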

Exception in thread "main" org.apache.flink.table.api.TableException: HOP table function based aggregate requires size must be an integral multiple of slide, but got size 600000 ms and slide 180000 ms

at org.apache.flink.table.planner.plan.nodes.exec.stream.StreamExecWindowAggregateBase.createSliceAssigner(StreamExecWindowAggregateBase.java:115)

at org.apache.flink.table.planner.plan.nodes.exec.stream.StreamExecWindowAggregateBase.createSliceAssigner(StreamExecWindowAggregateBase.java:92)

at org.apache.flink.table.planner.plan.nodes.exec.stream.StreamExecLocalWindowAggregate.translateToPlanInternal(StreamExecLocalWindowAggregate.java:125)

at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:134)

at org.apache.flink.table.planner.plan.nodes.exec.ExecEdge.translateToPlan(ExecEdge.java:250)

at org.apache.flink.table.planner.plan.nodes.exec.stream.StreamExecExchange.translateToPlanInternal(StreamExecExchange.java:75)

at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:134)

at org.apache.flink.table.planner.plan.nodes.exec.ExecEdge.translateToPlan(ExecEdge.java:250)

at org.apache.flink.table.planner.plan.nodes.exec.stream.StreamExecGlobalWindowAggregate.translateToPlanInternal(StreamExecGlobalWindowAggregate.java:139)

at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:134)

at org.apache.flink.table.planner.plan.nodes.exec.ExecEdge.translateToPlan(ExecEdge.java:250)

at org.apache.flink.table.planner.plan.nodes.exec.common.CommonExecCalc.translateToPlanInternal(CommonExecCalc.java:96)

at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:134)

at org.apache.flink.table.planner.plan.nodes.exec.ExecEdge.translateToPlan(ExecEdge.java:250)

at org.apache.flink.table.planner.plan.nodes.exec.stream.StreamExecSink.translateToPlanInternal(StreamExecSink.java:114)

at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:134)

at org.apache.flink.table.planner.delegation.StreamPlanner.$anonfun$translateToPlan$1(StreamPlanner.scala:71)

at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:233)

at scala.collection.Iterator.foreach(Iterator.scala:937)

at scala.collection.Iterator.foreach$(Iterator.scala:937)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1425)

at scala.collection.IterableLike.foreach(IterableLike.scala:70)

at scala.collection.IterableLike.foreach$(IterableLike.scala:69)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike.map(TraversableLike.scala:233)

at scala.collection.TraversableLike.map$(TraversableLike.scala:226)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)

at org.apache.flink.table.planner.delegation.StreamPlanner.translateToPlan(StreamPlanner.scala:70)

at org.apache.flink.table.planner.delegation.PlannerBase.translate(PlannerBase.scala:185)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.translate(TableEnvironmentImpl.java:1665)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:752)

at org.apache.flink.table.api.internal.StatementSetImpl.execute(StatementSetImpl.java:124)

at com.rookie.submit.main.SqlSubmit$.main(SqlSubmit.scala:91)

at com.rookie.submit.main.SqlSubmit.main(SqlSubmit.scala)
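To make the HOP rules above concrete, here is a small Java sketch that lists every sliding window a single record falls into and rejects a size that is not a multiple of the slide. It is a plain-JDK illustration modelled on the usual sliding-window assignment loop, not the planner code from the stack trace; `HopAssignSketch` and `hopWindows` are hypothetical names, and timestamps are assumed to be milliseconds counted from 2022-04-22 00:00:00.

```java
import java.util.ArrayList;
import java.util.List;

class HopAssignSketch {
    /** Returns {start, end} of every sliding window containing ts, newest start first. */
    static List<long[]> hopWindows(long ts, long slide, long size) {
        if (size % slide != 0) {
            throw new IllegalArgumentException(
                "size must be an integral multiple of slide, but got size "
                    + size + " ms and slide " + slide + " ms");
        }
        List<long[]> windows = new ArrayList<>();
        // Latest window start that is not after ts, aligned to the slide.
        long lastStart = ts - Math.floorMod(ts, slide);
        // Walk backwards one slide at a time while the window still covers ts.
        for (long start = lastStart; start > ts - size; start -= slide) {
            windows.add(new long[] {start, start + size});
        }
        return windows;
    }

    public static void main(String[] args) {
        long minute = 60_000L;
        // The first test record, 00:00:00.001, with slide 2 min and size 10 min.
        for (long[] w : hopWindows(1L, 2 * minute, 10 * minute)) {
            System.out.println(w[0] / minute + " min .. " + w[1] / minute + " min");
        }
    }
}
```

For the first test record this yields five windows, from the one starting 8 minutes before midnight (23:52 to 00:02) up to the one starting at midnight (00:00 to 00:10). Calling `hopWindows` with a 3-minute slide and a 10-minute size throws, which corresponds to the situation in the exception above.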

Test data

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:00:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:01:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:02:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:03:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:04:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:05:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:06:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:07:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:08:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:10:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:30.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:11:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:31.001"}

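Question 3: When does a window fire for the first time?

Question 4: How many times do windows fire in total?

Question 5: What is the window range of each firing?

Question 6: What is the count of the 0 - 10 minute window?

Cumulate window

Sample SQL: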

insert into user_log_sink

select window_start, window_end, count(user_id)

from TABLE(

CUMULATE(TABLE user_log, DESCRIPTOR(ts), INTERVAL '2' MINUTES, INTERVAL '10' MINUTES))

group by window_start, window_end;

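- Before the window ends: it fires (max window size / step) times, which is 10 / 2 = 5 times in this example

- After the window ends: it does not fire again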
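The cumulate behaviour above can be sketched in plain Java as well. This is only a simulation under stated assumptions: timestamps are milliseconds counted from 2022-04-22 00:00:00, the watermark is the largest event time seen minus the 1-minute delay from the DDL, and a window fires once the watermark covers its last millisecond. `CumulateSketch`, `windowEnds` and `firedCounts` are hypothetical names, not Flink APIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

class CumulateSketch {
    /** End times of all cumulate windows containing ts; they all share one start. */
    static List<Long> windowEnds(long ts, long step, long maxSize) {
        if (maxSize % step != 0) {
            throw new IllegalArgumentException("max size must be a multiple of step");
        }
        long start = ts - Math.floorMod(ts, maxSize);
        List<Long> ends = new ArrayList<>();
        for (long end = start + step; end <= start + maxSize; end += step) {
            if (end > ts) {
                ends.add(end);
            }
        }
        return ends;
    }

    /** Replays events in arrival order; returns the count of each fired window, in firing order. */
    static List<Integer> firedCounts(long[] events, long step, long maxSize, long delay) {
        TreeMap<Long, Integer> open = new TreeMap<>(); // window end -> record count
        List<Integer> fired = new ArrayList<>();
        long watermark = Long.MIN_VALUE;
        for (long ts : events) {
            for (long end : windowEnds(ts, step, maxSize)) {
                // Skip windows that have already fired: the record is late for them.
                if (end - 1 > watermark) {
                    open.merge(end, 1, Integer::sum);
                }
            }
            watermark = Math.max(watermark, ts - delay);
            while (!open.isEmpty() && open.firstKey() - 1 <= watermark) {
                fired.add(open.pollFirstEntry().getValue());
            }
        }
        return fired;
    }

    public static void main(String[] args) {
        long minute = 60_000L;
        long[] events = new long[14];
        for (int i = 0; i <= 10; i++) {
            events[i] = i * minute + 1;        // 00:00:00.001 .. 00:10:00.001
        }
        events[11] = 9 * minute + 30_001;      // 00:09:30.001
        events[12] = 11 * minute + 1;          // 00:11:00.001
        events[13] = 9 * minute + 31_001;      // 00:09:31.001, arrives too late
        System.out.println(firedCounts(events, 2 * minute, 10 * minute, minute));
    }
}
```

With the Cumulate test data this simulation fires five times with counts 2, 4, 6, 8 and 11, and the final 00:09:31.001 record is not counted because its only remaining window has already fired.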

Test data

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:00:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:01:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:02:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:03:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:04:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:05:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:06:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:07:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:08:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:10:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:30.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:11:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:31.001"}

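Question 7: When does a window fire for the first time?

Question 8: How many times do windows fire in total?

Question 9: What is the window range of each firing?

Question 10: What is the count of the 0 - 10 minute window?

Stream API

Note: the concepts are similar, so only TumblingEventTimeWindows is shown

Note: the default trigger and evictor settings are used

Let's go straight to the core code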

// timestamp & watermark

.assignTimestampsAndWatermarks(WatermarkStrategy

// fixed out-of-orderness delay

.forBoundedOutOfOrderness(Duration.ofMinutes(1))

// timestamp

.withTimestampAssigner(TimestampAssignerSupplier.of(new SerializableTimestampAssigner[Behavior] {

override def extractTimestamp(element: Behavior, recordTimestamp: Long): Long =

element.getTs

}))

)

// tumbling window

.windowAll(TumblingEventTimeWindows.of(Time.minutes(10)))

// window function

.process(new ProcessAllWindowFunction[Behavior, String, TimeWindow]() {

override def process(context: Context, elements: Iterable[Behavior], out: Collector[String]): Unit = {

val window = context.window

val windowStart = DateTimeUtil.formatMillis(window.getStart / 1000, DateTimeUtil.YYYY_MM_DD_HH_MM_SS)

val windowEnd = DateTimeUtil.formatMillis(window.getEnd / 1000, DateTimeUtil.YYYY_MM_DD_HH_MM_SS)

var count = 0

val list = new util.ArrayList[String]()

elements.foreach(b => {

count += 1

list.add(DateTimeUtil.formatMillis(b.getTs / 1000, DateTimeUtil.YYYY_MM_DD_HH_MM_SS))

})

out.collect(windowStart + " - " + windowEnd + " : " + count + ", element : " + list.toString)

}

}

)

Test data

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:00:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:10:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:30.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:11:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:31.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:12:00.001"}

{"category_id":40,"user_id":"1","item_id":"40972","behavior":"pv","ts":"2022-04-22 00:09:32.001"}

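- Before the window ends: each window fires once, when it ends. The window ends when the watermark reaches minute 10 (the event time that pushes it there is minute 11, because the watermark is the event time minus 1 minute)

- After the window ends: until the watermark passes the window end plus the allowed lateness, every late record triggers one more firing, so N late records mean N extra firings. Each firing sees all records collected for the window up to that point (the window computation is re-triggered for allowed-late data). This requires `allowedLateness` to be set on the window; its default is 0, in which case late records are dropped, and the excerpt above does not show that call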
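The late-firing rule above can be checked with a small plain-Java simulation. It is a sketch under several assumptions, not the Flink operator itself: timestamps are milliseconds counted from 2022-04-22 00:00:00, the watermark advances after every record to (largest timestamp seen - delay - 1 ms), and the allowed lateness is taken to be 1 minute, a value the excerpt does not show. `LateFiringSketch` and `firings` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

class LateFiringSketch {
    /** Record counts emitted for the window [start, end), one entry per firing. */
    static List<Integer> firings(long[] events, long start, long end,
                                 long delay, long allowedLateness) {
        List<Integer> emitted = new ArrayList<>();
        long maxTimestamp = end - 1;          // last millisecond that belongs to the window
        long watermark = Long.MIN_VALUE;
        long maxSeen = Long.MIN_VALUE;
        int buffered = 0;
        for (long ts : events) {
            if (ts >= start && ts < end) {
                // Past window end + allowed lateness the record is dropped.
                boolean dropped = maxTimestamp + allowedLateness <= watermark;
                if (!dropped) {
                    buffered++;
                    if (maxTimestamp <= watermark) {
                        emitted.add(buffered); // late record: fires again with all records
                    }
                }
            }
            maxSeen = Math.max(maxSeen, ts);
            long newWatermark = maxSeen - delay - 1;
            // On-time firing: the watermark crosses the window's last millisecond.
            if (buffered > 0 && watermark < maxTimestamp && newWatermark >= maxTimestamp) {
                emitted.add(buffered);
            }
            watermark = newWatermark;
        }
        return emitted;
    }

    public static void main(String[] args) {
        long minute = 60_000L;
        // Same seven timestamps as the Stream API test data, relative to midnight.
        long[] events = {1L, 10 * minute + 1, 9 * minute + 30_001, 11 * minute + 1,
                         9 * minute + 31_001, 12 * minute + 1, 9 * minute + 32_001};
        System.out.println(firings(events, 0L, 10 * minute, minute, minute));
    }
}
```

Under these assumptions the 00:00 to 00:10 window fires twice, with counts 2 and 3: once when the 00:11:00.001 record moves the watermark past the window end, and once for the late 00:09:31.001 record. The 00:09:32.001 record arrives after the watermark has passed the window end plus the assumed lateness, so it is dropped. With an allowed lateness of 0 the same replay fires only once.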

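Question 11: When does the window fire?

Question 12: How many times does the window fire?

Question 13: What are the window's count values?

Summary

- In Flink, "window data" means records whose event time falls within the window's range

- The watermark reaching the window end time is the condition that triggers the window computation

- All "window data" that arrives before the watermark reaches the window end time is included in that window's result when the window ends

- "Window data" that arrives after the watermark has passed the window end time can still trigger the window computation if it is within the allowed lateness; that computation covers both the on-time data and the late data

- Flink SQL windows (the window TVFs used here) have no allowed-lateness parameter

Note: after reading these conclusions, go back and check the answers you wrote down

Answers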

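Question 1: When does the window fire? Answer: the computation fires when the minute-11 record arrives

Question 2: What is the count of the 0 - 10 minute window? Answer: 2, from the two records at 00:00 and 09:30

Question 3: When does a window fire for the first time? Answer: when the minute-03 record arrives

Question 4: How many times do windows fire in total? Answer: 5 times, when the records for minutes 03/05/07/09/11 arrive

Question 5: What is the window range of each firing? Answer: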

+I[2022-04-21T23:52, 2022-04-22T00:02, 2]

+I[2022-04-21T23:54, 2022-04-22T00:04, 4]

+I[2022-04-21T23:56, 2022-04-22T00:06, 6]

+I[2022-04-21T23:58, 2022-04-22T00:08, 8]

+I[2022-04-22T00:00, 2022-04-22T00:10, 11]

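Question 6: What is the count of the 0 - 10 minute window? Answer: 11, namely the records at 00/01/02/03/04/05/06/07/08/09/09:30 (you can use the collect function to output all the timestamps)

Question 7: When does a window fire for the first time? Answer: when the minute-03 record arrives

Question 8: How many times do windows fire in total? Answer: 5 times, when the records for minutes 03/05/07/09/11 arrive

Question 9: What is the window range of each firing? Answer: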

+I[2022-04-22T00:00, 2022-04-22T00:02, 2]

+I[2022-04-22T00:00, 2022-04-22T00:04, 4]

+I[2022-04-22T00:00, 2022-04-22T00:06, 6]

+I[2022-04-22T00:00, 2022-04-22T00:08, 8]

+I[2022-04-22T00:00, 2022-04-22T00:10, 11]

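Question 10: What is the count of the 0 - 10 minute window? Answer: 11

Question 11: When does the window fire? Answer: once when the window ends, and then once per late record between the window end and the end of the allowed lateness

Question 12: How many times does the window fire? Answer: twice, with this output:

2022-04-22 00:00:00 - 2022-04-22 00:00:00 : 2, element : [2022-04-22 00:00:00, 2022-04-22 00:09:30]

2022-04-22 00:00:00 - 2022-04-22 00:00:00 : 3, element : [2022-04-22 00:00:00, 2022-04-22 00:09:30, 2022-04-22 00:09:31]

(Both time fields read 00:00:00, which is what code that formats window.getStart for both values prints; the window itself runs from 00:00:00 to 00:10:00.)

Question 13: What are the window's count values? Answer: 2, 3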

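Two questions to think about:

Why do HOP windows and CUMULATE windows behave differently?

How do windows in the Flink SQL API differ from windows in the Stream API?

Note: feel free to discuss in the comments

For the complete Stream API code, see WhenWindowFire.java in the flink-rookie repository on GitHub

For the complete SQL API code, see kafka_to_window_test.sql in the sqlSubmit repository on GitHub

You are welcome to follow the Flink菜鸟 official account, which posts articles about Flink (development techniques) from time to time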
