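Some readers followed my earlier post on writing to HBase with Flink SQL, tried it themselves, and may not have gotten the results they expected.

I also spent quite a bit of time getting this to work, so here is a short write-up of the two problems I ran into.

1. HBase table parameters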

    'connector.zookeeper.quorum' = 'venn:2181'
    'connector.version' = '2.1.4'

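We run several HBase clusters. I had mistyped the ZooKeeper address, so the job connected to a different cluster. The program still ran normally, but no matter what I tried, no data showed up in HBase. Only after slowly digging through taskmanager.log did I see that the address was wrong: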

    2020-04-28 10:22:51,223 INFO org.apache.flink.addons.hbase.HBaseUpsertSinkFunction - start open ...
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:host.name=venn
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:java.version=1.8.0_111
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:java.vendor=Oracle Corporation
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:java.home=/opt/jdk1.8.0_111/jre
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:java.class.path=:flinkDemo-1.0.jar:lib/elasticsearch-7.3.2.jar:lib/ ... (jars)
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:java.library.path=:/opt/hadoop2.7/lib/native:/opt/hadoop2.7/lib/native:/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:java.io.tmpdir=/tmp
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:java.compiler=<NA>
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:os.name=Linux
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:os.arch=amd64
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:os.version=3.10.0-957.el7.x86_64
    2020-04-28 10:22:51,432 INFO org.apache.zookeeper.ZooKeeper - Client environment:user.name=venn
    2020-04-28 10:22:51,433 INFO org.apache.zookeeper.ZooKeeper - Client environment:user.home=/home/venn
    2020-04-28 10:22:51,433 INFO org.apache.zookeeper.ZooKeeper - Client environment:user.dir=/opt/hadoop/hadoop/tmp/nm-local-dir/usercache/venn/appcache/application_1587014675619_0013/container_1587014675619_0013_01_000002
    2020-04-28 10:22:51,434 INFO org.apache.zookeeper.ZooKeeper - Initiating client connection, connectString=venn:2181 sessionTimeout=90000 watcher=org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient$$Lambda$137/809205951@23ceed6e
    2020-04-28 10:22:51,463 WARN org.apache.zookeeper.ClientCnxn - SASL configuration failed: javax.security.auth.login.LoginException: No JAAS configuration section named 'Client' was found in specified JAAS configuration file: '/opt/hadoop/hadoop/tmp/nm-local-dir/usercache/venn/appcache/application_1587014675619_0013/jaas-876377732957956778.conf'. Will continue connection to Zookeeper server without SASL authentication, if Zookeeper server allows it.
    2020-04-28 10:22:51,464 INFO org.apache.zookeeper.ClientCnxn - Opening socket connection to server venn/192.168.229.128:2181
    2020-04-28 10:22:51,465 INFO org.apache.zookeeper.ClientCnxn - Socket connection established to venn/192.168.229.128:2181, initiating session
    2020-04-28 10:22:51,469 INFO org.apache.zookeeper.ClientCnxn - Session establishment complete on server venn/192.168.229.128:2181, sessionid = 0x10000017be40018, negotiated timeout = 40000
    2020-04-28 10:22:51,693 INFO org.apache.flink.addons.hbase.HBaseUpsertSinkFunction - end open.
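Because a wrong quorum does not make the job fail, it can help to check the log mechanically rather than by eye. The following is a minimal sketch, not part of the original setup: it pulls every `connectString=` value out of the text of taskmanager.log and reports the ones that do not match the quorum you meant to use. The function names `find_connect_strings` and `unexpected_quorums` are made up for this illustration, and the only thing relied on is the `Initiating client connection, connectString=...` line shown in the log above.

```python
import re

# The ZooKeeper client logs this line once per session it opens.
CONNECT_RE = re.compile(r"Initiating client connection, connectString=(\S+)")


def find_connect_strings(log_text):
    """Return every ZooKeeper connectString found in the log text, in order."""
    return CONNECT_RE.findall(log_text)


def unexpected_quorums(log_text, expected_quorum):
    """Return the logged connect strings whose host set differs from the expected one.

    Hosts are compared as sets, so 'a:2181,b:2181' and 'b:2181,a:2181' count as equal.
    """
    expected = set(expected_quorum.split(","))
    return [
        connect
        for connect in find_connect_strings(log_text)
        if set(connect.split(",")) != expected
    ]
```

Reading the file with `open(path).read()` and passing the quorum from the table definition gives an empty list when the job connected where you intended, and the offending addresses otherwise.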

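The other parameter is the HBase version that flink-hbase supports. At the moment flink-hbase supports only 1.4.3, presumably because the project has run its full test suite only against HBase 1.4.3 (later releases will probably support more HBase versions). That does not mean other HBase versions cannot be used; they may just be less safe, so test against your own workload to decide whether the setup is suitable for your project.

So far I have tested HBase 1.1.2 and HBase 2.1.4, and both work. (Our use case is simple: this part just writes straight into HBase, the data is later accessed by other means such as UDFs, and the data volume is not large.)

2. hbase-client version

With flink-hbase 1.10, different hbase-client versions require different jars to be added.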

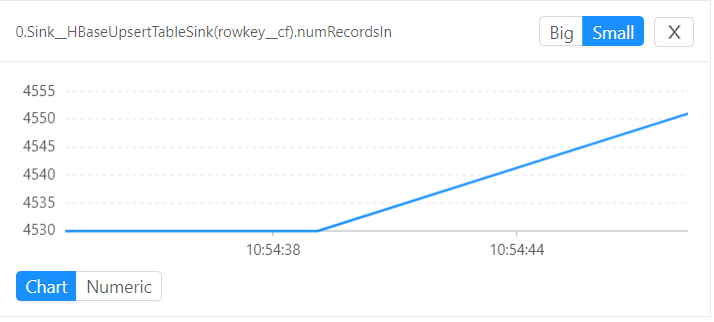

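I have run into the following before: when running SQL that writes to HBase on YARN, the data appeared to be written normally and the metrics showed records reaching HBaseUpsertTableSink, but `count` on the table in the HBase shell returned nothing.

Every time I hit this, the cause was an incomplete set of jars.

The program runs without errors and data appears to be written (you can see it in the metrics), yet nothing lands in HBase, and no error shows up in the taskmanager log right away (which probably makes many people wonder whether their HBase version is supported at all).

If a jar the job cannot start without is missing, the task fails and the error is printed to the log immediately. If a jar needed to talk to HBase is missing, the error shows up much later (how much later depends mainly on the timeout).

Error that fails the task (visible in the taskmanager log immediately):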

    2020-04-28 09:54:43,888 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph - Source: KafkaTableSource(user_id, item_id, category_id, behavior, ts) -> SourceConversion(table=[default_catalog.default_database.user_log, source: [KafkaTableSource(user_id, item_id, category_id, behavior, ts)]], fields=[user_id, item_id, category_id, behavior, ts]) -> Calc(select=[user_id, (item_id ROW category_id ROW behavior ROW ts) AS cf]) -> SinkConversionToTuple2 -> Sink: HBaseUpsertTableSink(rowkey, cf) (1/1) (5d8a2430e5e0db10669d9db48e14a552) switched from RUNNING to FAILED.
    java.lang.RuntimeException: Cannot create connection to HBase.
        at org.apache.flink.addons.hbase.HBaseUpsertSinkFunction.open(HBaseUpsertSinkFunction.java:154)
        at org.apache.flink.api.common.functions.util.FunctionUtils.openFunction(FunctionUtils.java:36)
        at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.open(AbstractUdfStreamOperator.java:102)
        at org.apache.flink.streaming.api.operators.StreamSink.open(StreamSink.java:48)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.initializeStateAndOpen(StreamTask.java:1007)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.lambda$beforeInvoke$0(StreamTask.java:454)
        at org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$SynchronizedStreamTaskActionExecutor.runThrowing(StreamTaskActionExecutor.java:94)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.beforeInvoke(StreamTask.java:449)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:461)
        at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:707)
        at org.apache.flink.runtime.taskmanager.Task.run(Task.java:532)
        at java.lang.Thread.run(Thread.java:745)
    Caused by: java.io.IOException: java.lang.reflect.InvocationTargetException
        at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:222)
        at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:115)
        at org.apache.flink.addons.hbase.HBaseUpsertSinkFunction.open(HBaseUpsertSinkFunction.java:125)
        ... 11 more
    Caused by: java.lang.reflect.InvocationTargetException
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:220)
        ... 13 more
    Caused by: java.lang.NoClassDefFoundError: org/apache/hbase/thirdparty/com/google/protobuf/RpcController
        at java.lang.ClassLoader.defineClass1(Native Method)
        at java.lang.ClassLoader.defineClass(ClassLoader.java:763)
        at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
        at java.net.URLClassLoader.defineClass(URLClassLoader.java:467)
        at java.net.URLClassLoader.access$100(URLClassLoader.java:73)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:368)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:362)
        at java.security.AccessController.doPrivileged(Native Method)
        at java.net.URLClassLoader.findClass(URLClassLoader.java:361)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        at org.apache.hadoop.hbase.client.ConnectionImplementation.<init>(ConnectionImplementation.java:262)
        ... 18 more
    Caused by: java.lang.ClassNotFoundException: org.apache.hbase.thirdparty.com.google.protobuf.RpcController
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 31 more

Error caused by a missing jar needed to connect to HBase (shows up in the taskmanager log only after some time):

    2020-04-28 10:12:46,605 ERROR org.apache.hadoop.hbase.client.AsyncProcess - Failed to get region location
    org.apache.hadoop.hbase.DoNotRetryIOException: java.lang.NoClassDefFoundError: org/apache/htrace/core/HTraceConfiguration
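Since the delayed error is easy to miss in a long log, a small script can list the classes the JVM could not find. This is a minimal sketch under the assumption that the log contains standard `NoClassDefFoundError: ...` or `ClassNotFoundException: ...` messages like the two shown above; the name `missing_classes` is invented for this example.

```python
import re

# Both exception types are followed by the class name; NoClassDefFoundError
# uses slashes, ClassNotFoundException uses dots.
MISSING_CLASS_RE = re.compile(
    r"(?:NoClassDefFoundError|ClassNotFoundException): ([\w/.$]+)"
)


def missing_classes(log_text):
    """Return missing class names in dotted form, de-duplicated, in first-seen order."""
    seen = []
    for raw in MISSING_CLASS_RE.findall(log_text):
        # Normalise slashes to dots and drop a trailing sentence period, if any.
        dotted = raw.replace("/", ".").rstrip(".")
        if dotted not in seen:
            seen.append(dotted)
    return seen
```

Run against the text of taskmanager.log, this returns one entry per distinct missing class, which is usually enough to tell which jar still has to be added.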

For the two HBase versions I currently use, 1.1.2 and 2.1.4:

To connect to HBase 1.1.2 with hbase-client 1.1.2, add the following jars to Flink's lib directory:

hbase-client-1.1.2.jar

hbase-common-1.1.2.jar

hbase-protocol-1.1.2.jar

To connect to HBase 2.1.4 with hbase-client 1.1.2, add the following jars to Flink's lib directory:

hbase-client-1.1.2.jar

hbase-common-1.1.2.jar

hbase-protocol-1.1.2.jar

To connect to HBase 2.1.4 with hbase-client 2.1.4, add the following jars to Flink's lib directory:

flink-hbase_2.11-1.10.0.jar

hbase-client-2.1.4.jar

hbase-common-2.1.4.jar

hbase-shaded-protobuf-2.1.0.jar

hbase-protocol-shaded-2.1.4.jar

htrace-core4-4.2.0-incubating.jar

hbase-protocol-2.1.4.jar

metrics-core-3.2.1.jar

hbase-shaded-netty-2.1.0.jar

hbase-shaded-miscellaneous-2.1.0.jar

All three combinations above have been tested and write data to HBase correctly.
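The three jar lists can be turned into a quick pre-flight check before submitting a job. The sketch below is only an illustration: the jar names are copied from the lists in this post, while the dictionary `REQUIRED_JARS` and the function `missing_jars` are names made up here. It takes the file names found in Flink's lib directory and returns the jars from the chosen combination that are not there.

```python
# Keys are (HBase server version, hbase-client version); values are the jar
# lists from this post, copied as-is.
REQUIRED_JARS = {
    ("1.1.2", "1.1.2"): [
        "hbase-client-1.1.2.jar",
        "hbase-common-1.1.2.jar",
        "hbase-protocol-1.1.2.jar",
    ],
    ("2.1.4", "1.1.2"): [
        "hbase-client-1.1.2.jar",
        "hbase-common-1.1.2.jar",
        "hbase-protocol-1.1.2.jar",
    ],
    ("2.1.4", "2.1.4"): [
        "flink-hbase_2.11-1.10.0.jar",
        "hbase-client-2.1.4.jar",
        "hbase-common-2.1.4.jar",
        "hbase-shaded-protobuf-2.1.0.jar",
        "hbase-protocol-shaded-2.1.4.jar",
        "htrace-core4-4.2.0-incubating.jar",
        "hbase-protocol-2.1.4.jar",
        "metrics-core-3.2.1.jar",
        "hbase-shaded-netty-2.1.0.jar",
        "hbase-shaded-miscellaneous-2.1.0.jar",
    ],
}


def missing_jars(present_names, hbase_version, client_version):
    """Return the required jars that are absent from the given file names.

    Raises KeyError for a combination that is not one of the three tested ones.
    """
    present = set(present_names)
    required = REQUIRED_JARS[(hbase_version, client_version)]
    return [jar for jar in required if jar not in present]
```

For example, `missing_jars(os.listdir(lib_dir), "2.1.4", "2.1.4")`, where `lib_dir` is wherever your Flink lib directory lives, returns an empty list when everything from the third combination is in place.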

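For other hbase-client versions, please test and add jars yourself: look at the errors in taskmanager.log and add the corresponding jars.

You are welcome to follow the Flink菜鸟 WeChat official account, which posts Flink (development) articles from time to time.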
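Once the log names a missing class, the remaining step is finding which jar ships it. One way, sketched here with only the Python standard library, is to open every jar in a directory (for instance the lib directory of your HBase installation, which is an assumption about where you keep candidate jars) and look for the matching `.class` entry. The names `class_entry` and `jars_containing` are hypothetical helpers for this example.

```python
import os
import zipfile


def class_entry(class_name):
    """Convert a dotted class name into the path it has inside a jar."""
    return class_name.replace(".", "/") + ".class"


def jars_containing(class_name, jar_dir):
    """Return the names of the jars in jar_dir that contain the given class."""
    entry = class_entry(class_name)
    hits = []
    for name in sorted(os.listdir(jar_dir)):
        if not name.endswith(".jar"):
            continue
        try:
            # A jar is a zip archive, so zipfile can list its entries.
            with zipfile.ZipFile(os.path.join(jar_dir, name)) as jar:
                if entry in jar.namelist():
                    hits.append(name)
        except zipfile.BadZipFile:
            # Skip files that are named .jar but are not valid archives.
            continue
    return hits
```

Calling `jars_containing("org.apache.htrace.core.HTraceConfiguration", jar_dir)` lists the candidate jars to copy into Flink's lib directory; if several jars match, pick the one whose version fits your hbase-client.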
