Python源码解析:内存管理(DEBUG模式)的几个理解点

写了这多贴子,顺带写点自己的感想吧!其实很多贴子在写的时候很踌躇,比如这次打算写的python内存管理,因为内存管理都比较琐碎,在软件架构里,也是很容易出问题的地方,涉及的细节内容非常多,要写好写明白,得从各个方面花功夫才行。所以我一向比较敬重那些好又全的贴子,毕竟人家下了功夫。

因为时间关系,本贴比较粗略,只是几个简单的点,没能做到面,更不用提面面俱到了,建议配合参考资料看,比如陈儒所著的《Python源码解析》就比较细。嗯,还有,感谢Python开发者们,源码注释比一般的开源库都要全(甚至于有些啰嗦,不过相信很多读者尤其是刚开始学习源码的读者都会喜欢)。

理解的重点应该在后面的源码部分,包括arena的分配,pool的分配,以及内存的回收。可惜CSDN的源码排版,汗一个....

Debug(开发)模式

如果定义了_DEBUG,那么,在pyconfig.h中,就会链式的定义 Py_DEBUG =》PYMALLOC_DEBUG

#ifdef _DEBUG

# define Py_DEBUG

#endif

在python.h中,就会定义PYMALLOC_DEBUG

#if defined(Py_DEBUG) && defined(WITH_PYMALLOC) && !defined(PYMALLOC_DEBUG)

#define PYMALLOC_DEBUG

#endif

此时,内存管理使用的就是debug模式下的管理机制,这套机制在正常的管理之下,还会记录更多的信息以便调试。

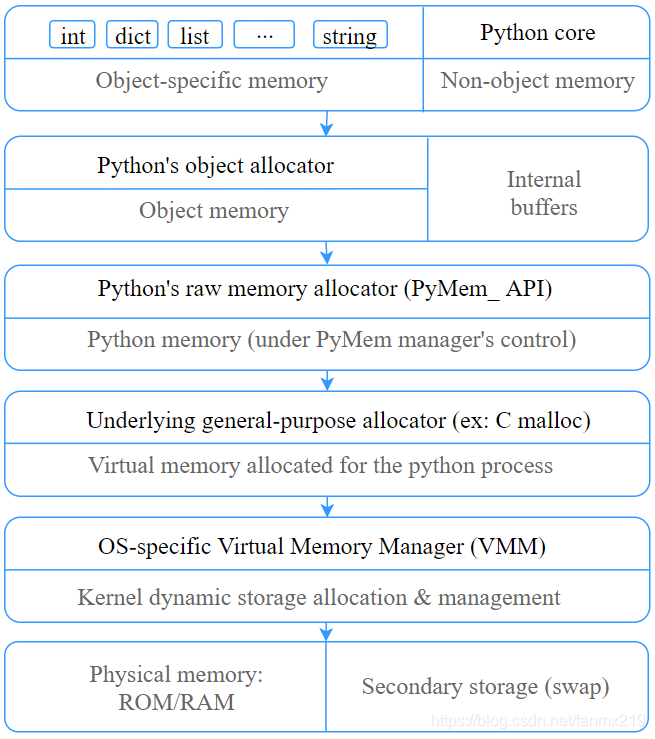

Python内存管理的层次模式

Python采用一种层次结构来对内存进行管理,如下图所示,

其中,

第0层是操作系统层:底层函数就是c运行时提供的malloc, free函数

第1层:Python的raw memory接口,这一层主要是针对不同的操作系统函数进行包装,以便由上一层统一调用。这一层主要是_PyMem_Raw _PyMem _PyObject函数族,其定义如下,

#ifdef Py_DEBUG

static PyMemAllocatorEx _PyMem_Raw = PYDBGRAW_ALLOC;

static PyMemAllocatorEx _PyMem = PYDBGMEM_ALLOC;

static PyMemAllocatorEx _PyObject = PYDBGOBJ_ALLOC;

#else

static PyMemAllocatorEx _PyMem_Raw = PYRAW_ALLOC;

static PyMemAllocatorEx _PyMem = PYMEM_ALLOC;

static PyMemAllocatorEx _PyObject = PYOBJ_ALLOC;

#endif其Py_DEBUG模式对应的定义如下,

#define PYDBGRAW_ALLOC \

{&_PyMem_Debug.raw, _PyMem_DebugRawMalloc, _PyMem_DebugRawCalloc, _PyMem_DebugRawRealloc, _PyMem_DebugRawFree}

#define PYDBGMEM_ALLOC \

{&_PyMem_Debug.mem, _PyMem_DebugMalloc, _PyMem_DebugCalloc, _PyMem_DebugRealloc, _PyMem_DebugFree}

#define PYDBGOBJ_ALLOC \

{&_PyMem_Debug.obj, _PyMem_DebugMalloc, _PyMem_DebugCalloc, _PyMem_DebugRealloc, _PyMem_DebugFree}而对应于非debug模式,定义如下

#define MALLOC_ALLOC {NULL, _PyMem_RawMalloc, _PyMem_RawCalloc, _PyMem_RawRealloc, _PyMem_RawFree}

#ifdef WITH_PYMALLOC

# define PYMALLOC_ALLOC {NULL, _PyObject_Malloc, _PyObject_Calloc, _PyObject_Realloc, _PyObject_Free}

#endif

#define PYRAW_ALLOC MALLOC_ALLOC

#ifdef WITH_PYMALLOC

# define PYOBJ_ALLOC PYMALLOC_ALLOC

#else

# define PYOBJ_ALLOC MALLOC_ALLOC

#endif

#define PYMEM_ALLOC PYOBJ_ALLOC这里宏WITH_PYMALLOC表示使用Python的小块内存分配机制(small-block memory-allocator),后面还会讲到

第2层:抽象层的内存管理,该层与操作系统无关

第3层:对象缓冲池

以_PyMem函数族为例,在Py_DEBUG模式下, _PyMem = PYDBGMEM_ALLOC,

void *

PyMem_Malloc(size_t size)

{

/* see PyMem_RawMalloc() */

if (size > (size_t)PY_SSIZE_T_MAX)

return NULL;

return _PyMem.malloc(_PyMem.ctx, size);

}

void *

PyMem_Calloc(size_t nelem, size_t elsize)

{

/* see PyMem_RawMalloc() */

if (elsize != 0 && nelem > (size_t)PY_SSIZE_T_MAX / elsize)

return NULL;

return _PyMem.calloc(_PyMem.ctx, nelem, elsize);

}

void *

PyMem_Realloc(void *ptr, size_t new_size)

{

/* see PyMem_RawMalloc() */

if (new_size > (size_t)PY_SSIZE_T_MAX)

return NULL;

return _PyMem.realloc(_PyMem.ctx, ptr, new_size);

}

void

PyMem_Free(void *ptr)

{

_PyMem.free(_PyMem.ctx, ptr);

}所以 PyMem_Malloc,PyMem_Realloc,PyMem_Free通过_PyMem调用的实际函数是 _PyMem_DebugMalloc, _PyMem_DebugCalloc, _PyMem_DebugRealloc, _PyMem_DebugFree。也就是实际调用了

static void *

_PyMem_DebugMalloc(void *ctx, size_t nbytes)

{

_PyMem_DebugCheckGIL();

return _PyMem_DebugRawMalloc(ctx, nbytes);

}

static void *

_PyMem_DebugCalloc(void *ctx, size_t nelem, size_t elsize)

{

_PyMem_DebugCheckGIL();

return _PyMem_DebugRawCalloc(ctx, nelem, elsize);

}

static void

_PyMem_DebugFree(void *ctx, void *ptr)

{

_PyMem_DebugCheckGIL();

_PyMem_DebugRawFree(ctx, ptr);

}

static void *

_PyMem_DebugRealloc(void *ctx, void *ptr, size_t nbytes)

{

_PyMem_DebugCheckGIL();

return _PyMem_DebugRawRealloc(ctx, ptr, nbytes);

}如果我们从源头开始追溯的话,发现这个调用过程非常地绕,如下,

PyObject_MALLOC ==>>>

PyObject_Malloc ==>>>

_PyObject.malloc(_PyObject.ctx, size); (注意:_PyObject = PYDBGOBJ_ALLOC,所以调用的是_PyObject对应的PYDBGOBJ_ALLOC / _PyMem_DebugMalloc) ==>>>

_PyMem_DebugMalloc ==>>>

_PyMem_DebugRawMalloc ==>>>

_PyMem_DebugRawAlloc(0, ctx, nbytes);==>>>

p = (uint8_t *)api->alloc.malloc(api->alloc.ctx, total); (这里调用的是_PyMem_Debug.obj所定义的PYOBJ_ALLOC / PYMALLOC_ALLOC / _PyObject_Malloc)==>>>

_PyObject_Malloc==>>>

pymalloc_alloc (or 如否,则采用PyMem_RawMalloc)

这里,_PyMem_Debug的定义是这样的

typedef struct {

/* user context passed as the first argument to the 4 functions */

void *ctx;

/* allocate a memory block */

void* (*malloc) (void *ctx, size_t size);

/* allocate a memory block initialized by zeros */

void* (*calloc) (void *ctx, size_t nelem, size_t elsize);

/* allocate or resize a memory block */

void* (*realloc) (void *ctx, void *ptr, size_t new_size);

/* release a memory block */

void (*free) (void *ctx, void *ptr);

} PyMemAllocatorEx;

typedef struct {

/* We tag each block with an API ID in order to tag API violations */

char api_id;

PyMemAllocatorEx alloc;

} debug_alloc_api_t;

static struct {

debug_alloc_api_t raw;

debug_alloc_api_t mem;

debug_alloc_api_t obj;

} _PyMem_Debug = {

{'r', PYRAW_ALLOC},

{'m', PYMEM_ALLOC},

{'o', PYOBJ_ALLOC}

};PyMem_RawMalloc的调用路线如下,

PyMem_RawMalloc ==>>>

_PyMem_Raw.malloc(_PyMem_Raw.ctx, size) ==>>>

_PyMem_DebugRawMalloc ==>>>

_PyMem_DebugRawAlloc ==>>>

p = (uint8_t *)api->alloc.malloc(api->alloc.ctx, total); ==>>>

_PyMem_RawMalloc(void *ctx, size_t size) ==>>>

return malloc(size);

Python 内存池pool的管理--小块内存的管理

Python对大块内存,是直接通过PyMem_RawMalloc由malloc系统分配,而对于需求小于SMALL_REQUEST_THRESHOLD (Python3.7默认是512个字节)的内存块,由Python统一管理,在默认情况下,其层次结构是:

block ==>>> pool ==>>> area ==>>> use memory

1 arena = 256KB

1 arena = 64 pool,

1 pool = 4KB

1 pool = multiple number of blocks

一个pool中到底有多少个block是不确定的,Python会根据实际情况,比如分页对齐等调整,使得可分配的空间为某个block 大小的整数倍,

block结构如下

(ref. objmalloc.c):

* Request in bytes Size of allocated block Size class idx

* ----------------------------------------------------------------

* 1-8 8 0

* 9-16 16 1

* 17-24 24 2

* 25-32 32 3

* 33-40 40 4

* 41-48 48 5

* 49-56 56 6

* 57-64 64 7

* 65-72 72 8

* ... ... ...

* 497-504 504 62

* 505-512 512 63

*

* 0, SMALL_REQUEST_THRESHOLD + 1 and up: routed to the underlying

* allocator.

*/

Pool

Pool的结构

/* When you say memory, my mind reasons in terms of (pointers to) blocks */

typedef uint8_t block;

/* Pool for small blocks. */

struct pool_header {

union { block *_padding;

uint count; } ref; /* number of allocated blocks */

block *freeblock; /* pool's free list head */

struct pool_header *nextpool; /* next pool of this size class */

struct pool_header *prevpool; /* previous pool "" */

uint arenaindex; /* index into arenas of base adr */

uint szidx; /* block size class index */

uint nextoffset; /* bytes to virgin block */

uint maxnextoffset; /* largest valid nextoffset */

};

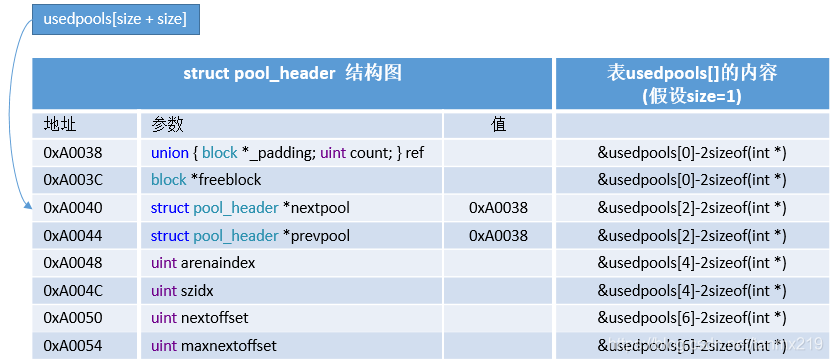

typedef struct pool_header *poolp;Pool 表:usedpools[ ]

一个记录所有正在使用中的双向循环List表的头地址的表,是一个指针表。

对index= i, usedpools[i+i] 记录的是一个class idx = i 的List的头部地址,该list记录了所有的内存块大小"size class idx"= i 的缓冲池,因此,

usedpools[0] 对应 blocks of size 8,

usedpools[2] 对应 blocks of size 16,

依次类推, index 2*i 对应 blocks of size (i+1)<<ALIGNMENT_SHIFT. (参考:INDEX2SIZE宏)

注意这里ALIGNMENT_SHIFT=3。

一些需要理解的宏定义,

#define ALIGNMENT 8 /* must be 2^N */

#define ALIGNMENT_SHIFT 3

#define INDEX2SIZE(I) (((uint)(I) + 1) << ALIGNMENT_SHIFT)

#define SMALL_REQUEST_THRESHOLD 512 // 允许的“小块“内存最大值,超过该值就不属于小内存池管理。

#define NB_SMALL_SIZE_CLASSES (SMALL_REQUEST_THRESHOLD / ALIGNMENT) // = 512/8 = 64

#define SYSTEM_PAGE_SIZE (4 * 1024) // 每个pool的最大内存是一页,4KB

#define SYSTEM_PAGE_SIZE_MASK (SYSTEM_PAGE_SIZE - 1)

#define ARENA_SIZE (256 << 10) // 256KB, 当前进程的总“小块“内存

#define POOL_SIZE SYSTEM_PAGE_SIZE /* must be 2^N */

#define POOL_SIZE_MASK SYSTEM_PAGE_SIZE_MASK

#define POOL_OVERHEAD _Py_SIZE_ROUND_UP(sizeof(struct pool_header), ALIGNMENT)其中,POOL_SIZE 的大小和SYSTEM_PAGE_SIZE的大小是一样的,都是4KB,

POOL_OVERHEAD是指pool_header结构的大小,

usedpools[ ] 共计64(i.e., NB_SMALL_SIZE_CLASSES)个表项,第2个表项对应一个class index, usedpools[ ]初始化的代码如下,

#define PTA(x) ((poolp )((uint8_t *)&(usedpools[2*(x)]) - 2*sizeof(block *)))

#define PT(x) PTA(x), PTA(x)

static poolp usedpools[2 * ((NB_SMALL_SIZE_CLASSES + 7) / 8) * 8] = {

PT(0), PT(1), PT(2), PT(3), PT(4), PT(5), PT(6), PT(7)

, PT(8), PT(9), PT(10), PT(11), PT(12), PT(13), PT(14), PT(15)

, PT(16), PT(17), PT(18), PT(19), PT(20), PT(21), PT(22), PT(23)

, PT(24), PT(25), PT(26), PT(27), PT(28), PT(29), PT(30), PT(31)

, PT(32), PT(33), PT(34), PT(35), PT(36), PT(37), PT(38), PT(39)

, PT(40), PT(41), PT(42), PT(43), PT(44), PT(45), PT(46), PT(47)

, PT(48), PT(49), PT(50), PT(51), PT(52), PT(53), PT(54), PT(55)

, PT(56), PT(57), PT(58), PT(59), PT(60), PT(61), PT(62), PT(63)

初始化后的结构如下

初始化解说:

表usedpools[]是一个指针( *poolp )表,这个表采用了一种类似卷积操作的空间结构(会出现很多空间重叠部分)来初始化参数nextpool和prevpool,其中存放的是struct pool_header的所有 首地址(在示例图中就是0xA0038),

该首地址 = &usedpools[size + size] - 2sizeof(int*)

所以usedpools[size + size] 的内容是其对应结构struct pool_header的*nextpool,而不是该结构的头部,这样一来,其值nextpool和prevpool就被初始化成同一个值:即usedpools[size + size] 项的内容(注意该内容是重复2次的),如图所示;此时,ref, freeblock, areanaindex等参数都是无效的,都堆叠在usedpools[size + size]附近的空间。

这样,程序中 pool = usedpools[size + size]时,pool取的是值0xA0038(而不是usedpools[size + size]的地址0xA0040),所以此时pool是指向结构struct pool_header的首地址ref,而不是*nextpool, 故而后面会用if (pool != pool->nextpool)来判断该pool是否已经被使用过,被使用过的情况下就会有 (pool != pool->nextpool)。

重点:表usedpools[]是一个指针( *poolp )表,其中重复的2项对应结构体pool_header中的nextpool和prevpool,至于结构的其他参数,在使用前都是无效的,所以内容是谁,指向哪里在使用前暂时都不用管。

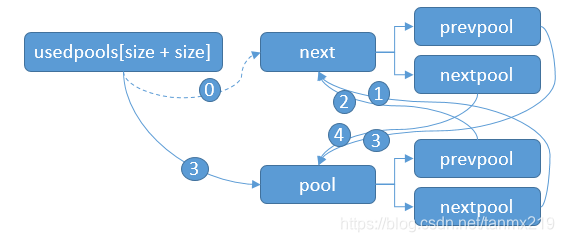

使用usedpools -- init_pool

在usedpools[i+i]初次使用时,初始化刚(从arena中)分配的pool的源码是这样的,

init_pool:

/* Frontlink to used pools. */

next = usedpools[size + size]; /* == prev */

pool->nextpool = next; (1)

pool->prevpool = next; (2)

next->nextpool = pool; (3)

next->prevpool = pool; (4)

pool->ref.count = 1;

这里要注意的是第(3)步隐含着这样一个条件:usedpools[i + i]指向新的内存空间pool,因为此时next->nextpool的地址就是usedpools[i + i]的地址,其值(该地址中的内容)为pool也就相当于usedpools[i + i]的值为pool,所以自然也就是usedpools[i+i]指针的改变。

Arena

Arena的结构

struct arena_object {

uintptr_t address;

block* pool_address;

uint nfreepools;

uint ntotalpools;

struct pool_header* freepools;

struct arena_object* nextarena;

struct arena_object* prevarena;

};

源码(含注释)

最后给出Python3.7内存管理的主体函数源码,理解了这些源码,是理解Python内存管理模式的关键,其中注释很详细,我添加了部分说明

new_arena

/* Allocate a new arena. If we run out of memory, return NULL. Else

* allocate a new arena, and return the address of an arena_object

* describing the new arena. It's expected that the caller will set

* `usable_arenas` to the return value.

*/

static struct arena_object*

new_arena(void)

{

struct arena_object* arenaobj;

uint excess; /* number of bytes above pool alignment */

void *address;

static int debug_stats = -1;

if (debug_stats == -1) {

const char *opt = Py_GETENV("PYTHONMALLOCSTATS");

debug_stats = (opt != NULL && *opt != '\0');

}

if (debug_stats)

_PyObject_DebugMallocStats(stderr);

if (unused_arena_objects == NULL) {

// 第一次进入程序,开始分配内存(此前还没有分配过内存)

uint i;

uint numarenas;

size_t nbytes;

/* Double the number of arena objects on each allocation.

* Note that it's possible for `numarenas` to overflow.

*/

numarenas = maxarenas ? maxarenas << 1 : INITIAL_ARENA_OBJECTS;

if (numarenas <= maxarenas)

return NULL; /* overflow */

#if SIZEOF_SIZE_T <= SIZEOF_INT

if (numarenas > SIZE_MAX / sizeof(*arenas))

return NULL; /* overflow */

#endif

nbytes = numarenas * sizeof(*arenas);

arenaobj = (struct arena_object *)PyMem_RawRealloc(arenas, nbytes);

if (arenaobj == NULL)

return NULL;

arenas = arenaobj;

/* We might need to fix pointers that were copied. However,

* new_arena only gets called when all the pages in the

* previous arenas are full. Thus, there are *no* pointers

* into the old array. Thus, we don't have to worry about

* invalid pointers. Just to be sure, some asserts:

*/

assert(usable_arenas == NULL);

assert(unused_arena_objects == NULL);

/* Put the new arenas on the unused_arena_objects list. */

for (i = maxarenas; i < numarenas; ++i) {

arenas[i].address = 0; /* mark as unassociated */

arenas[i].nextarena = i < numarenas - 1 ?

&arenas[i+1] : NULL;

}

/* Update globals. */

unused_arena_objects = &arenas[maxarenas];

maxarenas = numarenas;

}

/* Take the next available arena object off the head of the list. */

assert(unused_arena_objects != NULL);

arenaobj = unused_arena_objects; //(从arenas[]表中拉1个)当前要使用的arena的结构

unused_arena_objects = arenaobj->nextarena; // 指向空arena的指针向后移一个结构单位

assert(arenaobj->address == 0);

address = _PyObject_Arena.alloc(_PyObject_Arena.ctx, ARENA_SIZE);

// 分配当前arena的空间

if (address == NULL) {

/* The allocation failed: return NULL after putting the

* arenaobj back.

*/

arenaobj->nextarena = unused_arena_objects;

unused_arena_objects = arenaobj;

return NULL;

}

arenaobj->address = (uintptr_t)address; // 当前要使用的arena

++narenas_currently_allocated;

++ntimes_arena_allocated;

if (narenas_currently_allocated > narenas_highwater)

narenas_highwater = narenas_currently_allocated;

arenaobj->freepools = NULL; //这里设置为NULL,为什么?注意看后面的对应解释

/* pool_address <- first pool-aligned address in the arena

nfreepools <- number of whole pools that fit after alignment */

arenaobj->pool_address = (block*)arenaobj->address;

arenaobj->nfreepools = ARENA_SIZE / POOL_SIZE;

assert(POOL_SIZE * arenaobj->nfreepools == ARENA_SIZE);

excess = (uint)(arenaobj->address & POOL_SIZE_MASK);

if (excess != 0) {

--arenaobj->nfreepools;

arenaobj->pool_address += POOL_SIZE - excess;

}

arenaobj->ntotalpools = arenaobj->nfreepools;

return arenaobj;

}pymalloc_alloc

/* pymalloc allocator

The basic blocks are ordered by decreasing execution frequency,

which minimizes the number of jumps in the most common cases,

improves branching prediction and instruction scheduling (small

block allocations typically result in a couple of instructions).

Unless the optimizer reorders everything, being too smart...

Return 1 if pymalloc allocated memory and wrote the pointer into *ptr_p.

Return 0 if pymalloc failed to allocate the memory block: on bigger

requests, on error in the code below (as a last chance to serve the request)

or when the max memory limit has been reached. */

static int

pymalloc_alloc(void *ctx, void **ptr_p, size_t nbytes)

{

block *bp;

poolp pool;

poolp next;

uint size;

#ifdef WITH_VALGRIND

if (UNLIKELY(running_on_valgrind == -1)) {

running_on_valgrind = RUNNING_ON_VALGRIND;

}

if (UNLIKELY(running_on_valgrind)) {

return 0;

}

#endif

if (nbytes == 0) {

return 0;

}

if (nbytes > SMALL_REQUEST_THRESHOLD) {

return 0;

}

LOCK();

/*

* Most frequent paths first

*/

size = (uint)(nbytes - 1) >> ALIGNMENT_SHIFT;

pool = usedpools[size + size]; // 得到对应size class的首地址

if (pool != pool->nextpool) {

// 如果已经有使用过,就会进入本if语句

/*

* There is a used pool for this size class.

* Pick up the head block of its free list.

*/

++pool->ref.count; // 本pool中多了一个object, 使用计算 + 1

bp = pool->freeblock; // 上一次留下的可分配空间的首地址

assert(bp != NULL);

if ((pool->freeblock = *(block **)bp) != NULL) {

goto success; // 如果有可分配的自由空间给下一次分配,赋给freeblock就OK了

// 注意:这个空间应该是使用过之后再被释放出来的,

// 插入到了freeblock队列前端的空间

}

/*

* Reached the end of the free list, try to extend it.

*/

if (pool->nextoffset <= pool->maxnextoffset) {

// 如果还有足够的空间分配给下一个对象

/* There is room for another block. */

pool->freeblock = (block*)pool +

pool->nextoffset; // 就更新下一次可能要申请的首地址

pool->nextoffset += INDEX2SIZE(size);

*(block **)(pool->freeblock) = NULL;

// 分配未满的情况下,内存取自从未分配过的后面的空间部分,此时feeblock为NULL

goto success;

}

// Pool已经满了,从链表中移除

// 注意这里隐含了条件:pool->freeblock == NULL

// 该条件在pymalloc_free中要用到

/* Pool is full, unlink from used pools. */

next = pool->nextpool;

pool = pool->prevpool;

next->prevpool = pool;

pool->nextpool = next;

goto success;

}

/* There isn't a pool of the right size class immediately

* available: use a free pool.

*/

if (usable_arenas == NULL) {

/* No arena has a free pool: allocate a new arena. */

#ifdef WITH_MEMORY_LIMITS

if (narenas_currently_allocated >= MAX_ARENAS) {

goto failed;

}

#endif

usable_arenas = new_arena();

if (usable_arenas == NULL) {

goto failed;

}

usable_arenas->nextarena =

usable_arenas->prevarena = NULL;

// 切除与unused_arena_objects的联系

}

assert(usable_arenas->address != 0);

/* Try to get a cached free pool. */

pool = usable_arenas->freepools;

if (pool != NULL) {

/* Unlink from cached pools. */

usable_arenas->freepools = pool->nextpool;

/* This arena already had the smallest nfreepools

* value, so decreasing nfreepools doesn't change

* that, and we don't need to rearrange the

* usable_arenas list. However, if the arena has

* become wholly allocated, we need to remove its

* arena_object from usable_arenas.

*/

--usable_arenas->nfreepools;

if (usable_arenas->nfreepools == 0) {

/* Wholly allocated: remove. */

assert(usable_arenas->freepools == NULL);

assert(usable_arenas->nextarena == NULL ||

usable_arenas->nextarena->prevarena ==

usable_arenas);

usable_arenas = usable_arenas->nextarena;

if (usable_arenas != NULL) {

usable_arenas->prevarena = NULL;

assert(usable_arenas->address != 0);

}

}

else {

/* nfreepools > 0: it must be that freepools

* isn't NULL, or that we haven't yet carved

* off all the arena's pools for the first

* time.

*/

assert(usable_arenas->freepools != NULL ||

usable_arenas->pool_address <=

(block*)usable_arenas->address +

ARENA_SIZE - POOL_SIZE);

}

//初始化刚(从arena中)分配的pool

init_pool:

/* Frontlink to used pools. */

next = usedpools[size + size]; /* == prev */

pool->nextpool = next;

pool->prevpool = next;

next->nextpool = pool;

next->prevpool = pool;

pool->ref.count = 1;

if (pool->szidx == size) {

/* Luckily, this pool last contained blocks

* of the same size class, so its header

* and free list are already initialized.

*/

bp = pool->freeblock;

assert(bp != NULL);

pool->freeblock = *(block **)bp;

goto success;

}

/*

* Initialize the pool header, set up the free list to

* contain just the second block, and return the first

* block.

*/

pool->szidx = size;

size = INDEX2SIZE(size);

bp = (block *)pool + POOL_OVERHEAD; // 用来存储对象的首地址

pool->nextoffset = POOL_OVERHEAD + (size << 1);

pool->maxnextoffset = POOL_SIZE - size;

pool->freeblock = bp + size;

*(block **)(pool->freeblock) = NULL;

goto success;

}

/* Carve off a new pool. */

assert(usable_arenas->nfreepools > 0);

assert(usable_arenas->freepools == NULL);

pool = (poolp)usable_arenas->pool_address;

assert((block*)pool <= (block*)usable_arenas->address +

ARENA_SIZE - POOL_SIZE);

pool->arenaindex = (uint)(usable_arenas - arenas);

assert(&arenas[pool->arenaindex] == usable_arenas);

pool->szidx = DUMMY_SIZE_IDX;

usable_arenas->pool_address += POOL_SIZE;

--usable_arenas->nfreepools;

if (usable_arenas->nfreepools == 0) {

assert(usable_arenas->nextarena == NULL ||

usable_arenas->nextarena->prevarena ==

usable_arenas);

/* Unlink the arena: it is completely allocated. */

usable_arenas = usable_arenas->nextarena;

if (usable_arenas != NULL) {

usable_arenas->prevarena = NULL;

assert(usable_arenas->address != 0);

}

}

goto init_pool;

success:

UNLOCK();

assert(bp != NULL);

*ptr_p = (void *)bp;

return 1;

failed:

UNLOCK();

return 0;

}

pymalloc_free

/* Free a memory block allocated by pymalloc_alloc().

Return 1 if it was freed.

Return 0 if the block was not allocated by pymalloc_alloc(). */

static int

pymalloc_free(void *ctx, void *p)

{

poolp pool;

block *lastfree;

poolp next, prev;

uint size;

assert(p != NULL);

#ifdef WITH_VALGRIND

if (UNLIKELY(running_on_valgrind > 0)) {

return 0;

}

#endif

pool = POOL_ADDR(p);

if (!address_in_range(p, pool)) {

return 0;

}

/* We allocated this address. */

LOCK();

/* Link p to the start of the pool's freeblock list. Since

* the pool had at least the p block outstanding, the pool

* wasn't empty (so it's already in a usedpools[] list, or

* was full and is in no list -- it's not in the freeblocks

* list in any case).

*/

assert(pool->ref.count > 0); /* else it was empty */

// 下面这两句比较关键,pool->freeblock保存了前面已经释放的所有空间的链表,

// 这里,再在链表头加入了这个新释放的空间。

// 这个两句的操作相当于,把pool->freeblock直接指向了这个刚释放的空间,

// 而在这个空间内,保存了前面已经释放的所有空间的列表的首地址。

// 这里p不会溢出,因为申请的最小空间也有8个bytes,

// 而一个指针在64位机上也只有8个bytes,在32位机上只有4个bytes。

*(block **)p = lastfree = pool->freeblock;

pool->freeblock = (block *)p;

if (!lastfree) {

// pool->freeblock==NULL, 表已经满了,参考pymalloc_alloc中的注释部分

// 现在因为有空间释放出来,表又可以使用了,所以在这里,再把这个表插入回到

// usedpools[size + size]这个链表中

/* Pool was full, so doesn't currently live in any list:

* link it to the front of the appropriate usedpools[] list.

* This mimics LRU pool usage for new allocations and

* targets optimal filling when several pools contain

* blocks of the same size class.

* least recently used (LRU)

*/

--pool->ref.count;

assert(pool->ref.count > 0); /* else the pool is empty */

size = pool->szidx;

next = usedpools[size + size];

prev = next->prevpool;

/* insert pool before next: prev <-> pool <-> next */

pool->nextpool = next;

pool->prevpool = prev;

next->prevpool = pool;

prev->nextpool = pool;

goto success;

}

struct arena_object* ao;

uint nf; /* ao->nfreepools */

/* freeblock wasn't NULL, so the pool wasn't full,

* and the pool is in a usedpools[] list.

*/

if (--pool->ref.count != 0) {

/* pool isn't empty: leave it in usedpools */

goto success;

}

/* Pool is now empty: unlink from usedpools, and

* link to the front of freepools. This ensures that

* previously freed pools will be allocated later

* (being not referenced, they are perhaps paged out).

*/

// prev <-> pool <-> next

next = pool->nextpool;

prev = pool->prevpool;

// prev <--> next

next->prevpool = prev;

prev->nextpool = next;

/* Link the pool to freepools. This is a singly-linked

* list, and pool->prevpool isn't used there.

*/

// ao->freepools <-> freepool list

ao = &arenas[pool->arenaindex];

// ao->freepools <-> pool <-> freepool list

pool->nextpool = ao->freepools;

ao->freepools = pool;

nf = ++ao->nfreepools;

/* All the rest is arena management. We just freed

* a pool, and there are 4 cases for arena mgmt:

* 1. If all the pools are free, return the arena to

* the system free().

* 2. If this is the only free pool in the arena,

* add the arena back to the `usable_arenas` list.

* 3. If the "next" arena has a smaller count of free

* pools, we have to "slide this arena right" to

* restore that usable_arenas is sorted in order of

* nfreepools.

* 4. Else there's nothing more to do.

*/

// 情况1: 全部空闲,通过_PyObject_Arena.free把arena的空间还给系统

if (nf == ao->ntotalpools) {

/* Case 1. First unlink ao from usable_arenas.

*/

assert(ao->prevarena == NULL ||

ao->prevarena->address != 0);

assert(ao ->nextarena == NULL ||

ao->nextarena->address != 0);

/* Fix the pointer in the prevarena, or the

* usable_arenas pointer.

*/

if (ao->prevarena == NULL) {

usable_arenas = ao->nextarena;

assert(usable_arenas == NULL ||

usable_arenas->address != 0);

}

else {

assert(ao->prevarena->nextarena == ao);

ao->prevarena->nextarena =

ao->nextarena;

}

/* Fix the pointer in the nextarena. */

if (ao->nextarena != NULL) {

assert(ao->nextarena->prevarena == ao);

ao->nextarena->prevarena =

ao->prevarena;

}

/* Record that this arena_object slot is

* available to be reused.

*/

ao->nextarena = unused_arena_objects;

unused_arena_objects = ao;

/* Free the entire arena. */

_PyObject_Arena.free(_PyObject_Arena.ctx,

(void *)ao->address, ARENA_SIZE);

ao->address = 0; /* mark unassociated */

--narenas_currently_allocated;

goto success;

}

//情况2:usable_arenas是一个双向链表,在这里扩展、或维护

if (nf == 1) {

/* Case 2. Put ao at the head of

* usable_arenas. Note that because

* ao->nfreepools was 0 before, ao isn't

* currently on the usable_arenas list.

*/

ao->nextarena = usable_arenas;

ao->prevarena = NULL;

if (usable_arenas)

usable_arenas->prevarena = ao;

usable_arenas = ao;

assert(usable_arenas->address != 0);

goto success;

}

/* If this arena is now out of order, we need to keep

* the list sorted. The list is kept sorted so that

* the "most full" arenas are used first, which allows

* the nearly empty arenas to be completely freed. In

* a few un-scientific tests, it seems like this

* approach allowed a lot more memory to be freed.

*/

if (ao->nextarena == NULL ||

nf <= ao->nextarena->nfreepools) {

/* Case 4. Nothing to do. */

goto success;

}

//情况3: arena重排序,最满的那个在最前面,

/* Case 3: We have to move the arena towards the end

* of the list, because it has more free pools than

* the arena to its right.

* First unlink ao from usable_arenas.

*/

if (ao->prevarena != NULL) {

/* ao isn't at the head of the list */

assert(ao->prevarena->nextarena == ao);

ao->prevarena->nextarena = ao->nextarena;

}

else {

/* ao is at the head of the list */

assert(usable_arenas == ao);

usable_arenas = ao->nextarena;

}

ao->nextarena->prevarena = ao->prevarena;

// 找到合适的插入位置

/* Locate the new insertion point by iterating over

* the list, using our nextarena pointer.

*/

while (ao->nextarena != NULL && nf > ao->nextarena->nfreepools) {

ao->prevarena = ao->nextarena;

ao->nextarena = ao->nextarena->nextarena;

}

// 插入usable_arenas列表中

/* Insert ao at this point. */

assert(ao->nextarena == NULL || ao->prevarena == ao->nextarena->prevarena);

assert(ao->prevarena->nextarena == ao->nextarena);

ao->prevarena->nextarena = ao;

if (ao->nextarena != NULL) {

ao->nextarena->prevarena = ao;

}

/* Verify that the swaps worked. */

assert(ao->nextarena == NULL || nf <= ao->nextarena->nfreepools);

assert(ao->prevarena == NULL || nf > ao->prevarena->nfreepools);

assert(ao->nextarena == NULL || ao->nextarena->prevarena == ao);

assert((usable_arenas == ao && ao->prevarena == NULL)

|| ao->prevarena->nextarena == ao);

goto success;

success:

UNLOCK();

return 1;

}

参考资料【1】 Python 源码剖析 陈儒著

【2】 https://rushter.com/blog/python-memory-managment/

浙公网安备 33010602011771号

浙公网安备 33010602011771号