2. Installing Spark and Python Practice

I. Installing Spark

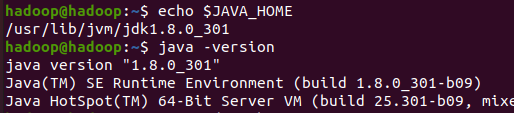

1. Check the base environment: Hadoop and JDK

Check the JDK

echo $JAVA_HOME

java -version

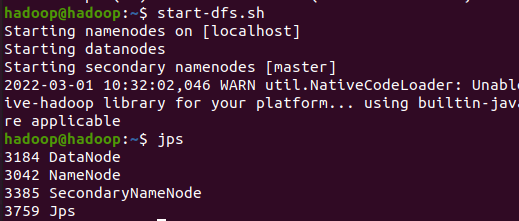

Check Hadoop

start-dfs.sh

jps

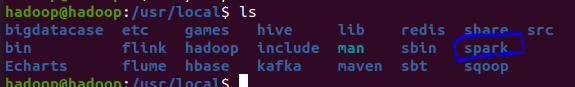
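The same checks can also be scripted. The following is a minimal sketch, not part of the original steps: the function name `check_base_environment` and the report keys are made up for illustration, and it only tests whether `JAVA_HOME` is set and whether the `java` and `hadoop` commands can be found on `PATH`.

```python
import os
import shutil


def check_base_environment(env=None, which=shutil.which):
    """Return a dict describing whether the JDK and Hadoop look available.

    `env` and `which` are injectable so the check can be exercised
    without a real installation (hypothetical helper, for illustration).
    """
    env = os.environ if env is None else env
    return {
        "JAVA_HOME set": bool(env.get("JAVA_HOME")),
        "java on PATH": which("java") is not None,
        "hadoop on PATH": which("hadoop") is not None,
    }


# Print a short report for the current machine.
for item, ok in check_base_environment().items():
    print(f"{item}: {'OK' if ok else 'MISSING'}")
```

A `MISSING` line only means the command or variable was not found from Python; whether the HDFS daemons are actually running still has to be confirmed with `jps` as shown above.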

2. Check the Spark installation

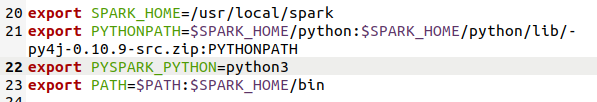

3. Configuration file

Add the following lines to ~/.bashrc:

export SPARK_HOME=/usr/local/spark

export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/lib/py4j-0.10.9-src.zip:$PYTHONPATH

export PYSPARK_PYTHON=python3

export PATH=$PATH:$SPARK_HOME/bin

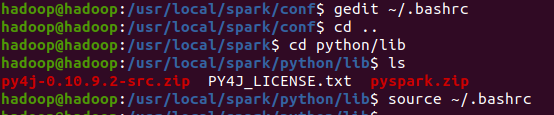

gedit ~/.bashrc

source ~/.bashrc

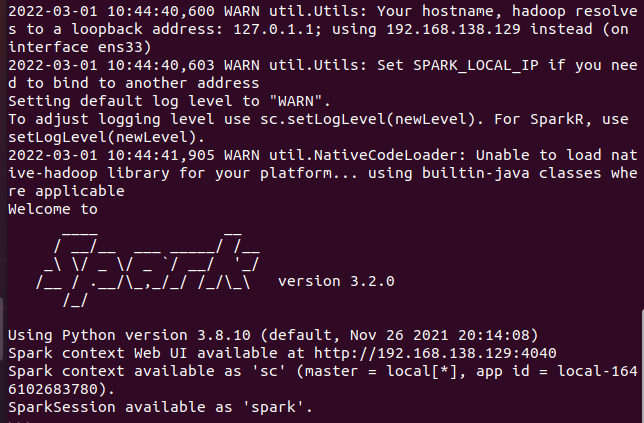
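The py4j version in `PYTHONPATH` is hard-coded, so the path breaks if the installed Spark ships a different py4j zip. As a rough sketch (the helper name `spark_python_paths` is hypothetical, and it assumes the usual `python/lib/py4j-*-src.zip` layout under `SPARK_HOME`), the entries can be looked up instead of typed by hand:

```python
import glob
import os


def spark_python_paths(spark_home):
    """List the directories/zips PySpark needs on PYTHONPATH.

    Assumes the layout <spark_home>/python and
    <spark_home>/python/lib/py4j-*-src.zip (illustrative helper).
    """
    python_dir = os.path.join(spark_home, "python")
    # Match whichever py4j version is bundled with this Spark install.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


# Show what would go into PYTHONPATH for the install used in this post.
print(os.pathsep.join(spark_python_paths("/usr/local/spark")))
```

If the printed value contains no py4j zip, the `lib` directory under `$SPARK_HOME/python` is worth checking before editing `~/.bashrc`.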

4. Run Spark

/usr/local/spark/bin/pyspark

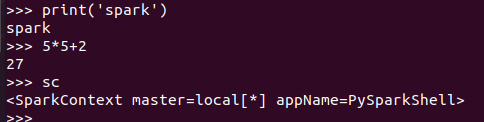

5. Try running Python code

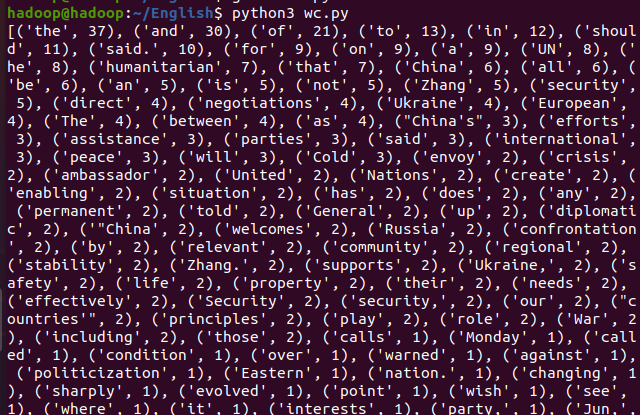

II. Python programming exercise: word frequency count for an English text

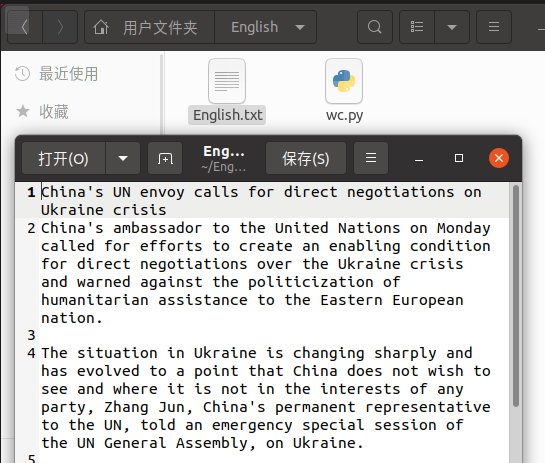

1. Prepare a text file

2. Read the file

path='/home/hadoop/English/English.txt'

with open(path) as f:

    text=f.read()

3. Split the text into words

words = text.split()
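Note that `text.split()` only splits on whitespace, so "Hello," and "hello" are counted as different words. One possible refinement, shown here as a sketch rather than as part of the original exercise (the function name `tokenize` and the regular expression are illustrative choices), is to lowercase the text and keep only runs of letters, allowing an apostrophe inside a word:

```python
import re


def tokenize(text):
    """Split text into lowercase words, dropping punctuation.

    Keeps internal apostrophes so "don't" stays one token
    (illustrative helper, ASCII letters only).
    """
    return re.findall(r"[a-z]+(?:'[a-z]+)*", text.lower())


print(tokenize("Hello, world! Don't stop. HELLO"))
```

With this tokenizer the two spellings of "hello" in the sample sentence end up as the same word.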

4. Count how many times each word occurs

wc={}

for word in words:

    wc[word]=wc.get(word,0)+1

5. Sort by frequency, highest first

wclist=list(wc.items())

wclist.sort(key=lambda x:x[1],reverse=True)

6. Print the result

print(wclist)
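Steps 4 to 6 can also be written with the standard library's `collections.Counter`, which counts and sorts in one go. This is an alternative sketch, not the method used in this post; the function name `top_words` and the default of 10 results are arbitrary choices for illustration.

```python
from collections import Counter


def top_words(words, n=10):
    """Return the n most frequent words as (word, count) pairs,
    sorted from most to least frequent."""
    return Counter(words).most_common(n)


sample = ["a", "b", "a", "c", "a", "b"]
print(top_words(sample, 2))
```

Passing the `words` list from step 3 instead of `sample` gives the same kind of list as `wclist`, limited to the first `n` entries.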

7. Complete code

path='/home/hadoop/English/English.txt'

with open(path) as f:

    text=f.read()

words = text.split()

wc={}

for word in words:

    wc[word]=wc.get(word,0)+1

wclist=list(wc.items())

wclist.sort(key=lambda x:x[1],reverse=True)

print(wclist)

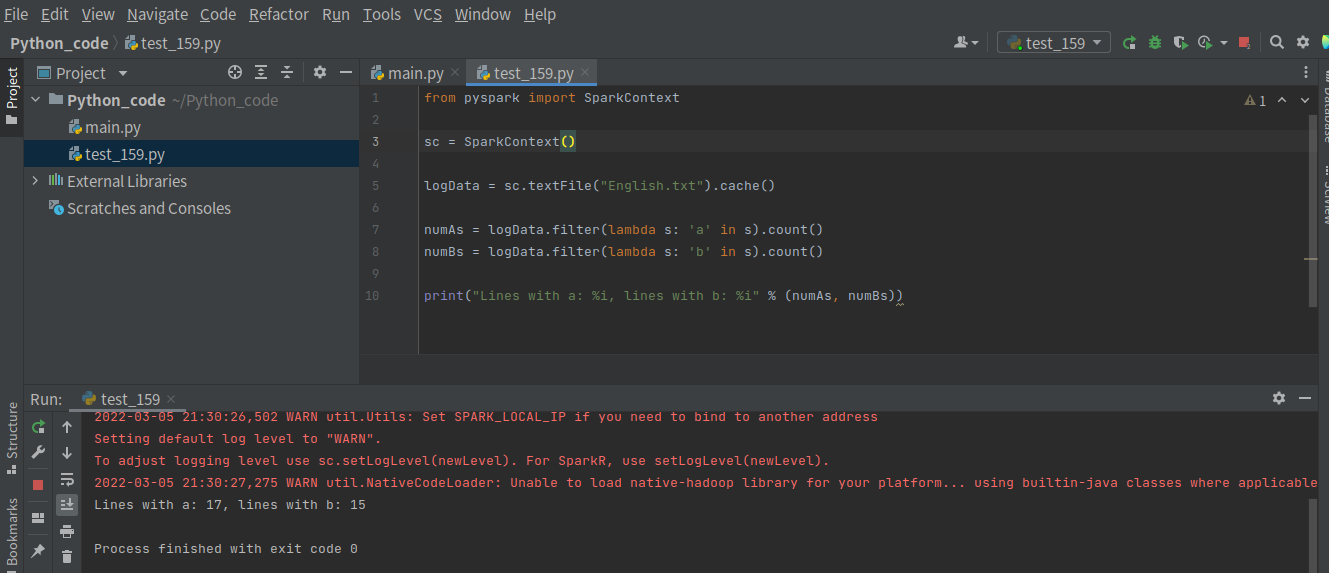

III. Set up a programming environment that suits your own habits

1. Download PyCharm

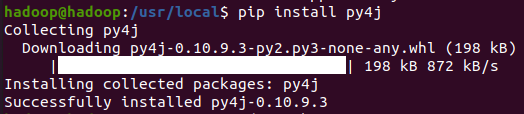

2. Install py4j

sudo pip install py4j

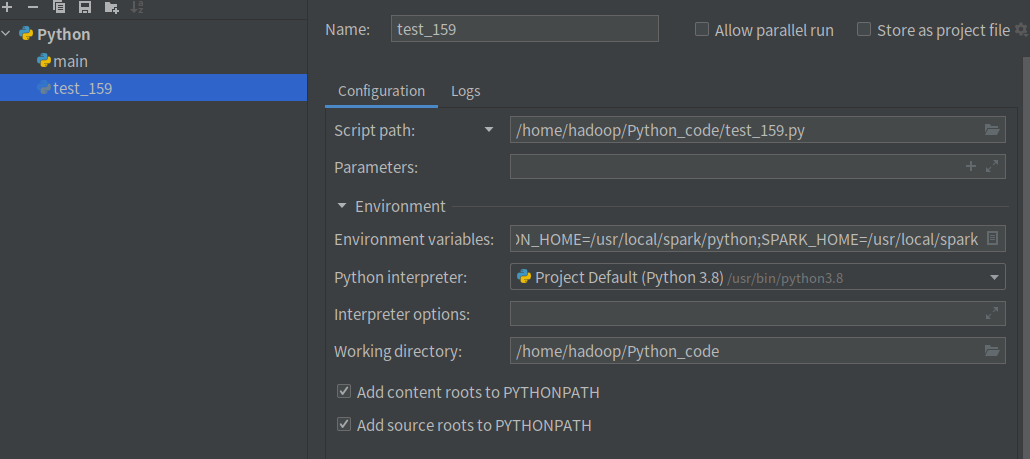

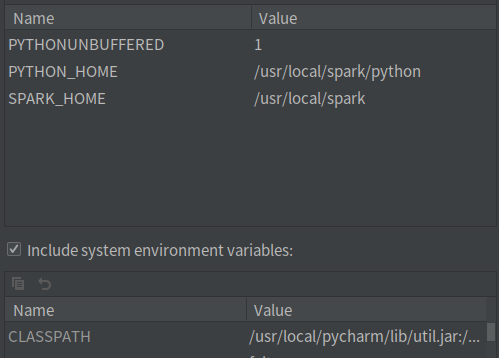

3. Configure PyCharm

4. Run the code
