TPN Paper Notes

Learning to Propagate Labels: Transductive Propagation Network for Few-shot Learning

Abstract:

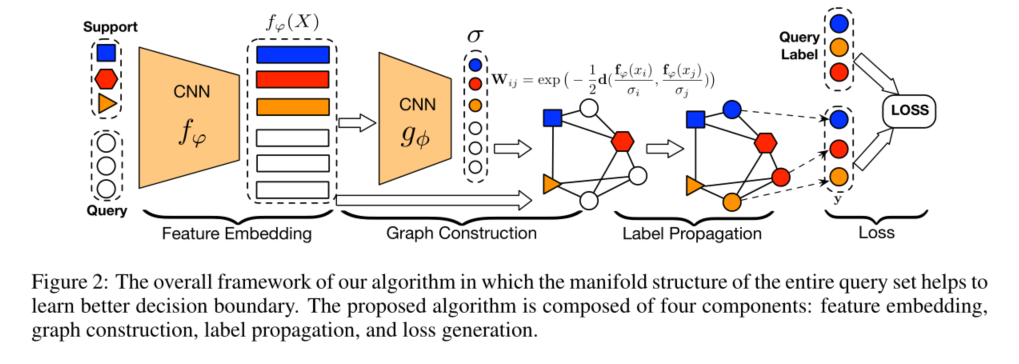

The paper proposes the Transductive Propagation Network (TPN), a novel meta-learning framework for transductive inference that classifies the entire test set at once to alleviate the low-data problem. Specifically, the authors propose learning to propagate labels from labeled instances to unlabeled test instances by learning a graph construction module that exploits the manifold structure in the data. TPN jointly learns the parameters of the feature embedding and the graph construction in an end-to-end manner.

The authors validate TPN on multiple benchmark datasets, where it outperforms existing few-shot learning approaches by a large margin and achieves state-of-the-art results.

Contributions:

- The first to model transductive inference explicitly in few-shot learning

Although Reptile was evaluated in a transductive setting, it shares information between test examples only through batch normalization rather than proposing an explicit transductive model.

- TPN

Feature Embedding

The embedding network consists of four convolutional blocks. Each block begins with a 2D convolutional layer with a 3×3 kernel and 64 filters, followed by a batch-normalization layer, a ReLU nonlinearity, and a 2×2 max-pooling layer. The same embedding function \(f_ϕ\) is used for both the support set \(S\) and the query set \(Q\).

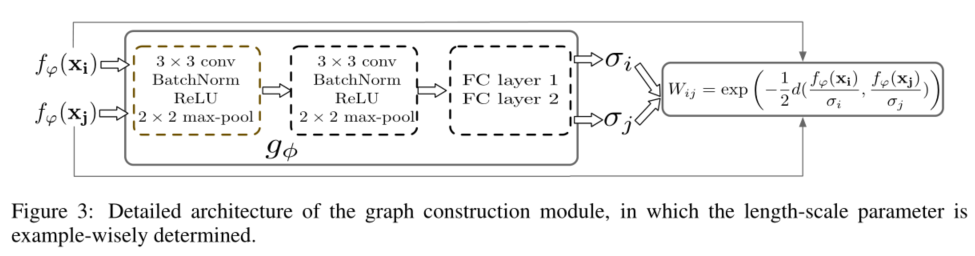
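To get a feel for the size of the embedding, the sketch below traces the spatial dimensions through the four blocks. The notes do not state the padding, stride, or input resolution, so stride 1, padding 1, and an 84×84 input are assumptions made only for illustration; the helper names `conv_block_output` and `embedding_shape` are hypothetical.

```python
def conv_block_output(height, width, kernel=3, padding=1, pool=2):
    """Spatial size after one block: 3x3 conv (stride 1) then 2x2 max-pool.

    padding=1 is an assumption; the notes do not specify it.
    """
    # Stride-1 convolution: out = in + 2 * padding - kernel + 1
    h = height + 2 * padding - kernel + 1
    w = width + 2 * padding - kernel + 1
    # Max-pooling with stride equal to the pool size, rounding down
    return h // pool, w // pool


def embedding_shape(height, width, num_blocks=4, channels=64):
    """Return (channels, height, width) of the final feature map."""
    for _ in range(num_blocks):
        height, width = conv_block_output(height, width)
    return channels, height, width


shape = embedding_shape(84, 84)  # 84x84 input is an assumed resolution
flat_dim = shape[0] * shape[1] * shape[2]
print(shape, flat_dim)  # (64, 5, 5) 1600
```

Under these assumptions each image is mapped to a 1600-dimensional vector once the feature map is flattened. Because the same function is applied to \(S\) and \(Q\), support and query embeddings live in the same space and can be compared directly.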

Graph Construction

The edge weights of the graph are computed with a Gaussian similarity function.
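As a minimal sketch of what a Gaussian similarity looks like in practice, the code below builds a weight matrix from a list of embedding vectors using the textbook form exp(-d² / (2σ²)) with squared Euclidean distance. The single fixed `sigma` and the zeroed diagonal are assumptions for illustration: TPN learns its graph construction module, so its scale is not a hand-set constant as it is here. The function name `gaussian_weights` is hypothetical.

```python
import math


def gaussian_weights(embeddings, sigma=1.0):
    """Pairwise Gaussian similarity between embedding vectors.

    sigma is a fixed global length scale here (an assumption);
    self-similarities are set to zero so the graph has no self-loops.
    """
    n = len(embeddings)
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            # Squared Euclidean distance between embeddings i and j
            sq_dist = sum((a - b) ** 2
                          for a, b in zip(embeddings[i], embeddings[j]))
            weights[i][j] = math.exp(-sq_dist / (2.0 * sigma ** 2))
    return weights


# Toy 2-D embeddings: the first two points are close, the third is far away
points = [[0.0, 0.0], [1.0, 0.0], [0.0, 3.0]]
W = gaussian_weights(points)
print(W[0][1] > W[0][2])  # True: nearby points get a larger weight
```

The resulting matrix is symmetric, and weights decay quickly with distance, which is what lets the graph reflect local neighborhood structure in the embedding space.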

Label Propagation
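As a rough illustration of propagating labels from labeled to unlabeled instances over a graph, the sketch below runs classic iterative label propagation on a small hand-made weight matrix. It uses the textbook update F ← αSF + (1 − α)Y with the symmetrically normalized weights S = D^(-1/2) W D^(-1/2); whether TPN uses exactly this variant is an assumption, and the values of `alpha`, `iters`, and the toy weights are hypothetical.

```python
import math


def normalize(weights):
    """Symmetric normalization S = D^(-1/2) W D^(-1/2)."""
    n = len(weights)
    degrees = [sum(row) for row in weights]
    return [[weights[i][j] / math.sqrt(degrees[i] * degrees[j])
             if degrees[i] > 0 and degrees[j] > 0 else 0.0
             for j in range(n)]
            for i in range(n)]


def propagate(weights, labels, num_classes, alpha=0.99, iters=200):
    """Iterative label propagation; labels[i] is None for unlabeled nodes."""
    n = len(weights)
    s = normalize(weights)
    # One-hot rows for labeled nodes, all-zero rows for unlabeled nodes
    y = [[1.0 if labels[i] == c else 0.0 for c in range(num_classes)]
         for i in range(n)]
    f = [row[:] for row in y]
    for _ in range(iters):
        f = [[alpha * sum(s[i][k] * f[k][c] for k in range(n))
              + (1.0 - alpha) * y[i][c]
              for c in range(num_classes)]
             for i in range(n)]
    # Predicted class for each node is the highest-scoring column
    return [max(range(num_classes), key=lambda c: f[i][c]) for i in range(n)]


# Nodes 0 and 1 are labeled (support); nodes 2 and 3 are unlabeled (query).
# Node 2 is strongly connected to node 0, node 3 to node 1.
W = [
    [0.0, 0.1, 0.9, 0.1],
    [0.1, 0.0, 0.1, 0.9],
    [0.9, 0.1, 0.0, 0.1],
    [0.1, 0.9, 0.1, 0.0],
]
print(propagate(W, [0, 1, None, None], num_classes=2))  # [0, 1, 0, 1]
```

All query nodes are scored in one pass over the whole graph, which is the sense in which the inference is transductive: each prediction depends on the other unlabeled instances as well as on the support set.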

Loss