Flink 1.11.1: problems with the Table API and expressions, as seen from the source code

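I wanted to try out the brand-new Table API in Flink 1.11 and found it full of pitfalls. I can't tell whether I am misusing it as a beginner or whether these are bugs caused by an oversight of the Flink developers.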

New Table API features

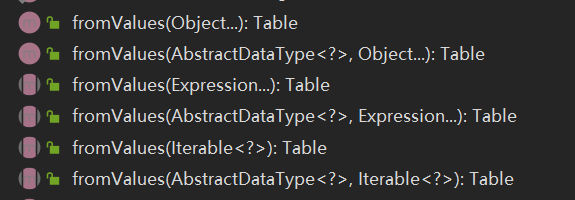

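In Flink 1.11, the methods that take field operations as a plain SQL-like string have been marked as deprecated. Here is part of the source code: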

    /**
     * Performs a selection operation. Similar to a SQL SELECT statement. The field expressions
     * can contain complex expressions and aggregations.
     *
     * <p>Example:
     *
     * <pre>
     * {@code
     *   tab.select("key, value.avg + ' The average' as average")
     * }
     * </pre>
     * @deprecated use {@link #select(Expression...)}
     */
    @Deprecated
    Table select(String fields);

    /**
     * Performs a selection operation. Similar to a SQL SELECT statement. The field expressions
     * can contain complex expressions and aggregations.
     *
     * <p>Scala Example:
     *
     * <pre>
     * {@code
     *   tab.select($("key"), $("value").avg().plus(" The average").as("average"));
     * }
     * </pre>
     *
     * <p>Scala Example:
     *
     * <pre>
     * {@code
     *   tab.select($"key", $"value".avg + " The average" as "average")
     * }
     * </pre>
     */
    Table select(Expression... fields);

select is only the example here; where, filter, groupBy and others have changed in the same way.

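As the Javadoc shows, $ denotes a field, and the expression it returns offers methods on that field, which is convenient to use, for example $("a").as("b"). $ is a static method: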

    /**
     * Creates an unresolved reference to a table's field.
     *
     * <p>Example:
     * <pre>{@code
     *   tab.select($("key"), $("value"))
     * }
     * </pre>
     */
    //CHECKSTYLE.OFF: MethodName
    public static ApiExpression $(String name) {
        return new ApiExpression(unresolvedRef(name));
    }

Besides $ there is also row, as in row("field"). In my own use so far, $ and row have looked equivalent to me.

Problems

- The TableEnvironment interface does not accept stream input

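As mentioned in the previous article, TableEnvironment gained methods for accepting raw data compared with Flink 1.10, but these methods do not support streaming input. For stream data you still have to go back to StreamTableEnvironment.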

- The new StreamTableEnvironment method is problematic

StreamTableEnvironment provides a new overload of fromDataStream. First, look at the implementation class:

    @Override
    public <T> Table fromDataStream(DataStream<T> dataStream, String fields) {
        List<Expression> expressions = ExpressionParser.parseExpressionList(fields);
        return fromDataStream(dataStream, expressions.toArray(new Expression[0]));
    }

    @Override
    public <T> Table fromDataStream(DataStream<T> dataStream, Expression... fields) {
        JavaDataStreamQueryOperation<T> queryOperation = asQueryOperation(
            dataStream,
            Optional.of(Arrays.asList(fields)));
        return createTable(queryOperation);
    }

Now the Javadoc on the interface:

    /**
     * Converts the given {@link DataStream} into a {@link Table} with specified field names.
     *
     * <p>There are two modes for mapping original fields to the fields of the {@link Table}:
     *
     * <p>1. Reference input fields by name:
     * All fields in the schema definition are referenced by name
     * (and possibly renamed using an alias (as). Moreover, we can define proctime and rowtime
     * attributes at arbitrary positions using arbitrary names (except those that exist in the
     * result schema). In this mode, fields can be reordered and projected out. This mode can
     * be used for any input type, including POJOs.
     *
     * <p>Example:
     *
     * <pre>
     * {@code
     * DataStream<Tuple2<String, Long>> stream = ...
     * Table table = tableEnv.fromDataStream(
     *    stream,
     *    $("f1"), // reorder and use the original field
     *    $("rowtime").rowtime(), // extract the internally attached timestamp into an event-time
     *                            // attribute named 'rowtime'
     *    $("f0").as("name") // reorder and give the original field a better name
     * );
     * }
     * </pre>
     *
     * <p>2. Reference input fields by position:
     * In this mode, fields are simply renamed. Event-time attributes can
     * replace the field on their position in the input data (if it is of correct type) or be
     * appended at the end. Proctime attributes must be appended at the end. This mode can only be
     * used if the input type has a defined field order (tuple, case class, Row) and none of
     * the {@code fields} references a field of the input type.
     *
     * <p>Example:
     *
     * <pre>
     * {@code
     * DataStream<Tuple2<String, Long>> stream = ...
     * Table table = tableEnv.fromDataStream(
     *    stream,
     *    $("a"), // rename the first field to 'a'
     *    $("b"), // rename the second field to 'b'
     *    $("rowtime").rowtime() // extract the internally attached timestamp into an event-time
     *                           // attribute named 'rowtime'
     * );
     * }
     * </pre>
     *
     * @param dataStream The {@link DataStream} to be converted.
     * @param fields The fields expressions to map original fields of the DataStream to the fields of the {@code Table}.
     * @param <T> The type of the {@link DataStream}.
     * @return The converted {@link Table}.
     */
    <T> Table fromDataStream(DataStream<T> dataStream, Expression... fields);
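The Javadoc separates the two modes by one rule: by-position mode applies only if none of the given fields references a field of the input type. A minimal sketch of that rule, as stated in the Javadoc, might look like the following. It is not Flink's implementation; the class FieldModeSketch and the method isByPosition are hypothetical names, and the sketch ignores time attributes and the requirement that the input type has a defined field order.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical model of the mode rule quoted in the Javadoc; not Flink code. */
final class FieldModeSketch {

    /**
     * Returns true if none of the referenced names is a field of the input type,
     * which is the condition the Javadoc gives for by-position mode.
     */
    static boolean isByPosition(List<String> inputFields, List<String> referencedNames) {
        for (String name : referencedNames) {
            if (inputFields.contains(name)) {
                return false; // at least one input field is referenced: by-name mode
            }
        }
        return true;
    }

    public static void main(String[] args) {
        // A Tuple2 exposes its fields as f0 and f1.
        List<String> tuple2 = Arrays.asList("f0", "f1");

        // Both names exist in the input, so the fields are mapped by name.
        System.out.println(isByPosition(tuple2, Arrays.asList("f1", "f0"))); // false

        // Neither name exists in the input, so the fields are renamed by position.
        System.out.println(isByPosition(tuple2, Arrays.asList("a", "b")));   // true
    }
}
```

Under this reading, which mode applies depends entirely on whether the expressions passed in are recognised as references to existing input fields.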

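It all looks great: $("field") fetches a field directly, as renames it, and rowtime turns it into a time attribute. In practice, however, it throws an error as soon as you use it. Here is the test code: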

    Table words = tblEnv.fromDataStream(dataStream, row("f0").as("word"));

Note that the first field of a tuple is named f0 and can be referenced directly. Execution fails at this statement with:

    org.apache.flink.table.api.ValidationException: Alias 'word' is not allowed if other fields are referenced by position.
        at org.apache.flink.table.typeutils.FieldInfoUtils$IndexedExprToFieldInfo.visitAlias(FieldInfoUtils.java:500)
        at org.apache.flink.table.typeutils.FieldInfoUtils$IndexedExprToFieldInfo.visit(FieldInfoUtils.java:478)

Next, let's see how the source code handles this:

    @Override
    public FieldInfo visit(UnresolvedCallExpression unresolvedCall) {
        if (unresolvedCall.getFunctionDefinition() == BuiltInFunctionDefinitions.AS) {
            return visitAlias(unresolvedCall);
        } else if (isRowTimeExpression(unresolvedCall)) {
            validateRowtimeReplacesCompatibleType(unresolvedCall);
            return createTimeAttributeField(getChildAsReference(unresolvedCall), TimestampKind.ROWTIME, null);
        } else if (isProcTimeExpression(unresolvedCall)) {
            validateProcTimeAttributeAppended(unresolvedCall);
            return createTimeAttributeField(getChildAsReference(unresolvedCall), TimestampKind.PROCTIME, null);
        }

        return defaultMethod(unresolvedCall);
    }

    private FieldInfo visitAlias(UnresolvedCallExpression unresolvedCall) {
        List<Expression> children = unresolvedCall.getChildren();
        String newName = extractAlias(children.get(1));

        Expression child = children.get(0);
        if (isProcTimeExpression(child)) {
            validateProcTimeAttributeAppended(unresolvedCall);
            return createTimeAttributeField(getChildAsReference(child), TimestampKind.PROCTIME, newName);
        } else {
            throw new ValidationException(
                format("Alias '%s' is not allowed if other fields are referenced by position.", newName));
        }
    }

    @Override
    protected FieldInfo defaultMethod(Expression expression) {
        throw new ValidationException("Field reference expression or alias on field expression expected.");
    }

The error is thrown inside visitAlias. According to its logic, as is only allowed on a procTime expression, which is a baffling check.

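Going one level up, visitAlias is called from visit, which has three conditions and four branches. A procTime expression is accepted and a rowTime expression is accepted. An expression with as goes into visitAlias, where the aliased child must be procTime. If you skip the alias and use the original name directly, you end up in defaultMethod, which also throws. So by this code's logic, this fromDataStream overload is usable for nothing but rowTime and procTime, which does not match the interface Javadoc at all. I don't dare claim that this is definitely a bug. If any expert is reading, I would be glad to discuss how this method is meant to be used and in which scenarios.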
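The branches described above can be replayed in a small self-contained model. This is a simplified sketch that mirrors only the quoted visit, visitAlias and defaultMethod code; the class VisitBranchSketch, the enum Call and the method outcome are hypothetical and do not exist in Flink. The quoted excerpt covers call expressions only, so the sketch says nothing about how a plain field reference such as $("a") is handled.

```java
/** Hypothetical model of the branches in the quoted visit/visitAlias code; not Flink code. */
final class VisitBranchSketch {

    /** The kinds of call expression the quoted code tells apart (names are made up). */
    enum Call { ROWTIME, PROCTIME, OTHER }

    /** Models visit(...) for a call that is not wrapped in an alias. */
    static String visit(Call call) {
        switch (call) {
            case ROWTIME:
                return "rowtime attribute";
            case PROCTIME:
                return "proctime attribute";
            default:
                // Corresponds to defaultMethod(...), which always throws.
                throw new IllegalArgumentException(
                    "Field reference expression or alias on field expression expected.");
        }
    }

    /** Models visitAlias(...): the aliased child must be a proctime call. */
    static String visitAlias(Call child, String newName) {
        if (child == Call.PROCTIME) {
            return "proctime attribute named " + newName;
        }
        throw new IllegalArgumentException(
            "Alias '" + newName + "' is not allowed if other fields are referenced by position.");
    }

    /** Runs the matching branch and returns either the result or the error text. */
    static String outcome(Call call, String alias) {
        try {
            return alias == null ? visit(call) : visitAlias(call, alias);
        } catch (IllegalArgumentException e) {
            return "error: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(outcome(Call.ROWTIME, null));   // accepted
        System.out.println(outcome(Call.PROCTIME, null));  // accepted
        System.out.println(outcome(Call.PROCTIME, "pt"));  // accepted, alias on proctime
        System.out.println(outcome(Call.ROWTIME, "rt"));   // rejected by visitAlias
        System.out.println(outcome(Call.OTHER, "word"));   // rejected by visitAlias
        System.out.println(outcome(Call.OTHER, null));     // rejected by defaultMethod
    }
}
```

In this model only the rowtime, proctime and aliased-proctime cases succeed. Any other call expression, with or without an alias, ends in one of the two error messages quoted in the text.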

In practice, the following fromDataStream overload works without problems:

    @Override
    public <T> Table fromDataStream(DataStream<T> dataStream) {
        JavaDataStreamQueryOperation<T> queryOperation = asQueryOperation(
            dataStream,
            Optional.empty());
        return createTable(queryOperation);
    }

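If you need field aliases, apply select afterwards. The code shows that all three fromDataStream overloads share the same underlying methods; this one simply passes no fields. That makes the error all the more baffling, and I still think it is a bug.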

Because nearly every Table API operator now takes the new expression arguments, there are many more problems. To be continued.

posted on 2020-09-13 11:20 SaltFishYe

