Keepalived + Nginx + Fastdfs

Cluster environment overview

- Operating system: CentOS Linux release 7.6.1810

- FastDFS version: fastdfs-6.06.tar.gz

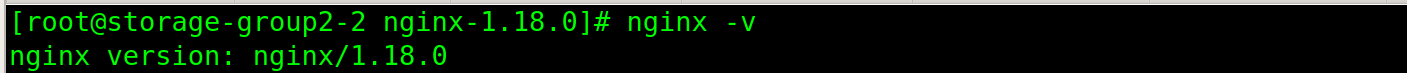

- Nginx version: nginx-1.18.0.tar.gz

- High-availability component: keepalived-1.4.5.tar.gz

- Other dependency packages

- libfastcommon-1.0.43.tar.gz (the common C function library extracted from FastDFS and FastDHT)

- fastdfs-nginx-module-1.22.tar.gz

- ngx_cache_purge-2.3.tar.gz

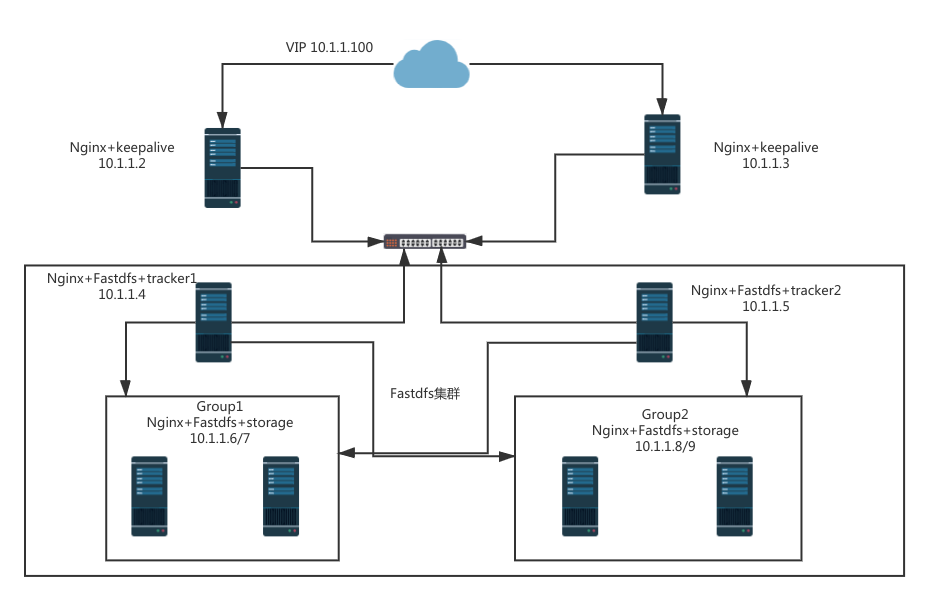

Network topology

Tracker cluster plan

| Node | IP address | Port | Hostname | Nginx port |
|---|---|---|---|---|
| Tracker node 1 | 10.1.1.4 | 22122 | tracker-group1 | 80 |
| Tracker node 2 | 10.1.1.5 | 22122 | tracker-group2 | 80 |

Storage cluster plan

| Node | IP address | Port | Hostname | Nginx port |
|---|---|---|---|---|
| Storage group 1, node 1 | 10.1.1.6 | 23000 | storage-group1-1 | 8888 |
| Storage group 1, node 2 | 10.1.1.7 | 23000 | storage-group1-2 | 8888 |
| Storage group 2, node 1 | 10.1.1.8 | 23000 | storage-group2-1 | 8888 |
| Storage group 2, node 2 | 10.1.1.9 | 23000 | storage-group2-2 | 8888 |

Keepalived high-availability plan

| Node | IP address | Hostname | Nginx port | Default role |
|---|---|---|---|---|
| VIP | 10.1.1.100 | virtual IP | | |
| MASTER | 10.1.1.2 | master-proxy | 80 | MASTER |
| BACKUP | 10.1.1.3 | slave-proxy | 80 | BACKUP |

Passwordless SSH login

# Generate a key pair

ssh-keygen

# Copy the public key to each of the other nodes in turn

ssh-copy-id root@10.1.1.3

ssh-copy-id root@10.1.1.4

ssh-copy-id root@10.1.1.5

ssh-copy-id root@10.1.1.6

ssh-copy-id root@10.1.1.7

ssh-copy-id root@10.1.1.8

ssh-copy-id root@10.1.1.9
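
The repeated ssh-copy-id calls can also be written as a loop. This is a minimal sketch: the node list is taken from the planning tables, and the helper names other_nodes and copy_keys are made up for illustration.

```shell
#!/bin/sh
# Node addresses from the planning tables (10.1.1.2 through 10.1.1.9).
NODES="10.1.1.2 10.1.1.3 10.1.1.4 10.1.1.5 10.1.1.6 10.1.1.7 10.1.1.8 10.1.1.9"

# Print every node except the address given as $1 (the local machine).
other_nodes() {
    for ip in $NODES; do
        [ "$ip" = "$1" ] || printf '%s\n' "$ip"
    done
}

# Copy the local public key to every other node.
# Hypothetical helper; run it as: copy_keys 10.1.1.2
copy_keys() {
    for ip in $(other_nodes "$1"); do
        ssh-copy-id "root@$ip"
    done
}
```

Nothing runs until copy_keys is called with the local address, so the snippet can be sourced safely first.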

Dependencies

# Run this command on every machine

yum install -y gcc gcc-c++ make automake autoconf libtool pcre pcre-devel zlib zlib-devel openssl openssl-devel

FastDFS installation

Install libfastcommon

# Run these steps on every FastDFS machine; they are shown once here and are identical on the others

wget https://github.com/happyfish100/libfastcommon/archive/V1.0.43.tar.gz

tar -zxvf V1.0.43.tar.gz -C /usr/local/src/

cd /usr/local/src/libfastcommon-1.0.43

./make.sh && ./make.sh install

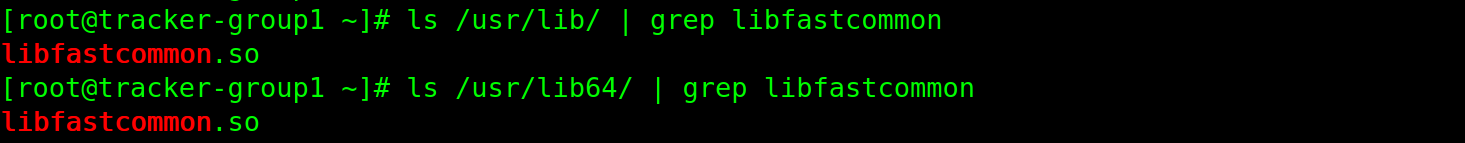

# Run these commands to check that the installation succeeded

ls /usr/lib64/ | grep libfastcommon

ls /usr/lib/ | grep libfastcommon

Install FastDFS

# Run these steps on every FastDFS machine; they are shown once here and are identical on the others

wget https://github.com/happyfish100/fastdfs/archive/V6.06.tar.gz

tar -zxvf V6.06.tar.gz -C /usr/local/src

cd /usr/local/src/fastdfs-6.06

./make.sh && ./make.sh install

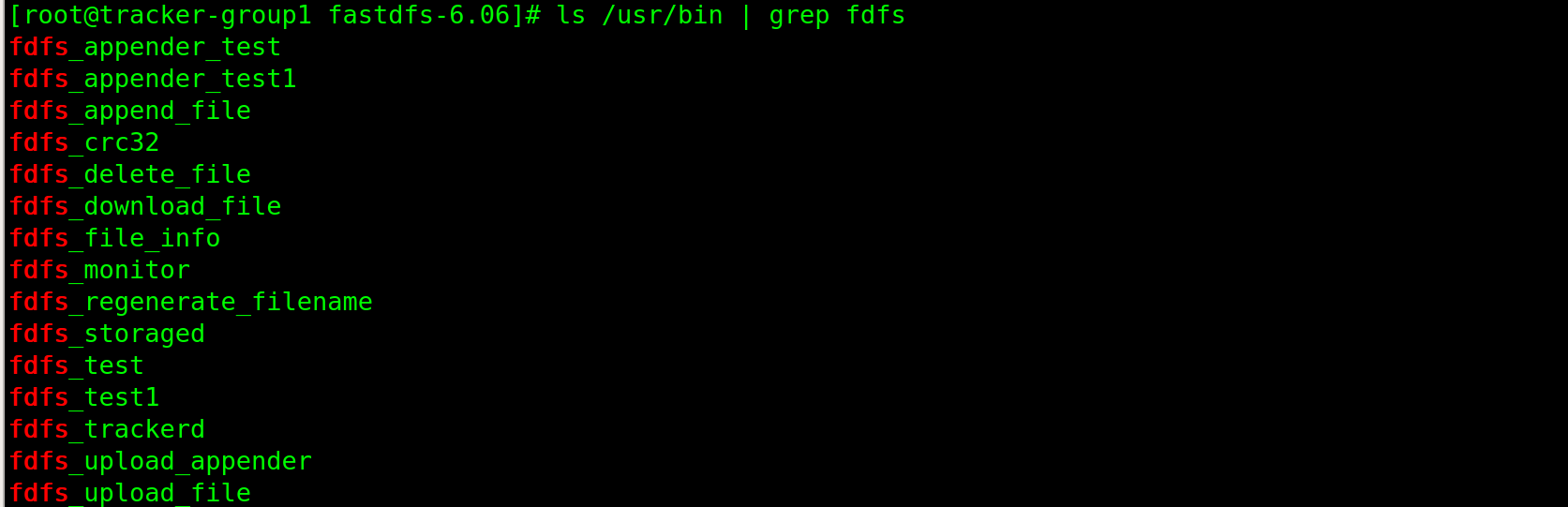

# Check that the installation succeeded

ls /usr/bin | grep fdfs
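
Instead of reading the ls output by eye, the check can be scripted. A minimal sketch: missing_commands is a hypothetical helper, and the command names in the usage line are the FastDFS tools used elsewhere in this post.

```shell
#!/bin/sh
# Print each named command that cannot be found on PATH.
# No output means everything is installed.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
    done
}

# Usage on a FastDFS machine:
#   missing_commands fdfs_trackerd fdfs_storaged fdfs_upload_file fdfs_monitor
```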

Building the FastDFS cluster

Tracker cluster configuration

Configure the trackers (10.1.1.4, 10.1.1.5)

Edit the configuration file

# Create the tracker data and log directory

mkdir -p /var/fdfs/tracker

cd /etc/fdfs/

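# Create the configuration file from the sample

cp tracker.conf.sample tracker.conf

# There are several storage groups, so uploads are distributed round-robin

vim tracker.conf

# Tracker data and log directory

base_path=/var/fdfs/tracker

# Distribute uploads across the groups round-robin

store_lookup = 0

# Target group; only takes effect when store_lookup = 1

store_group = group1

# Configure tracker 2 the same way, but set store_group = group2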

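store_lookup

- 0: distribute uploads across the groups round-robin
- 1: upload to the group named in store_group, for example group1
- 2: load balance by choosing the group with the most free space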
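
The three modes can be pictured with a small simulation. This is only a sketch of the selection rules listed above, not FastDFS's own implementation; pick_group and its NAME:FREE_MB argument format are invented for illustration.

```shell
#!/bin/sh
# pick_group MODE UPLOAD_NO STORE_GROUP NAME:FREE_MB...
# Prints the group an upload would go to under the given store_lookup mode.
pick_group() {
    mode=$1; n=$2; fixed=$3; shift 3
    case "$mode" in
        0)  # round robin: upload number n goes to group (n mod count)
            idx=$(( $n % $# + 1 ))
            eval "entry=\${$idx}"
            printf '%s\n' "${entry%%:*}" ;;
        1)  # fixed group: always the configured store_group
            printf '%s\n' "$fixed" ;;
        2)  # load balance: the group with the most free space wins
            best=""; best_free=-1
            for entry in "$@"; do
                free=${entry##*:}
                if [ "$free" -gt "$best_free" ]; then
                    best=${entry%%:*}; best_free=$free
                fi
            done
            printf '%s\n' "$best" ;;
    esac
}
```

For example, pick_group 0 1 group1 group1:500 group2:900 selects group2 because upload number 1 lands on the second group, while mode 2 with the same arguments selects group2 because it has more free space.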

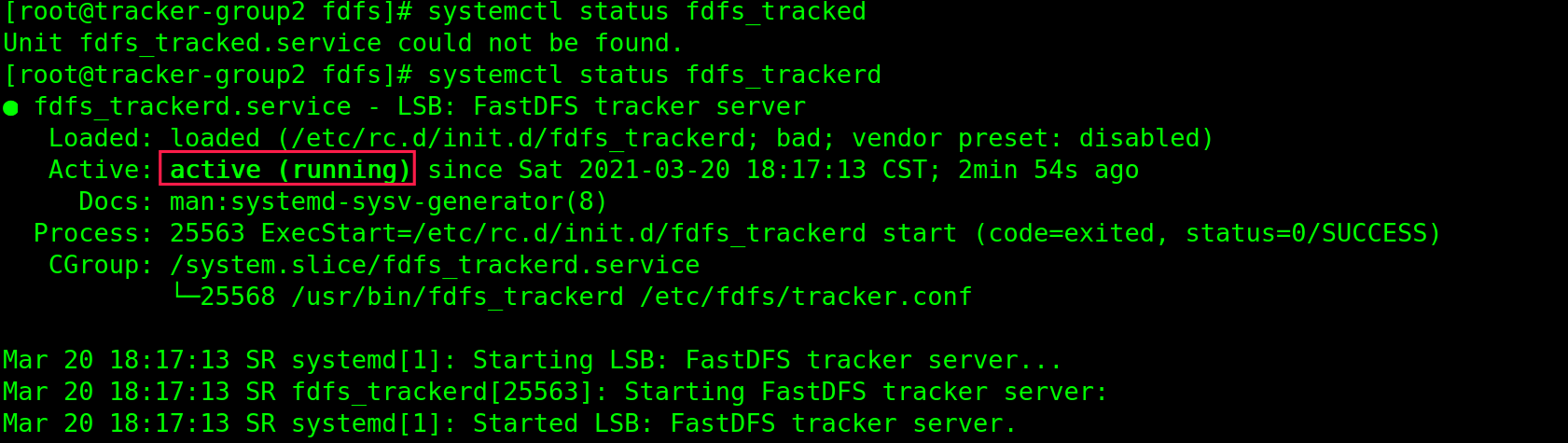

Start the tracker

# Start the tracker

/etc/init.d/fdfs_trackerd start

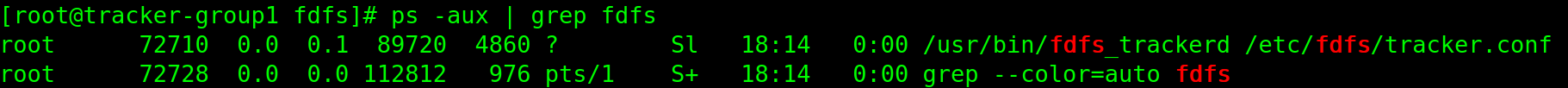

# Check that the service is running

ps -aux | grep fdfs

# After the first successful start, systemctl can start, restart, stop, and query the service

systemctl start fdfs_trackerd

systemctl restart fdfs_trackerd

systemctl stop fdfs_trackerd

systemctl status fdfs_trackerd

Enable start on boot

echo /etc/init.d/fdfs_trackerd start >> /etc/rc.d/rc.local

chmod +x /etc/rc.d/rc.local
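
Running the echo line twice leaves two identical entries in rc.local. A small guard avoids that; append_once is a hypothetical helper, not part of FastDFS.

```shell
#!/bin/sh
# Append LINE to FILE only if an identical line is not already there.
append_once() {
    line=$1; file=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage on a tracker node:
#   append_once "/etc/init.d/fdfs_trackerd start" /etc/rc.d/rc.local
```

The same helper works for the storage and Nginx start-up lines added to rc.local later on.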

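Storage cluster configuration

Configure FastDFS storage (10.1.1.6, 10.1.1.7, 10.1.1.8, 10.1.1.9)

Edit the configuration file

# Create the data and log directory

mkdir -p /var/fdfs/storage

cd /etc/fdfs/

# Create the configuration file from the sample

cp storage.conf.sample storage.conf

vim storage.conf

# group1 on the first pair of storage nodes, group2 on the second pair

group_name=group1

# Storage data and log directory

base_path=/var/fdfs/storage

# Storage path

store_path0=/var/fdfs/storage

# Tracker addresses

tracker_server = 10.1.1.4:22122

tracker_server = 10.1.1.5:22122

# Configure the other storage nodes the same way, but set group_name=group2 on the group 2 nodes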
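
With four storage nodes, editing each file in vim gets repetitive. The single-valued keys can be set with sed instead. A rough sketch: set_conf is a hypothetical helper that assumes the key already exists in the file as "key = value", and it does not handle repeated keys such as tracker_server.

```shell
#!/bin/sh
# set_conf KEY VALUE FILE
# Rewrite the existing "KEY = ..." line in FILE. Values must not contain '|' or '&'.
set_conf() {
    key=$1; value=$2; file=$3
    tmp=$(mktemp) || return 1
    sed "s|^[[:space:]]*$key[[:space:]]*=.*|$key = $value|" "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Usage on a group 2 storage node:
#   set_conf group_name group2 /etc/fdfs/storage.conf
#   set_conf base_path /var/fdfs/storage /etc/fdfs/storage.conf
#   set_conf store_path0 /var/fdfs/storage /etc/fdfs/storage.conf
```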

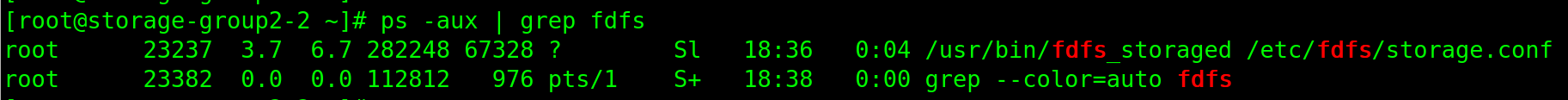

Start the storage service

# Start the storage daemon

/etc/init.d/fdfs_storaged start

# Check the process

ps -aux | grep fdfs

Enable start on boot

echo /etc/init.d/fdfs_storaged start >> /etc/rc.d/rc.local && chmod +x /etc/rc.d/rc.local

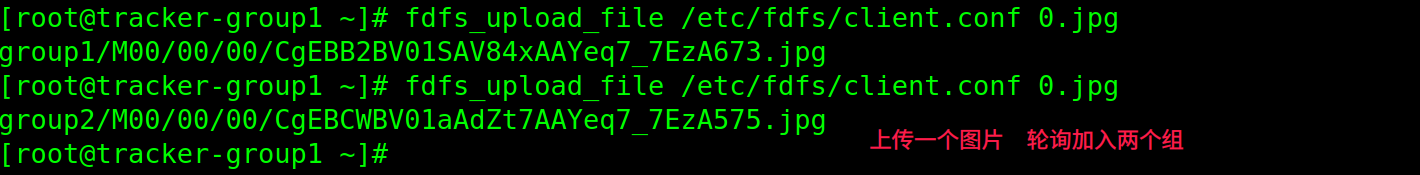

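Client configuration

Use a tracker server as the client

# Create the configuration file from the sample

cp /etc/fdfs/client.conf.sample /etc/fdfs/client.conf

vim /etc/fdfs/client.conf

base_path=/var/fdfs/tracker

tracker_server=10.1.1.4:22122

tracker_server=10.1.1.5:22122

# Upload a file; the command prints the new file ID

fdfs_upload_file /etc/fdfs/client.conf FILE_TO_UPLOAD

- Groups are independent of one another and do not share data

- Storage nodes in the same group hold the same data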

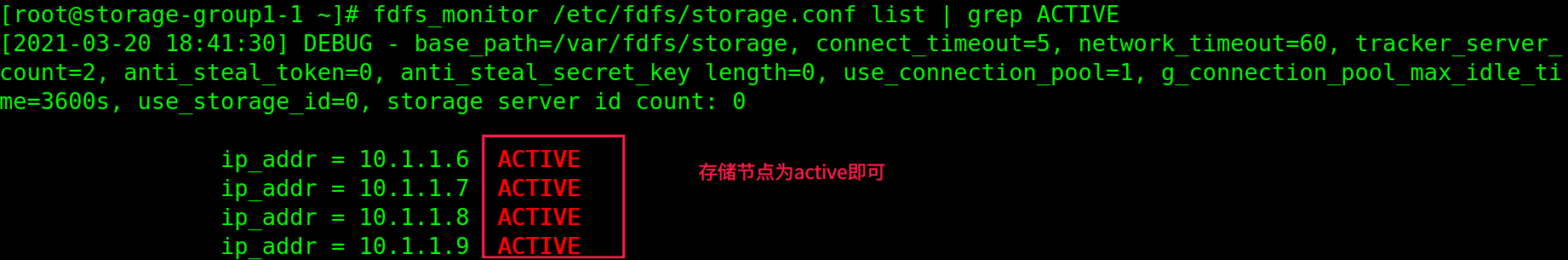

Check the cluster status

# Run the following command on any storage node

fdfs_monitor /etc/fdfs/storage.conf list | grep ACTIVE
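
To turn the grep into a pass/fail check, count the ACTIVE lines and compare with the four storage nodes in the plan. A sketch only: count_active is a made-up helper, and it assumes fdfs_monitor prints one line per storage node that ends in the state name (for example "ip_addr = 10.1.1.6  ACTIVE").

```shell
#!/bin/sh
# Count the input lines that end with the state ACTIVE.
# Assumed line format: "ip_addr = 10.1.1.6  ACTIVE"
count_active() {
    grep -c '[[:space:]]ACTIVE[[:space:]]*$' || true
}

# Usage on a storage node:
#   n=$(fdfs_monitor /etc/fdfs/storage.conf list | count_active)
#   [ "$n" -eq 4 ] && echo "all storage nodes are active"
```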

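Building the Nginx cluster

Install Nginx on the storage nodes

Install and configure Nginx on every storage node

Install fastdfs-nginx-module

wget -O /opt/fastdfs-nginx-module-1.22.tar.gz https://codeload.github.com/happyfish100/fastdfs-nginx-module/tar.gz/V1.22

tar -zxvf /opt/fastdfs-nginx-module-1.22.tar.gz -C /usr/local/src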

Install Nginx

wget https://nginx.org/download/nginx-1.18.0.tar.gz

tar -zxvf nginx-1.18.0.tar.gz -C /usr/local/src/

cd /usr/local/src/nginx-1.18.0

./configure --prefix=/usr/local/nginx --add-module=/usr/local/src/fastdfs-nginx-module-1.22/src

make && make install

Configure environment variables

vim /etc/profile

# Append to the end of the file

NGINX_HOME=/usr/local/nginx

export PATH=${NGINX_HOME}/sbin:${PATH}

source /etc/profile

Configure fastdfs-nginx-module

# Copy the configuration file

cp /usr/local/src/fastdfs-nginx-module-1.22/src/mod_fastdfs.conf /etc/fdfs/

# Edit /etc/fdfs/mod_fastdfs.conf

connect_timeout=10

base_path=/tmp

tracker_server=10.1.1.4:22122

tracker_server=10.1.1.5:22122

storage_server_port=23000

# group1 on the group 1 nodes, group2 on the group 2 nodes

group_name=group1

url_have_group_name = true

store_path0=/var/fdfs/storage

group_count = 2

[group1]

group_name=group1

storage_server_port=23000

store_path_count=1

store_path0=/var/fdfs/storage

[group2]

group_name=group2

storage_server_port=23000

store_path_count=1

store_path0=/var/fdfs/storage

Copy the HTTP files

# Copy http.conf and mime.types from the FastDFS source tree to /etc/fdfs

cd /usr/local/src/fastdfs-6.06/conf

cp http.conf mime.types /etc/fdfs/

Create a symbolic link to the stored data

ln -s /var/fdfs/storage/data/ /var/fdfs/storage/data/M00

Edit the Nginx configuration file

cd /usr/local/nginx/conf/

# Back up the configuration file

cp nginx.conf nginx.conf.bak

# Change the user on the second line from nobody to root

user root;

# server block

server {

listen 8888; # matches the HTTP port configured for FastDFS

server_name localhost;

location ~/group([0-9]) {

root /var/fdfs/storage/data;

ngx_fastdfs_module;

}

}

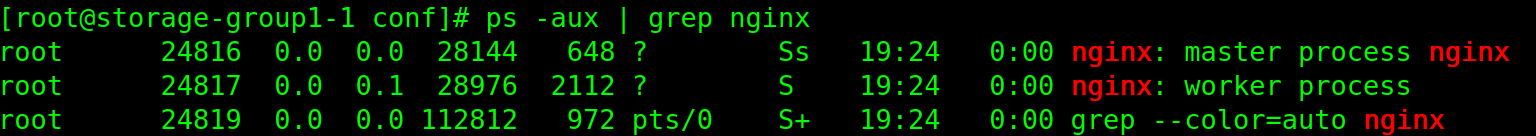

Start Nginx

nginx

ps -aux | grep nginx

Enable start on boot

echo /usr/local/nginx/sbin/nginx >> /etc/rc.d/rc.local

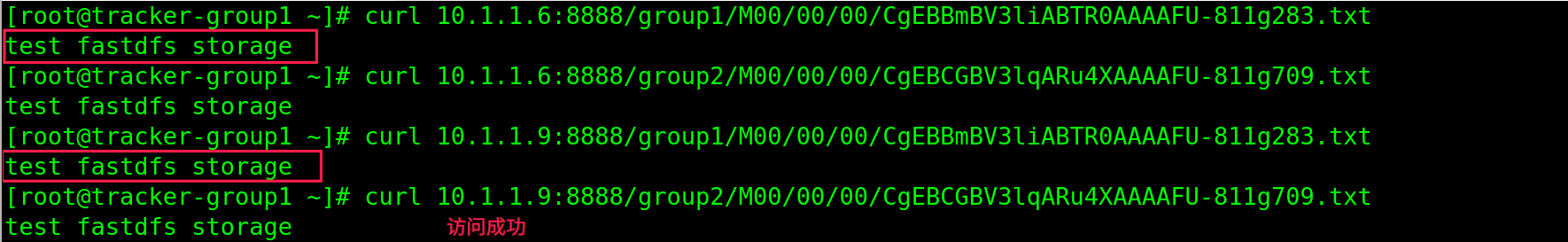

Test file access through the storage nodes

# Create a test file

echo "test fastdfs storage" > test.txt

# Upload the file and note the returned file ID

fdfs_upload_file /etc/fdfs/client.conf test.txt

# Test with GET requests

curl 10.1.1.6:8888/group1/M00/00/00/CgEBBmBV3liABTR0AAAAFU-811g283.txt

curl 10.1.1.6:8888/group2/M00/00/00/CgEBCGBV3lqARu4XAAAAFU-811g709.txt

curl 10.1.1.9:8888/group1/M00/00/00/CgEBBmBV3liABTR0AAAAFU-811g283.txt

curl 10.1.1.9:8888/group2/M00/00/00/CgEBCGBV3lqARu4XAAAAFU-811g709.txt
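
The curl URLs above are just host, port, and the file ID returned by fdfs_upload_file, and the first path component of the file ID is the group name. A small sketch of that mapping; file_group, file_url, and check_file are hypothetical helpers, and check_file needs curl.

```shell
#!/bin/sh
# The group is everything before the first slash of the file ID.
file_group() { printf '%s\n' "${1%%/*}"; }

# file_url HOST PORT FILE_ID builds the download URL.
file_url() { printf 'http://%s:%s/%s\n' "$1" "$2" "$3"; }

# check_file HOST PORT FILE_ID succeeds only if the server answers without an HTTP error.
check_file() { curl -fsS -o /dev/null "$(file_url "$1" "$2" "$3")"; }

# Usage: check one file on every storage node
#   for ip in 10.1.1.6 10.1.1.7 10.1.1.8 10.1.1.9; do
#       check_file "$ip" 8888 group1/M00/00/00/CgEBBmBV3liABTR0AAAAFU-811g283.txt || echo "failed on $ip"
#   done
```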

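Install Nginx on the tracker nodes

Install Nginx on the tracker cluster nodes (10.1.1.4, 10.1.1.5)

Download the ngx_cache_purge cache plugin

wget -O ngx_cache_purge-2.3.tar.gz https://codeload.github.com/FRiCKLE/ngx_cache_purge/tar.gz/2.3

# Needed later when compiling Nginx

tar -zxvf ngx_cache_purge-2.3.tar.gz -C /usr/local/src/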

Install Nginx

wget https://nginx.org/download/nginx-1.18.0.tar.gz

tar -zxvf nginx-1.18.0.tar.gz -C /usr/local/src/

cd /usr/local/src/nginx-1.18.0

./configure --prefix=/usr/local/nginx --add-module=/usr/local/src/ngx_cache_purge-2.3

make && make install

Configure environment variables

vim /etc/profile

# Append to the end of the file

NGINX_HOME=/usr/local/nginx

export PATH=${NGINX_HOME}/sbin:${PATH}

source /etc/profile

Edit the configuration file

cd /usr/local/nginx/conf/

# Back up the configuration file

cp nginx.conf nginx.conf.bak

user root;

http {

#gzip on;

#Cache and proxy settings

server_names_hash_bucket_size 128;

client_header_buffer_size 32k;

large_client_header_buffers 4 32k;

client_max_body_size 300m;

proxy_redirect off;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 90;

proxy_send_timeout 90;

proxy_read_timeout 90;

proxy_buffer_size 16k;

proxy_buffers 4 64k;

proxy_busy_buffers_size 128k;

proxy_temp_file_write_size 128k;

#Cache storage path, directory levels, shared memory size, maximum disk size, and inactivity period

proxy_cache_path /fastdfs/cache/nginx/proxy_cache levels=1:2 keys_zone=http-cache:200m max_size=1g inactive=30d;

proxy_temp_path /fastdfs/cache/nginx/proxy_cache/tmp;

#Load-balancing upstream for storage group1

upstream fdfs_group1 {

server 10.1.1.6:8888 weight=1 max_fails=2 fail_timeout=30s;

server 10.1.1.7:8888 weight=1 max_fails=2 fail_timeout=30s;

}

#Load-balancing upstream for storage group2

upstream fdfs_group2 {

server 10.1.1.8:8888 weight=1 max_fails=2 fail_timeout=30s;

server 10.1.1.9:8888 weight=1 max_fails=2 fail_timeout=30s;

}

server {

listen 80;

server_name localhost;

#Proxy and cache settings for storage group1

location /group1/M00 {

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_cache http-cache;

proxy_cache_valid 200 304 12h;

proxy_cache_key $uri$is_args$args;

proxy_pass http://fdfs_group1;

expires 30d;

}

#Proxy and cache settings for storage group2

location /group2/M00 {

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_cache http-cache;

proxy_cache_valid 200 304 12h;

proxy_cache_key $uri$is_args$args;

proxy_pass http://fdfs_group2;

expires 30d;

}

#Access control for cache purging

location ~/purge(/.*) {

allow 127.0.0.1;

allow 10.1.1.0/24;

deny all;

proxy_cache_purge http-cache $1$is_args$args;

}

}

}
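
The purge location strips the /purge prefix and uses the rest as the cache key, so a cached file is purged by requesting its normal path with /purge in front. A small sketch of that mapping; purge_url is a made-up helper, and the request has to come from 127.0.0.1 or 10.1.1.0/24 to pass the allow rules above.

```shell
#!/bin/sh
# purge_url HOST URI builds the purge URL for a cached URI.
# URI is the normal request path and must start with a slash.
purge_url() { printf 'http://%s/purge%s\n' "$1" "$2"; }

# Usage from a machine inside 10.1.1.0/24:
#   curl "$(purge_url 10.1.1.4 /group1/M00/00/00/CgEBBmBV3liABTR0AAAAFU-811g283.txt)"
```

Each tracker node keeps its own cache, so a purge would have to be sent to both 10.1.1.4 and 10.1.1.5.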

Create the cache directories

mkdir -p /fastdfs/cache/nginx/proxy_cache

mkdir -p /fastdfs/cache/nginx/proxy_cache/tmp

Enable start on boot

echo /usr/local/nginx/sbin/nginx >> /etc/rc.d/rc.local && chmod +x /etc/rc.d/rc.local

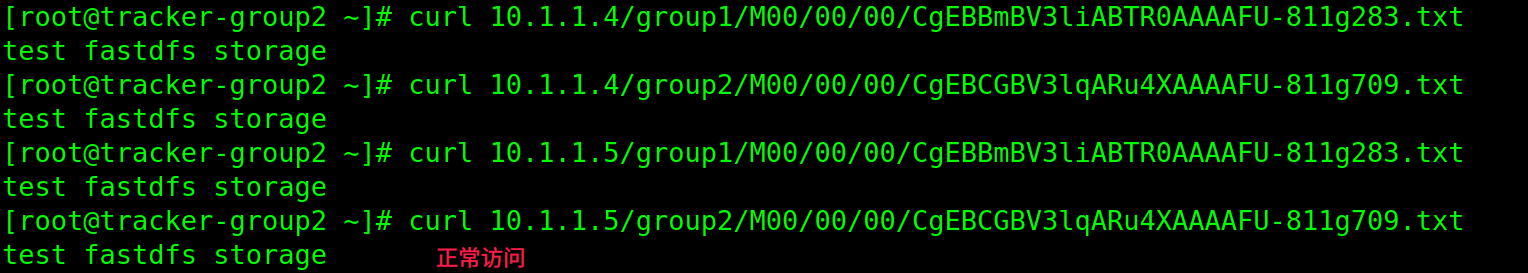

Test file access through the tracker nodes

curl 10.1.1.4/group1/M00/00/00/CgEBBmBV3liABTR0AAAAFU-811g283.txt

curl 10.1.1.4/group2/M00/00/00/CgEBCGBV3lqARu4XAAAAFU-811g709.txt

curl 10.1.1.5/group1/M00/00/00/CgEBBmBV3liABTR0AAAAFU-811g283.txt

curl 10.1.1.5/group2/M00/00/00/CgEBCGBV3lqARu4XAAAAFU-811g709.txt

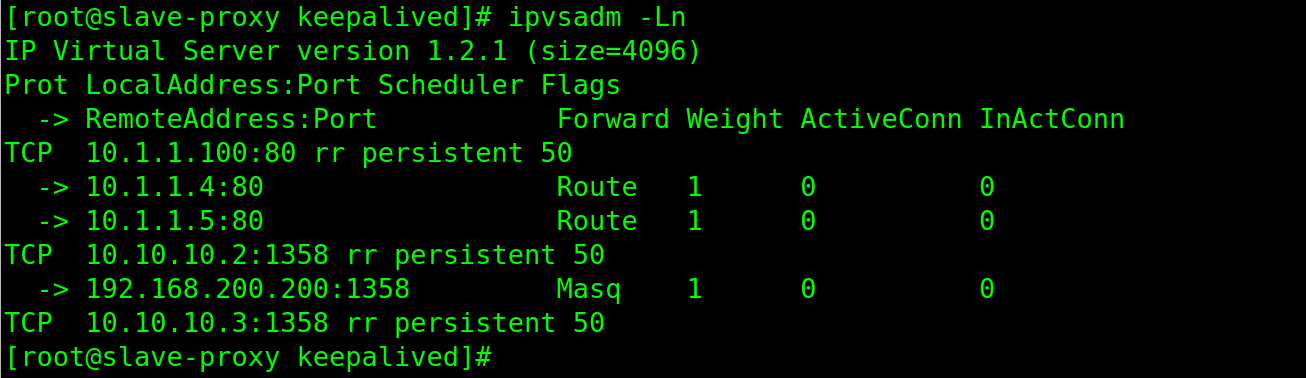

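LVS + Keepalived installation

LVS installation

# Install the LVS admin tool

yum install -y ipvsadm

Network forwarding

Configure the back-end service nodes (10.1.1.4, 10.1.1.5) for LVS DR mode: suppress ARP replies for the VIP and bind it to the loopback interface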

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

ifconfig lo:0 10.1.1.100 broadcast 10.1.1.100 netmask 255.255.255.255 up

# Add the host route for the VIP

route add -host 10.1.1.100 dev lo:0

# Make the route permanent

echo "route add -host 10.1.1.100 dev lo:0" >> /etc/rc.local
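
The echo commands into /proc are lost on reboot. One way to keep the four ARP settings is a sysctl fragment. This is a sketch under assumptions: write_arp_sysctl and the file name 90-lvs-dr.conf are made up, while the sysctl keys are the same ones written under /proc above.

```shell
#!/bin/sh
# Write the four ARP settings to the file given as $1.
write_arp_sysctl() {
    printf '%s\n' \
        'net.ipv4.conf.lo.arp_ignore = 1' \
        'net.ipv4.conf.lo.arp_announce = 2' \
        'net.ipv4.conf.all.arp_ignore = 1' \
        'net.ipv4.conf.all.arp_announce = 2' > "$1"
}

# Usage on 10.1.1.4 and 10.1.1.5 (hypothetical file name):
#   write_arp_sysctl /etc/sysctl.d/90-lvs-dr.conf
#   sysctl --system
```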

keepalived

Install

cd /opt

wget https://www.keepalived.org/software/keepalived-1.4.5.tar.gz

tar -zxvf keepalived-1.4.5.tar.gz

mv /opt/keepalived-1.4.5 /usr/local/src/

cd /usr/local/src/keepalived-1.4.5

./configure --prefix=/usr/local/keepalived

make && make install

Configuration

### Installation is complete; copy the configuration templates to /etc/keepalived

mkdir /etc/keepalived

cp /usr/local/src/keepalived-1.4.5/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

cp /usr/local/src/keepalived-1.4.5/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

### Copy the service start-up script

cp /usr/local/src/keepalived-1.4.5/keepalived/etc/init.d/keepalived /etc/init.d/

ln -snf /usr/local/keepalived/sbin/keepalived /usr/sbin/keepalived

# Make it executable

chmod +x /etc/init.d/keepalived

Edit the service unit file

vim /lib/systemd/system/keepalived.service

### Change these two lines

EnvironmentFile=-/usr/local/keepalived/etc/sysconfig/keepalived

ExecStart=/usr/local/keepalived/sbin/keepalived $KEEPALIVED_OPTIONS

### to

EnvironmentFile=/etc/sysconfig/keepalived

ExecStart=/sbin/keepalived $KEEPALIVED_OPTIONS

# Reload the unit files

systemctl daemon-reload

Configure the log file

vim /etc/sysconfig/keepalived

## -S selects the syslog facility; 0 means local0

KEEPALIVED_OPTIONS="-D -d -S 0"

vim /etc/rsyslog.conf

local0.* /var/log/keepalived.log

# Restart rsyslog

systemctl restart rsyslog

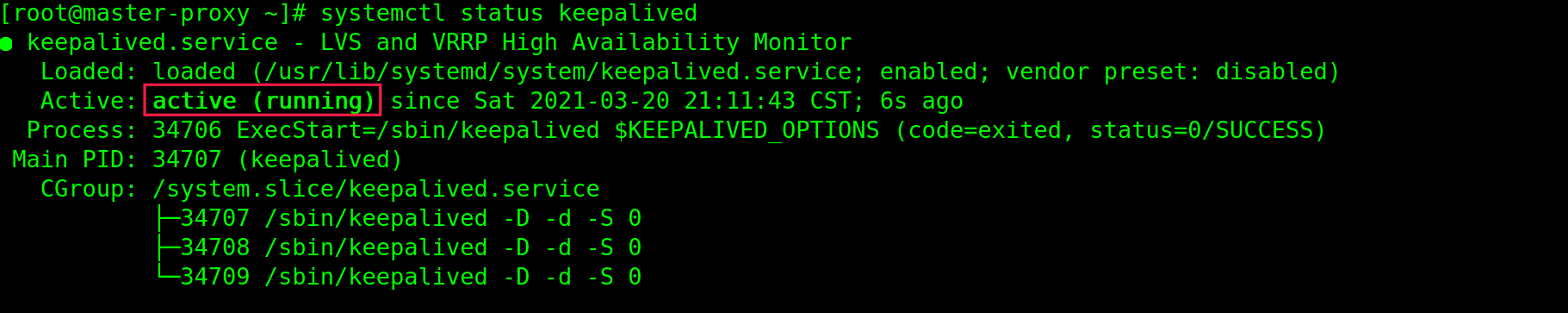

Start the service

systemctl enable keepalived.service

systemctl start keepalived

systemctl status keepalived

systemctl restart keepalived

Configure the master node

cd /etc/keepalived/

cp keepalived.conf keepalived.conf.bak

global_defs {

router_id fdfs-proxy1

}

vrrp_script chk_nginx {

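#Script that checks nginx; we define it ourselves below

script "/etc/keepalived/nginx_check.sh"

#Check interval in seconds; it must be longer than the script's run time, otherwise keepalived reports "Track script chk_nginx is being timed out, expect idle - skipping run"

interval 2

#Lower the priority by 20 when the script fails

weight -20

#Two consecutive failures count as a failure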

fall 2

}

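#Instance configuration

vrrp_instance apache {

state MASTER

interface ens34 #Interface that carries the VIP

virtual_router_id 10 #Must be identical on master and backup; valid range 1-255

priority 150 #Master priority; the backup uses 120, and the master's value must be higher

advert_int 1 #Advertisement interval between master and backup, in seconds

authentication {

auth_type PASS #Authentication type; PASS and AH are available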

auth_pass root

}

virtual_ipaddress {

10.1.1.100

}

}

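## Virtual server configuration

virtual_server 10.1.1.100 80 {

delay_loop 6 #Interval in seconds between health checks of the real servers

lb_algo rr #LVS scheduling algorithm; round robin here

lb_kind DR #LVS runs in DR mode

#persistence_timeout 50 #Send requests from the same IP to the same real server for 50 seconds; after that they may go to another node

protocol TCP #Forwarding protocol; TCP and UDP are available

real_server 10.1.1.4 80 { #Service node

weight 1 ##Default is 1; 0 disables the node

TCP_CHECK { ##TCP health check of the real server; times are in seconds

connect_timeout 3 #Time out after 3 seconds without a response

retry 3 #Number of retries

delay_before_retry 3 #Delay between retries

connect_port 80 #Port to connect to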

}

}

real_server 10.1.1.5 80 {

weight 1

TCP_CHECK {

connect_timeout 3

retry 3

delay_before_retry 3

connect_port 80

}

}

}
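
It is worth working through the priority numbers. Assuming chk_nginx is actually tracked by the instance through a track_script block (as far as I know keepalived only runs scripts that are tracked), a failed check lowers the master from 150 to 130, which is still above the backup's 120, so the weight alone would not move the VIP. The helper below is a made-up illustration of that arithmetic for negative weights only, not keepalived code.

```shell
#!/bin/sh
# effective_priority BASE WEIGHT CHECK_FAILED
# With a negative weight, a failed check adds the weight to the base priority.
effective_priority() {
    base=$1; weight=$2; check_failed=$3
    if [ "$check_failed" -eq 1 ] && [ "$weight" -lt 0 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

# Master after a failed check versus the backup's priority of 120:
#   effective_priority 150 -20 1
```

For the weight to trigger a failover, the drop would have to exceed the 30-point gap between the two priorities.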

# Create the check script

vim /etc/keepalived/nginx_check.sh

#!/bin/sh

A=`ps -C nginx --no-header |wc -l`

if [ $A -eq 0 ]

then

### If nginx is started directly (commented-out line below), systemctl status nginx does not report it as active

#/usr/local/nginx/sbin/nginx

systemctl start nginx

sleep 1

A2=`ps -C nginx --no-header |wc -l`

if [ $A2 -eq 0 ]

then

exit 1

fi

fi

chmod +x /etc/keepalived/nginx_check.sh

# Restart the service

systemctl restart keepalived

Configure the backup node

cd /etc/keepalived/

cp keepalived.conf keepalived.conf.bak

global_defs {

router_id fdfs-proxy2

}

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh"

interval 2

}

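#Instance configuration

vrrp_instance apache {

state BACKUP

interface ens34 #Interface that carries the VIP

virtual_router_id 10 #Must be identical on master and backup; valid range 1-255

priority 120 #Backup priority; it must be lower than the master's 150

advert_int 1 #Advertisement interval between master and backup, in seconds

authentication {

auth_type PASS #Authentication type; PASS and AH are available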

auth_pass root

}

virtual_ipaddress {

10.1.1.100

}

}

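## Virtual server configuration

virtual_server 10.1.1.100 80 {

delay_loop 6 #Interval in seconds between health checks of the real servers

lb_algo rr #LVS scheduling algorithm; round robin here

lb_kind DR #LVS runs in DR mode

#persistence_timeout 50 #Send requests from the same IP to the same real server for 50 seconds; after that they may go to another node

protocol TCP #Forwarding protocol; TCP and UDP are available

real_server 10.1.1.4 80 { #Service node

weight 1 ##Default is 1; 0 disables the node

TCP_CHECK { ##TCP health check of the real server; times are in seconds

connect_timeout 3 #Time out after 3 seconds without a response

retry 3 #Number of retries

delay_before_retry 3 #Delay between retries

connect_port 80 #Port to connect to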

}

}

real_server 10.1.1.5 80 {

weight 1

TCP_CHECK {

connect_timeout 3

retry 3

delay_before_retry 3

connect_port 80

}

}

}

# nginx check script

vim /etc/keepalived/nginx_check.sh

#!/bin/sh

A=`ps -C nginx --no-header |wc -l`

if [ $A -eq 0 ]

then

### If nginx is started directly (commented-out line below), systemctl status nginx does not report it as active

#/usr/local/nginx/sbin/nginx

systemctl start nginx

sleep 1

A2=`ps -C nginx --no-header |wc -l`

if [ $A2 -eq 0 ]

then

systemctl stop keepalived

fi

fi

chmod +x /etc/keepalived/nginx_check.sh

# Restart the service

systemctl restart keepalived
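
Once both nodes are running, a quick way to see which one currently holds the VIP is to look for 10.1.1.100 on ens34. A minimal sketch: has_vip is a hypothetical helper that reads the one-line-per-address output of ip -4 -o addr, which is assumed to contain "inet ADDRESS/PREFIX".

```shell
#!/bin/sh
# has_vip VIP reads `ip -4 -o addr` output on stdin and succeeds if the VIP is listed.
has_vip() {
    grep -qF "inet $1/"
}

# Usage on master-proxy or slave-proxy:
#   ip -4 -o addr show dev ens34 | has_vip 10.1.1.100 && echo "this node holds the VIP"
```

Stopping keepalived on the master and repeating the check on the backup is a simple failover test.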
