Paper | Recovering Realistic Texture in Image Super-resolution by Deep Spatial Feature Transform

Recovering Realistic Texture in Image Super-resolution by Deep Spatial Feature Transform

发表在2018年CVPR。

摘要:

Despite that convolutional neural networks (CNN) have recently demonstrated high-quality reconstruction for single-image super-resolution (SR), recovering natural and realistic texture remains a challenging problem. In this paper, we show that it is possible to recover textures faithful to semantic classes. In particular, we only need to modulate features of a few intermediate layers in a single network conditioned on semantic segmentation probability maps. This is made possible through a novel Spatial Feature Transform (SFT) layer that generates affine transformation parameters for spatial-wise feature modulation. SFT layers can be trained end-to-end together with the SR network using the same loss function. During testing, it accepts an input image of arbitrary size and generates a high-resolution image with just a single forward pass conditioned on the categorical priors. Our final results show that an SR network equipped with SFT can generate more realistic and visually pleasing textures in comparison to state-of-the-art SRGAN [27] and EnhanceNet [38].

结论:

We have explored the use of semantic segmentation maps as categorical prior for constraining the plausible solution space in SR. A novel Spatial Feature Transform (SFT) layer has been proposed to efficiently incorporate the categorical conditions into a CNN-based SR network. Thanks to the SFT layers, our SFT-GAN is capable of generating distinct and rich textures for multiple semantic regions in a super-resolved image in just a single forward pass. Extensive comparisons and a user study demonstrate the capability of SFT-GAN in generating realistic and visually pleasing textures, outperforming previous GAN-based methods [27, 38]. Our work currently focuses on SR of outdoor scenes.

Despite robust to out-of-category images, it does not consider priors of finer categories, especially for indoor scenes, e.g., furniture, appliance and silk. In such a case, it puts forward challenging requirements for segmentation tasks from an LR image. Future work aims at addressing these shortcomings. Furthermore, segmentation and SR may benefit from each other and jointly improve the performance.

要点:

-

本文的重点,是在SR时更好地恢复自然纹理信息。

-

具体而言,通过输入语义分割概率图(semantic segmentation probability maps),为CNN提供类别先验(categorical priors),从而让纹理与类别一一对应。

-

实现该功能的网络层称为空域特征转换层(spatial feature transform layer)。它可以生成对空域特征进行仿射变换的参数,并且与SR网络一起训练。

-

尽管SFT-GAN对于未知类别的图像也是健壮的,但未知类别确实是一个问题。

亮点:

-

这算是一个借助语义分割信息的超分辨工作,思想符合逻辑,实验效果也好。Fig. 1给出了说明:

-

这种思想还可以拓展到其他先验,例如深度图(depth map),从而增强纹理的颗粒度(granularity)。

-

类似于BN,对特征进行正则化,从而置入类别先验。

局限:

-

语义分割图是LR图像经过双三次插值后,输入已训练好的分割网络[31]得到的,与超分辨网络独立。

-

作者通过仿射变换特征的方式,置入类别先验。这种方式有效果,但可能还有更好的方式。

故事背景

如上图,如果缺乏对类别的先验,我们的解空间是很难约束的。特别是对于两个相似的场景,如上图的植物和砖块。

历史工作中,就有人专门对不同的分类训练各自的模型。但在这里,作者想让语义分割图作为CNN的输入。关键就在于如何输入。如果只是简单地输入分割图,或者在中间层输入分割图,效果是不好的。

空域特征转换

为了解决语义分割图的输入有效性问题,我们引出了空域特征转换(SFT)层。

实际上,SFT的思想起源于BN。BN是对特征作仿射变换。条件正则化(conditional normalization, CN)则是采用在某条件下学习得到的函数,代替BN中的仿射变换。那么SFT是怎么做的呢?

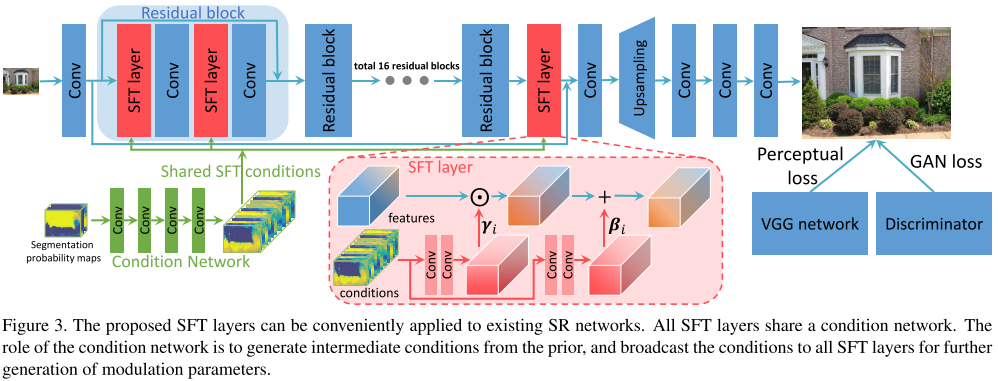

具体而言,SFT基于先验,输出调整参数对(modulation parameter pair)\((\gamma, \beta)\)。该调整参数对将会对中间层的特征\(F\)进行仿射变换:\(SFT(F|\gamma, \beta) = \gamma \odot F + \beta\),其中\(\odot\)是哈达玛乘积(逐点点乘)。换句话说:借助SFT,原本关于类别的先验,就转化为了调整参数信息。

在网络中是这么实现的:

我们先关注SFT结构。

-

如图,分割概率图没有直接输入网络,而是先经过一个浅层CNN学习,我们称之为condition network。

-

网络的输出(conditions)会在整个网络的每一个中间层共享。在内部,如图,conditions分别经过2层CNN,得到参数对即可。然后执行仿射变换,完毕。

4.3节实验发现,直接拼接分割信息图,效果是很差的。

超分辨率网络

我们首先看一看分割网络。

-

LR图像先经过了双三次插值升采样,然后经过分割网络[31],得到语义分割概率图。该网络是独立训练的,与我们现在的工作独立。

-

实验发现,哪怕经过放缩因子为4的降采样,分割效果也是不错的(如图4)。如果类别未知,那么该目标会落入背景(background)。

整体结构是一个GAN,参见3.2节。

实验略。

浙公网安备 33010602011771号

浙公网安备 33010602011771号