【ARM+Qt+OpenCV】基于ARM的双目图像采集系统

1.基于C语言实现UDP服务器2.C++中线程同步与互斥的四种方式介绍及对比详解3.Jsoncpp的安装与使用方式4.C++ open()和read()函数使用详解5.深入理解C++ 空类大小6.C++17 Filesystem 实用教程7.C语言格式输出方式8.C语言线程池的常见实现方式详解9.opencv实现像素统计的示例代码10.C/C++ 创建Socket实现双工通信11.在Linux系统中下载`gcc-linaro-7.2.1-2017.11-x86_64_aarch64-linux-gnu`工具链12.linux安装lspci13.制作ubuntu22.04的根文件系统

14.【ARM+Qt+OpenCV】基于ARM的双目图像采集系统

15.通用C++ Makefile16.linux下安装cmake版本17.添加xxx.so到环境变量里18.在Linux系统下验证万兆网络(10Gbps)的性能和配置情况,可以通过多种方法来实现19.【Ryan】: linux下安装ftp20.【Ryan】: linux下挂在共享文件夹21.linux下配置ip为动态获取22.字符串值相加校验23.数据十六进制打印输出24.linux服务问题传文件连不上问题远程问题等25.提供一个纯C语言的图像压缩程序,但是要达到将6MB的图片压缩到100KB以内的要求,有损压缩肯定是必须的。同时,要在速度上有所提升,我可以为您提供一个基于多线程的图像压缩程序。26.cmake工程构建开发过程27.使用QT开发远程linux服务器过程28.linux系统测试磁盘IO速度 - fio使用点击查看代码

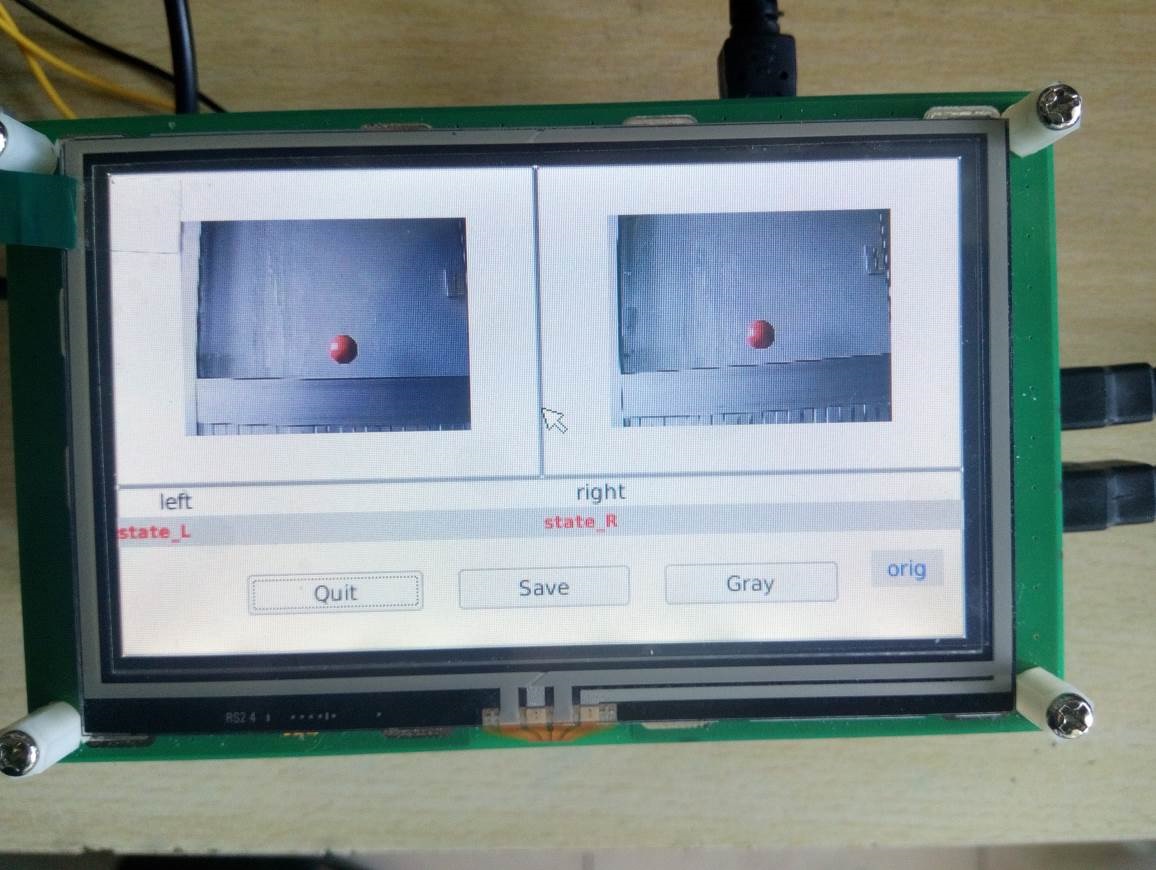

系统使用ARM处理器,运行linux系统,Qt创建工程编写主程序,可以使用OpenCV进行图像处理。

通过两个摄像头采集会图像,在LCD上进行显示,然后通过LCD上的按钮实现退出程序、保存图像、显示灰度三个功能。细节的东西就不说了,网上一抓一大把,直接上代码。。。

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>走----------------你<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

这是widget.h

点击(此处)折叠或打开

#ifndef WIDGET_H

#define WIDGET_H

#include <QWidget>

#include <QtGui>

#include <QDateTime>

#include <cv.h>

#include <highgui.h>

#include <cxcore.h>

#include "camthread.h"

#include "globaldata.h"

namespace Ui {

class Widget;

}

class Widget : public QWidget

{

Q_OBJECT

public:

explicit Widget(QWidget *parent = 0);

~Widget();

private slots:

void slotShowImage(int num, unsigned char* rgb);

void slotGrayImage();

void slotSaveImage();

private:

Ui::Widget *ui;

volatile bool m_saveFlag_L;

volatile bool m_saveFlag_R;

volatile bool m_grayFlag_L;

volatile bool m_grayFlag_R;

CamThread* m_cam_L;

CamThread* m_cam_R;

};

#endif // WIDGET_H

这是widget.cpp

点击(此处)折叠或打开

#include "widget.h"

#include "ui_widget.h"

Widget::Widget(QWidget *parent) :

QWidget(parent),

ui(new Ui::Widget)

{

ui->setupUi(this);

//flag set

m_saveFlag_L = 0;

m_saveFlag_R = 0;

m_grayFlag_L = 0;

m_grayFlag_R = 0;

//camera setup

m_cam_L = new CamThread("/dev/video1", LEFT_CAM);

if(m_cam_L->initCam() == -1)

printf("open %s error\n", m_cam_L->m_devName.toStdString().c_str());

connect(m_cam_L, SIGNAL(sigGotImage(int, unsigned char*)), this, SLOT(slotShowImage(int, unsigned char*)));

m_cam_R = new CamThread("/dev/video2", RIGHT_CAM);

if(m_cam_R->initCam() == -1)

printf("open %s error\n", m_cam_L->m_devName.toStdString().c_str());

connect(m_cam_R, SIGNAL(sigGotImage(int, unsigned char*)), this, SLOT(slotShowImage(int, unsigned char*)));

m_cam_L->start();

m_cam_R->start();

printf("thread start\n");

connect(ui->saveButton, SIGNAL(clicked()), this, SLOT(slotSaveImage()));

connect(ui->grayButton, SIGNAL(clicked()), this, SLOT(slotGrayImage()));

connect(ui->quitButton, SIGNAL(clicked()), qApp, SLOT(quit()));

}

Widget::~Widget()

{

delete ui;

free(m_cam_L);

free(m_cam_R);

}

void Widget::slotShowImage(int num, unsigned char* rgb)

{

///////////////left////////////////////////

if(num == LEFT_CAM)

{

cv::Mat mat_src_L(IMG_HEIGHT, IMG_WIDTH, CV_8UC3, rgb);

QImage qimage_L;

if(m_grayFlag_L == 1)

{ //gray

cv::Mat mat_gray_L(IMG_WIDTH, IMG_HEIGHT, CV_8UC1);

cv::cvtColor(mat_src_L, mat_gray_L, CV_BGR2GRAY);

qimage_L = QImage(mat_gray_L.cols, mat_gray_L.rows, QImage::Format_Indexed8); //Format_Indexed8

qimage_L.setColorCount(256);

for(int i = 0; i < 256; i++)

{

qimage_L.setColor(i, qRgb(i, i, i));

}

unsigned char* pSrc = mat_gray_L.data;

for(int row = 0; row < mat_gray_L.rows; row++)

{

unsigned char* pDest = qimage_L.scanLine(row);

memcpy(pDest, pSrc, mat_gray_L.cols);

pSrc += mat_gray_L.step;

}

}

else

{

qimage_L = QImage(mat_src_L.data, mat_src_L.cols, mat_src_L.rows, QImage::Format_RGB888);

}

ui->imgLabel_L->setPixmap(QPixmap::fromImage(qimage_L, Qt::AutoColor));

if(m_saveFlag_L == 1)

{ //save

QDateTime date_time_L = QDateTime::currentDateTime();

QString imgName_L = QString("/cam/%1-%2_%3:%4:%5_L.bmp")

.arg(date_time_L.date().month(), 2, 10, QChar('0'))

.arg(date_time_L.date().day(), 2, 10, QChar('0'))

.arg(date_time_L.time().hour(), 2, 10, QChar('0'))

.arg(date_time_L.time().minute(), 2, 10, QChar('0'))

.arg(date_time_L.time().second(), 2, 10, QChar('0'));

qimage_L.save(imgName_L);

imgName_L = imgName_L + " saved.";

ui->stateLabel_L->setText(imgName_L );

m_saveFlag_L = 0;

}

}

///////////////right////////////////////////

if(num == RIGHT_CAM)

{

cv::Mat mat_src_R(IMG_HEIGHT, IMG_WIDTH, CV_8UC3, rgb);

QImage qimage_R;

if(m_grayFlag_R == 1)

{ //gray

cv::Mat mat_gray_R(IMG_WIDTH, IMG_HEIGHT, CV_8UC1);

cv::cvtColor(mat_src_R, mat_gray_R, CV_BGR2GRAY);

qimage_R = QImage(mat_gray_R.cols, mat_gray_R.rows, QImage::Format_Indexed8); //Format_Indexed8

qimage_R.setColorCount(256);

for(int i = 0; i < 256; i++)

{

qimage_R.setColor(i, qRgb(i, i, i));

}

unsigned char* pSrc = mat_gray_R.data;

for(int row = 0; row < mat_gray_R.rows; row++)

{

unsigned char* pDest = qimage_R.scanLine(row);

memcpy(pDest, pSrc, mat_gray_R.cols);

pSrc += mat_gray_R.step;

}

}

else

{

qimage_R = QImage(mat_src_R.data, mat_src_R.cols, mat_src_R.rows, QImage::Format_RGB888);

}

ui->imgLabel_R->setPixmap(QPixmap::fromImage(qimage_R, Qt::AutoColor));

if(m_saveFlag_R == 1)

{ //save

QDateTime date_time_R = QDateTime::currentDateTime();

QString imgName_R = QString("/cam/%1-%2_%3:%4:%5_R.bmp")

.arg(date_time_R.date().month(), 2, 10, QChar('0'))

.arg(date_time_R.date().day(), 2, 10, QChar('0'))

.arg(date_time_R.time().hour(), 2, 10, QChar('0'))

.arg(date_time_R.time().minute(), 2, 10, QChar('0'))

.arg(date_time_R.time().second(), 2, 10, QChar('0'));

qimage_R.save(imgName_R);

imgName_R = imgName_R + " saved.";

ui->stateLabel_R->setText(imgName_R);

m_saveFlag_R = 0;

}

}

}

void Widget::slotSaveImage()

{

m_saveFlag_L = 1;

m_saveFlag_R = 1;

}

void Widget::slotGrayImage()

{

if(m_grayFlag_L == 0)

{

//change state

ui->garyLabel->setText("gray");

m_grayFlag_L =1;

m_grayFlag_R =1;

return;

}

if(m_grayFlag_L == 1)

{

//change state

ui->garyLabel->setText("orig");

m_grayFlag_L = 0;

m_grayFlag_R = 0;

return;

}

}

上面这的代码中使用了一个自定义的CamThread类,这是为了实现对两个摄像头进行多线操作而创建的类。

值得注意的是在connect(m_cam_X, SIGNAL(sigGotImage(int, unsigned char*)), this, SLOT(slotShowImage(int, unsigned char*)));中,子线程中图像数据是如何被传入主线程中的,这里实验了很久才成功。

还有将OpenCV中的图像数据进行灰度转化时,长x宽 和 宽x长 似乎有变化,这里感谢佩佩童鞋帮我找出了这个问题。

这是camthread.h

点击(此处)折叠或打开

/* 相机线程类

* 完成对相机的所用的操作,包括控制,采集

* 最后发送数据到主线程

*/

#ifndef CAMTHREAD_H

#define CAMTHREAD_H

#include <string.h>

#include <stdlib.h>

#include <errno.h>

#include <fcntl.h>

#include <unistd.h>

#include <sys/ioctl.h>

#include <sys/mman.h>

#include <asm/types.h>

#include <linux/videodev2.h>

#include <QString>

#include <QObject>

#include <QThread>

#include <QFile>

#include <QtGui>

#include <stdio.h>

#include "globaldata.h"

#define PIXE_FORMAT V4L2_PIX_FMT_YUYV//V4L2_PIX_FMT_MJPEG

#define CLEAR(x) memset(&(x), 0, sizeof(x))

class CamThread : public QThread

{

Q_OBJECT

public:

CamThread(QString devName, int num);

~CamThread();

int initCam(); //初始化设备

private:

int openDevice();

int initDevice();

int startCapturing();

//int getFrame(void** frameBuf, size_t* len);

int getFrame();

int ungetFrame();

int stopCapturing();

int uninitDevice();

int closeDevice();

int initMmap();

//MJPEG

//int is_huffman(unsigned char *buf);

//YUYV

int convertYUVtoRGBpixel(int y, int u, int v);

int convertYUVtoRGBbuffer(unsigned char* yuv, unsigned char* rgb, unsigned int w, unsigned int h);

protected:

void run();

signals:

//void sigGotImage(int num, unsigned char* &rgb);

void sigGotImage(int num, unsigned char* rgb);

public:

struct camBuffer //定义一个结构体来映射每个缓存帧

{

void* start;

size_t length;

};

QString m_devName; //设备文件名

int m_camNum; //用于区别左右摄像头

int m_fd; //文件描述符

camBuffer* m_buffers; //数据缓存

unsigned int m_nbuffers; //记录分配内存数量

int m_index;

unsigned char* m_yuv;

unsigned char* m_rgb;

};

#endif // CAMTHREAD_H

这是camthread.cpp

点击(此处)折叠或打开

#include "camthread.h"

CamThread::CamThread(QString devName, int num)

{

m_devName = devName; //设备文件名

m_camNum = num; //用于区别左右摄像头

m_fd = -1; //文件描述符

m_buffers = NULL; //数据缓存

m_nbuffers = 0; //记录分配内存数量

m_yuv = NULL;

m_rgb = NULL;

//m_qimage = NULL;

}

CamThread::~CamThread()

{

}

//////////////////////////图像获取///////////////////////////////////////////////////

int CamThread::initCam()

{

if(openDevice() == -1)

{

printf("open %s error\n", m_devName.toStdString().c_str());

closeDevice();

return -1;

}

if(initDevice() == -1)

{

printf("init %s error\n", m_devName.toStdString().c_str());

closeDevice();

return -1;

}

if(startCapturing() == -1)

{

printf("startCapturing %s error\n", m_devName.toStdString().c_str());

closeDevice();

return -1;

}

return 0;

}

void CamThread::run()

{

while(1)

{

getFrame();

//printf("getFrame()\n");

convertYUVtoRGBbuffer(m_yuv, m_rgb, IMG_WIDTH, IMG_HEIGHT);

//printf("YUV2RGB\n");

emit sigGotImage(m_camNum, m_rgb);

//printf("emit signal\n");

ungetFrame();

msleep(30);

}

}

////////////////////////摄像头具体操作/////////////////////////////////////////////////////

int CamThread::openDevice()

{

m_fd = open(m_devName.toStdString().c_str(), O_RDWR, 0);

if(m_fd == -1)

{

printf("open: %s\n", strerror(errno));

return -1;

}

return 0;

}

int CamThread::initDevice()

{

//查询设备属性

v4l2_capability cap;

if(ioctl(m_fd, VIDIOC_QUERYCAP, &cap) == -1)

{

if(EINVAL == errno)

printf("%s is no v4l2 device\n", (char*)&m_devName);

else

printf("VIDIOC_QUERYCAP: %s\n", strerror(errno));

return -1;

}

if(!(cap.capabilities & V4L2_CAP_VIDEO_CAPTURE))

{

printf("%s is not video capture device\n", (char*)&m_devName);

return -1;

}

if(!(cap.capabilities & V4L2_CAP_STREAMING))

{

printf("%s dose not support streaming\n", (char*)&m_devName);

return -1;

}

if(!(cap.capabilities & V4L2_CAP_READWRITE))

{

printf("%s dose not support read.write\n", m_devName.toStdString().c_str());

//return -1;

}

//图像的缩放

v4l2_cropcap cropcap;

v4l2_crop crop;

CLEAR(cropcap);

cropcap.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if(ioctl(m_fd, VIDIOC_CROPCAP, &cropcap) == 0)

{

CLEAR(crop);

crop.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

crop.c = cropcap.defrect;

if(ioctl(m_fd, VIDIOC_S_CROP, &crop) == -1)

{

if(EINVAL == errno)

{

printf("VIDIOC_S_CROP not supported\n");

}

else

{

printf("VIDIOC_S_CROP: %s\n", strerror(errno));

return -1;

}

}

}

else

{

printf("VIDIOC_CROPCAP: %s\n", strerror(errno));

return -1;

}

//查询所有支持格式

v4l2_fmtdesc fmtdesc;

CLEAR(fmtdesc);

fmtdesc.index = 0;

fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

printf("support format:\n");

while(ioctl(m_fd, VIDIOC_ENUM_FMT, &fmtdesc) != -1)

{

printf("\t%d.%s\n", fmtdesc.index+1, fmtdesc.description);

fmtdesc.index++;

}

//设置格式

v4l2_format fmt;

CLEAR(fmt);

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

fmt.fmt.pix.width = IMG_WIDTH;

fmt.fmt.pix.height = IMG_HEIGHT;

fmt.fmt.pix.pixelformat = PIXE_FORMAT;

fmt.fmt.pix.field = V4L2_FIELD_INTERLACED;

if(ioctl(m_fd, VIDIOC_S_FMT, &fmt) == -1)

{

printf("VIDIOC_S_FMT: %s\n", strerror(errno));

return -1;

}

//mmap

if(initMmap() == -1)

{

printf("init mmap error \n");

return -1;

}

//m_rgb m_qimage

m_yuv = (unsigned char*)malloc(IMG_WIDTH*IMG_HEIGHT*3*sizeof(char));

m_rgb = (unsigned char*)malloc(IMG_WIDTH*IMG_HEIGHT*3*sizeof(char));

return 0;

}

int CamThread::startCapturing()

{

//把四个缓冲帧放入队列,并启动数据流

for(unsigned int i = 0; i < m_nbuffers; ++i)

{

v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = i;

if(ioctl(m_fd, VIDIOC_QBUF, &buf) == -1) //缓冲帧放入队列

{

printf("VIDIOC_QBUF %s\n",strerror(errno));

return -1;

}

}

v4l2_buf_type type;

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if(ioctl(m_fd, VIDIOC_STREAMON, &type) == -1)

{

printf("VIDIOC_STREAMON %s\n", strerror(errno));

return -1;

}

return 0;

}

//int CamThread::getFrame(void** frameBuf, size_t* len)

int CamThread::getFrame()

{

//从缓冲区取出一帧,并保存

v4l2_buffer queue_buf;

CLEAR(queue_buf);

queue_buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

queue_buf.memory = V4L2_MEMORY_MMAP;

if(ioctl(m_fd, VIDIOC_DQBUF, &queue_buf) == -1)

{

switch(errno)

{

case EAGAIN:

return -1;

case EIO:

return -1;

default:

printf("VIDIOC_DQUBUF: %s\n", strerror(errno));

return -1;

}

}

//printf("DQBUF done\n");

//*frameBuf = m_buffers[queue_buf.index].start;

//*len = m_buffers[queue_buf.index].length; //queue_buf.bytesused;

m_index = queue_buf.index; //buffer的序号

//YUYV

memcpy(m_yuv, m_buffers[queue_buf.index].start, m_buffers[queue_buf.index].length);

//printf("memcpy done\n");

/*

//MJPEG

memcpy(m_tmpBuffer, m_buffers[queue_buf.index].start, queue_buf.bytesused );

m_fileFd = fopen("cam.jpg", "wb");

unsigned char *ptdeb, *ptcur = m_tmpBuffer;

int sizein;

if(!is_huffman(m_tmpBuffer))

{

ptdeb = ptcur = m_tmpBuffer;

while (((ptcur[0] << 8) | ptcur[1]) != 0xffc0)

ptcur++;

sizein = ptcur - ptdeb;

fwrite(m_tmpBuffer, sizein, 1, m_fileFd);

fwrite(dht_data, DHT_SIZE, 1, m_fileFd);

fwrite(ptcur, queue_buf.bytesused-sizein, 1, m_fileFd);

}

else

{

fwrite(ptcur, queue_buf.bytesused, 1, m_fileFd);

}

fclose(m_fileFd);

*/

return 0;

}

int CamThread::ungetFrame()

{

if(m_index != -1)

{

//将取出的帧放回缓冲区(队列)

v4l2_buffer queue_buf;

CLEAR(queue_buf);

queue_buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

queue_buf.memory = V4L2_MEMORY_MMAP;

queue_buf.index = m_index;

if(ioctl(m_fd, VIDIOC_QBUF, &queue_buf) == -1)

{

printf("VIDIOC_QBUF: %s\n", strerror(errno));

return -1;

}

return 0;

}

printf("m_index = -1\n");

return -1;

}

int CamThread::stopCapturing()

{

v4l2_buf_type type;

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if(ioctl(m_fd, VIDIOC_STREAMOFF, &type) == -1)

{

printf("VIDIOC_STREAMOFF %s\n", strerror(errno));

return -1;

}

return 0;

}

int CamThread::uninitDevice()

{

for(unsigned int i = 0; i < m_nbuffers; ++i)

{

if(munmap(m_buffers[i].start, m_buffers[i].length))

{

printf("munmap %s\n", strerror(errno));

return -1;

}

}

free(m_buffers);

free(m_yuv);

free(m_rgb);

return 0;

}

int CamThread::closeDevice()

{

if(close(m_fd) == -1)

{

printf("close: %s", strerror(errno));

return -1;

}

return 0;

}

int CamThread::initMmap()

{

//申请缓冲区 ?申请设备中的缓存帧

v4l2_requestbuffers reqbuf;

CLEAR(reqbuf);

reqbuf.count = 4; //缓冲区内缓冲帧数目

reqbuf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //缓存帧数据格式

reqbuf.memory = V4L2_MEMORY_MMAP; //I/O方式,内存映射的方式

if(ioctl(m_fd, VIDIOC_REQBUFS, &reqbuf) == -1)

{

if(EINVAL == errno)

printf("%s does not support memory mapping\n", (char*)&m_devName);

else

printf("VIDIOC_REQUBUF %s\n", strerror(errno));

return -1;

}

if(reqbuf.count < 2)

{

printf("insufficient buffer memory on %s\n", (char*)&m_devName);

return -1;

}

//映射缓存区帧

m_buffers = (camBuffer*)calloc(reqbuf.count, sizeof(*m_buffers));

if(!m_buffers)

{

printf("out of memory\n");

return -1;

}

for(m_nbuffers = 0; m_nbuffers < reqbuf.count; ++m_nbuffers)

{

v4l2_buffer buf; //缓存帧

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = m_nbuffers; //buffer的序号

// 查询序号为n_buffers 的缓冲区,得到其起始物理地址和大小

if(ioctl(m_fd, VIDIOC_QUERYBUF, &buf) == -1) //获取(查询)这些缓存帧的地址、长度

{

printf("VIDIOC_QUERYBUF: %s\n", strerror(errno));

return -1;

}

m_buffers[m_nbuffers].length = buf.length; //设置

//将申请到的缓存帧映射到应用程序,用m_buffers指针记录

m_buffers[m_nbuffers].start = mmap(NULL,

buf.length,

PROT_READ | PROT_WRITE,

MAP_SHARED,

m_fd,

buf.m.offset);

if(m_buffers[m_nbuffers].start == MAP_FAILED)

{

printf("mmap %s\n", strerror(errno));

return -1;

}

}

return 0;

}

//////////////////////MJPEG/////////////////////////////////

/*

int CamThread::is_huffman(unsigned char *buf)

{

unsigned char *ptbuf;

int i = 0;

ptbuf = buf;

while (((ptbuf[0] << 8) | ptbuf[1]) != 0xffda)

{

if(i++ > 2048)

return 0;

if(((ptbuf[0] << 8) | ptbuf[1]) == 0xffc4)

return 1;

ptbuf++;

}

return 0;

}

*/

//////////////////////YUYV图像转换////////////////////////////////////////

int CamThread::convertYUVtoRGBpixel(int y, int u, int v)

{

unsigned int pixel_32 = 0;

unsigned char *pixel = (unsigned char *)&pixel_32;

int r, g, b;

r = y + (1.370705 * (v-128));

g = y - (0.698001 * (v-128)) - (0.337633 * (u-128));

b = y + (1.732446 * (u-128));

if(r > 255)

r = 255;

if(g > 255)

g = 255;

if(b > 255)

b = 255;

if(r < 0)

r = 0;

if(g < 0)

g = 0;

if(b < 0)

b = 0;

pixel[0] = r*220/256;

pixel[1] = g*220/256;

pixel[2] = b*220/256;

return pixel_32;

}

int CamThread::convertYUVtoRGBbuffer(unsigned char* yuv, unsigned char* rgb, unsigned int w, unsigned int h)

{

unsigned int in, out = 0;

unsigned int pixel_16;

unsigned char pixel_24[3];

unsigned int pixel_32;

int y0, u, y1, v;

for(in = 0; in < w*h*2; in+=4)

{

pixel_16 = yuv[in+3]<<24 |

yuv[in+2]<<16 |

yuv[in+1]<<8 |

yuv[in+0];

y0 = (pixel_16 & 0x000000ff);

u = (pixel_16 & 0x0000ff00) >> 8;

y1 = (pixel_16 & 0x00ff0000) >> 16;

v = (pixel_16 & 0xff000000) >> 24;

pixel_32 = convertYUVtoRGBpixel(y0, u, v);

pixel_24[0] = (pixel_32 & 0x000000ff);

pixel_24[1] = (pixel_32 & 0x0000ff00) >> 8;

pixel_24[2] = (pixel_32 & 0x00ff0000) >> 16;

rgb[out++] = pixel_24[0];

rgb[out++] = pixel_24[1];

rgb[out++] = pixel_24[2];

pixel_32 = convertYUVtoRGBpixel(y1, u, v);

pixel_24[0] = (pixel_32 & 0x000000ff);

pixel_24[1] = (pixel_32 & 0x0000ff00) >> 8;

pixel_24[2] = (pixel_32 & 0x00ff0000) >> 16;

rgb[out++] = pixel_24[0];

rgb[out++] = pixel_24[1];

rgb[out++] = pixel_24[2];

}

return 0;

}

CamThread类是通过V4L2来实现对摄像头的操作,从摄像头中获取到YUV格式的数据后,还要将其转换为RGB格式的,这里看了关于YUV与RGB颜色空间之间关系的论文,他俩之间是可以相互转换的,最后参考了网上一位大神的代码,时间久远查找不便,不能给出出处请见谅。发现论文中的参数和大神代码中的参数是有些微不同的,但大神的代码运行良好而且参数差值很小,因此没有修改。

至于main.cpp就不用在赘述了。。。

附上运行图

个人水平有限难免有错误之处,希望不吝敕教

未能详尽解说,之后会再进行补充

本文来自博客园,作者:Ryan,转载请注明原文链接:https://www.cnblogs.com/Ryan9399/p/18719524

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· 没有Manus邀请码?试试免邀请码的MGX或者开源的OpenManus吧

· 【自荐】一款简洁、开源的在线白板工具 Drawnix

· 园子的第一款AI主题卫衣上架——"HELLO! HOW CAN I ASSIST YOU TODAY

· Docker 太简单,K8s 太复杂?w7panel 让容器管理更轻松!