Tensorflow2 (pre-course) --- 7.1 CIFAR-10 classification, layer-based approach

I. Summary

One-sentence summary:

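As the results show, a fully connected (dense) neural network reaches only about 50% accuracy on CIFAR-10 (validation accuracy stays around 0.49 to 0.51 in the training log below). A convolutional neural network should do considerably better.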

1. How do you handle 3-channel RGB images?

Flatten them the same way: model.add(tf.keras.layers.Flatten(input_shape=(32,32,3)))

# Flatten each image of the (50000, 32, 32, 3) training data into one dimension

# i.e. turn every 32*32*3 image into a vector of 3072 values

model.add(tf.keras.layers.Flatten(input_shape=(32,32,3)))
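What `Flatten` does to a batch of RGB images can be reproduced with a NumPy reshape. This is a minimal sketch on random stand-in data (the `images` array is hypothetical, not the real CIFAR-10 tensors), assuming Keras' default `channels_last` layout, where `Flatten` keeps the batch axis and merges the remaining axes in row-major order.

```python
import numpy as np

# Hypothetical stand-in batch: 4 RGB images, shape (batch, height, width, channels)
images = np.random.rand(4, 32, 32, 3).astype("float32")

# Keep the batch axis, merge height, width and channels into one axis
flat = images.reshape(images.shape[0], -1)

print(flat.shape)  # 32 * 32 * 3 = 3072 values per image

# Row-major order: the first three values are the R, G, B of pixel (0, 0)
assert np.array_equal(flat[0, :3], images[0, 0, 0, :])
```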

2. How can a neural network's accuracy be improved?

a. Add more layers

b. Increase the number of nodes (units) per layer
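Both levers raise the number of trainable weights. The sketch below counts them for a stack of `Dense` layers, using the fact that a `Dense` layer with `n_in` inputs and `n_out` units has `n_in * n_out` weights plus `n_out` biases. The baseline sizes come from the model summary in section II; the "deeper" and "wider" variants and the helper `dense_param_count` are hypothetical examples. More parameters means more capacity, not guaranteed higher accuracy, so any change still has to be checked against validation accuracy.

```python
def dense_param_count(layer_sizes):
    """Trainable parameters of a Dense stack; layer_sizes[0] is the flattened input size."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix + bias vector
    return total

baseline = [3072, 256, 128, 64, 32, 10]     # architecture from the model summary
deeper = [3072, 256, 256, 128, 64, 32, 10]  # hypothetical: one extra hidden layer
wider = [3072, 512, 256, 128, 64, 10]       # hypothetical: same depth, more units per layer

for name, sizes in [("baseline", baseline), ("deeper", deeper), ("wider", wider)]:
    print(name, dense_param_count(sizes))
```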

II. CIFAR-10 classification, layer-based approach


Steps

1. Load the dataset

2. Split the dataset (into a training set and a test set)

3. Build the model

4. Train the model

5. Evaluate the model
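The five steps can be sketched end to end as below, assuming TensorFlow 2.x. The post does not show its code, so the following are assumptions rather than the author's choices: pixel scaling to 0-1, ReLU hidden activations, the Adam optimizer, and using the test set as validation data. The layer sizes follow the model summary further down, `metrics=["acc"]` matches the metric name in the training log, and the default batch size of 32 matches the log's 1563 steps per epoch. Calling `train_and_evaluate()` downloads CIFAR-10 on first use.

```python
import tensorflow as tf

def load_and_preprocess():
    # Steps 1-2: CIFAR-10 already ships split into 50000 training and 10000 test images
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()
    # Assumption: scale pixel values from 0-255 to 0-1
    x_train = x_train.astype("float32") / 255.0
    x_test = x_test.astype("float32") / 255.0
    # Labels arrive with shape (N, 1); one-hot encode them into shape (N, 10)
    y_train = tf.one_hot(y_train.ravel(), depth=10)
    y_test = tf.one_hot(y_test.ravel(), depth=10)
    return (x_train, y_train), (x_test, y_test)

def build_model():
    # Step 3: 3072 -> 256 -> 128 -> 64 -> 32 -> 10, as in the model summary
    # Assumption: ReLU hidden layers and a softmax output
    return tf.keras.Sequential([
        tf.keras.Input(shape=(32, 32, 3)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(256, activation="relu"),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(32, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),
    ])

def train_and_evaluate(epochs=50):
    (x_train, y_train), (x_test, y_test) = load_and_preprocess()
    model = build_model()
    # Assumption: Adam optimizer; one-hot targets need categorical_crossentropy
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["acc"])
    # Step 4: batch_size=32 gives ceil(50000 / 32) = 1563 steps per epoch
    history = model.fit(x_train, y_train, epochs=epochs, batch_size=32,
                        validation_data=(x_test, y_test))
    # Step 5: compare predicted class indices with the true class indices
    pred = tf.argmax(model.predict(x_test), axis=1)
    true = tf.argmax(y_test, axis=1)
    accuracy = tf.reduce_mean(tf.cast(tf.equal(pred, true), tf.float32))
    return model, history, float(accuracy)
```

Usage: `model, history, acc = train_and_evaluate()`. Keeping `build_model()` separate from the data loading lets you inspect the architecture (for example with `build_model().summary()`) without downloading anything.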

Task

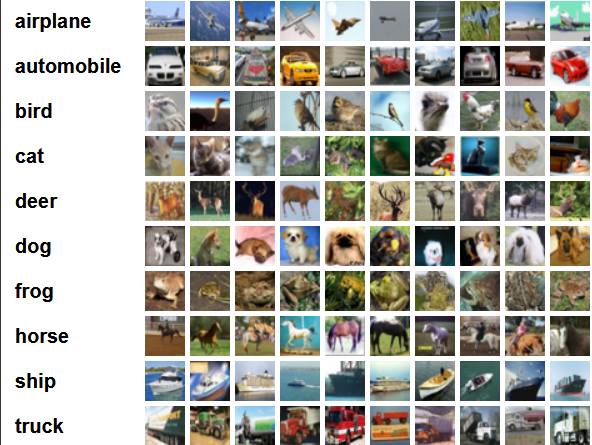

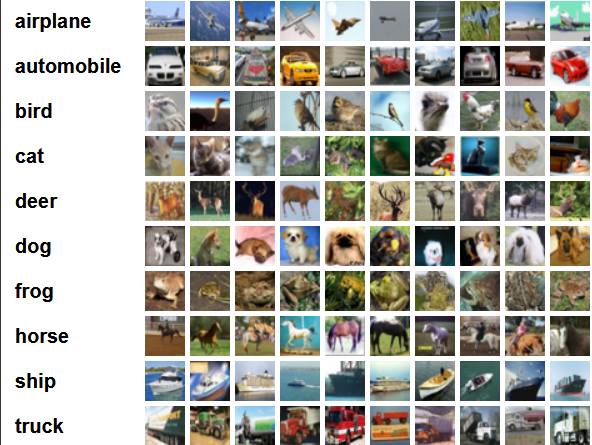

CIFAR-10 (object classification)

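The dataset contains 60,000 color images of 32*32 pixels in 10 classes, with 6,000 images per class. 50,000 images are used for training and are divided into 5 training batches of 10,000 images each; the other 10,000 are used for testing and form a single test batch. The test batch contains exactly 1,000 randomly selected images from each of the 10 classes. The remaining images are shuffled to form the training batches. Note that an individual training batch does not necessarily contain the same number of images from every class, but across all training batches each class has exactly 5,000 images.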
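The split described above can be simulated on labels alone. This NumPy sketch uses hypothetical labels and a fixed seed; it illustrates the description, not the dataset authors' actual script. It shows why the test batch is exactly balanced while a single training batch usually is not, even though the training set as a whole has 5,000 images per class.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical labels for the full dataset: 10 classes x 6000 images
labels = np.repeat(np.arange(10), 6000)
indices = np.arange(labels.size)

# Test batch: exactly 1000 randomly chosen images from each class
test_idx = np.concatenate([
    rng.choice(indices[labels == c], size=1000, replace=False) for c in range(10)
])

# The remaining 50000 images are shuffled and cut into 5 training batches
rest = np.setdiff1d(indices, test_idx)
train_batches = np.split(rng.permutation(rest), 5)

print(np.bincount(labels[test_idx], minlength=10))          # 1000 per class
print(np.bincount(labels[train_batches[0]], minlength=10))  # counts in one training batch
```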

The dataset can be loaded directly from TensorFlow's built-in datasets (tf.keras.datasets.cifar10).

(50000, 32, 32, 3) (50000, 1)

[[3]

[8]

[8]

...

[5]

[1]

[7]]
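The labels come back as an (N, 1) column of integers from 0 to 9. The sketch below flattens the six values visible in the printout above (the elided middle is not reconstructed) and maps them to the standard CIFAR-10 class names; `CLASS_NAMES` is defined here for illustration and is not part of the original code.

```python
import numpy as np

# Standard CIFAR-10 class names, in label-index order 0-9
CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

# The visible labels from the printout, in their (N, 1) column shape
labels = np.array([[3], [8], [8], [5], [1], [7]])

flat_labels = labels.ravel()  # (N, 1) -> (N,)
names = [CLASS_NAMES[i] for i in flat_labels]
print(flat_labels.shape, names)
```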

What kind of model should we build?

The input is a 32*32*3 image and the output is a single label, so this is a 10-class classification problem.

Should the labels be one-hot encoded? If they are, each label becomes a 10-dimensional vector, matching the model's 10 outputs.
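Either choice works with a 10-unit softmax output: one-hot targets pair with `categorical_crossentropy`, while integer labels can be kept as-is with `sparse_categorical_crossentropy`. Below is a NumPy sketch of one-hot encoding, using the visible labels from the printout as sample data; TensorFlow's `tf.one_hot(labels, depth=10)` produces the same matrix as a tensor.

```python
import numpy as np

labels = np.array([3, 8, 8, 5, 1, 7])  # integer class labels, shape (N,)

# Row i of the 10x10 identity matrix is the one-hot vector for class i
one_hot = np.eye(10, dtype="float32")[labels]  # shape (N, 10)

# argmax recovers the integer labels; predictions are decoded the same way
assert np.array_equal(one_hot.argmax(axis=1), labels)
print(one_hot[1])
```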

That is, 32*32*3 -> n -> 10. One option to try is 3072->1024->256->128->64->32->10; the model actually trained below uses 3072->256->128->64->32->10.
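Making the hidden layer sizes a parameter of the model builder makes it easy to compare the suggested stack with the smaller one that was actually trained; the length of the list is the depth and its entries are the widths. A sketch assuming TensorFlow 2.x, where `build_mlp` is a hypothetical helper and the ReLU hidden activations are an assumption:

```python
import tensorflow as tf

def build_mlp(hidden_units, num_classes=10):
    """Flatten -> one Dense layer per entry of hidden_units -> softmax output."""
    layers = [tf.keras.Input(shape=(32, 32, 3)), tf.keras.layers.Flatten()]
    for units in hidden_units:
        # Assumption: ReLU activation in every hidden layer
        layers.append(tf.keras.layers.Dense(units, activation="relu"))
    layers.append(tf.keras.layers.Dense(num_classes, activation="softmax"))
    return tf.keras.Sequential(layers)

suggested = build_mlp([1024, 256, 128, 64, 32])  # 3072->1024->256->128->64->32->10
used = build_mlp([256, 128, 64, 32])             # 3072->256->128->64->32->10
print(suggested.count_params(), used.count_params())
```

The extra 1024-unit layer roughly quadruples the parameter count, almost all of it in the first Dense layer, which has to connect every one of the 3072 inputs.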

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| flatten (Flatten) | (None, 3072) | 0 |
| dense (Dense) | (None, 256) | 786688 |
| dense_1 (Dense) | (None, 128) | 32896 |
| dense_2 (Dense) | (None, 64) | 8256 |
| dense_3 (Dense) | (None, 32) | 2080 |
| dense_4 (Dense) | (None, 10) | 330 |

Total params: 830,250

Trainable params: 830,250

Non-trainable params: 0
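The Param # column follows from each Dense layer having one weight per input-output pair plus one bias per unit; Flatten only reshapes, so it has no parameters:

$$
\begin{aligned}
P_{\text{Dense}} &= n_{\text{in}}\, n_{\text{out}} + n_{\text{out}} \\
\text{dense} &: 3072 \cdot 256 + 256 = 786688 \\
\text{dense\_1} &: 256 \cdot 128 + 128 = 32896 \\
\text{dense\_2} &: 128 \cdot 64 + 64 = 8256 \\
\text{dense\_3} &: 64 \cdot 32 + 32 = 2080 \\
\text{dense\_4} &: 32 \cdot 10 + 10 = 330 \\
\text{total} &: 786688 + 32896 + 8256 + 2080 + 330 = 830250
\end{aligned}
$$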

Epoch 1/50

1563/1563 [==============================] - 6s 4ms/step - loss: 1.9072 - acc: 0.3012 - val_loss: 1.7286 - val_acc: 0.3780

Epoch 2/50

1563/1563 [==============================] - 6s 4ms/step - loss: 1.7166 - acc: 0.3824 - val_loss: 1.6491 - val_acc: 0.4011

Epoch 3/50

1563/1563 [==============================] - 6s 4ms/step - loss: 1.6342 - acc: 0.4131 - val_loss: 1.6009 - val_acc: 0.4249

Epoch 4/50

1563/1563 [==============================] - 7s 5ms/step - loss: 1.5760 - acc: 0.4325 - val_loss: 1.5339 - val_acc: 0.4532

Epoch 5/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.5387 - acc: 0.4476 - val_loss: 1.5336 - val_acc: 0.4525

Epoch 6/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.5044 - acc: 0.4603 - val_loss: 1.5341 - val_acc: 0.4531

Epoch 7/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4788 - acc: 0.4724 - val_loss: 1.4793 - val_acc: 0.4754

Epoch 8/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4557 - acc: 0.4815 - val_loss: 1.4708 - val_acc: 0.4715

Epoch 9/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4345 - acc: 0.4883 - val_loss: 1.4712 - val_acc: 0.4776

Epoch 10/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4201 - acc: 0.4931 - val_loss: 1.4784 - val_acc: 0.4718

Epoch 11/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3992 - acc: 0.5003 - val_loss: 1.4802 - val_acc: 0.4699

Epoch 12/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3818 - acc: 0.5043 - val_loss: 1.4721 - val_acc: 0.4841

Epoch 13/50

1563/1563 [==============================] - 7s 5ms/step - loss: 1.3723 - acc: 0.5082 - val_loss: 1.4530 - val_acc: 0.4930

Epoch 14/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3573 - acc: 0.5141 - val_loss: 1.4627 - val_acc: 0.4802

Epoch 15/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3416 - acc: 0.5212 - val_loss: 1.4565 - val_acc: 0.4872

Epoch 16/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3314 - acc: 0.5228 - val_loss: 1.4438 - val_acc: 0.4914

Epoch 17/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3201 - acc: 0.5274 - val_loss: 1.4539 - val_acc: 0.4972

Epoch 18/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3068 - acc: 0.5323 - val_loss: 1.4281 - val_acc: 0.4979

Epoch 19/50

1563/1563 [==============================] - 7s 5ms/step - loss: 1.2945 - acc: 0.5356 - val_loss: 1.4282 - val_acc: 0.4998

Epoch 20/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2850 - acc: 0.5411 - val_loss: 1.4722 - val_acc: 0.4866

Epoch 21/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2758 - acc: 0.5431 - val_loss: 1.4234 - val_acc: 0.5056

Epoch 22/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2678 - acc: 0.5473 - val_loss: 1.4430 - val_acc: 0.4976

Epoch 23/50

1563/1563 [==============================] - 7s 5ms/step - loss: 1.2570 - acc: 0.5504 - val_loss: 1.4371 - val_acc: 0.5049

Epoch 24/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2479 - acc: 0.5545 - val_loss: 1.4330 - val_acc: 0.4973

Epoch 25/50

1563/1563 [==============================] - 7s 5ms/step - loss: 1.2376 - acc: 0.5569 - val_loss: 1.4484 - val_acc: 0.4962

Epoch 26/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2319 - acc: 0.5582 - val_loss: 1.4493 - val_acc: 0.4992

Epoch 27/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2268 - acc: 0.5599 - val_loss: 1.4509 - val_acc: 0.4970

Epoch 28/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2151 - acc: 0.5627 - val_loss: 1.4624 - val_acc: 0.4929

Epoch 29/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2167 - acc: 0.5638 - val_loss: 1.4369 - val_acc: 0.5055

Epoch 30/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2028 - acc: 0.5683 - val_loss: 1.4270 - val_acc: 0.5103

Epoch 31/50

1563/1563 [==============================] - 7s 5ms/step - loss: 1.1950 - acc: 0.5713 - val_loss: 1.4530 - val_acc: 0.5043

Epoch 32/50

1563/1563 [==============================] - 7s 5ms/step - loss: 1.1897 - acc: 0.5735 - val_loss: 1.4564 - val_acc: 0.5033

Epoch 33/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1838 - acc: 0.5766 - val_loss: 1.4682 - val_acc: 0.4981

Epoch 34/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1748 - acc: 0.5783 - val_loss: 1.5145 - val_acc: 0.4862

Epoch 35/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1732 - acc: 0.5806 - val_loss: 1.4676 - val_acc: 0.4941

Epoch 36/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1645 - acc: 0.5827 - val_loss: 1.4899 - val_acc: 0.4923

Epoch 37/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1583 - acc: 0.5830 - val_loss: 1.4780 - val_acc: 0.5017

Epoch 38/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1580 - acc: 0.5825 - val_loss: 1.4808 - val_acc: 0.4928

Epoch 39/50

1563/1563 [==============================] - 7s 5ms/step - loss: 1.1505 - acc: 0.5866 - val_loss: 1.4767 - val_acc: 0.5002

Epoch 40/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1446 - acc: 0.5881 - val_loss: 1.4793 - val_acc: 0.4922

Epoch 41/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1426 - acc: 0.5901 - val_loss: 1.5011 - val_acc: 0.4976

Epoch 42/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1357 - acc: 0.5936 - val_loss: 1.4820 - val_acc: 0.5033

Epoch 43/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1299 - acc: 0.5922 - val_loss: 1.5110 - val_acc: 0.4964

Epoch 44/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1244 - acc: 0.5957 - val_loss: 1.5044 - val_acc: 0.4945

Epoch 45/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1231 - acc: 0.5957 - val_loss: 1.4931 - val_acc: 0.4981

Epoch 46/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1148 - acc: 0.6001 - val_loss: 1.4952 - val_acc: 0.4983

Epoch 47/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1112 - acc: 0.6006 - val_loss: 1.5073 - val_acc: 0.4976

Epoch 48/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1085 - acc: 0.6033 - val_loss: 1.5084 - val_acc: 0.5002

Epoch 49/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0985 - acc: 0.6059 - val_loss: 1.5110 - val_acc: 0.5010

Epoch 50/50

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0957 - acc: 0.6073 - val_loss: 1.5539 - val_acc: 0.4895
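The log shows the pattern behind the roughly 50% figure: training accuracy keeps climbing to 0.6073 by epoch 50, while validation accuracy plateaus around 0.50 and validation loss bottoms out mid-run and then drifts upward, a typical sign of overfitting. The plain-Python sketch below only reads the numbers off the log above; in a real run the same lists should be available as `history.history["val_acc"]` and `history.history["val_loss"]` when the model is compiled with `metrics=["acc"]`.

```python
# val_acc and val_loss for epochs 1-50, copied from the training log above
val_acc = [0.3780, 0.4011, 0.4249, 0.4532, 0.4525, 0.4531, 0.4754, 0.4715, 0.4776, 0.4718,
           0.4699, 0.4841, 0.4930, 0.4802, 0.4872, 0.4914, 0.4972, 0.4979, 0.4998, 0.4866,
           0.5056, 0.4976, 0.5049, 0.4973, 0.4962, 0.4992, 0.4970, 0.4929, 0.5055, 0.5103,
           0.5043, 0.5033, 0.4981, 0.4862, 0.4941, 0.4923, 0.5017, 0.4928, 0.5002, 0.4922,
           0.4976, 0.5033, 0.4964, 0.4945, 0.4981, 0.4983, 0.4976, 0.5002, 0.5010, 0.4895]
val_loss = [1.7286, 1.6491, 1.6009, 1.5339, 1.5336, 1.5341, 1.4793, 1.4708, 1.4712, 1.4784,
            1.4802, 1.4721, 1.4530, 1.4627, 1.4565, 1.4438, 1.4539, 1.4281, 1.4282, 1.4722,
            1.4234, 1.4430, 1.4371, 1.4330, 1.4484, 1.4493, 1.4509, 1.4624, 1.4369, 1.4270,
            1.4530, 1.4564, 1.4682, 1.5145, 1.4676, 1.4899, 1.4780, 1.4808, 1.4767, 1.4793,
            1.5011, 1.4820, 1.5110, 1.5044, 1.4931, 1.4952, 1.5073, 1.5084, 1.5110, 1.5539]

# Epoch numbers are 1-based, list indices are 0-based
best_acc_epoch = max(range(len(val_acc)), key=val_acc.__getitem__) + 1
best_loss_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
print("highest val_acc:", val_acc[best_acc_epoch - 1], "at epoch", best_acc_epoch)
print("lowest val_loss:", val_loss[best_loss_epoch - 1], "at epoch", best_loss_epoch)

# Gap between training accuracy (0.6073) and validation accuracy at the last epoch
final_gap = 0.6073 - val_acc[-1]
print("train/val accuracy gap at epoch 50:", round(final_gap, 4))
```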

Predicted class probabilities for the test images (one row per image, one column per class; NumPy elides the middle values):

[[6.2265161e-02 3.6133263e-02 2.9608410e-02 ... 1.4575418e-02 2.3273245e-01 3.9462153e-02]

[1.2520383e-03 2.8723732e-02 1.3520659e-05 ... 1.4830942e-05 2.0657738e-01 7.6337880e-01]

[4.4282374e-01 2.5962757e-02 4.9018036e-03 ... 5.0854594e-03 4.7830233e-01 3.1182669e-02]

...

[2.5298037e-02 1.5645087e-03 4.5591959e-01 ... 2.0820173e-02 4.5593046e-02 5.5354494e-03]

[4.8573306e-03 1.7568754e-02 2.7253026e-01 ... 2.8846616e-01 3.5799999e-02 1.9565571e-02]

[1.8785211e-04 9.9887984e-06 4.1551115e-03 ... 9.8020053e-01 2.0824935e-05 2.1540496e-04]]

One-hot encoded test labels:

tf.Tensor(

[[0. 0. 0. ... 0. 0. 0.]

[0. 0. 0. ... 0. 1. 0.]

[0. 0. 0. ... 0. 1. 0.]

...

[0. 0. 0. ... 0. 0. 0.]

[0. 1. 0. ... 0. 0. 0.]

[0. 0. 0. ... 1. 0. 0.]], shape=(10000, 10), dtype=float32)

Predicted class indices (argmax of each probability row):

tf.Tensor([8 9 8 ... 2 7 7], shape=(10000,), dtype=int64)

True class indices (argmax of each one-hot label):

tf.Tensor([3 8 8 ... 5 1 7], shape=(10000,), dtype=int64)
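The last two tensors are the predicted class indices (argmax of the probability rows) and the true class indices (argmax of the one-hot labels); test accuracy is the fraction of positions where they agree. The NumPy sketch below uses only the six values visible at the ends of the printout, so its result says nothing about the accuracy on all 10,000 images. In TensorFlow the same computation is `tf.reduce_mean(tf.cast(tf.equal(pred, true), tf.float32))`.

```python
import numpy as np

# Visible ends of the printed tensors (first three and last three entries only)
pred = np.array([8, 9, 8, 2, 7, 7])  # predicted class indices
true = np.array([3, 8, 8, 5, 1, 7])  # true class indices

matches = pred == true       # element-wise comparison
accuracy = matches.mean()    # True counts as 1, False as 0
print(matches, accuracy)
```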