TensorFlow2_200729 Series---12. Fully Connected Layers


I. Summary

One-sentence summary:

tf.keras.layers.Dense(2, activation='relu')

1. Why does a neuron usually have several weights w but only one bias b?

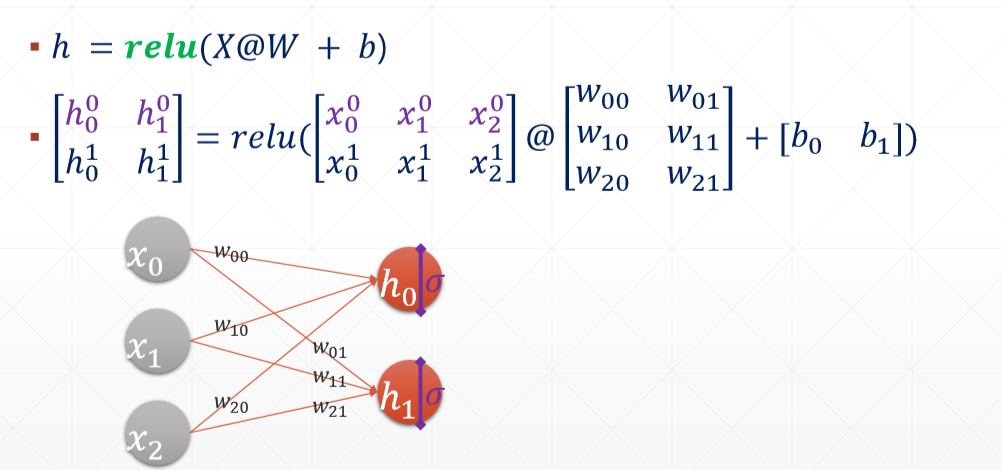

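A. h = relu(X@W + b), where b is the bias: a learnable offset added to the weighted sum before the activation is applied.

B. For example, with 3 nodes in the first layer and 2 nodes in the second, each second-layer node has one weight per input node plus a single bias. The pre-activation value of the first node is: h1 = x1*w1 + x2*w2 + x3*w3 + b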

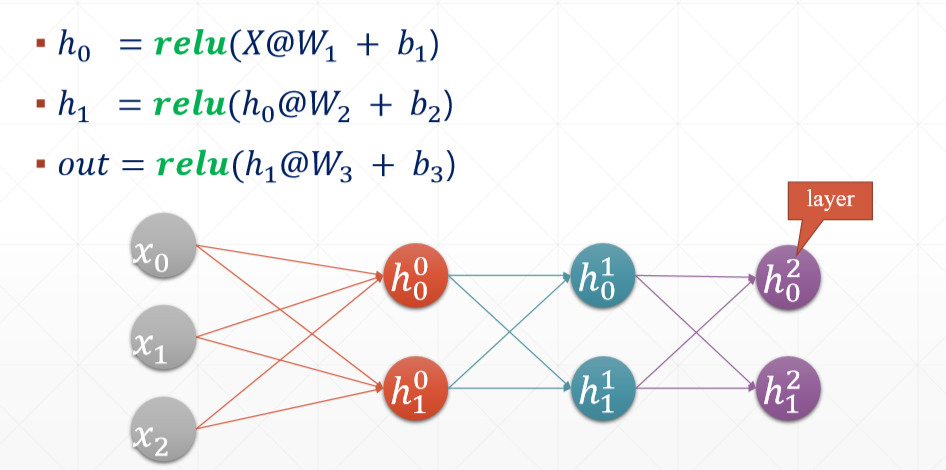
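A Dense layer with a ReLU activation computes relu(X @ W + b), and this can be checked against a real layer. The following is a minimal sketch, assuming TensorFlow 2.x is installed; the names `layer`, `W`, `b`, and `manual` are illustrative and do not come from the original post.

```python
import tensorflow as tf

# A batch of 2 samples with 3 features each (random values).
x = tf.random.normal([2, 3])

# One fully connected layer: 3 inputs -> 2 outputs, ReLU activation.
layer = tf.keras.layers.Dense(2, activation='relu')
h = layer(x)  # the first call builds the layer and creates its weights

# get_weights() returns NumPy arrays: kernel W of shape (3, 2), bias b of shape (2,).
W, b = layer.get_weights()

# Recompute the output by hand: h = relu(X @ W + b).
manual = tf.nn.relu(tf.matmul(x, W) + b)

print(W.shape, b.shape)
print(bool(tf.reduce_all(tf.abs(h - manual) < 1e-5)))
```

Each column of `W` holds the three weights feeding one output node, and `b` holds one bias per output node, which is why a node has several w but a single b.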

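2. What does "dense" mean in tf.keras.layers.Dense(2, activation='relu')?

"Dense" refers to the density of the connections: the layer is fully connected, so every input node is connected to every output node.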
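To make "every node is connected" concrete, the sketch below computes a 3-input, 2-output layer in plain Python, with no TensorFlow. The input values, weights, and biases are made up for illustration only; the point is that each output node carries one weight for every input node.

```python
def relu(v):
    """ReLU activation: negative values become 0."""
    return max(0.0, v)

# Three input nodes (values chosen only for illustration).
x = [1.0, 2.0, 3.0]

# One row of weights per output node: each output is connected to all 3 inputs.
w = [
    [0.5, 1.0, -0.5],    # weights into output node h1
    [-1.0, 0.5, -0.5],   # weights into output node h2
]
b = [0.5, 1.0]           # one bias per output node

# h_j = relu(x1*w_j1 + x2*w_j2 + x3*w_j3 + b_j)
h = [relu(sum(xi * wi for xi, wi in zip(x, row)) + bj) for row, bj in zip(w, b)]

print(h)  # [1.5, 0.0]
```

Keras stores the same weights as a kernel of shape (inputs, outputs), i.e. (3, 2) here, which is the transpose of the row-per-output layout used in this sketch.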

3. How do you print the w and b of a TensorFlow model?

for p in model.trainable_variables:print(p.name, p.shape)

    # trainable_variables:
    # Sequence of trainable variables owned by this module and its submodules.
    for p in model.trainable_variables:
        print(p.name, p.shape)

Output:

    dense_12/kernel:0 (3, 2)
    dense_12/bias:0 (2,)
    dense_13/kernel:0 (2, 2)
    dense_13/bias:0 (2,)
    dense_14/kernel:0 (2, 2)
    dense_14/bias:0 (2,)

4. In Python, how do you see which attributes and methods an object has?

a. Use dir(object) or object.__dict__

b. You can also use the help function
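The difference between the two options in a. is easiest to see on a small object. This is a minimal sketch; the `Point` class is hypothetical and exists only for illustration.

```python
class Point:
    """A tiny example class (hypothetical, for illustration only)."""

    def __init__(self, x, y):
        self.x = x
        self.y = y

    def norm2(self):
        """Return the squared distance from the origin."""
        return self.x ** 2 + self.y ** 2


p = Point(3, 4)

# dir() lists attribute and method names, including inherited ones.
# Filter out the names starting with an underscore to keep the list short.
names = [n for n in dir(p) if not n.startswith('_')]
print(names)       # ['norm2', 'x', 'y']

# __dict__ holds only the instance's own attributes, as a dict.
print(p.__dict__)  # {'x': 3, 'y': 4}

# help() prints the docstrings; it is most useful in an interactive session.
# help(Point)
```

Note that `__dict__` does not list methods, and some objects (for example those defined with `__slots__`) have no `__dict__` at all, so `dir()` is usually the more general choice.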

II. Fully Connected Layers

Location of the course video corresponding to this blog post:

    import tensorflow as tf
    from tensorflow import keras

    # Sample input: a batch of 2 samples with 3 features each.
    x = tf.random.normal([2, 3])

    # Three stacked fully connected layers with 2 units each.
    model = keras.Sequential([
        keras.layers.Dense(2, activation='relu'),
        keras.layers.Dense(2, activation='relu'),
        keras.layers.Dense(2)
    ])
    # Create the weights for inputs with 3 features (batch size left unspecified).
    model.build(input_shape=[None, 3])
    model.summary()

    # trainable_variables:
    # Sequence of trainable variables owned by this module and its submodules.
    for p in model.trainable_variables:
        print(p.name, p.shape)

    Model: "sequential_4"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    dense_12 (Dense)             multiple                  8
    _________________________________________________________________
    dense_13 (Dense)             multiple                  6
    _________________________________________________________________
    dense_14 (Dense)             multiple                  6
    =================================================================
    Total params: 20
    Trainable params: 20
    Non-trainable params: 0
    _________________________________________________________________
    dense_12/kernel:0 (3, 2)
    dense_12/bias:0 (2,)
    dense_13/kernel:0 (2, 2)
    dense_13/bias:0 (2,)
    dense_14/kernel:0 (2, 2)
    dense_14/bias:0 (2,)
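The parameter counts in the summary (8, 6, 6, total 20) follow directly from the kernel and bias shapes. The sketch below recomputes them in plain Python; the helper `dense_param_count` is a hypothetical name introduced here, not part of Keras.

```python
def dense_param_count(n_inputs, n_units):
    """Parameters in one Dense layer: a weight per (input, unit) pair plus a bias per unit."""
    return n_inputs * n_units + n_units

# Layer sizes from the model above: 3 input features, then Dense(2), Dense(2), Dense(2).
layer_sizes = [3, 2, 2, 2]

# Pair each layer's input size with its output size and count the parameters.
counts = [dense_param_count(i, o) for i, o in zip(layer_sizes[:-1], layer_sizes[1:])]

print(counts)       # [8, 6, 6]
print(sum(counts))  # 20
```

For the first layer, the (3, 2) kernel contributes 6 weights and the (2,) bias contributes 2, giving 8; the two later layers each have a (2, 2) kernel and a (2,) bias, giving 6.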