Performing PCA with an Undercomplete Linear Autoencoder

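If an autoencoder uses only linear activations and its cost function is the mean squared error (MSE), it ends up performing principal component analysis (PCA). More precisely, its codings span the same subspace as the top principal components, although the learned axes are not necessarily the orthonormal principal components themselves.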

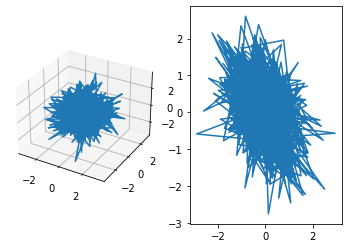
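The claim can be illustrated without Keras. Under squared error, a 2D linear encoder followed by a linear decoder can only produce rank-2 reconstructions. By the Eckart-Young theorem, the best rank-2 reconstruction is the projection onto the top two principal components.

The NumPy sketch below is a minimal illustration under a few assumptions:
- It uses a hypothetical, centered toy dataset.
- It leaves out the biases.
- `best_reconstruction_mse` is a helper introduced only for this example. It fits the optimal (least-squares) decoder for a given 2D encoder.

The sketch compares the PCA encoder with random encoders.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical centered 3D data with most of its variance in one plane
X = rng.normal(size=(500, 3)) * np.array([3.0, 2.0, 0.5])
X -= X.mean(axis=0)

def best_reconstruction_mse(X, B):
    """MSE of the best linear reconstruction of X from the codings X @ B (B is 3x2)."""
    codings = X @ B
    # Least-squares decoder: the optimal decoder weights for this fixed encoder
    W_dec, *_ = np.linalg.lstsq(codings, X, rcond=None)
    return np.mean((codings @ W_dec - X) ** 2)

# PCA encoder: project onto the top-2 right singular vectors of X
_, S, Vt = np.linalg.svd(X, full_matrices=False)
pca_mse = best_reconstruction_mse(X, Vt[:2].T)

# Eckart-Young: the lost energy is the smallest squared singular value
print(pca_mse, S[2] ** 2 / X.size)

# Any other 2D linear encoder does at least as badly
random_mses = [best_reconstruction_mse(X, rng.normal(size=(3, 2))) for _ in range(100)]
print(min(random_mses) >= pca_mse - 1e-12)  # True
```

For the PCA encoder, the least-squares decoder turns out to be the transpose of the encoder. The reconstruction is then exactly the orthogonal projection onto the principal plane.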

The following code builds a simple linear autoencoder that performs PCA on a 3D dataset, projecting it down to 2D:

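```python
from tensorflow import keras

# Encoder: a single linear Dense layer mapping 3 inputs to 2 codings
encoder = keras.models.Sequential([
    keras.layers.Dense(2, input_shape=[3])
])
# Decoder: a single linear Dense layer mapping 2 codings back to 3 outputs
decoder = keras.models.Sequential([
    keras.layers.Dense(3, input_shape=[2])
])
# The autoencoder chains the encoder and the decoder
autoencoder = keras.models.Sequential([encoder, decoder])

# No activation functions + MSE loss: the setting in which the autoencoder performs PCA
# (`learning_rate` replaces the deprecated `lr` argument)
autoencoder.compile(loss='mse', optimizer=keras.optimizers.SGD(learning_rate=0.1))
```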

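- The autoencoder is split into two subcomponents: an encoder and a decoder. Each is a regular `Sequential` model with a single `Dense` layer. The autoencoder itself is a `Sequential` model containing the encoder followed by the decoder (a model can be used as a layer inside another model).

- The autoencoder has as many outputs as inputs (3 here).

- To perform simple PCA, no activation function is used (i.e., all neurons are linear), and the cost function is the MSE.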
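The bullets above can be made concrete with a plain NumPy forward pass. The weights below are hypothetical random values that only mimic the shapes of the two `Dense` layers (kernel plus bias).

The point is that without activations, the two layers collapse into a single affine map. Its linear part has rank at most 2, the size of the coding layer.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical weights with the same shapes as the Keras layers:
# Dense(2) on 3 inputs -> kernel (3, 2), bias (2,); Dense(3) on 2 inputs -> kernel (2, 3), bias (3,)
W_enc, b_enc = rng.normal(size=(3, 2)), rng.normal(size=2)
W_dec, b_dec = rng.normal(size=(2, 3)), rng.normal(size=3)

X = rng.normal(size=(5, 3))

# Forward pass with no activation functions
codings = X @ W_enc + b_enc                # shape (5, 2)
reconstructions = codings @ W_dec + b_dec  # shape (5, 3), same as the input

# The two layers collapse into one affine map X @ W + b ...
W = W_enc @ W_dec
b = b_enc @ W_dec + b_dec
print(np.allclose(reconstructions, X @ W + b))  # True

# ... whose linear part has rank at most 2 (the third singular value is zero up to rounding)
sv = np.linalg.svd(W, compute_uv=False)
print(sv)
```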

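```python
import tensorflow as tf

# Toy dataset: 1,000 samples drawn from a 3D standard normal distribution
X_train = tf.random.normal([1000, 3], 0, 1)
print(X_train.shape)

# An autoencoder learns to reproduce its input, so the input is also the target
history = autoencoder.fit(X_train, X_train, epochs=20)

# Use the encoder alone to project the data onto the learned 2D subspace
codings = encoder.predict(X_train)
print(codings.shape)
```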

```
(1000, 3)
Epoch 1/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3010
Epoch 2/20
32/32 [==============================] - 0s 2ms/step - loss: 0.3008
Epoch 3/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3005
Epoch 4/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3007
Epoch 5/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3009
Epoch 6/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3004
Epoch 7/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3004
Epoch 8/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3011
Epoch 9/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3001
Epoch 10/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3007
Epoch 11/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3010
Epoch 12/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3004
Epoch 13/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3004
Epoch 14/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3003
Epoch 15/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3007
Epoch 16/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3004
Epoch 17/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3005
Epoch 18/20
32/32 [==============================] - 0s 1ms/step - loss: 0.2999
Epoch 19/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3003
Epoch 20/20
32/32 [==============================] - 0s 1ms/step - loss: 0.3005
(1000, 2)
```

Because `X_train` is isotropic standard-normal noise, every direction carries about the same variance, so no 2D plane is clearly better than another. The best achievable MSE is the variance along the one discarded direction, averaged over the three features: a little under 1/3. This is why the loss sits around 0.30 from the first epoch on.
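The training log above cannot show whether the encoder found the principal plane, since isotropic `tf.random.normal` data has no preferred plane. One way to check the claim, as a minimal sketch outside Keras, is the following:
- Train the same linear encoder/decoder pair with plain full-batch gradient descent in NumPy, on hypothetical anisotropic data.
- Compare the decoder's 2D output subspace with the one found by SVD-based PCA.

The dataset, learning rate and iteration count are assumptions chosen for this illustration. The biases of the Keras layers are dropped because the data is centered.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical 3D dataset with a dominant plane: standard deviations 3, 2 and 0.5,
# rotated by a random orthogonal matrix, then centered
n = 1000
Z = rng.normal(size=(n, 3)) * np.array([3.0, 2.0, 0.5])
R, _ = np.linalg.qr(rng.normal(size=(3, 3)))
X = Z @ R
X = X - X.mean(axis=0)

# Reference PCA via SVD: the top-2 right singular vectors span the principal plane
_, S, Vt = np.linalg.svd(X, full_matrices=False)
V2 = Vt[:2].T  # shape (3, 2), orthonormal columns
pca_mse = np.mean((X @ V2 @ V2.T - X) ** 2)

# Bias-free linear autoencoder: codings = X @ W_enc, reconstructions = codings @ W_dec
W_enc = rng.normal(scale=0.1, size=(3, 2))
W_dec = rng.normal(scale=0.1, size=(2, 3))
lr = 0.01
for _ in range(3000):
    codings = X @ W_enc
    err = codings @ W_dec - X  # reconstruction error, shape (n, 3)
    # Gradients of mean(err ** 2) with respect to both weight matrices
    grad_dec = 2.0 * codings.T @ err / err.size
    grad_enc = 2.0 * X.T @ (err @ W_dec.T) / err.size
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

ae_mse = np.mean((X @ W_enc @ W_dec - X) ** 2)

# Compare the planes: orthonormalize the decoder's output subspace, then the singular
# values of V2.T @ Q are the cosines of the principal angles between the two planes
Q, _ = np.linalg.qr(W_dec.T)
cosines = np.linalg.svd(V2.T @ Q, compute_uv=False)

print(f"PCA MSE: {pca_mse:.5f}  autoencoder MSE: {ae_mse:.5f}")
print("cosines of principal angles:", np.round(cosines, 4))
```

Cosines close to 1 mean the two planes coincide. The learned axes themselves are generally not the principal components, only another basis of the same plane. This is why the sketch compares subspaces rather than individual weight vectors.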

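```python
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # registers the '3d' projection on older Matplotlib

X = X_train.numpy()  # convert the TensorFlow tensor to a NumPy array for plotting

fig = plt.figure(figsize=(8, 4))

# Left: the original 3D data (scatter, since plot() would join the points with lines)
ax = fig.add_subplot(121, projection='3d')
ax.scatter(X[:, 0], X[:, 1], X[:, 2], s=2)

# Right: the 2D codings produced by the encoder
ax = fig.add_subplot(122)
ax.scatter(codings[:, 0], codings[:, 1], s=2)

plt.show()
```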
