Elastic (ELK) Stack Deployment

For an introduction to the Elastic (ELK) Stack products, see the related blog post.

Elasticsearch

References:

https://github.com/elastic/elasticsearch/blob/main/distribution/src/config/elasticsearch.yml

https://www.elastic.co/guide/en/elasticsearch/reference/current/settings.html

Images

Elasticsearch is published as two images with identical contents. A tag must be specified when pulling.

Pulling from docker.elastic.co may require a registry mirror inside mainland China; the Aliyun mirror is recommended.

| Image | Website |
|---|---|
| elasticsearch | https://hub.docker.com/_/elasticsearch |
| docker.elastic.co/elasticsearch/elasticsearch | https://www.docker.elastic.co/r/elasticsearch |

docker pull elasticsearch:8.5.0
docker pull docker.elastic.co/elasticsearch/elasticsearch:8.5.0

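Deployment

Before installing, create elasticsearch.yml in a directory that Docker will mount. Suggested path: /docker/data/elasticsearch/config/elasticsearch.yml. This is the Elasticsearch configuration file. Initially it contains only an http.host entry.

Suggested configuration:

# Node name
node.name: elasticsearch
# Bind host: the IP address this node listens on
network.host: 0.0.0.0
# The head plugin needs CORS enabled so that it can reach Elasticsearch
# Enable cross-origin requests (default: false)
http.cors.enabled: true
# Origins allowed when CORS is enabled; "*" allows any origin
http.cors.allow-origin: "*"
# Allowed CORS request headers
http.cors.allow-headers: Authorization,X-Requested-With,Content-Type,Content-Length
http.cors.allow-credentials: true
# Lock the JVM memory so that it is not swapped out
bootstrap.memory_lock: true
# Single-node deployment
#discovery.type: single-node
# Multi-node cluster
#discovery.seed_hosts: ["host1", "host2"]
# X-Pack security (username/password authentication)
xpack.security.enabled: false
# TLS on the transport layer (node-to-node traffic)
xpack.security.transport.ssl.enabled: false
# HTTP port for external clients (default: 9200)
#http.port: 9200
# TCP port for node-to-node communication (default: 9300)
#transport.port: 9300
# Cluster name
#cluster.name: my-application

Grant write permission on the host directory. Skipping this step causes the error "failed to obtain node locks".

chown -R 1000:1000 /docker/data
chmod -R 777 /docker/data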

Start the container

mkdir -p /docker/data/elasticsearch/config
vi /docker/data/elasticsearch/config/elasticsearch.yml
docker run --name elasticsearch -d -p 9200:9200 -p 9300:9300 \
  -e ELASTIC_PASSWORD=123456 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms64m -Xmx512m" \
  -v /docker/data/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
  -v /docker/data/elasticsearch/data:/usr/share/elasticsearch/data \
  -v /docker/data/elasticsearch/plugins:/usr/share/elasticsearch/plugins elasticsearch:8.5.0

| Parameter | Meaning |
|---|---|
| 9200 | HTTP port |
| 9300 | TCP (transport) port |
| discovery.type=single-node | Single-node deployment |
| ELASTIC_PASSWORD | Password of the elastic user; has no effect when security is disabled |
| ES_JAVA_OPTS | Limits the Elasticsearch JVM heap. -Xms64m sets the initial heap to 64 MB and -Xmx512m sets the maximum heap to 512 MB; for a 1 GB maximum, write -Xmx1g |

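Enable authentication

Edit elasticsearch.yml and restart the container. From Elasticsearch 8.0 onward, security is enabled by default.

xpack.security.enabled: true

Enter the container as root to change user passwords:

docker exec -it -u root elasticsearch bash
# Generate passwords automatically (elasticsearch-setup-passwords is deprecated as of 8.0)
/usr/share/elasticsearch/bin/elasticsearch-setup-passwords auto
# Set passwords interactively
/usr/share/elasticsearch/bin/elasticsearch-setup-passwords interactive
# Reset a user's password to a random value
/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic
# Create an enrollment token for Kibana
# Requires xpack.security.enrollment.enabled: true in the configuration file and HTTPS to be enabled
/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token --scope kibana

The built-in users are listed below; elastic is the superuser.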

- elastic

- logstash_system

- kibana

- kibana_system

- apm_system

- beats_system

- remote_monitoring_user

Changing a user's password through the API

# Change the password of the kibana user to 1234567, authenticating as elastic
curl -u elastic:123456 -X POST 'http://192.168.1.5:9200/_security/user/kibana/_password' -H 'Content-Type: application/json' -d '{ "password" : "1234567"}'
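The same call can be prepared from a script. The following is a minimal Python sketch using only the standard library; the helper name build_password_request is hypothetical, and the host, users, and passwords are the sample values from the curl command above. It only builds the request; sending it is left commented out because that needs a reachable cluster.

```python
import base64
import json
import urllib.request


def build_password_request(base_url, admin_user, admin_password, target_user, new_password):
    """Build (but do not send) the POST request that changes target_user's password."""
    url = f"{base_url}/_security/user/{target_user}/_password"
    body = json.dumps({"password": new_password}).encode("utf-8")
    # HTTP Basic authentication, equivalent to curl's -u option
    token = base64.b64encode(f"{admin_user}:{admin_password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


req = build_password_request("http://192.168.1.5:9200", "elastic", "123456", "kibana", "1234567")
print(req.get_method(), req.full_url)

# Send it only against a reachable cluster:
# urllib.request.urlopen(req)
```

Keeping the request construction separate from the network call makes it easy to inspect the URL and body before anything is changed on the cluster.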

访问

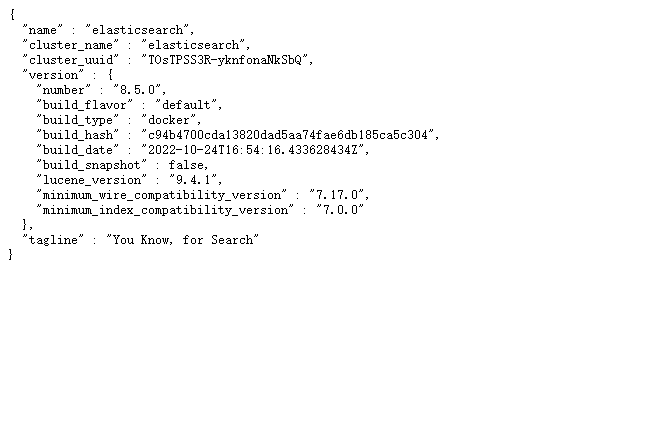

部署成功后浏览器访问http://192.168.1.5:9200/显示以下内容表示部署成功

清理历史日志

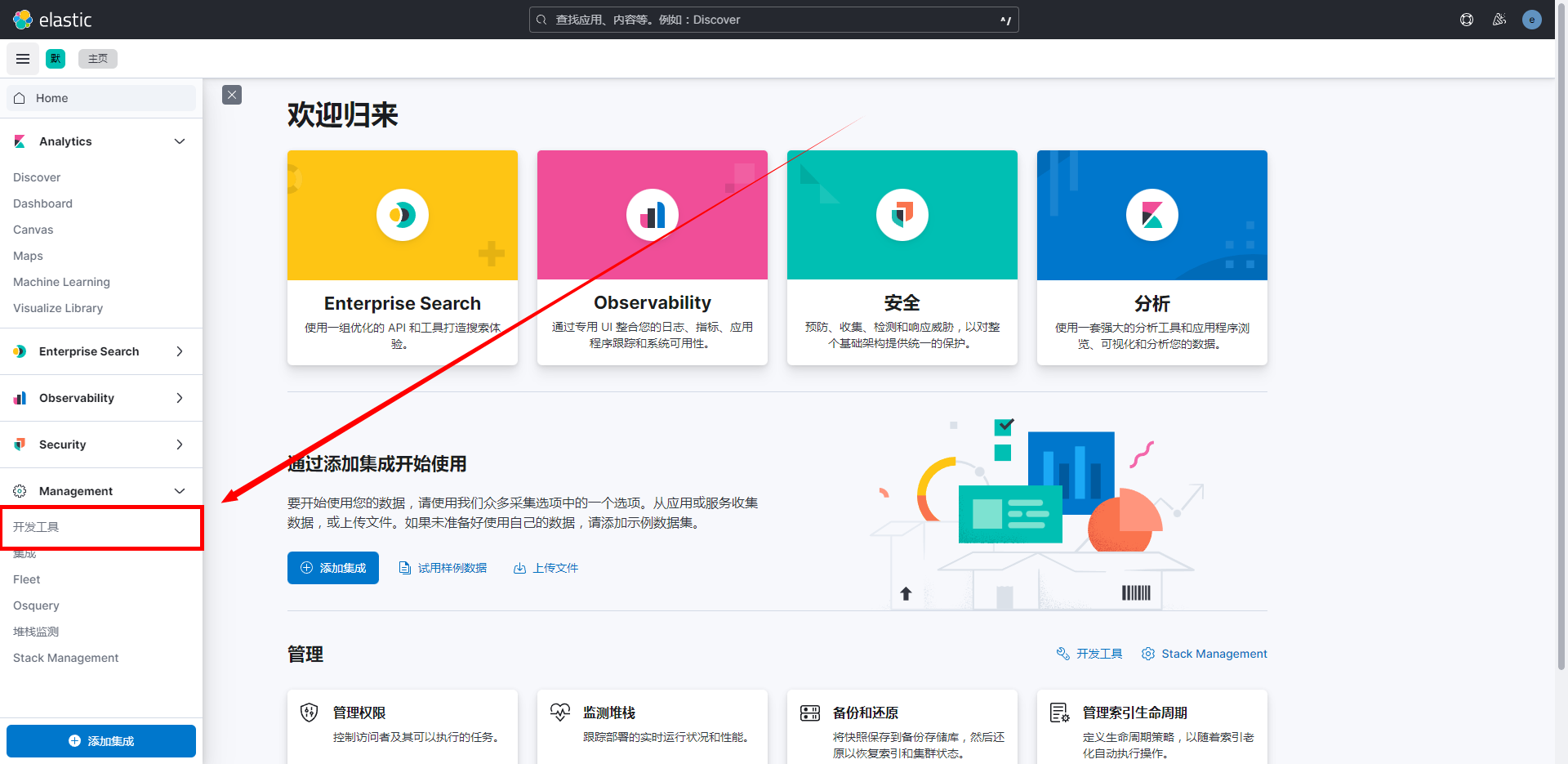

在Kibana 中打开开发工具

输入以下代码并运行

# 指令(删除15天前的数据)

POST /你的索引/_delete_by_query

{

"query": {

"range": {

"@time_stamp": {

"lt": "now-15d",

"format": "epoch_millis"

}

}

}

}
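If the retention period changes often, the request body can be generated instead of edited by hand. This is a minimal Python sketch; build_delete_by_query is a hypothetical helper, and the timestamp field name is a parameter because it depends on how the events were indexed (@timestamp is only an assumed default).

```python
import json


def build_delete_by_query(days, field="@timestamp"):
    """Return a _delete_by_query body matching documents older than `days` days."""
    if days <= 0:
        raise ValueError("days must be positive")
    return {
        "query": {
            "range": {
                field: {
                    "lt": f"now-{days}d",  # Elasticsearch date math: now minus N days
                    "format": "epoch_millis",
                }
            }
        }
    }


body = build_delete_by_query(15)
print(json.dumps(body, indent=2))
```

The printed JSON can be pasted into Dev Tools after the POST line, or sent with any HTTP client.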

Logstash

References:

https://www.elastic.co/guide/en/logstash/current/index.html

https://github.com/elastic/logstash/blob/main/config/logstash.yml

https://github.com/elastic/logstash/blob/main/config/pipelines.yml

https://github.com/deviantony/docker-elk/blob/main/logstash/pipeline/logstash.conf

https://www.elastic.co/guide/en/logstash/current/pipeline-to-pipeline.html

https://www.elastic.co/guide/en/logstash/current/plugins-inputs-http.html

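Images

Logstash is published as two images with identical contents. A tag must be specified when pulling.

Pulling from docker.elastic.co may require a registry mirror inside mainland China; the Aliyun mirror is recommended.

| Image | Website |
|---|---|
| logstash | https://hub.docker.com/_/logstash |
| docker.elastic.co/logstash/logstash | https://www.docker.elastic.co/r/logstash |

docker pull logstash:8.5.0
docker pull docker.elastic.co/logstash/logstash:8.5.0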

Deployment

First start a temporary container, copy its configuration files out, and then remove it.

docker run -d --name=logstash logstash:8.5.0
# Create the local directory to mount
mkdir -p /dockerdata/logstash
# Copy the relevant files
docker cp logstash:/usr/share/logstash/config/ /dockerdata/logstash
docker cp logstash:/usr/share/logstash/data /dockerdata/logstash
docker cp logstash:/usr/share/logstash/pipeline /dockerdata/logstash
# Grant read/write permission on the directory
chown -R 1000:1000 /dockerdata
chmod -R 777 /dockerdata
# Remove the container
docker rm -f logstash

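Edit the logstash.yml service configuration file in the config directory as follows:

# 0.0.0.0 allows access from any IP
http.host: "0.0.0.0"
# Elasticsearch cluster addresses
xpack.monitoring.elasticsearch.hosts: [ "http://192.168.172.131:9200","http://192.168.172.129:9200"]
xpack.monitoring.elasticsearch.username: "elastic"
xpack.monitoring.elasticsearch.password: "123456"
# Enable monitoring
xpack.monitoring.enabled: true
# Directory where Logstash writes its log files
path.logs: /usr/share/logstash/pipeline
# Load the conf files in the pipeline directory. Corresponds to the path.config setting in /usr/share/logstash/config/pipelines.yml
#path.config: /usr/share/logstash/pipeline/*.conf
# Node name
#node.name: logstash-203
# Validate the configuration files and exit
#config.test_and_exit: false
# Reload configuration files automatically when they change
# config.reload.automatic: false
# Interval between configuration reload checks
#config.reload.interval: 60s
# Debug mode: prints the parsed configuration, including passwords and other secrets. Use with care
# Requires the log level to be set to debug as well
#config.debug: true
#log.level: debug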

Start the container

docker run -d \
  --name=logstash \
  -p "5044:5044" \
  -p "9600:9600" \
  -p "9650:9650" \
  -p "9651:9651/tcp" \
  -p "9651:9651/udp" \
  -e LS_JAVA_OPTS="-Xms64m -Xmx512m" \
  -e LOGSTASH_INTERNAL_PASSWORD="123456" \
  -v /dockerdata/logstash/data:/usr/share/logstash/data \
  -v /dockerdata/logstash/logs:/usr/share/logstash/logs \
  -v /dockerdata/logstash/pipeline:/usr/share/logstash/pipeline \
  -v /dockerdata/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml \
  logstash:8.5.0

| Option | Parameter | Meaning |
|---|---|---|
| -p | 5044 | Beats port |
| -p | 9600 | HTTP API port |
| -p | 9650 | Custom HTTP port; ports in the 9600-9700 range are generally used for HTTP API requests |
| -p | 9651 | Custom TCP/UDP port |
| -e | LS_JAVA_OPTS | Limits the Logstash JVM heap (Logstash reads LS_JAVA_OPTS, not ES_JAVA_OPTS) |
| -e | LOGSTASH_INTERNAL_PASSWORD | Password of the Elasticsearch logstash_system account, kept in one place for convenience; optional |
| -v | /usr/share/logstash/data | Logstash runtime data, kept for persistence |
| -v | /usr/share/logstash/logs | Mount point for log files |
| -v | /usr/share/logstash/pipeline | Mount point for the log collection (pipeline) configuration |
| -v | /usr/share/logstash/config/logstash.yml | Logstash configuration file |

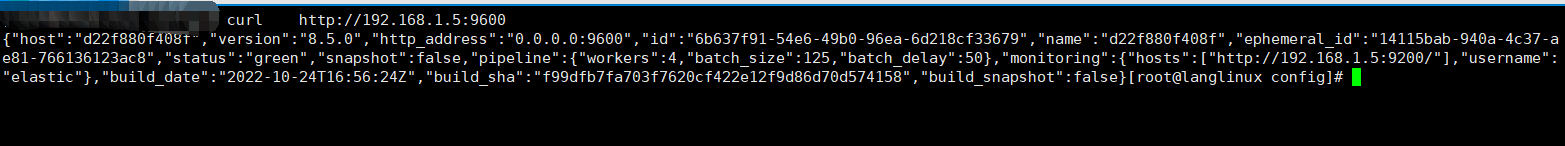

Query port 9600 on the Logstash host; any response means that Logstash is running.

curl http://192.168.1.5:9600

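Log collection configuration

Logstash collects logs according to the *.conf files found under the path.config path set in pipelines.yml. Default path: /usr/share/logstash/pipeline

Configuration file template

input {
  # Input (log sources)
}
filter {
  # Log filtering
}
output {
  # Output
}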

General

input {
  # HTTP
  http {
    host => "0.0.0.0"
    port => 10000
    additional_codecs => {"application/json"=>"json"}
    codec => "plain"
    threads => 1
    ssl => false
  }
  # Beats
  beats {
    port => 5044
  }
  # TCP
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 5000
    codec => json_lines
    type => "datalog"
  }
  # File (path is required; uncomment it before enabling this input)
  file {
    # Type tag
    #type => "systemlog-localhost"
    # Files to collect
    #path => "/var/log/*.log"
    # Where to start reading
    #start_position => "beginning"
    # Scan interval; the default is 1s, 5s is recommended
    #stat_interval => "5"
  }
}
filter {
  # Log filtering
  # Set a custom field 'timestamp' (the name is arbitrary) to the Logstash-generated timestamp plus 8 hours
  ruby {
    code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*3600)"
  }
  # Assign the custom time field back to @timestamp
  ruby {
    code => "event.set('@timestamp',event.get('timestamp'))"
  }
  # Remove the custom field
  mutate {
    remove_field => ["timestamp"]
  }
}
output {
  # Output to Elasticsearch
  elasticsearch {
    hosts => ["192.168.31.196:9200"]
    user => "elastic"
    password => "xxxx"
    # Index name; must be all lowercase
    index => "logstash-system-localhost-%{+YYYY.MM.dd}"
    # Use the incoming id as the Elasticsearch document _id
    document_id => "%{id}"
  }
  # Conditional output
  if [type] == "datalog" {
    elasticsearch {
      hosts => ["192.168.xx.xx:9200"]
    }
  }
  # Standard output
  stdout {
    # codec => rubydebug
  }
}
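The effect of the two ruby filters on the daily index name is easy to miss. The following Python sketch (not Logstash code; shift_timestamp and the sample time are illustrative assumptions) reproduces the 8-hour shift and shows that an event late in the UTC day lands in the next day's index, because the %{+YYYY.MM.dd} pattern is derived from @timestamp.

```python
from datetime import datetime, timedelta, timezone


def shift_timestamp(ts, hours=8):
    """Return ts moved forward by `hours`, as the ruby filter does with @timestamp."""
    return ts + timedelta(hours=hours)


# Hypothetical event received at 20:30 UTC on 2022-11-07
original = datetime(2022, 11, 7, 20, 30, tzinfo=timezone.utc)
shifted = shift_timestamp(original)

print(original.strftime("%Y.%m.%d"))  # 2022.11.07
print(shifted.strftime("%Y.%m.%d"))   # 2022.11.08
```

With the shift applied, daily indices roll over at midnight UTC+8 rather than midnight UTC.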

Collecting JSON logs

systemName is a field in the JSON documents. Note that index names must be all lowercase.

input {
  file {
    path => "/usr/share/logstash/logs/*.log"
    start_position => "beginning"
    codec => json {
      charset => ["UTF-8"]
    }
    # Scan interval; the default is 1s, 5s is recommended
    stat_interval => "5"
  }
}
output {
  elasticsearch {
    hosts => ["elasticsearch:9200"]
    # Index names must be all lowercase
    index => "%{systemName}-%{+YYYY.MM.dd}"
  }
}
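To see what index name a given event would produce, and to catch uppercase values before Elasticsearch rejects them, the pattern can be imitated in a few lines. This is a Python sketch under stated assumptions: resolve_index_name is a hypothetical helper, and "orders" is an invented systemName value used only for illustration.

```python
from datetime import date


def resolve_index_name(event, day):
    """Imitate the pattern %{systemName}-%{+YYYY.MM.dd} for a single event."""
    name = f"{event['systemName']}-{day:%Y.%m.%d}"
    # Elasticsearch rejects index names that contain uppercase letters
    if name != name.lower():
        raise ValueError(f"index name must be lowercase: {name}")
    return name


print(resolve_index_name({"systemName": "orders"}, date(2022, 11, 7)))
```

If the source field may contain uppercase letters, one option is to lowercase it in the filter stage (for example with the mutate filter's lowercase option) before it reaches the output.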

Sample JSON data

{"method":"register","user_id":2933,"user_name":"name_91","level":27,"login_time":1470179550}

{"method":"login","user_id":1247,"user_name":"name_979","level":1,"register_time":1470179550}

{"method":"register","user_id":2896,"user_name":"name_1972","level":17,"login_time":1470179550}

{"method":"login","user_id":2411,"user_name":"name_2719","level":1,"register_time":1470179550}

{"method":"register","user_id":1588,"user_name":"name_1484","level":4,"login_time":1470179550}

{"method":"login","user_id":2507,"user_name":"name_1190","level":1,"register_time":1470179550}

{"method":"register","user_id":2382,"user_name":"name_234","level":21,"login_time":1470179550}

{"method":"login","user_id":1208,"user_name":"name_443","level":1,"register_time":1470179550}

{"method":"register","user_id":1331,"user_name":"name_1297","level":3,"login_time":1470179550}

{"method":"login","user_id":2809,"user_name":"name_743","level":1,"register_time":1470179550}

Corresponding configuration

input {
  file {
    path => "/usr/share/logstash/logs/*.log"
    start_position => "beginning"
    codec => json {
      charset => ["UTF-8"]
    }
    # Scan interval; the default is 1s, 5s is recommended
    stat_interval => "5"
  }
}
output {
  elasticsearch {
    hosts => ["elasticsearch:9200"]
    # Index names must be all lowercase
    index => "data_%{method}"
  }
}

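Common problems

The container stops by itself after starting, and the logs show no error. A likely cause is that the mounted pipeline directory has no logstash.conf. The image ships this file by default, so create it in the host directory before mounting.

input {
  beats {
    port => 5044
  }
}
output {
  stdout {
    codec => rubydebug
  }
}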

Kibana

Images

References:

https://github.com/elastic/kibana/blob/main/config/kibana.yml

https://www.elastic.co/guide/en/kibana/current/settings.html

https://www.elastic.co/guide/cn/kibana/current/docker.html

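Kibana is published as two images with identical contents. A tag must be specified when pulling.

Pulling from docker.elastic.co may require a registry mirror inside mainland China; the Aliyun mirror is recommended.

| Image | Website |
|---|---|
| kibana | https://hub.docker.com/_/kibana |
| docker.elastic.co/kibana/kibana | https://www.docker.elastic.co/r/kibana |

docker pull kibana:8.5.0
docker pull docker.elastic.co/kibana/kibana:8.5.0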

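Deployment

Before deploying, create a kibana.yml configuration file with the following content:

#server.port: 5601
#server.name: kibana
# Bind address (Kibana's own default is "localhost"); set it to 0.0.0.0, otherwise the port published on the host is unreachable
server.host: "0.0.0.0"
# Elasticsearch address. 0.0.0.0 and 127.0.0.1 do not work here; use the host IP or a container network name
elasticsearch.hosts: ["http://192.168.1.5:9200"]
# Elasticsearch credentials. The elastic user cannot be used in this file; use the kibana or kibana_system account (the account Kibana uses to read from Elasticsearch)
elasticsearch.username: "kibana_system"
elasticsearch.password: "123456"
# Display Kibana in Chinese
i18n.locale: "zh-CN"
xpack.monitoring.ui.container.elasticsearch.enabled: true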

Start the container

mkdir -p /docker/data/kibana/config
vi /docker/data/kibana/config/kibana.yml
docker run -d --name kibana -p 5601:5601 -v /docker/data/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml kibana:8.5.0

To set the Elasticsearch URL explicitly:

docker run -d --name kibana -p 5601:5601 -e ELASTICSEARCH_HOSTS=http://elasticsearch:9200 -v /docker/data/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml kibana:8.5.0

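Access

After deployment, open http://192.168.1.5:5601/ in a browser; if the Kibana page loads, the deployment succeeded.

Kibana authenticates against the users stored in Elasticsearch.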

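Querying logs

Query all logs by index

In the Kibana Dev Tools console, enter the following request. muxue-* is the index pattern; * matches everything.

GET /muxue-*/_search

List all indices

GET /_cat/indices?format=json

Query one day's logs

GET /muxue-2022.11.07/_search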
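The JSON returned by GET /_cat/indices?format=json is a list of objects, one per index, each carrying an "index" key. A short Python sketch can pick out the indices matching a pattern such as muxue-*. The response below is a hand-written, trimmed sample for illustration (real rows contain more fields), and matching_indices is a hypothetical helper.

```python
import fnmatch
import json

# Hand-written sample in the shape of a _cat/indices?format=json response
sample_response = """
[
  {"index": "muxue-2022.11.07"},
  {"index": "muxue-2022.11.06"},
  {"index": ".kibana_1"}
]
"""


def matching_indices(cat_json, pattern):
    """Return the sorted index names in cat_json that match a wildcard pattern."""
    rows = json.loads(cat_json)
    return sorted(row["index"] for row in rows if fnmatch.fnmatchcase(row["index"], pattern))


print(matching_indices(sample_response, "muxue-*"))
# ['muxue-2022.11.06', 'muxue-2022.11.07']
```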

Apm Server

References:

https://www.elastic.co/guide/en/apm/guide/current/apm-quick-start.html

https://github.com/elastic/apm-server/blob/main/apm-server.yml

Images

APM Server is published as two images. A tag must be specified when pulling.

Pulling from docker.elastic.co may require a registry mirror inside mainland China; the Aliyun mirror is recommended.

| Image | Website |
|---|---|
| elastic/apm-server | https://hub.docker.com/r/elastic/apm-server |
| docker.elastic.co/apm/apm-server | https://www.docker.elastic.co/r/apm |

docker pull elastic/apm-server:8.5.0
docker pull docker.elastic.co/apm/apm-server:8.5.0

Deployment

Before deploying, create an apm-server.yml configuration file with the following content:

apm-server:
  # Listen address
  host: "0.0.0.0:8200"
  # Custom authentication for apm-server
  auth:
    secret_token: "123456"
  rum:
    enabled: true
  # Kibana address
  kibana:
    enabled: true
    host: "http://192.168.1.5:5601"
    username: "elastic"
    password: "123456"
# Elasticsearch address
output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.1.5:9200"]
  username: "elastic"
  password: "123456"
apm-server.data_streams.wait_for_integration: false

Run

mkdir -p /docker/data
vi /docker/data/apm-server.yml
docker run -d -p 8200:8200 --name=apm-server -u=root --volume="/docker/data/apm-server.yml:/usr/share/apm-server/apm-server.yml" docker.elastic.co/apm/apm-server:8.5.0 --strict.perms=false -e

访问

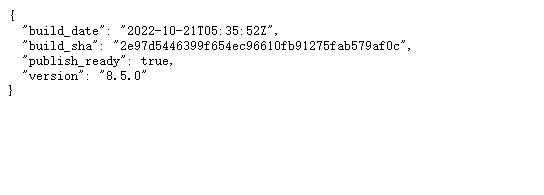

部署成功后浏览器访问http://192.168.1.5:8200/显示以下内容表示部署成功。开启认证访问是空白界面。因为kibana关联应用比较多,最好使用docekr logs kibana查看容器是否有错误日志。

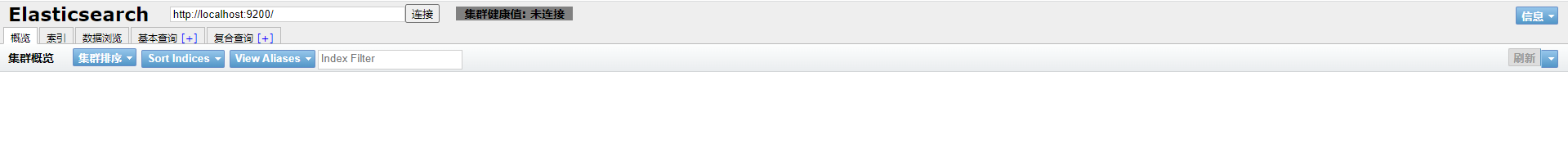
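A browser sends no credentials, which is one plausible reason for the blank page once secret_token is set. APM agents pass the secret token as a Bearer token in the Authorization header, and the same header can be attached to a manual check. The sketch below is a minimal Python example using the address and token from the sample configuration; build_apm_info_request is a hypothetical helper, and the request is only built, not sent.

```python
import urllib.request


def build_apm_info_request(base_url, secret_token):
    """Build (but do not send) a GET request to the APM Server root endpoint."""
    req = urllib.request.Request(f"{base_url}/", method="GET")
    # The secret token is presented as a Bearer token
    req.add_header("Authorization", f"Bearer {secret_token}")
    return req


req = build_apm_info_request("http://192.168.1.5:8200", "123456")
print(req.get_method(), req.full_url)

# Send it only when the server is reachable:
# print(urllib.request.urlopen(req).read().decode("utf-8"))
```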

Elasticsearch-head

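Elasticsearch-head is a web application for browsing and searching Elasticsearch.

It can be used in two ways:

- Install the Elasticsearch-head browser extension from the Chrome Web Store

- Deploy it as a site with Docker

References:

https://github.com/mobz/elasticsearch-head

https://hub.docker.com/r/mobz/elasticsearch-head

Pull the image

docker pull mobz/elasticsearch-head:5

Run

docker run --name=elasticsearch-head -p 9100:9100 -d mobz/elasticsearch-head:5

Open the site at http://192.168.1.5:9100/

If authentication is enabled in Elasticsearch, add the credentials to the URL: http://192.168.1.5:9100/?auth_user=elastic&auth_password=123456. In addition, the Elasticsearch elasticsearch.yml configuration file needs the following CORS settings: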

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-headers: Authorization,X-Requested-With,Content-Type,Content-Length
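Passwords containing characters such as & or @ must be URL-encoded before they are placed in the query string, or the URL will be parsed incorrectly. A minimal Python sketch follows; head_url is a hypothetical helper, and the second password is an invented example.

```python
from urllib.parse import urlencode


def head_url(base_url, user, password):
    """Build the elasticsearch-head URL with URL-encoded credentials."""
    query = urlencode({"auth_user": user, "auth_password": password})
    return f"{base_url}/?{query}"


print(head_url("http://192.168.1.5:9100", "elastic", "123456"))
# http://192.168.1.5:9100/?auth_user=elastic&auth_password=123456

print(head_url("http://192.168.1.5:9100", "elastic", "p@ss&1"))
# http://192.168.1.5:9100/?auth_user=elastic&auth_password=p%40ss%261
```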

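Docker-Compose Deployment

ELK

Keeping the three ELK version numbers identical is recommended.

GitHub: https://github.com/deviantony/docker-elk/blob/main/docker-compose.yml

This is a simplified version. Complete the following steps first:

- Create the elasticsearch.yml file

- Create the logstash.conf file

- Grant permissions on the directories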

version: '3.7'
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.5.0
    container_name: elk_elasticsearch
    environment:
      - "discovery.type=single-node"
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    volumes:
      - /dockerdata/elasticsearch/data:/usr/share/elasticsearch/data
      - /dockerdata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
    ports:
      - 9200:9200
      - 9300:9300
    networks:
      - elk
  kibana:
    image: docker.elastic.co/kibana/kibana:8.5.0
    container_name: elk_kibana
    depends_on:
      - elasticsearch # start Kibana after Elasticsearch
    environment:
      - ELASTICSEARCH_HOSTS=http://elasticsearch:9200 # address used to reach Elasticsearch
    ports:
      - 5601:5601
    networks:
      - elk
  logstash:
    image: docker.elastic.co/logstash/logstash:8.5.0
    container_name: elk_logstash
    environment:
      - "LS_JAVA_OPTS=-Xms512m -Xmx512m"
    volumes:
      - /dockerdata/logstash/data:/usr/share/logstash/data
      - /dockerdata/logstash/logs:/usr/share/logstash/logs
      - /dockerdata/logstash/pipeline:/usr/share/logstash/pipeline
    depends_on:
      - elasticsearch # start Logstash after Elasticsearch
    networks:
      - elk
    ports:
      - 5044:5044
      - 9600:9600
      - 9650:9650
networks:
  elk:
    driver: bridge

Apm

services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.13.2
    restart: always
    container_name: elasticsearch
    hostname: elasticsearch
    environment:
      - discovery.type=single-node
    ports:
      - 9200:9200
      - 9300:9300
  kibana:
    image: docker.elastic.co/kibana/kibana:7.13.2
    restart: always
    container_name: kibana
    hostname: kibana
    environment:
      - ELASTICSEARCH_HOSTS=http://elasticsearch:9200
    ports:
      - 5601:5601
    depends_on:
      - elasticsearch
  apm_server:
    image: docker.elastic.co/apm/apm-server:7.13.2
    restart: always
    container_name: apm_server
    hostname: apm_server
    command: --strict.perms=false -e
    environment:
      - output.elasticsearch.hosts=["elasticsearch:9200"]
    ports:
      - 8200:8200
    depends_on:
      - kibana
      - elasticsearch

Elasticsearch + Kibana + Fleet-server + Metricbeat

GitHub: https://github.com/elastic/apm-server/blob/main/docker-compose.yml

version: '3.9'
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.7.0-188e6a3a-SNAPSHOT
    ports:
      - 9200:9200
    healthcheck:
      test: ["CMD-SHELL", "curl -s http://localhost:9200/_cluster/health?wait_for_status=yellow&timeout=500ms"]
      retries: 300
      interval: 1s
    environment:
      - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
      - "network.host=0.0.0.0"
      - "transport.host=127.0.0.1"
      - "http.host=0.0.0.0"
      - "cluster.routing.allocation.disk.threshold_enabled=false"
      - "discovery.type=single-node"
      - "xpack.security.authc.anonymous.roles=remote_monitoring_collector"
      - "xpack.security.authc.realms.file.file1.order=0"
      - "xpack.security.authc.realms.native.native1.order=1"
      - "xpack.security.enabled=true"
      - "xpack.license.self_generated.type=trial"
      - "xpack.security.authc.token.enabled=true"
      - "xpack.security.authc.api_key.enabled=true"
      - "logger.org.elasticsearch=${ES_LOG_LEVEL:-error}"
      - "action.destructive_requires_name=false"
    volumes:
      - "./testing/docker/elasticsearch/roles.yml:/usr/share/elasticsearch/config/roles.yml"
      - "./testing/docker/elasticsearch/users:/usr/share/elasticsearch/config/users"
      - "./testing/docker/elasticsearch/users_roles:/usr/share/elasticsearch/config/users_roles"
      - "./testing/docker/elasticsearch/ingest-geoip:/usr/share/elasticsearch/config/ingest-geoip"
  kibana:
    image: docker.elastic.co/kibana/kibana:8.7.0-188e6a3a-SNAPSHOT
    ports:
      - 5601:5601
    healthcheck:
      test: ["CMD-SHELL", "curl -s http://localhost:5601/api/status | grep -q 'All services are available'"]
      retries: 300
      interval: 1s
    environment:
      ELASTICSEARCH_HOSTS: '["http://elasticsearch:9200"]'
      ELASTICSEARCH_USERNAME: "${KIBANA_ES_USER:-kibana_system_user}"
      ELASTICSEARCH_PASSWORD: "${KIBANA_ES_PASS:-changeme}"
      XPACK_FLEET_AGENTS_FLEET_SERVER_HOSTS: '["https://fleet-server:8220"]'
      XPACK_FLEET_AGENTS_ELASTICSEARCH_HOSTS: '["http://elasticsearch:9200"]'
    depends_on:
      elasticsearch: { condition: service_healthy }
    volumes:
      - "./testing/docker/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml"
  fleet-server:
    image: docker.elastic.co/beats/elastic-agent:8.7.0-188e6a3a-SNAPSHOT
    ports:
      - 8220:8220
    healthcheck:
      test: ["CMD-SHELL", "curl -s -k https://localhost:8220/api/status | grep -q 'HEALTHY'"]
      retries: 300
      interval: 1s
    environment:
      FLEET_SERVER_ENABLE: "1"
      FLEET_SERVER_POLICY_ID: "fleet-server-apm"
      FLEET_SERVER_ELASTICSEARCH_HOST: http://elasticsearch:9200
      FLEET_SERVER_ELASTICSEARCH_USERNAME: "${ES_SUPERUSER_USER:-admin}"
      FLEET_SERVER_ELASTICSEARCH_PASSWORD: "${ES_SUPERUSER_PASS:-changeme}"
      FLEET_SERVER_CERT: /etc/pki/tls/certs/fleet-server.pem
      FLEET_SERVER_CERT_KEY: /etc/pki/tls/private/fleet-server-key.pem
      FLEET_URL: https://fleet-server:8220
      KIBANA_FLEET_SETUP: "true"
      KIBANA_FLEET_HOST: "http://kibana:5601"
      KIBANA_FLEET_USERNAME: "${ES_SUPERUSER_USER:-admin}"
      KIBANA_FLEET_PASSWORD: "${ES_SUPERUSER_PASS:-changeme}"
    depends_on:
      elasticsearch: { condition: service_healthy }
      kibana: { condition: service_healthy }
    volumes:
      - "./testing/docker/fleet-server/certificate.pem:/etc/pki/tls/certs/fleet-server.pem"
      - "./testing/docker/fleet-server/key.pem:/etc/pki/tls/private/fleet-server-key.pem"
  metricbeat:
    image: docker.elastic.co/beats/metricbeat:8.7.0-188e6a3a-SNAPSHOT
    environment:
      ELASTICSEARCH_HOSTS: '["http://elasticsearch:9200"]'
      ELASTICSEARCH_USERNAME: "${KIBANA_ES_USER:-admin}"
      ELASTICSEARCH_PASSWORD: "${KIBANA_ES_PASS:-changeme}"
    depends_on:
      elasticsearch: { condition: service_healthy }
      fleet-server: { condition: service_healthy }
    volumes:
      - "./testing/docker/metricbeat/elasticsearch-xpack.yml://usr/share/metricbeat/modules.d/elasticsearch-xpack.yml"
      - "./testing/docker/metricbeat/apm-server.yml://usr/share/metricbeat/modules.d/apm-server.yml"
    profiles:
      - monitoring