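OpenCV study notes

1 Setting up the environment

Download OpenCV from the site below. It is a mirror hosted in China, so downloads are fast. I used version 4.5.4 for these notes.

OpenCV/opencv_contrib fast download for users in China – 绕云技术笔记 (raoyunsoft.com)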

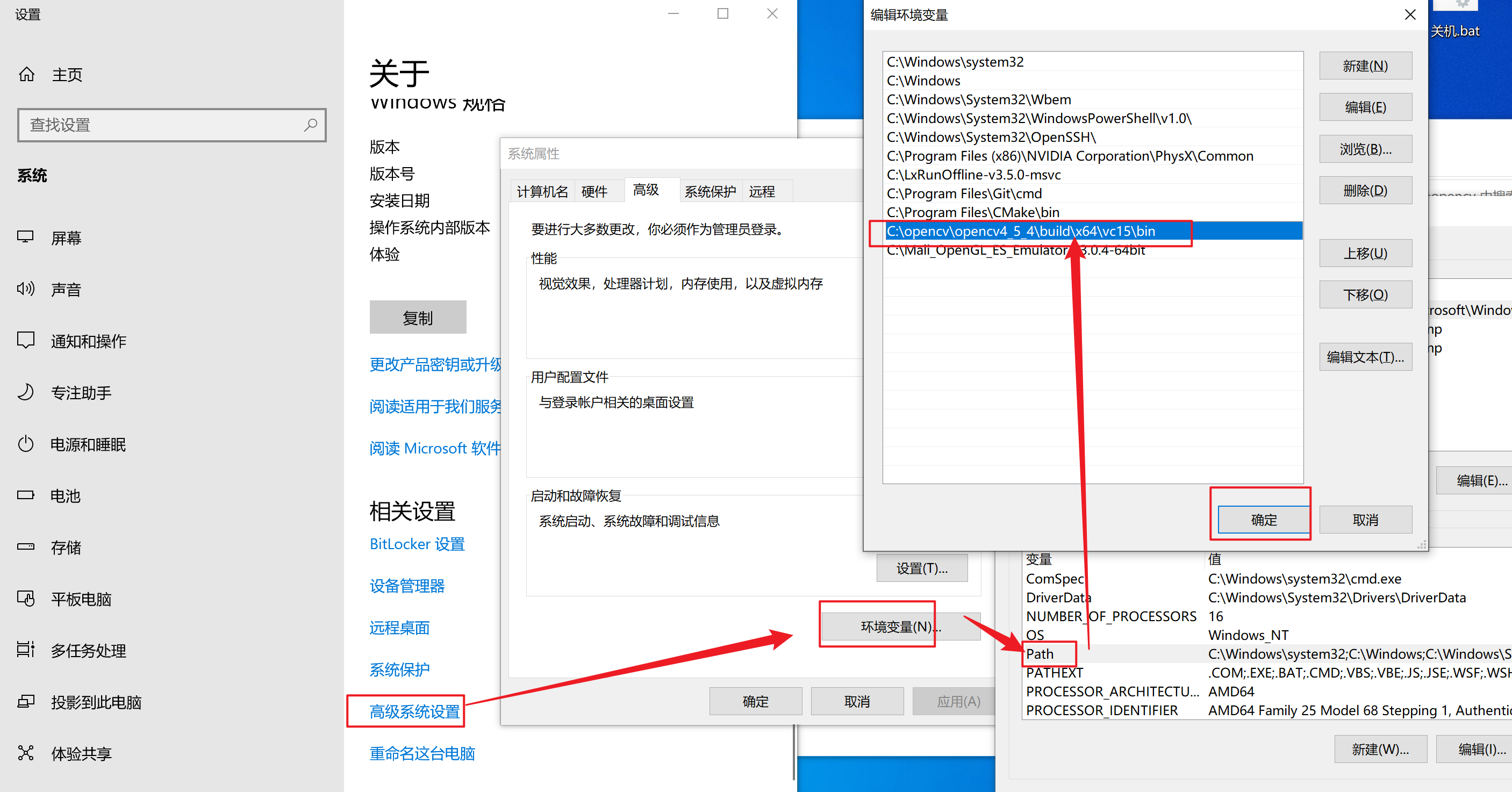

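After downloading and installing, remember to set the environment variables. The important one is the directory of the dynamic libraries (DLLs) that the program loads at run time, which must be on the search path. I build with MSVC 2019, so I chose the vc15 directory (intended for VS2017 and VS2019).

Once that is done, create a VS2019 or VS2022 project: a new console application that comes with the Hello World demo. Switch the configuration to Release x64, copy in the code below, and modify it as needed.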

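#include <iostream>
#include <opencv2/opencv.hpp>

using namespace cv;

int main()
{
    std::cout << "Hello World!\n";
    Mat src = imread("E:/project/09-opencv_pytorch/test.jpg");
    if (src.empty()) { // imread returns an empty Mat when the file cannot be read
        std::cout << "could not load image\n";
        return -1;
    }
    imshow("input", src);
    waitKey(0);
    destroyAllWindows();
    return 0;
}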

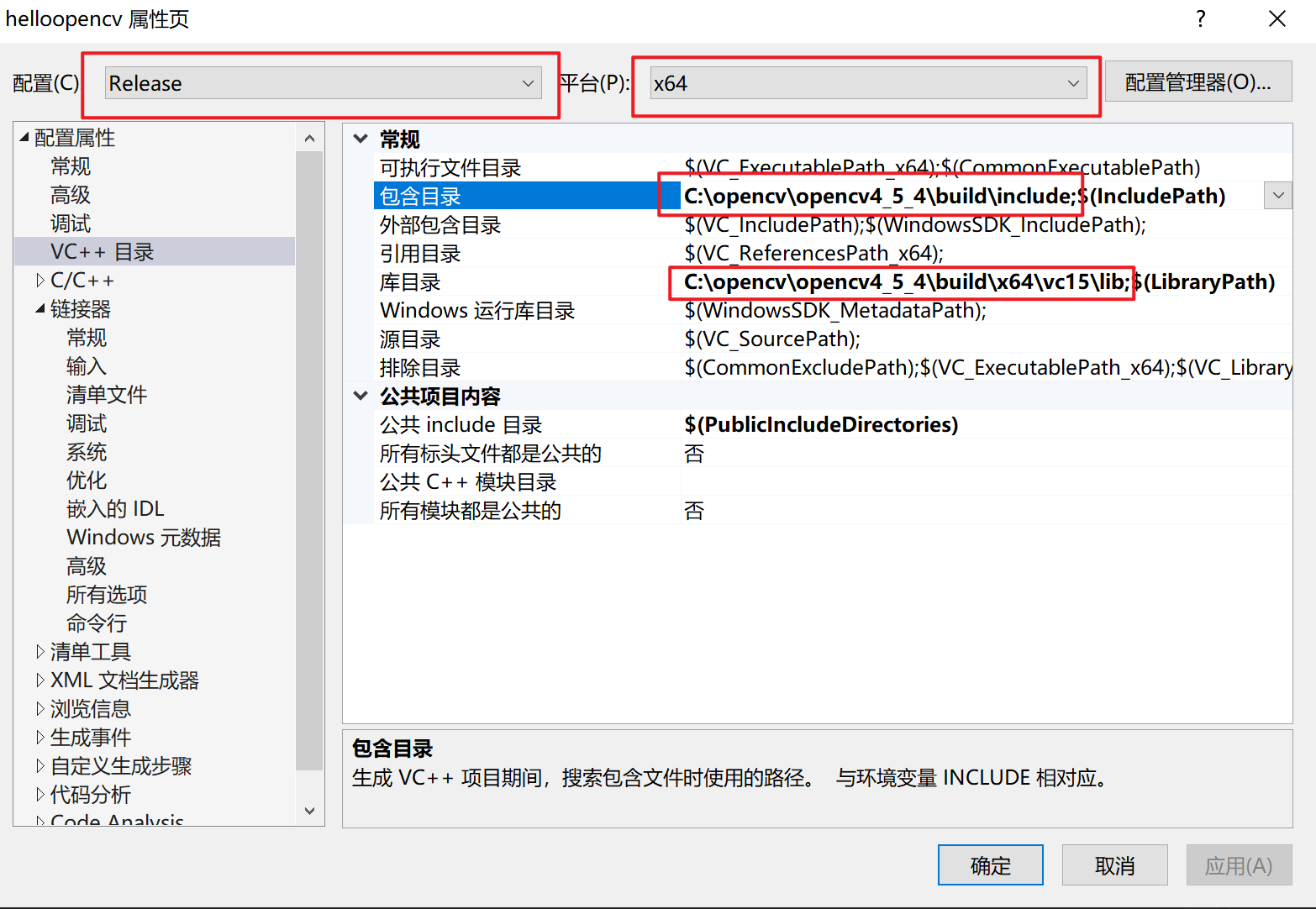

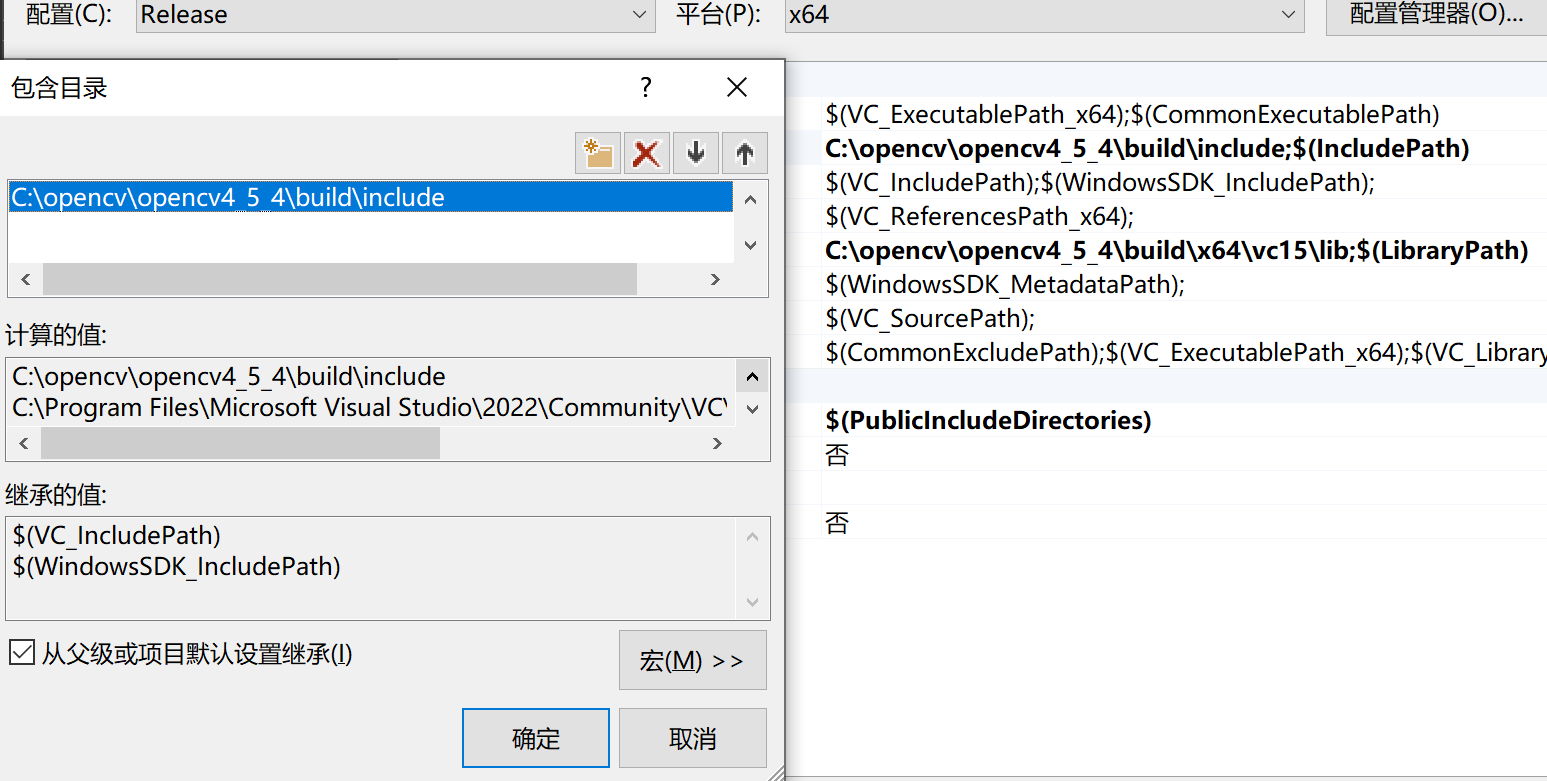

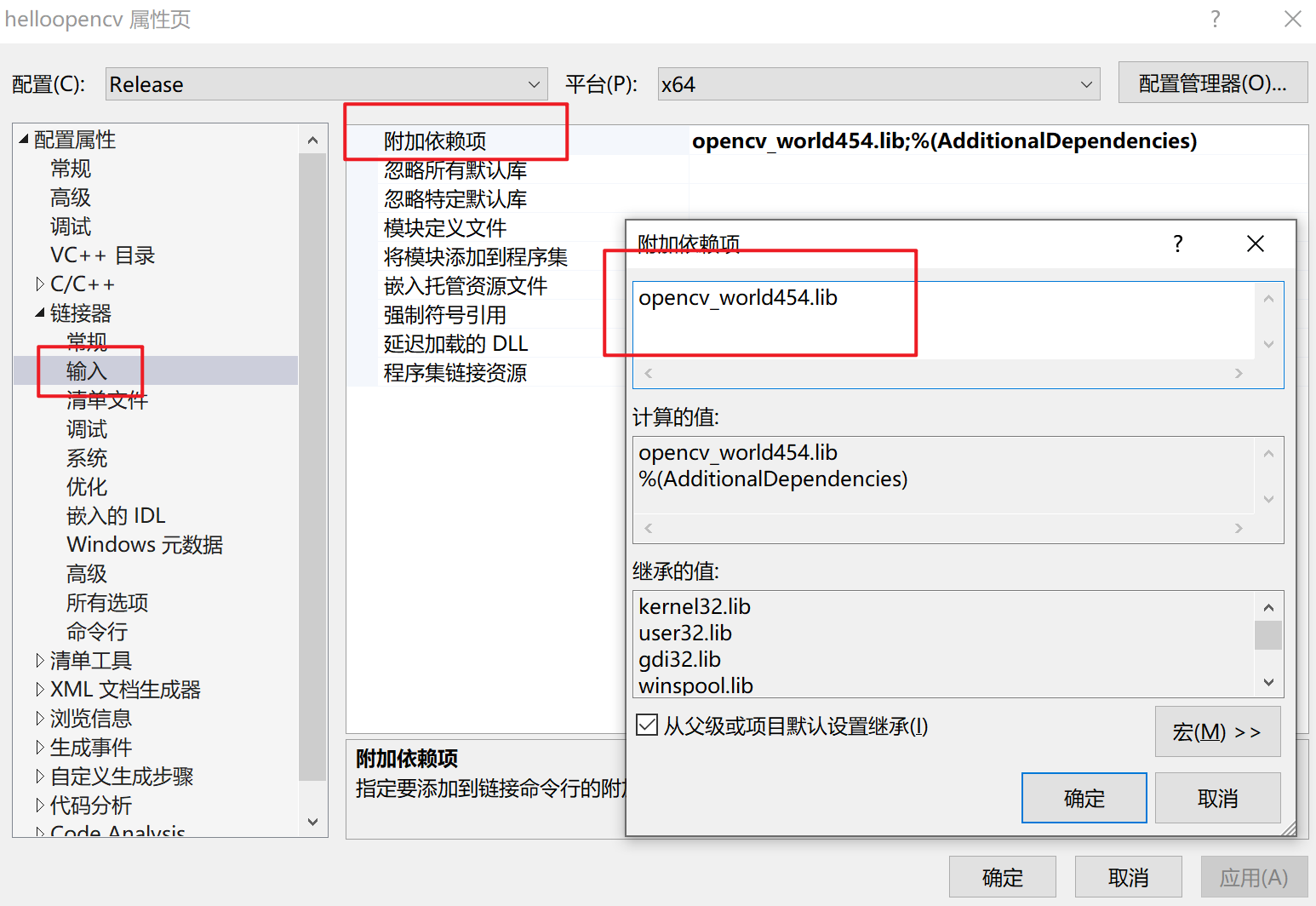

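Next, open the project's property pages and set the include directory, the library directory, and the library file name.

The actual paths depend on where you installed OpenCV. Mine are:

Include directory: C:\opencv\opencv4_5_4\build\include

Library directory: C:\opencv\opencv4_5_4\build\x64\vc15\lib

Library file: opencv_world454.lib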

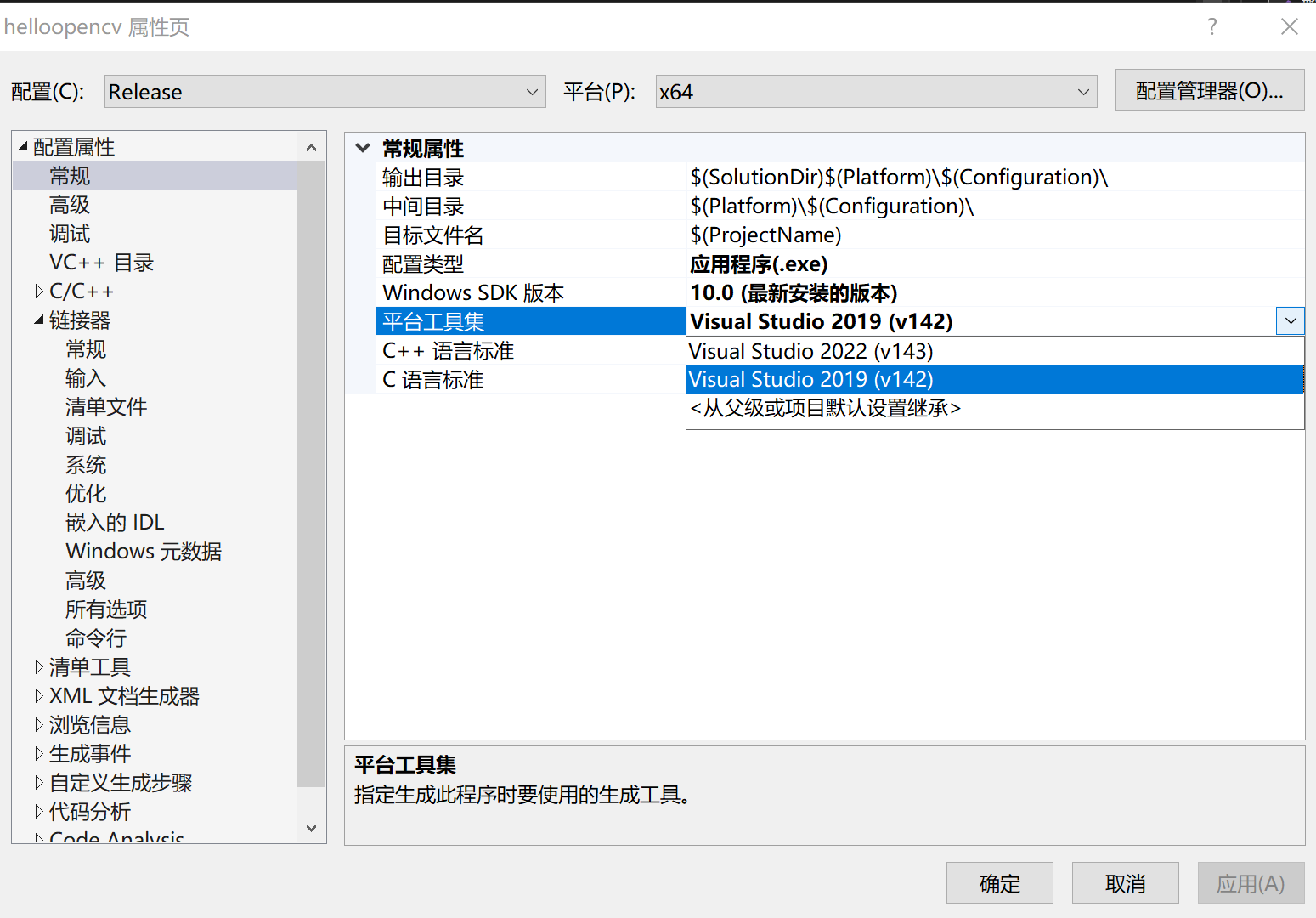

I installed VS2022 this time, whose default platform toolset is v143 (VS2022). It needs to be changed to v142 (VS2019) here.

// Code walkthrough

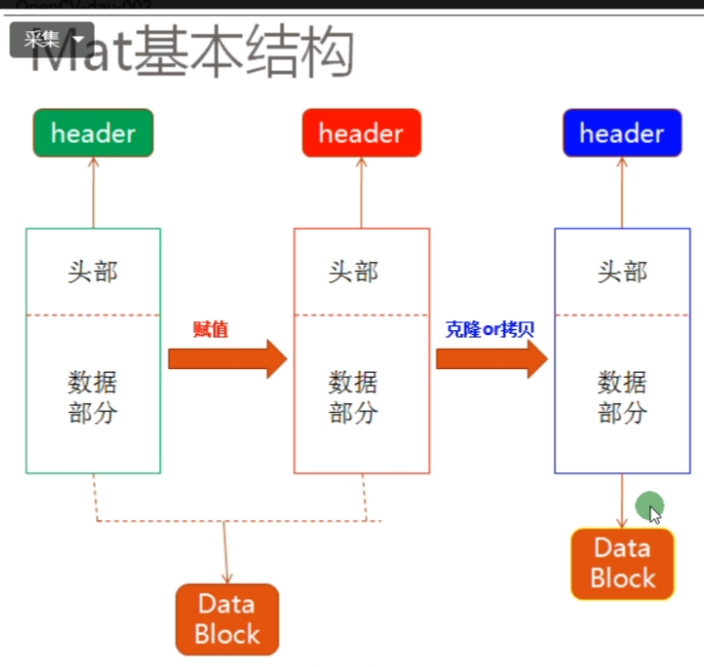

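The Mat structure: plain assignment copies only the header, so both Mat objects share the same pixel data. Only clone() or copyTo() makes a full copy of the pixels.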
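To make the header/data distinction concrete, here is a minimal sketch in plain C++ with no OpenCV dependency. `TinyMat` is a hypothetical stand-in for `cv::Mat`, not the real class: its implicit copy constructor copies only the header and shares the pixel buffer, while `clone()` allocates a new buffer. That is roughly how assignment and `clone()`/`copyTo()` behave on a real `Mat`.

```cpp
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

// Hypothetical stand-in for cv::Mat: a small header plus a reference-counted pixel buffer.
struct TinyMat {
    int rows = 0;
    int cols = 0;
    std::shared_ptr<std::vector<std::uint8_t>> data; // shared between shallow copies

    TinyMat(int r, int c, std::uint8_t fill)
        : rows(r), cols(c),
          data(std::make_shared<std::vector<std::uint8_t>>(
              static_cast<std::size_t>(r) * c, fill)) {}

    // Deep copy: allocates a new buffer, similar in spirit to cv::Mat::clone().
    TinyMat clone() const {
        TinyMat out(*this);                                            // header copy, data still shared
        out.data = std::make_shared<std::vector<std::uint8_t>>(*data); // now owns its own pixels
        return out;
    }

    std::uint8_t& at(int r, int c) {
        return (*data)[static_cast<std::size_t>(r) * cols + c];
    }
};
```

With this sketch, `TinyMat b = a;` followed by a write through `b` is visible through `a`, whereas a write to `a.clone()` is not.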

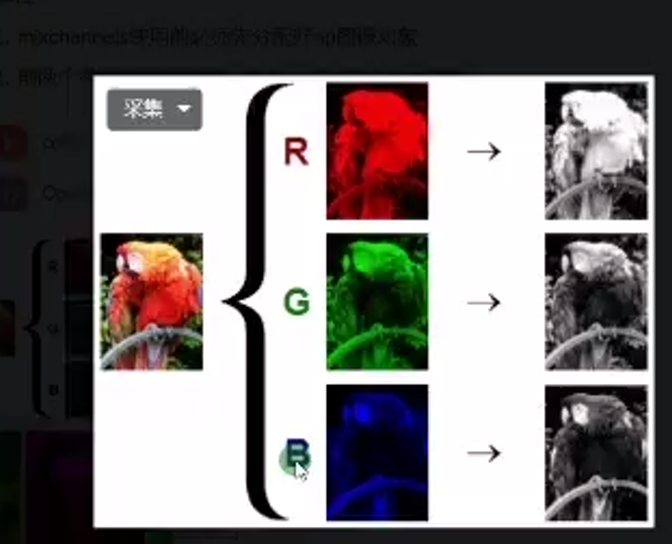

Basic image properties: width, height, and number of channels.
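These three numbers are enough to locate any sample in memory. The sketch below assumes a continuous, interleaved 8-bit image (for example BGR, BGR, ... row after row); `pixelOffset` and `imageBytes` are hypothetical helper names used only for illustration. In OpenCV terms, `cols` is the width, `rows` is the height, and `channels()` gives the channel count.

```cpp
#include <cstddef>

// Byte offset of channel `ch` of pixel (row, col) in a continuous,
// interleaved 8-bit image. cols is the width; channels is 1 for gray, 3 for BGR.
inline std::size_t pixelOffset(int row, int col, int ch, int cols, int channels) {
    return (static_cast<std::size_t>(row) * cols + col) * channels + ch;
}

// Total number of bytes taken by the pixel data of a rows x cols image.
inline std::size_t imageBytes(int rows, int cols, int channels) {
    return static_cast<std::size_t>(rows) * cols * channels;
}
```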

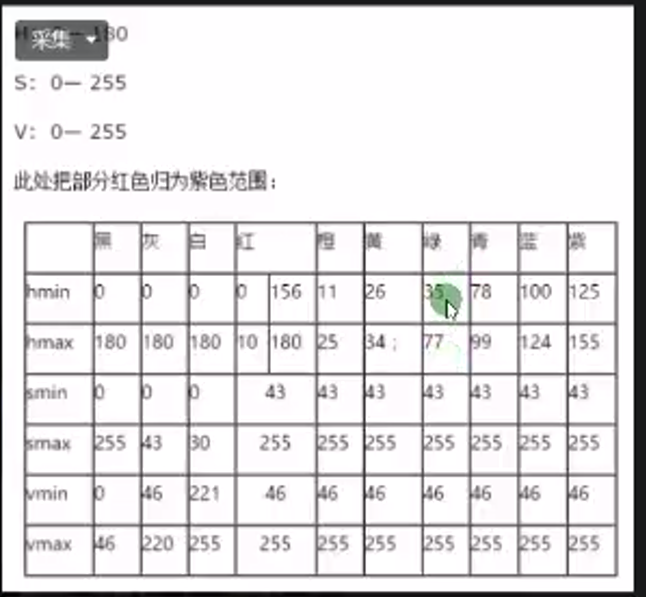

HSV color ranges
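As a rough illustration of where the ranges come from, the sketch below converts one 8-bit BGR pixel to HSV using the 8-bit convention, where hue is stored as degrees divided by 2 (0 to 179) and saturation and value span 0 to 255. `bgrToHsv8` is a hypothetical helper written for these notes, not an OpenCV function, and its rounding may differ slightly from `cvtColor`.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstdint>

// Convert one 8-bit BGR pixel to 8-bit HSV: H in [0, 180), S and V in [0, 255].
std::array<std::uint8_t, 3> bgrToHsv8(std::uint8_t b, std::uint8_t g, std::uint8_t r) {
    const double bf = b / 255.0, gf = g / 255.0, rf = r / 255.0;
    const double v = std::max({bf, gf, rf});
    const double mn = std::min({bf, gf, rf});
    const double delta = v - mn;

    double h = 0.0; // hue in degrees, 0 for gray pixels
    if (delta > 0.0) {
        if (v == rf)      h = 60.0 * (gf - bf) / delta;
        else if (v == gf) h = 120.0 + 60.0 * (bf - rf) / delta;
        else              h = 240.0 + 60.0 * (rf - gf) / delta;
        if (h < 0.0) h += 360.0;
    }
    const double s = (v > 0.0) ? delta / v : 0.0;

    const long h8 = std::lround(h / 2.0) % 180; // halve so that hue fits in 8 bits
    return {static_cast<std::uint8_t>(h8),
            static_cast<std::uint8_t>(std::lround(s * 255.0)),
            static_cast<std::uint8_t>(std::lround(v * 255.0))};
}
```

Pure green (B=0, G=255, R=0) comes out as H=60, S=255, V=255, which falls inside the green range (35, 43, 46) to (77, 255, 255) used with inRange in the code further down.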

Pixel type conversion and normalization

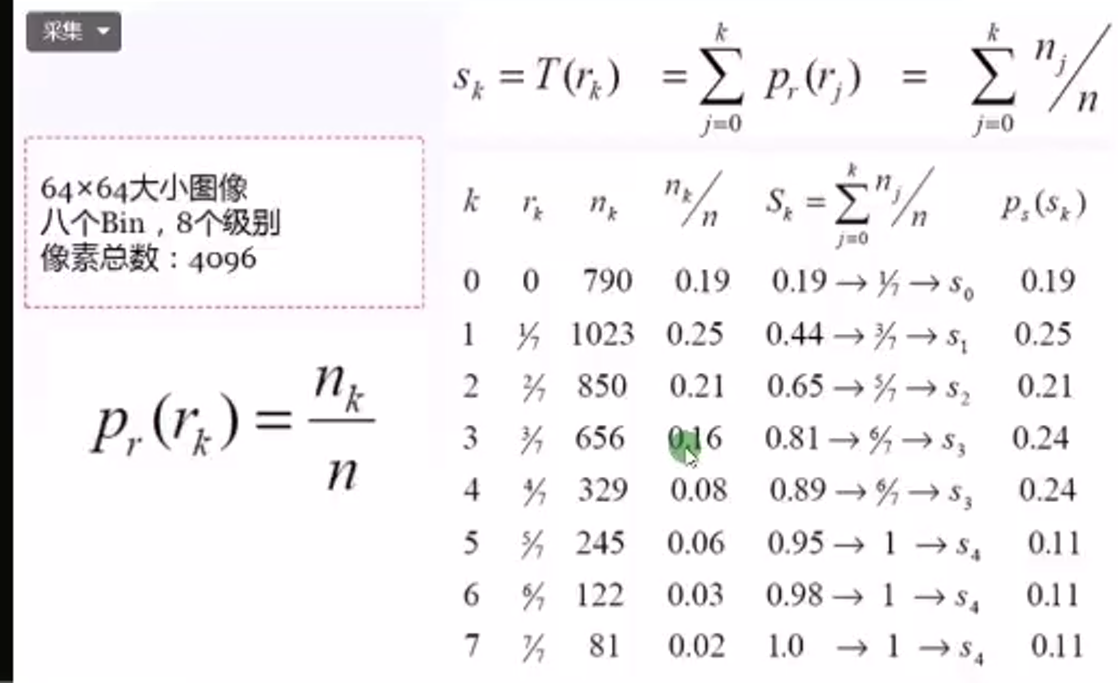

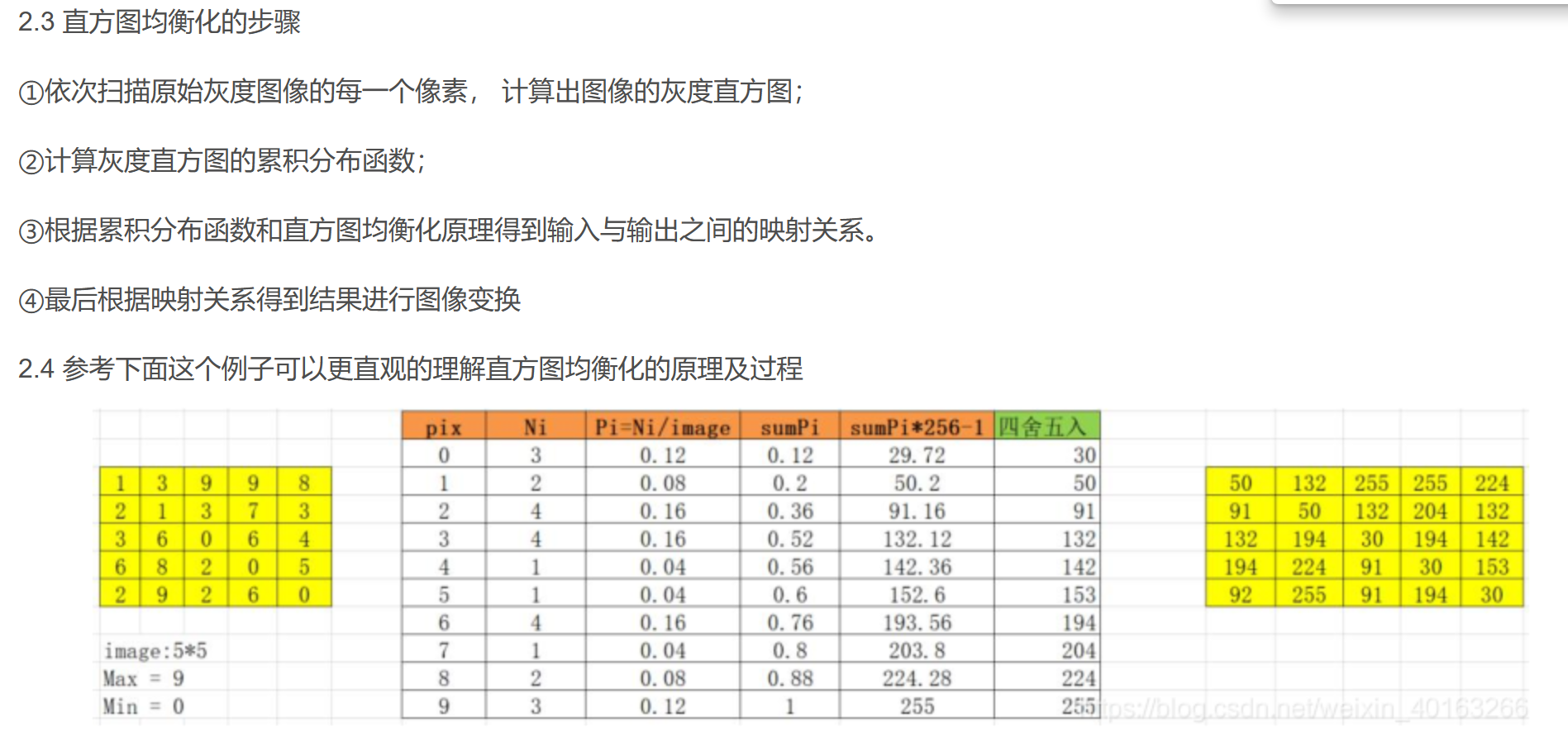

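// Histogram equalization -> enhances contrast, so that the elements of an image are easier to tell apart (for example, dark regions become less dark)

The gray-level histogram of an image describes how its gray levels are distributed and shows at a glance how much of the image each gray level occupies. It is a function of gray level that gives the number of pixels at that level: the horizontal axis is the gray level, and the vertical axis is how often that level occurs.

Histogram equalization is a method for enhancing image contrast. The main idea is to use the cumulative distribution function (CDF) to transform the histogram of an image into an approximately uniform distribution, which increases the contrast. To stretch the brightness range of the original image, a mapping function is needed that spreads the original pixel values evenly over the new histogram.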
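The mapping can be sketched in a few lines of plain C++. This is the textbook CDF-based formula applied to a flat 8-bit grayscale buffer; `equalize` is a hypothetical helper, and its rounding is not guaranteed to match `cv::equalizeHist` bit for bit.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Equalize an 8-bit grayscale image stored as a flat vector.
std::vector<std::uint8_t> equalize(const std::vector<std::uint8_t>& gray) {
    if (gray.empty()) return {};

    // 1. Histogram: number of pixels at each gray level.
    std::array<std::size_t, 256> hist{};
    for (std::uint8_t v : gray) ++hist[v];

    // 2. Cumulative distribution, plus its first non-zero value.
    std::array<std::size_t, 256> cdf{};
    std::size_t running = 0, cdfMin = 0;
    for (int i = 0; i < 256; ++i) {
        running += hist[i];
        cdf[i] = running;
        if (cdfMin == 0 && running > 0) cdfMin = running;
    }

    // 3. Mapping: stretch the CDF to [0, 255] with rounding.
    const std::size_t total = gray.size();
    if (total == cdfMin) return gray; // a single gray level: nothing to spread out
    const std::size_t denom = total - cdfMin;
    std::array<std::uint8_t, 256> lut{};
    for (int i = 0; i < 256; ++i) {
        lut[i] = (cdf[i] <= cdfMin)
                     ? 0
                     : static_cast<std::uint8_t>(((cdf[i] - cdfMin) * 255 + denom / 2) / denom);
    }

    // 4. Apply the lookup table to every pixel.
    std::vector<std::uint8_t> out(gray.size());
    for (std::size_t i = 0; i < gray.size(); ++i) out[i] = lut[gray[i]];
    return out;
}
```

For a low-contrast input such as {100, 100, 101, 102}, the three occupied levels are spread over the whole range, giving {0, 0, 128, 255}.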

Reference: "直方图均衡化的原理及实现" (Principle and implementation of histogram equalization), knowyourself1's blog on CSDN

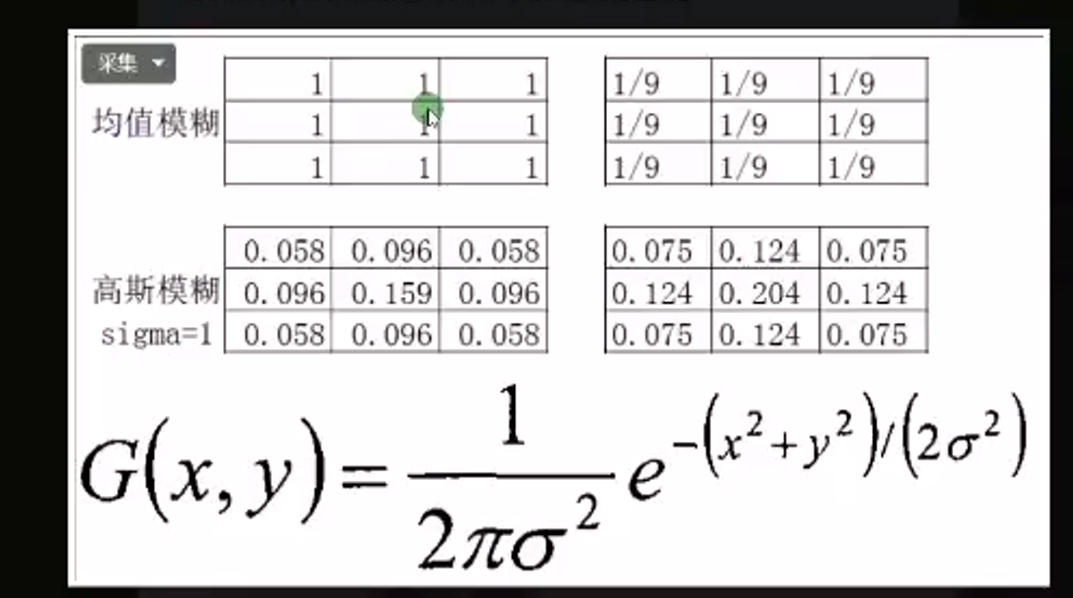

Linear convolution (box blur)
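A minimal sketch of a mean (box) filter on a single-channel image, in plain C++: every output pixel is the average of the ksize x ksize neighborhood around it, so all kernel coefficients are equal. `boxBlur` is a hypothetical helper. For simplicity it clamps coordinates at the border (replicate), which is an assumption of this sketch and differs from the default border mode of `cv::blur` (BORDER_REFLECT_101).

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Mean filter on a row-major, single-channel 8-bit image. ksize must be odd.
std::vector<std::uint8_t> boxBlur(const std::vector<std::uint8_t>& src,
                                  int rows, int cols, int ksize) {
    std::vector<std::uint8_t> dst(src.size());
    const int r = ksize / 2;
    const int n = ksize * ksize; // number of samples under the kernel
    for (int y = 0; y < rows; ++y) {
        for (int x = 0; x < cols; ++x) {
            int sum = 0;
            for (int dy = -r; dy <= r; ++dy) {
                for (int dx = -r; dx <= r; ++dx) {
                    // Clamp to the image so border pixels are replicated.
                    const int yy = std::min(std::max(y + dy, 0), rows - 1);
                    const int xx = std::min(std::max(x + dx, 0), cols - 1);
                    sum += src[yy * cols + xx];
                }
            }
            dst[y * cols + x] = static_cast<std::uint8_t>((sum + n / 2) / n); // rounded mean
        }
    }
    return dst;
}
```

A larger ksize averages over more neighbors, which is why a bigger kernel blurs more.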

Gaussian blur
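Unlike the box filter, the Gaussian kernel weights the center most and falls off with distance. The sketch below builds a normalized 1-D kernel in plain C++; `gaussianKernel1D` is a hypothetical helper, not OpenCV's `getGaussianKernel`. The 2-D kernel is the outer product of two such 1-D kernels, which is why a Gaussian blur can be applied as one horizontal pass followed by one vertical pass.

```cpp
#include <cmath>
#include <vector>

// Build a normalized 1-D Gaussian kernel. ksize must be odd and positive, sigma > 0.
std::vector<double> gaussianKernel1D(int ksize, double sigma) {
    std::vector<double> k(ksize);
    const int r = ksize / 2;
    double sum = 0.0;
    for (int i = 0; i < ksize; ++i) {
        const double x = i - r; // signed distance from the kernel center
        k[i] = std::exp(-(x * x) / (2.0 * sigma * sigma));
        sum += k[i];
    }
    for (double& v : k) v /= sum; // weights sum to 1, so overall brightness is preserved
    return k;
}
```

A larger sigma flattens the kernel and spreads weight to more distant pixels, which gives a stronger blur.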

Bilateral filtering (bilateral Gaussian blur): commonly used to remove noise while preserving edges.
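The edge-preserving behavior comes from multiplying two Gaussian weights: one on spatial distance and one on intensity difference. A 1-D sketch in plain C++ shows the mechanism. `bilateral1D` is a hypothetical helper, and its parameters only loosely correspond to the `sigmaSpace` and `sigmaColor` arguments of `bilateralFilter`.

```cpp
#include <cmath>
#include <vector>

// 1-D bilateral filter: weighted mean of neighbors, where the weight is
// (spatial Gaussian on distance) x (range Gaussian on intensity difference).
std::vector<double> bilateral1D(const std::vector<double>& src, int radius,
                                double sigmaSpace, double sigmaColor) {
    const int n = static_cast<int>(src.size());
    std::vector<double> dst(src.size());
    for (int i = 0; i < n; ++i) {
        double wsum = 0.0, acc = 0.0;
        for (int d = -radius; d <= radius; ++d) {
            const int j = i + d;
            if (j < 0 || j >= n) continue; // ignore samples outside the signal
            const double diff = src[j] - src[i];
            const double w =
                std::exp(-(d * d) / (2.0 * sigmaSpace * sigmaSpace)) *
                std::exp(-(diff * diff) / (2.0 * sigmaColor * sigmaColor));
            wsum += w;
            acc += w * src[j];
        }
        dst[i] = acc / wsum; // wsum >= 1: the center sample always has weight 1
    }
    return dst;
}
```

Across a sharp step (say 0 to 100 with sigmaColor = 10) the range weight is practically zero, so the two sides do not mix and the edge survives, while small fluctuations on either side are still averaged out.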

//

// helloopencv.cpp : This file contains the "main" function. Program execution begins and ends here.
//

#include <cmath>
#include <iostream>
#include <vector>
#include <opencv2/opencv.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/dnn.hpp> // DNN module, used to load the face detection model

#define mydebug std::cout << __func__ << "\t" << __LINE__ << std::endl;
#define mydebugMsg(msg) std::cout << __func__ << "\t" << __LINE__ << "\t" << #msg << ":" << msg << std::endl;

using namespace cv;

// Color space conversion
//#define demofunc demoImgTrance
void demoImgTrance(Mat& img)
{
    Mat gray, hsv;
    cvtColor(img, hsv, COLOR_BGR2HSV); // H: hue, 0-180; S: saturation; V: value (brightness)
    cvtColor(img, gray, COLOR_BGR2GRAY);
    imshow("hsv", hsv);
    imshow("gray", gray);
    imwrite("E:/project/09-opencv_pytorch/test_hsv.jpg", hsv);
    imwrite("E:/project/09-opencv_pytorch/test_gray.jpg", gray);
}

// Creating and copying Mat objects
//#define demofunc demoMat
void demoMat(Mat& img)
{
    Mat m1, m2;
    m1 = img.clone();
    img.copyTo(m2);
    // Create a blank image
    Mat m3 = Mat::zeros(800, 800, CV_8UC3);
    // Mat m3 = Mat::ones(Size(8,8), CV_8UC3); // pitfall: with a multi-channel type, ones() sets only the first channel to 1
    // Assign a Scalar to set every channel, e.g. m3 = Scalar(127,127,127);
    //std::cout << m3 << std::endl;
    m3 = Scalar(0, 255, 0);
    std::cout << "w:" << m3.cols << std::endl;
    std::cout << "h:" << m3.rows << std::endl;
    std::cout << "channel:" << m3.channels() << std::endl;
    imshow("m3", m3);
}

// Reading and writing pixels (inverts the image)
//#define demofunc demoModifyPix
void demoModifyPix(Mat& img)
{
    int w = img.cols;          // width = number of columns
    int h = img.rows;          // height = number of rows
    int dims = img.channels(); // number of channels
    // Version 1: at<>() access
    //for (int row = 0; row < h; row++)
    //{
    //    for (int col = 0; col < w; col++) {
    //        if (dims == 1) { // grayscale
    //            int pv = img.at<uchar>(row, col);
    //            img.at<uchar>(row, col) = 255 - pv;
    //        }
    //        else if (dims == 3) {
    //            Vec3b bgr = img.at<Vec3b>(row, col);
    //            img.at<Vec3b>(row, col)[0] = 255 - bgr[0];
    //            img.at<Vec3b>(row, col)[1] = 255 - bgr[1];
    //            img.at<Vec3b>(row, col)[2] = 255 - bgr[2];
    //        }
    //    }
    //}
    // Version 2: pointer access, faster and more convenient
    for (int row = 0; row < h; row++)
    {
        for (int col = 0; col < w; col++) {
            uchar* current = img.ptr<uchar>(row, col);
            // saturate_cast clamps the value to the uchar range [0, 255].
            // Indexing is used instead of "*current++ = f(*current)", whose
            // evaluation order is unspecified before C++17.
            if (dims == 1) { // grayscale
                current[0] = saturate_cast<uchar>(255 - current[0]);
            }
            else if (dims == 3) {
                current[0] = saturate_cast<uchar>(255 - current[0]);
                current[1] = saturate_cast<uchar>(255 - current[1]);
                current[2] = saturate_cast<uchar>(255 - current[2]);
            }
        }
    }
    namedWindow("img", WINDOW_FREERATIO);
    imshow("img", img);
}

// Arithmetic on pixels
//#define demofunc demoModifyOperator
void demoModifyOperator(Mat& img)
{
    namedWindow("img2", WINDOW_FREERATIO);
    Mat dst;
    // Addition and subtraction work directly with a Scalar
    //dst = img / Scalar(50, 50, 50);
    //imshow("img2", dst);
    // Multiplication and division need a matrix of the same size and type
    Mat m = Mat::zeros(img.size(), img.type());
    m = Scalar(2, 2, 2);
    //multiply(img, m, dst); // dedicated function for multiplication
    // Likewise: add, divide, subtract
    dst = img / m;
    imshow("img2", dst);
}

Mat demoTrackingBarm, demoTrackingBardst, demoTrackingBarsrc;
int demoTrackingBarcurrent;

static void onDemoTrackingBar(int, void*)
{
    demoTrackingBarm = Scalar(demoTrackingBarcurrent, demoTrackingBarcurrent, demoTrackingBarcurrent);
    add(demoTrackingBarsrc, demoTrackingBarm, demoTrackingBardst);
    imshow("demoTrackingBar", demoTrackingBardst);
}

static void onDemoTrackingBar2(int b, void* _img)
{
    Mat img = *(Mat*)_img;
    Mat m = Mat::zeros(img.size(), img.type());
    Mat dst = Mat::zeros(img.size(), img.type());
    m = Scalar(b, b, b);
    add(img, m, dst);
    imshow("demoTrackingBar", dst);
}

// Adjust brightness: add the same value to every pixel
static void onDemoTrackingBar_lightness(int b, void* _img)
{
    Mat img = *(Mat*)_img;
    Mat m = Mat::zeros(img.size(), img.type());
    Mat dst = Mat::zeros(img.size(), img.type());
    // dst = src1*alpha + src2*beta + gamma
    addWeighted(img, 1.0, m, 0.0, b, dst);
    imshow("demoTrackingBar", dst);
}

// Adjust contrast: scale the pixels so that differences become larger
static void onDemoTrackingBar_contrast(int b, void* _img)
{
    Mat img = *(Mat*)_img;
    Mat m = Mat::zeros(img.size(), img.type());
    Mat dst = Mat::zeros(img.size(), img.type());
    double contrast = b / 100.0;
    // dst = src1*alpha + src2*beta + gamma
    addWeighted(img, contrast, m, 0.0, 0, dst);
    imshow("demoTrackingBar", dst);
}

// Trackbars are handy when tuning an image
//#define demofunc demoTrackingBar
void demoTrackingBar(Mat& img)
{
    //std::cout << __func__ << std::endl;
    namedWindow(__func__, WINDOW_FREERATIO);
    //imshow(__func__, dst);
    demoTrackingBarm = Mat::zeros(img.size(), img.type());
    demoTrackingBardst = Mat::zeros(img.size(), img.type());
    demoTrackingBarsrc = img;
    int maxlitness = 100;
    (void)maxlitness; // only used by the commented-out variants below
    //createTrackbar("demobar", __func__, &demoTrackingBarcurrent, maxlitness, onDemoTrackingBar);
    //createTrackbar("demobar", __func__, &demoTrackingBarcurrent, maxlitness, onDemoTrackingBar2, (void*)&img);
    //onDemoTrackingBar(50, 0);
    // static: the trackbars keep these pointers after this function has returned
    static int contrast = 50, lightness = 50;
    createTrackbar("contrast", __func__, &contrast, 200, onDemoTrackingBar_contrast, (void*)&img);
    createTrackbar("lightness", __func__, &lightness, 100, onDemoTrackingBar_lightness, (void*)&img);
    onDemoTrackingBar_lightness(50, (void*)&img);
}

// Keyboard input
//#define demofunc demoKey
void demoKey(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    Mat dst = Mat::zeros(img.size(), img.type());
    while (true) {
        int c = waitKey(100);
        mydebugMsg(c);
        switch (c)
        {
        case 27: { mydebug; return; } // Esc
        case 49: { // key 1
            mydebug;
            cvtColor(img, dst, COLOR_BGR2HSV);
        } break;
        case 50: { // key 2
            mydebug;
            cvtColor(img, dst, COLOR_BGR2GRAY);
        } break;
        default:
            break;
        }
        imshow(__func__, dst);
    }
}

// Color maps
//#define demofunc demoColorStyle
void demoColorStyle(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    Mat dst = Mat::zeros(img.size(), img.type());
    int index = 0;
    while (true) {
        int c = waitKey(100);
        if (c == 27) {
            return;
        }
        mydebugMsg(index);
        applyColorMap(img, dst, index % 19); // can also be used to pseudo-color a grayscale image
        index++;
        imshow(__func__, dst);
    }
}

// Bitwise AND / OR / NOT / XOR
//#define demofunc demoBitwise
void demoBitwise(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    Mat m1 = Mat::zeros(Size(256, 256), CV_8UC3);
    Mat m2 = Mat::zeros(Size(256, 256), CV_8UC3);
    // Draw rectangles: thickness < 0 fills the shape, > 0 draws the outline.
    // LINE_8 is an 8-connected line; use LINE_AA for anti-aliasing.
    rectangle(m1, Rect(100, 100, 80, 80), Scalar(255, 255, 0), -1, LINE_8, 0);
    rectangle(m2, Rect(150, 150, 80, 80), Scalar(0, 255, 255), -1, LINE_8, 0);
    imshow("m1", m1);
    imshow("m2", m2);
    Mat dst;
    //bitwise_and(m1, m2, dst);
    //bitwise_or(m1, m2, dst);
    //bitwise_not(m1, dst);
    //dst = ~img;
    bitwise_xor(m1, m2, dst);
    imshow(__func__, dst);
}

// Splitting and merging channels
//#define demofunc demoChannelSplitMerge
void demoChannelSplitMerge(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    //imshow(__func__, dst);
    std::vector<Mat> mv;
    split(img, mv);
    imshow("blue", mv[0]);
    imshow("green", mv[1]);
    imshow("red", mv[2]);
    Mat dst;
    mv[0] = 0;
    mv[2] = 0;
    merge(mv, dst); // only the green channel is left
    imshow("merge", dst);
    int from_to[] = { 0,2, 1,1, 2,0 }; // pairs of (source channel, destination channel)
    mixChannels(&img, 1, &dst, 1, from_to, 3);
    imshow("mixChannels", dst);
}

// Color space conversion and color range extraction
// (e.g. shoot against a green screen, then swap in any background)
//#define demofunc demoInrangeCvtcolor
void demoInrangeCvtcolor(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    //imshow(__func__, dst);
    Mat hsv;
    cvtColor(img, hsv, COLOR_BGR2HSV);
    Mat mask;
    // Select the pixels whose HSV values fall inside the given range; the result goes into mask
    inRange(hsv, Scalar(35, 43, 46), Scalar(77, 255, 255), mask);
    imshow("mask1", mask);
    Mat redback = Mat::zeros(img.size(), img.type());
    redback = Scalar(40, 40, 200); // background color
    imshow("redback", redback);
    bitwise_not(mask, mask); // invert the mask so that it selects the subject
    imshow("mask2", mask);
    img.copyTo(redback, mask); // copy only the pixels of img where mask is non-zero onto redback
    imshow("redback2", redback);
}

// Pixel statistics: minimum, maximum, mean, standard deviation.
// Mean and standard deviation can help decide which channel is best for matting:
// the smaller the deviation, the more uniform the colors.
//#define demofunc demoPixelStatistic
void demoPixelStatistic(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    //imshow(__func__, dst);
    double minv, maxv;
    Point minloc, maxloc; // locations of the minimum and maximum
    std::vector<Mat> mv;
    split(img, mv);
    for (size_t i = 0; i < mv.size(); i++) {
        minMaxLoc(mv[i], &minv, &maxv, &minloc, &maxloc, Mat());
        std::cout << "No.channel:" << i << "\t minv:" << minv << "\t maxv:" << maxv
                  << "\t minloc:" << minloc << "\t maxloc:" << maxloc << std::endl;
    }
    Mat mean, stddev;
    meanStdDev(img, mean, stddev);
    std::cout << "img mean:\n" << mean << std::endl;
    std::cout << "img stddev:\n" << stddev << std::endl;
    Mat redback = Mat::zeros(img.size(), img.type());
    redback = Scalar(40, 40, 200); // background color
    imshow("redback", redback);
    meanStdDev(redback, mean, stddev);
    std::cout << "mean:\n" << mean << std::endl;
    std::cout << "stddev:\n" << stddev << std::endl;
}

// Drawing and filling shapes
//#define demofunc demoDrawing
void demoDrawing(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    //imshow(__func__, dst);
    Mat m = Mat::zeros(img.size(), img.type());
    //m = Scalar(40, 40, 200); // background color
    rectangle(m, Rect(50, 50, 100, 100), Scalar(0, 0, 255), 2, 8, 0);
    circle(m, Point(100, 100), 50, Scalar(0, 255, 0), -1, 8, 0);
    line(m, Point(100, 100), Point(200, 200), Scalar(255, 0, 0), 10, LINE_AA, 0); // LINE_AA gives better anti-aliasing
    // Ellipse
    ellipse(m, RotatedRect(Point(100, 100), Size(100, 200), 30.0), Scalar(255, 255, 0), 5, 8);
    Mat dst;
    // dst = src1*alpha + src2*beta + gamma
    addWeighted(img, 0.7, m, 0.3, 0, dst);
    imshow(__func__, dst);
}

// Random lines
//#define demofunc demoDrawingRandam
void demoDrawingRandam(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    //imshow(__func__, dst);
    Mat canvas = Mat::zeros(img.size(), img.type());
    RNG rng(12345);
    int w = canvas.cols;
    int h = canvas.rows;
    while (true) {
        int c = waitKey(10);
        if (c == 27) {
            return;
        }
        //canvas = Scalar(0, 0, 0);
        line(canvas,
            Point(rng.uniform(0, w), rng.uniform(0, h)),
            Point(rng.uniform(0, w), rng.uniform(0, h)),
            Scalar(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255)), 1, 8);
        imshow(__func__, canvas);
    }
}

// Drawing and filling polygons
//#define demofunc demoDrawingPolyline
void demoDrawingPolyline(Mat& img)
{
    //namedWindow(__func__, WINDOW_FREERATIO);
    //imshow(__func__, dst);
    Mat canvas = Mat::zeros(512, 512, CV_8UC3);
    std::vector<Point> pts;
    pts.push_back(Point(100, 100));
    pts.push_back(Point(350, 100));
    pts.push_back(Point(450, 280));
    pts.push_back(Point(320, 450));
    pts.push_back(Point(80, 400));
    // Doing it in two calls is cumbersome; drawContours can be used instead
    //fillPoly(canvas, pts, Scalar(0, 255, 0), 8, 0); // fill the polygon
    //polylines(canvas, pts, true, Scalar(0, 0, 255), 20, LINE_AA, 0); // draw the polygon outline
    std::vector<std::vector<Point>> contours;
    contours.push_back(pts);
    // drawContours can draw several polygons at once
    // contourIdx < 0 draws all of them; >= 0 draws the polygon at that index
    drawContours(canvas, contours, -1, Scalar(0, 0, 255), -1);
    imshow(__func__, canvas);
}

static Point sp(-1, -1);
static Point ep(-1, -1);
static Mat temp;

static void onDemoMouse(int event, int x, int y, int flags, void* userdata)
{
    Mat img = *(Mat*)userdata;
    if (event == EVENT_LBUTTONDOWN) {
        sp.x = x;
        sp.y = y;
        mydebugMsg(sp);
    }
    else if (event == EVENT_LBUTTONUP) {
        ep.x = x;
        ep.y = y;
        mydebugMsg(ep);
        temp.copyTo(img);
        // Rect(pt1, pt2) normalizes the corners, so dragging up or left also works;
        // clip to the image so that img(box) cannot go out of bounds
        Rect box(sp, ep);
        box &= Rect(0, 0, img.cols, img.rows);
        if (box.width > 0 && box.height > 0) {
            imshow("demoMouse ROI", img(box));
            rectangle(img, box, Scalar(0, 0, 255), 3, 8, 0);
        }
        imshow("demoMouse", img);
        sp.x = -1;
        sp.y = -1;
    }
    else if (event == EVENT_MOUSEMOVE) {
        if (sp.x >= 0 && sp.y >= 0) { // a drag is in progress
            ep.x = x;
            ep.y = y;
            mydebugMsg(ep);
            temp.copyTo(img);
            rectangle(img, Rect(sp, ep), Scalar(0, 0, 255), 3, 8, 0);
            imshow("demoMouse", img);
        }
    }
}

// Using the mouse
//#define demofunc demoMouse
void demoMouse(Mat& img)
{
    // The window must exist before setMouseCallback is called; without this line the program crashes
    namedWindow(__func__, WINDOW_AUTOSIZE);
    //imshow(__func__, dst);
    //Mat canvas = Mat::zeros(512, 512, CV_8UC3);
    setMouseCallback("demoMouse", onDemoMouse, (void*)&img);
    imshow("demoMouse", img);
    temp = img.clone();
}

// Pixel type conversion and normalization: scale pixel values into [0, 1]
// Normalization types: NORM_L1, NORM_L2, NORM_MINMAX, NORM_INF
//#define demofunc demoNorm
void demoNorm(Mat& img)
{
    //namedWindow(__func__, WINDOW_AUTOSIZE);
    //imshow(__func__, dst);
    Mat dst;
    mydebugMsg(img.type());
    img.convertTo(dst, CV_32F); // convert the pixel data to float, common in deep learning
    // CV_8UC3 -> CV_32FC3
    mydebugMsg(dst.type());
    imshow("dst_32f", dst); // the float values are still 0-255 here, so the image does not display correctly
    Mat norm;
    normalize(dst, norm, 1.0, 0, NORM_MINMAX); // normalize to [0, 1]; subtracting 0.5 afterwards would center the data
    mydebugMsg(norm.type());
    imshow("norm_32f", norm); // once the values are in [0, 1], the float image displays correctly
    // To convert back: multiply by 255, then convert to CV_8UC3
    Mat m = Mat::zeros(norm.size(), norm.type());
    m = Scalar(255, 255, 255);
    Mat _dst32fto8, dst32fto8;
    multiply(norm, m, _dst32fto8); // dedicated function for multiplication
    _dst32fto8.convertTo(dst32fto8, CV_8U);
    imshow("dst32fto8", dst32fto8);
}

// Resizing and interpolation (every geometric transform involves interpolation)
// Common methods: nearest neighbor (fastest), bilinear (the default), bicubic
//#define demofunc demoResize
void demoResize(Mat& img)
{
    //namedWindow(__func__, WINDOW_AUTOSIZE);
    //imshow(__func__, dst);
    Mat zoomin, zoomout;
    int w = img.cols;
    int h = img.rows;
    resize(img, zoomout, Size(w / 2, h / 2), 0, 0, INTER_LINEAR); // shrink to half size
    imshow("zoomout", zoomout);
    resize(img, zoomin, Size(static_cast<int>(w * 1.5), static_cast<int>(h * 1.5)), 0, 0, INTER_LINEAR); // enlarge 1.5x
    imshow("zoomin", zoomin);
}

// Flipping
//#define demofunc demoFlip
void demoFlip(Mat& img)
{
    //namedWindow(__func__, WINDOW_AUTOSIZE);
    //imshow(__func__, dst);
    Mat dst;
    flip(img, dst, -1); // 0: flip vertically; 1: flip horizontally; -1: both (rotate by 180 degrees)
    imshow(__func__, dst);
}

// Rotation and translation, including recomputing the output size after rotation
//#define demofunc demoRotate
void demoRotate(Mat& img)
{
    //namedWindow(__func__, WINDOW_AUTOSIZE);
    //imshow(__func__, dst);
    Mat dst, m, m2;
    int w = img.cols;
    int h = img.rows;
    // scale is the zoom factor; set it to 1 when no scaling is wanted
    m = getRotationMatrix2D(Point2f(w / 2, h / 2), 45.0, 1);
    warpAffine(img, dst, m, img.size(), INTER_LINEAR, 0, Scalar(255, 0, 0));
    imshow("demoRotate m1", dst); // output keeps the original size, so the corners are cut off
    m2 = getRotationMatrix2D(Point2f(w / 2, h / 2), 150.0, 1);
    double cosv = std::abs(m2.at<double>(0, 0));
    double sinv = std::abs(m2.at<double>(0, 1));
    int nw = static_cast<int>(cosv * w + sinv * h); // width of the rotated bounding box
    int nh = static_cast<int>(sinv * w + cosv * h); // height of the rotated bounding box
    m2.at<double>(0, 2) += (nw / 2 - w / 2); // shift so the image stays centered on the new canvas
    m2.at<double>(1, 2) += (nh / 2 - h / 2);
    warpAffine(img, dst, m2, Size(nw, nh), INTER_LINEAR, 0, Scalar(0, 255, 0));
    imshow("demoRotate m2", dst); // the canvas is enlarged, so the whole rotated image is visible
}

// Opening a camera or a video file
//#define demofunc demoCamera
void demoCamera(Mat& img)
{
    //namedWindow(__func__, WINDOW_AUTOSIZE);
    //imshow(__func__, dst);
    //VideoCapture capture(0); // open the camera
    VideoCapture capture("./test.mp4"); // open a video file
    if (!capture.isOpened()) {
        std::cout << "could not open video source\n";
        return;
    }
    Mat frame;
    while (true) {
        int c = waitKey(10);
        if (c == 27) {
            break;
        }
        capture.read(frame);
        if (frame.empty()) { // end of the video or read failure
            break;
        }
        //todo
        imshow(__func__, frame);
    }
    capture.release();
}

// Processing and saving video
// SD: standard definition; HD: high definition, 720p / 1080p; UHD: ultra-high definition; Blu-ray
// In principle, video files saved by OpenCV should stay under 2 GB
// To process audio and video together, use ffmpeg
//#define demofunc demoVideo
void demoVideo(Mat& img)
{
    //namedWindow(__func__, WINDOW_AUTOSIZE);
    //imshow(__func__, dst);
    VideoCapture capture(0); // open camera 0 (pass a file path instead to open a video file)
    if (!capture.isOpened()) {
        std::cout << "could not open video source\n";
        return;
    }
    int fw = static_cast<int>(capture.get(CAP_PROP_FRAME_WIDTH));
    int fh = static_cast<int>(capture.get(CAP_PROP_FRAME_HEIGHT));
    int count = static_cast<int>(capture.get(CAP_PROP_FRAME_COUNT)); // total number of frames (only meaningful for files)
    double fps = capture.get(CAP_PROP_FPS); // frame rate: frames per second
    int fourcc = static_cast<int>(capture.get(CAP_PROP_FOURCC)); // codec identifier
    mydebugMsg(fw);
    mydebugMsg(fh);
    mydebugMsg(count);
    mydebugMsg(fps);
    mydebugMsg(fourcc);
    VideoWriter writer("E:/project/09-opencv_pytorch/video2.mp4", fourcc, 25, Size(fw, fh), true);
    Mat frame;
    while (true) {
        int c = waitKey(10);
        if (c == 27) {
            break;
        }
        capture.read(frame);
        if (frame.empty()) { // end of the video or read failure
            break;
        }
        writer.write(frame);
        //todo
        imshow(__func__, frame);
    }
    capture.release();
    writer.release();
}

// Histogram: statistics of the pixel values in 0-255
// The overall shape of the histogram stays the same after translation, rotation, or scaling
// A histogram discards position information, so it cannot represent a complete image
//#define demofunc demoHistogram
void demoHistogram(Mat& img)
{
    //namedWindow(__func__, WINDOW_AUTOSIZE);
    //imshow(__func__, dst);
    std::vector<Mat> bgr_plane;
    split(img, bgr_plane);
    // Parameters
    const int channels[1] = { 0 };
    const int bins[1] = { 256 };
    float hranges[2] = { 0, 256 }; // the upper bound is exclusive
    const float* ranges[1] = { hranges };
    Mat b_hist, g_hist, r_hist;
    // Compute the histogram of each of the B, G, R channels
    /*
    calcHist parameters:
    - images: pointer to the input images;
    - nimages: number of input images;
    - channels: which channels to compute the histogram for;
    - mask: must be an 8-bit (CV_8U) array of the same size as the images;
    - hist: the output histogram;
    - dims: dimensionality of the output histogram (one per entry in channels);
    - histSize: number of bins in each dimension (if you picture the histogram as bars, the number of bars);
    - ranges: the value range that is counted;
    - uniform=true: whether the bins are uniformly spaced (all of equal width);
    - accumulate=false: with several images, whether counts are accumulated across them;
    */
    calcHist(&bgr_plane[0], 1, channels, Mat(), b_hist, 1, bins, ranges);
    calcHist(&bgr_plane[1], 1, channels, Mat(), g_hist, 1, bins, ranges);
    calcHist(&bgr_plane[2], 1, channels, Mat(), r_hist, 1, bins, ranges);
    // Display
    int hist_w = 512;
    int hist_h = 400;
    int bin_w = cvRound((double)hist_w / bins[0]);
    Mat histimg = Mat::zeros(hist_h, hist_w, CV_8UC3);
    // Normalize the counts to the range [0, histimg.rows]
    /*
    normalize parameters:
    - src: input array;
    - dst: output array, same size as the input;
    - alpha: the norm value to normalize to, or the lower bound in range normalization;
    - beta: the upper bound in range normalization, so it only matters for NORM_MINMAX;
    - norm_type: which formula is used for normalization;
    - dtype: when negative, the output has the same size, depth, and channel count as the input;
             when positive, only the depth differs and is given by dtype;
    - mask: selects a region of interest; only that region is processed.
    */
    normalize(b_hist, b_hist, 0, histimg.rows, NORM_MINMAX, -1, Mat());
    normalize(g_hist, g_hist, 0, histimg.rows, NORM_MINMAX, -1, Mat());
    normalize(r_hist, r_hist, 0, histimg.rows, NORM_MINMAX, -1, Mat());
    for (int i = 1; i < bins[0]; i++) {
        line(histimg, Point(bin_w * (i - 1), hist_h - cvRound(b_hist.at<float>(i - 1))),
            Point(bin_w * (i), hist_h - cvRound(b_hist.at<float>(i))), Scalar(255, 0, 0), 2, 8, 0);
        line(histimg, Point(bin_w * (i - 1), hist_h - cvRound(g_hist.at<float>(i - 1))),
            Point(bin_w * (i), hist_h - cvRound(g_hist.at<float>(i))), Scalar(0, 255, 0), 2, 8, 0);
        line(histimg, Point(bin_w * (i - 1), hist_h - cvRound(r_hist.at<float>(i - 1))),
            Point(bin_w * (i), hist_h - cvRound(r_hist.at<float>(i))), Scalar(0, 0, 255), 2, 8, 0);
    }
    namedWindow(__func__, WINDOW_AUTOSIZE);
    imshow(__func__, histimg);
}

// Drawing a 2-D histogram
//#define demofunc demoHistogram2d
void demoHistogram2d(Mat& img)
{
    Mat hsv, hs_hist;
    cvtColor(img, hsv, COLOR_BGR2HSV);
    // H ranges over 0-180, S over 0-256
    int hbins = 30, sbins = 32; // 0-180 in 30 bins of width 6; 0-256 in 32 bins of width 8
    int hs_bins[] = { hbins, sbins };
    float h_range[] = { 0, 180 };
    float s_range[] = { 0, 256 };
    const float* hs_ranges[] = { h_range, s_range };
    int hs_channels[] = { 0, 1 };
    calcHist(&hsv, 1, hs_channels, Mat(), hs_hist, 2, hs_bins, hs_ranges, true, false);
    double maxval = 0;
    minMaxLoc(hs_hist, 0, &maxval, 0, 0);
    int scale = 10;
    // x axis: hue bins, y axis: saturation bins (rows = sbins * scale, cols = hbins * scale)
    Mat hist2d_img = Mat::zeros(sbins * scale, hbins * scale, CV_8UC3);
    for (int h = 0; h < hbins; h++) {
        for (int s = 0; s < sbins; s++) {
            float binval = hs_hist.at<float>(h, s);
            int intensity = cvRound(binval * 255 / maxval);
            rectangle(hist2d_img, Point(h * scale, s * scale),
                Point((h + 1) * scale - 1, (s + 1) * scale - 1), Scalar::all(intensity), -1);
        }
    }
    applyColorMap(hist2d_img, hist2d_img, COLORMAP_JET);
    imshow(__func__, hist2d_img);
}

// Histogram equalization: useful for enhancing a single-channel image
//#define demofunc demoHistogrameq
void demoHistogrameq(Mat& img)
{
    Mat gray, dst;
    cvtColor(img, gray, COLOR_BGR2GRAY);
    equalizeHist(gray, dst);
    imshow(__func__, dst);
}

// Image convolution: the common linear (box) filter
// The larger the kernel, the stronger the blur; all kernel coefficients are equal
//#define demofunc demoblur
void demoblur(Mat& img)
{
    Mat dst;
    blur(img, dst, Size(13, 2), Point(-1, -1));
    imshow(__func__, dst);
}

// Gaussian convolution: the larger the kernel, the stronger the blur
// Kernel coefficients are largest at the center and smaller toward the edges (2-D Gaussian function)
// With Size(0, 0), OpenCV derives the kernel size from sigma
// With an explicit size such as Size(5, 5), the kernel size must be odd
// Typical sigma values to compare: 3, 5, 10, 15
//#define demofunc demoblurgaussian
void demoblurgaussian(Mat& img)
{
    Mat dst;
    GaussianBlur(img, dst, Size(0, 0), 15);
    imshow(__func__, dst);
}

// Bilateral filtering: removes noise while preserving edges
/*
- src: source 8-bit or floating-point, 1-channel or 3-channel image;
- dst: destination image of the same size and type as src;
- d: diameter of the pixel neighborhood used for filtering; if non-positive, it is computed from sigmaSpace;
- sigmaColor: filter sigma in color space. A larger value means that colors farther apart within the
  neighborhood (see sigmaSpace) are mixed together, producing larger areas of semi-equal color;
- sigmaSpace: filter sigma in coordinate space. A larger value means that pixels farther away influence
  each other as long as their colors are close enough (see sigmaColor). When d > 0, d sets the
  neighborhood size regardless of sigmaSpace; otherwise d is proportional to sigmaSpace;
- borderType: border mode used to extrapolate pixels outside the image, see BorderTypes.
*/
//#define demofunc demobifilter
void demobifilter(Mat& img)
{
    Mat dst;
    bilateralFilter(img, dst, 0, 100, 10);
    imshow(__func__, dst);
}

// Face detection: the model shipped with OpenCV is only a few MB, but it is good enough for everyday use
#define demofunc demoface
void demoface(Mat& img)
{
    dnn::Net net = dnn::readNetFromTensorflow(
        "E:/project/09-opencv_pytorch/models/opencv_face_detector_uint8.pb",
        "E:/project/09-opencv_pytorch/models/opencv_face_detector.pbtxt");
    VideoCapture capture(0); // open the camera
    Mat frame;
    while (true) {
        int c = waitKey(10);
        if (c == 27) {
            break;
        }
        capture.read(frame);
        if (frame.empty()) { // no frame available
            break;
        }
        //cvtColor(frame2, frame, COLOR_YUV2BGRA_NV21);
        // The preprocessing parameters (input size, mean values) come from models.yml
        Mat blob = dnn::blobFromImage(frame, 1.0, Size(300, 300), Scalar(104, 177, 123), false, false);
        net.setInput(blob); // input blob layout is NCHW: batch size, channels, height, width
        // Output is a 4-D blob of shape [1, 1, N, 7]; each of the N rows is
        // [image_id, label, confidence, x1, y1, x2, y2] with coordinates normalized to [0, 1]
        Mat probs = net.forward();
        Mat detectionMat(probs.size[2], probs.size[3], CV_32F, probs.ptr<float>());
        // Parse the detections
        for (int i = 0; i < detectionMat.rows; i++) {
            float confidence = detectionMat.at<float>(i, 2);
            if (confidence > 0.5) {
                int x1 = static_cast<int>(detectionMat.at<float>(i, 3) * frame.cols);
                int y1 = static_cast<int>(detectionMat.at<float>(i, 4) * frame.rows);
                int x2 = static_cast<int>(detectionMat.at<float>(i, 5) * frame.cols);
                int y2 = static_cast<int>(detectionMat.at<float>(i, 6) * frame.rows);
                Rect box(x1, y1, x2 - x1, y2 - y1);
                rectangle(frame, box, Scalar(0, 0, 255), 2, 8, 0);
            }
        }
        //todo
        imshow(__func__, frame);
    }
    capture.release();
}

// The tutorial sources are in the opencv_tutorial_data\source\cpp directory,
// or run: git clone https://gitee.com/opencv_ai/opencv_tutorial_data
// That fetches all the code from the tutorial's public account in one step. Not guaranteed to be bug-free.
int main()
{
    std::cout << "Hello World!\n";
    // Mat is OpenCV's 2-D array type for images; images are read in BGR order by default
    Mat src = imread("E:/project/09-opencv_pytorch/test.jpg", IMREAD_UNCHANGED);
    // IMREAD_GRAYSCALE loads as grayscale; the default is color
    // IMREAD_UNCHANGED keeps the alpha channel if there is one (PNG files may have one)
    // Always check src: showing an empty image raises an error
    if (src.empty()) {
        std::cout << "src is empty !!!\n";
        return -1;
    }
    //namedWindow("input1", WINDOW_FREERATIO); // lets the window be resized by hand
    imshow("input1", src); // imshow supports 8-bit and float images; other types may not display correctly
#ifdef demofunc
    demofunc(src);
#endif // demofunc
    waitKey(0); // 0 blocks until a key is pressed; > 0 waits that many milliseconds
    destroyAllWindows(); // destroy all windows
}

// Run program: Ctrl + F5 or Debug > "Start Without Debugging" menu
// Debug program: F5 or Debug > "Start Debugging" menu
// Tips for getting started:
//   1. Use the Solution Explorer window to add/manage files
//   2. Use the Team Explorer window to connect to source control
//   3. Use the Output window to see build output and other messages
//   4. Use the Error List window to view errors
//   5. Go to Project > Add New Item to create new code files, or Project > Add Existing Item to add existing code files to the project
//   6. In the future, to open this project again, go to File > Open > Project and select the .sln file
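The canvas-size computation in demoRotate can be checked in isolation. The sketch below uses the same formulas (new width = |cos|·w + |sin|·h, new height = |sin|·w + |cos|·h) in plain C++; `rotatedCanvasSize` is a hypothetical helper, and unlike the demo it rounds to the nearest integer instead of truncating.

```cpp
#include <cmath>
#include <utility>

// Size (width, height) of the smallest axis-aligned canvas that contains
// a w x h image rotated by angleDeg degrees about its center.
std::pair<int, int> rotatedCanvasSize(int w, int h, double angleDeg) {
    const double pi = std::acos(-1.0);
    const double rad = angleDeg * pi / 180.0;
    const double c = std::fabs(std::cos(rad));
    const double s = std::fabs(std::sin(rad));
    const int nw = static_cast<int>(std::lround(c * w + s * h));
    const int nh = static_cast<int>(std::lround(s * w + c * h));
    return std::make_pair(nw, nh);
}
```

For a 400 x 200 image, a 90 degree rotation swaps the sides to 200 x 400, and a 45 degree rotation needs a 424 x 424 canvas.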

//

