Using a laptop camera in Ubuntu (Linux) and capturing camera data with V4L2

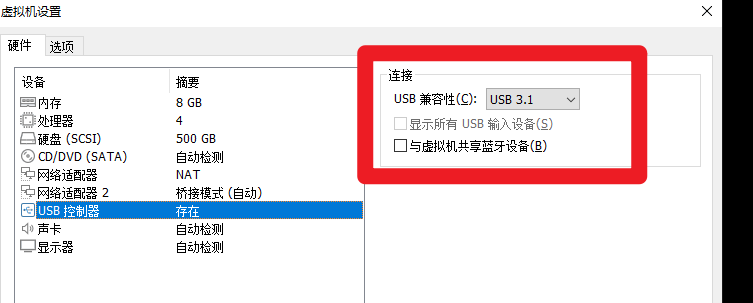

1 Add a USB controller to the virtual machine, and make sure to select USB 3.1 compatibility.

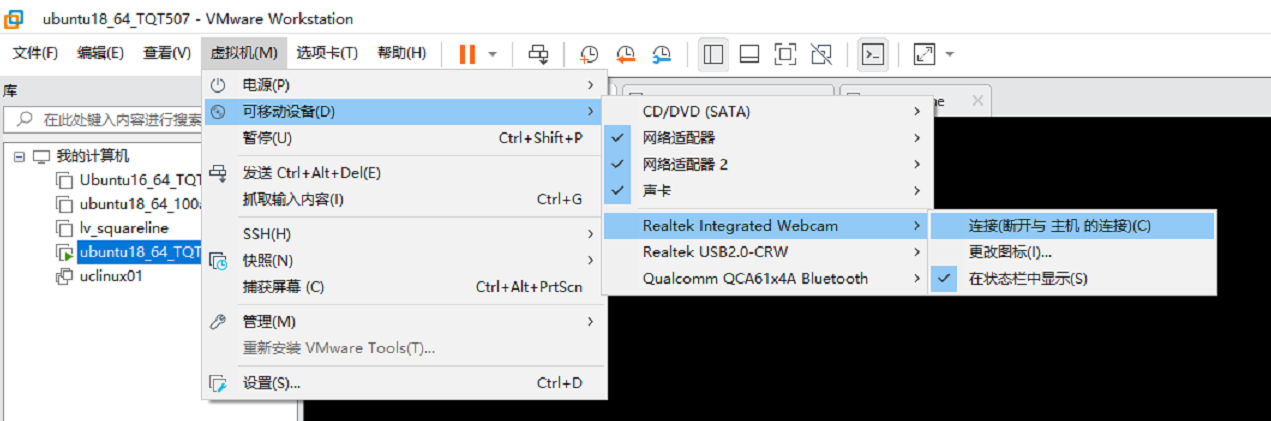

2 Connect the camera to the virtual machine as a removable device.

3 In Ubuntu, run `cheese` from the command line to check that the camera works.

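4 Compile the code below with gcc. Set the pixel format in the code (`V4L2_PIX_FMT_YUYV`) to one that your camera actually supports. The code is based on the reference given below.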

```makefile
# Makefile
CC      = gcc
CFLAGS  = -g -Wall -O2
TARGET  = test1.bin
SRCS    = camer.c
C_OBJS  = camer.o

all: $(TARGET)

$(TARGET): $(C_OBJS)
	$(CC) $(CFLAGS) -o $@ $^

%.o: %.c
	$(CC) $(CFLAGS) -c -o $@ $<

.PHONY: all clean
clean:
	rm -rf *.o $(TARGET) $(C_OBJS) test1 out.*
```

Reference (CSDN): https://blog.csdn.net/weixin_43937576/article/details/109901691

```c
/*************************************************************************
    > File Name: camer.c
    > Author: YJK
    > Mail: 745506980@qq.com
    > Created Time: Wed 18 Nov 2020 14:17:50
 ************************************************************************/
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <sys/select.h>
#include <sys/stat.h>
#include <sys/time.h>
#include <linux/videodev2.h>

#define CLEAN(x) (memset(&(x), 0, sizeof(x)))
#define WIDTH       640
#define HEIGHT      480
#define N_BUFFERS   4               /* frame buffers requested from the driver */
#define DEVICE_NAME "/dev/video0"
#define FILE_NAME   "./out.yuv"

typedef struct Video_Buffer {
    void  *start;                   /* user-space address of the mapped buffer */
    size_t length;
} Video_Buffer;

static int fd = -1;                 /* camera device */
static int file_fd = -1;            /* output file */
static unsigned int frame_size;     /* expected bytes per frame (sizeimage) */
static Video_Buffer *buffer = NULL;
static unsigned int n_buffers = 0;  /* buffers actually mapped */

/* ioctl() that retries when interrupted by a signal */
static int ioctl_(int dev, unsigned long request, void *arg)
{
    int ret;
    do {
        ret = ioctl(dev, request, arg);
    } while (ret == -1 && errno == EINTR);
    return ret;
}

static int open_device(const char *device_name)
{
    struct stat st;
    if (stat(device_name, &st) == -1) {
        printf("Cannot identify '%s': %s\n", device_name, strerror(errno));
        return -1;
    }
    if (!S_ISCHR(st.st_mode)) {
        printf("%s is not a device\n", device_name);
        return -1;
    }
    fd = open(device_name, O_RDWR | O_NONBLOCK, 0);
    if (fd == -1) {
        printf("Cannot open '%s': %s\n", device_name, strerror(errno));
        return -1;
    }
    return 0;
}

static int open_file(const char *file_name)
{
    file_fd = open(file_name, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (file_fd == -1) {
        printf("open file is error! LINE:%d\n", __LINE__);
        return -1;
    }
    return 0;
}

static int init_device(void)
{
    /* Query device information */
    struct v4l2_capability cap;
    CLEAN(cap);
    if (ioctl_(fd, VIDIOC_QUERYCAP, &cap) == -1) {
        perror("VIDIOC_QUERYCAP");
        return -1;
    }
    printf("DriverName:%s\nCard Name:%s\nBus info:%s\nDriverVersion:%u.%u.%u\n",
           cap.driver, cap.card, cap.bus_info,
           (cap.version >> 16) & 0xFF, (cap.version >> 8) & 0xFF, cap.version & 0xFF);
    if (!(cap.capabilities & V4L2_CAP_VIDEO_CAPTURE)) {
        fprintf(stderr, "Device is not a video capture device\n");
        return -1;
    }
    if (!(cap.capabilities & V4L2_CAP_STREAMING)) {   /* the mmap I/O below needs this */
        fprintf(stderr, "Device does not support streaming i/o\n");
        return -1;
    }
    if (cap.capabilities & V4L2_CAP_READWRITE)
        printf("Device supports read i/o\n");
    printf("\n");

    /* List the video inputs */
    struct v4l2_input in;
    CLEAN(in);
    printf("input:\n");
    for (in.index = 0; ioctl_(fd, VIDIOC_ENUMINPUT, &in) == 0; in.index++)
        printf("%u.%s\n", in.index, in.name);
    printf("\n");

    /* List the pixel formats the camera supports */
    struct v4l2_fmtdesc fmtdesc;
    CLEAN(fmtdesc);
    fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    printf("fmt:\n");
    for (fmtdesc.index = 0; ioctl_(fd, VIDIOC_ENUM_FMT, &fmtdesc) == 0; fmtdesc.index++)
        printf("%u.%s %c%c%c%c\n", fmtdesc.index + 1, fmtdesc.description,
               fmtdesc.pixelformat & 0xFF, (fmtdesc.pixelformat >> 8) & 0xFF,
               (fmtdesc.pixelformat >> 16) & 0xFF, (fmtdesc.pixelformat >> 24) & 0xFF);
    printf("\n");

    /* Select video input 0 (VIDIOC_S_INPUT takes a pointer to an int index) */
    int input_index = 0;
    if (ioctl_(fd, VIDIOC_S_INPUT, &input_index) == -1) {
        printf("VIDIOC_S_INPUT IS ERROR! LINE:%d\n", __LINE__);
        return -1;
    }

    /* Set the frame format. The driver checks the request against what the
     * hardware supports and may adjust it; the updated v4l2_format is copied
     * back so the application knows what it really got. Replace
     * V4L2_PIX_FMT_YUYV if your camera does not list it above. */
    struct v4l2_format fmt;
    CLEAN(fmt);
    fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    fmt.fmt.pix.width = WIDTH;
    fmt.fmt.pix.height = HEIGHT;
    fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;
    fmt.fmt.pix.field = V4L2_FIELD_ANY;
    if (ioctl_(fd, VIDIOC_S_FMT, &fmt) == -1) {
        printf("VIDIOC_S_FMT IS ERROR! LINE:%d\n", __LINE__);
        return -1;
    }

    /* Read back the format actually in effect */
    if (ioctl_(fd, VIDIOC_G_FMT, &fmt) == -1) {
        printf("VIDIOC_G_FMT IS ERROR! LINE:%d\n", __LINE__);
        return -1;
    }
    printf("width:%u\nheight:%u\npixelformat:%c%c%c%c\n",
           fmt.fmt.pix.width, fmt.fmt.pix.height,
           fmt.fmt.pix.pixelformat & 0xFF, (fmt.fmt.pix.pixelformat >> 8) & 0xFF,
           (fmt.fmt.pix.pixelformat >> 16) & 0xFF, (fmt.fmt.pix.pixelformat >> 24) & 0xFF);

    /* Buggy driver paranoia: YUYV needs at least 2 bytes per pixel */
    __u32 min = fmt.fmt.pix.width * 2;
    if (fmt.fmt.pix.bytesperline < min)
        fmt.fmt.pix.bytesperline = min;
    min = fmt.fmt.pix.bytesperline * fmt.fmt.pix.height;
    if (fmt.fmt.pix.sizeimage < min)
        fmt.fmt.pix.sizeimage = min;
    frame_size = fmt.fmt.pix.sizeimage;
    printf("After Buggy driver paranoia\n");
    printf(" >>fmt.fmt.pix.sizeimage = %u\n", fmt.fmt.pix.sizeimage);
    printf(" >>fmt.fmt.pix.bytesperline = %u\n", fmt.fmt.pix.bytesperline);
    printf("\n");
    return 0;
}

static int init_mmap(void)
{
    /* Ask the driver for N_BUFFERS frame buffers in kernel space */
    struct v4l2_requestbuffers req;
    CLEAN(req);
    req.count = N_BUFFERS;
    req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    req.memory = V4L2_MEMORY_MMAP;          /* memory-mapped buffers */
    if (ioctl_(fd, VIDIOC_REQBUFS, &req) == -1) {
        printf("VIDIOC_REQBUFS IS ERROR! LINE:%d\n", __LINE__);
        return -1;
    }
    if (req.count < 2) {                    /* the driver may grant fewer buffers */
        printf("Insufficient buffer memory\n");
        return -1;
    }
    buffer = calloc(req.count, sizeof(*buffer));
    if (buffer == NULL) {
        printf("calloc is error! LINE:%d\n", __LINE__);
        return -1;
    }

    /* Query each buffer, map it into user space and queue it for capture */
    for (n_buffers = 0; n_buffers < req.count; n_buffers++) {
        struct v4l2_buffer buf;
        CLEAN(buf);
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = n_buffers;
        if (ioctl_(fd, VIDIOC_QUERYBUF, &buf) == -1) {   /* get length and offset */
            printf("VIDIOC_QUERYBUF IS ERROR! LINE:%d\n", __LINE__);
            return -1;
        }
        buffer[n_buffers].length = buf.length;
        buffer[n_buffers].start = mmap(NULL,             /* let the kernel pick the address */
                                       buf.length,
                                       PROT_READ | PROT_WRITE,
                                       MAP_SHARED,
                                       fd, buf.m.offset);
        if (buffer[n_buffers].start == MAP_FAILED) {
            printf("MAP_FAILED LINE:%d\n", __LINE__);
            return -1;
        }
        if (ioctl_(fd, VIDIOC_QBUF, &buf) == -1) {       /* put it in the capture queue */
            printf("VIDIOC_QBUF IS ERROR! LINE:%d\n", __LINE__);
            return -1;
        }
        printf("Frame buffer :%u address :%p length:%zu\n",
               n_buffers, buffer[n_buffers].start, buffer[n_buffers].length);
    }
    return 0;
}

static int start_stream(void)
{
    enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    if (ioctl_(fd, VIDIOC_STREAMON, &type) == -1) {
        printf("VIDIOC_STREAMON IS ERROR! LINE:%d\n", __LINE__);
        return -1;
    }
    return 0;
}

static void end_stream(void)
{
    enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    if (ioctl_(fd, VIDIOC_STREAMOFF, &type) == -1)
        printf("VIDIOC_STREAMOFF IS ERROR! LINE:%d\n", __LINE__);
}

/* Returns 1 if a frame was written, 0 if none was ready, -1 on error */
static int read_frame(void)
{
    struct v4l2_buffer buf;
    CLEAN(buf);
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = V4L2_MEMORY_MMAP;
    if (ioctl_(fd, VIDIOC_DQBUF, &buf) == -1) {          /* take a filled buffer */
        if (errno == EAGAIN)
            return 0;
        printf("VIDIOC_DQBUF! LINE:%d\n", __LINE__);
        return -1;
    }
    if (buf.index >= n_buffers)
        return -1;
    /* Write the bytes the driver filled, never more than the buffer holds */
    size_t n = buf.bytesused ? buf.bytesused : frame_size;
    if (n > buffer[buf.index].length)
        n = buffer[buf.index].length;
    if (write(file_fd, buffer[buf.index].start, n) != (ssize_t)n) {
        printf("write is error !\n");
        return -1;
    }
    if (ioctl_(fd, VIDIOC_QBUF, &buf) == -1) {           /* hand it back to the driver */
        printf("VIDIOC_QBUF! LINE:%d\n", __LINE__);
        return -1;
    }
    return 1;
}

static int process_frame(void)
{
    struct timeval tv;
    fd_set fdread;
    tv.tv_sec = 2;                  /* wait up to 2 s for a frame */
    tv.tv_usec = 0;
    FD_ZERO(&fdread);
    FD_SET(fd, &fdread);
    int ret = select(fd + 1, &fdread, NULL, NULL, &tv);
    if (ret == -1) {
        if (errno == EINTR)
            return 0;
        perror("select");
        return -1;
    }
    if (ret == 0) {
        printf("timeout!\n");
        return -1;
    }
    return read_frame();
}

static void close_mmap(void)
{
    for (unsigned int i = 0; i < n_buffers; i++)
        munmap(buffer[i].start, buffer[i].length);
    free(buffer);
    buffer = NULL;
}

static void close_device(void)
{
    if (fd != -1)
        close(fd);
    if (file_fd != -1)
        close(file_fd);
}

int main(void)
{
    int frames = 0, ok = 0;
    if (open_device(DEVICE_NAME) == 0 && open_file(FILE_NAME) == 0 &&
        init_device() == 0 && init_mmap() == 0 && start_stream() == 0) {
        while (frames < 100) {      /* capture 100 frames */
            int ret = process_frame();
            if (ret == -1)
                break;
            if (ret == 1) {
                printf("frame:%d\n", frames);
                frames++;
            }
        }
        end_stream();
        ok = (frames == 100);
    }
    close_mmap();
    close_device();
    return ok ? EXIT_SUCCESS : EXIT_FAILURE;
}
```

To play the captured YUV video, I used the open-source project GitHub - Yonsm/RawPlayer: Raw (YUV/RGB) video player and subjective assess tool.

The .exe I compiled: https://files.cnblogs.com/files/RYSBlog/YUVPlayer.zip?t=1667271754

Source code: https://files.cnblogs.com/files/RYSBlog/YUVPlayer-master.zip?t=1667271743
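A raw .yuv file has no header, so the player has to be told the resolution and pixel layout. Assuming the driver accepted 640×480 (check the width and height the capture program prints), each YUYV frame (packed 4:2:2, 2 bytes per pixel) is 640 × 480 × 2 = 614,400 bytes, and 100 frames come to 61,440,000 bytes. Many YUV tools default to I420 (planar 4:2:0), where a 640×480 frame is 640 × 480 × 3/2 = 460,800 bytes. If your player cannot read YUYV directly, a per-frame conversion like the following sketch can be used; `yuyv_to_i420` is a hypothetical helper that assumes even dimensions and no row padding (bytesperline == width × 2), and it averages the chroma of each pair of rows.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: convert one packed YUYV (Y0 U Y1 V) frame to planar I420.
 * dst must hold width * height * 3 / 2 bytes: Y plane, then U, then V. */
void yuyv_to_i420(const uint8_t *src, uint8_t *dst, int width, int height)
{
    assert(width % 2 == 0 && height % 2 == 0);
    uint8_t *y_plane = dst;
    uint8_t *u_plane = dst + (size_t)width * height;
    uint8_t *v_plane = u_plane + (size_t)(width / 2) * (height / 2);
    size_t stride = (size_t)width * 2;          /* bytes per YUYV row */

    /* Luma: every even byte of each row */
    for (int row = 0; row < height; row++) {
        const uint8_t *line = src + row * stride;
        for (int x = 0; x < width; x++)
            y_plane[(size_t)row * width + x] = line[2 * x];
    }
    /* Chroma: one U and one V per 2x2 block, averaged over two rows */
    for (int row = 0; row < height; row += 2) {
        const uint8_t *l0 = src + row * stride;
        const uint8_t *l1 = l0 + stride;
        for (int x = 0; x < width / 2; x++) {
            size_t o = (size_t)(row / 2) * (width / 2) + x;
            u_plane[o] = (uint8_t)((l0[4 * x + 1] + l1[4 * x + 1] + 1) / 2);
            v_plane[o] = (uint8_t)((l0[4 * x + 3] + l1[4 * x + 3] + 1) / 2);
        }
    }
}
```

If FFmpeg is installed, `ffplay -f rawvideo -pixel_format yuyv422 -video_size 640x480 out.yuv` should also play the file directly, without any conversion.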

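For combining V4L2 with FFmpeg, see the CSDN post "Linux下ffmpeg库开发之读取摄像头数据" (FFmpeg library development on Linux: reading camera data) by IT_阿水. The example below captures the camera with FFmpeg in a worker thread and displays it with SDL2.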

```c
/* Capture a V4L2 camera with FFmpeg (libavdevice) in a worker thread and show
 * it with SDL2, using the send/receive decoding API (FFmpeg 4.x and newer).
 * Build (assuming the FFmpeg and SDL2 development packages are installed):
 *   gcc cam_sdl.c -o cam_sdl $(pkg-config --cflags --libs libavdevice \
 *       libavformat libavcodec libswscale libavutil sdl2) -lpthread
 */
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <stdbool.h>
#include <stdatomic.h>
#include <pthread.h>
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libavdevice/avdevice.h>
#include <libswscale/swscale.h>
#include <libavutil/imgutils.h>
#include <SDL.h>

#define VIDEO_DEV "/dev/video2"    /* change to your camera's device node */

static pthread_mutex_t fastmutex = PTHREAD_MUTEX_INITIALIZER; /* guards the shared frame */
static pthread_cond_t cond = PTHREAD_COND_INITIALIZER;        /* new frame / state change */
static int width, height, size;         /* frame geometry, set by the capture thread */
static unsigned char *yuv_buff = NULL;  /* latest YUV420P frame shared with the UI thread */
static bool geometry_known = false;     /* width/height/size/yuv_buff are valid */
static bool frame_ready = false;        /* yuv_buff holds a frame not yet displayed */
static atomic_int video_flag = 1;       /* 0 = stop requested or capture failed */

static void *Video_CollectImage(void *arg);

/* Ask both threads to stop and wake the UI thread if it is waiting */
static void stop_capture(void)
{
    pthread_mutex_lock(&fastmutex);
    atomic_store(&video_flag, 0);
    pthread_cond_broadcast(&cond);
    pthread_mutex_unlock(&fastmutex);
}

int main(int argc, char *argv[])
{
    (void)argc;
    (void)argv;
    /* Start the camera capture thread */
    pthread_t pthid;
    if (pthread_create(&pthid, NULL, Video_CollectImage, NULL) != 0)
        return EXIT_FAILURE;

    /* Wait until the capture thread knows the frame size, or gives up */
    pthread_mutex_lock(&fastmutex);
    while (!geometry_known && atomic_load(&video_flag))
        pthread_cond_wait(&cond, &fastmutex);
    bool ok = geometry_known;
    pthread_mutex_unlock(&fastmutex);
    if (!ok) {
        pthread_join(pthid, NULL);
        return EXIT_FAILURE;
    }
    printf("image:%d * %d,%d\n", width, height, size);

    unsigned char *yuv_data = malloc(size);
    if (yuv_data == NULL || SDL_Init(SDL_INIT_VIDEO) != 0) {
        fprintf(stderr, "startup failed\n");
        stop_capture();
        pthread_join(pthid, NULL);
        free(yuv_data);
        free(yuv_buff);
        return EXIT_FAILURE;
    }

    /* Window, renderer and an IYUV (planar YUV 4:2:0) streaming texture */
    SDL_Window *window = SDL_CreateWindow("SDL_VIDEO", SDL_WINDOWPOS_CENTERED,
                                          SDL_WINDOWPOS_CENTERED, 800, 480,
                                          SDL_WINDOW_ALLOW_HIGHDPI | SDL_WINDOW_RESIZABLE);
    SDL_Renderer *render = SDL_CreateRenderer(window, -1, SDL_RENDERER_ACCELERATED);
    SDL_Texture *sdltext = SDL_CreateTexture(render, SDL_PIXELFORMAT_IYUV,
                                             SDL_TEXTUREACCESS_STREAMING, width, height);

    bool quit = false;
    SDL_Event event;
    while (!quit) {
        while (SDL_PollEvent(&event))           /* handle window events */
            if (event.type == SDL_QUIT)
                quit = true;

        /* Wait for the next frame; the while loop guards against spurious wakeups */
        pthread_mutex_lock(&fastmutex);
        while (!frame_ready && atomic_load(&video_flag))
            pthread_cond_wait(&cond, &fastmutex);
        bool got = frame_ready;
        if (got) {
            memcpy(yuv_data, yuv_buff, size);
            frame_ready = false;
        }
        pthread_mutex_unlock(&fastmutex);
        if (!got)
            break;                              /* the capture thread stopped */

        /* The buffer is tightly packed (align = 1), so the Y pitch is `width` */
        SDL_UpdateTexture(sdltext, NULL, yuv_data, width);
        SDL_RenderClear(render);
        SDL_RenderCopy(render, sdltext, NULL, NULL);
        SDL_RenderPresent(render);
    }

    stop_capture();
    pthread_join(pthid, NULL);                  /* let the thread free FFmpeg resources */
    SDL_DestroyTexture(sdltext);
    SDL_DestroyRenderer(render);
    SDL_DestroyWindow(window);
    SDL_Quit();
    pthread_mutex_destroy(&fastmutex);
    pthread_cond_destroy(&cond);
    free(yuv_buff);
    free(yuv_data);
    return EXIT_SUCCESS;
}

static void *Video_CollectImage(void *arg)
{
    (void)arg;
    AVFormatContext *ps = NULL;
    AVCodecContext *context = NULL;
    struct SwsContext *swsctx = NULL;
    AVPacket *packet = av_packet_alloc();       /* packet read from the device */
    AVFrame *frame = av_frame_alloc();          /* decoded frame */
    AVFrame *frameyuv = av_frame_alloc();       /* frame converted to YUV420P */
    unsigned char *buff = NULL, *shared = NULL;
    const AVInputFormat *ifmt;
    const AVCodec *video_avcodec;
    AVCodecParameters *par;
    int videostream, w, h, sz;

    printf("pth:%s\n", avcodec_configuration());
    avdevice_register_all();                    /* register input devices (video4linux2, ...) */
    ifmt = av_find_input_format("video4linux2");
    if (ifmt == NULL || !packet || !frame || !frameyuv) {
        printf("av_find_input_format failed\n");
        goto out;
    }
    /* Open the input stream and read its header */
    if (avformat_open_input(&ps, VIDEO_DEV, ifmt, NULL) != 0) {
        printf("open input failed\n");
        goto out;
    }
    if (avformat_find_stream_info(ps, NULL) < 0) {
        printf("find stream failed\n");
        goto out;
    }
    av_dump_format(ps, 0, VIDEO_DEV, 0);        /* print input format details */

    /* Find the video stream and a decoder for it */
    videostream = av_find_best_stream(ps, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);
    printf("videostream=%d\n", videostream);
    if (videostream < 0)
        goto out;
    par = ps->streams[videostream]->codecpar;
    video_avcodec = avcodec_find_decoder(par->codec_id);
    if (video_avcodec == NULL) {
        printf("find video decoder failed\n");
        goto out;
    }
    context = avcodec_alloc_context3(video_avcodec);
    if (context == NULL || avcodec_parameters_to_context(context, par) < 0 ||
        avcodec_open2(context, video_avcodec, NULL) < 0) {
        printf("avcodec_open2 failed\n");
        goto out;
    }

    /* Tightly packed (align = 1) YUV420P buffer for converted frames */
    w = context->width;
    h = context->height;
    sz = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, w, h, 1);
    printf("w=%d,h=%d,size=%d\n", w, h, sz);
    if (sz <= 0 || !(buff = av_malloc(sz)) || !(shared = malloc(sz)))
        goto out;
    av_image_fill_arrays(frameyuv->data, frameyuv->linesize, buff,
                         AV_PIX_FMT_YUV420P, w, h, 1);

    /* Publish the geometry and the shared buffer to the UI thread */
    pthread_mutex_lock(&fastmutex);
    width = w;
    height = h;
    size = sz;
    yuv_buff = shared;
    shared = NULL;                              /* now owned (and freed) by main() */
    geometry_known = true;
    pthread_cond_broadcast(&cond);
    pthread_mutex_unlock(&fastmutex);

    printf("read frame buff\n");
    while (atomic_load(&video_flag) && av_read_frame(ps, packet) >= 0) {
        /* Only decode packets that belong to the video stream */
        if (packet->stream_index == videostream && avcodec_send_packet(context, packet) == 0) {
            while (avcodec_receive_frame(context, frame) == 0) {
                /* (Re)create the converter from the frame's actual pixel format */
                swsctx = sws_getCachedContext(swsctx, w, h, frame->format, w, h,
                                              AV_PIX_FMT_YUV420P, SWS_BICUBIC,
                                              NULL, NULL, NULL);
                if (swsctx == NULL)
                    break;
                sws_scale(swsctx, (const uint8_t * const *)frame->data, frame->linesize,
                          0, h, frameyuv->data, frameyuv->linesize);
                pthread_mutex_lock(&fastmutex);
                memcpy(yuv_buff, buff, sz);
                frame_ready = true;
                pthread_cond_broadcast(&cond);  /* wake the UI thread */
                pthread_mutex_unlock(&fastmutex);
            }
        }
        av_packet_unref(packet);                /* release packet data every iteration */
    }

out:
    stop_capture();
    sws_freeContext(swsctx);
    avcodec_free_context(&context);
    avformat_close_input(&ps);
    av_frame_free(&frameyuv);
    av_frame_free(&frame);
    av_packet_free(&packet);
    av_free(buff);
    free(shared);
    return NULL;
}
```
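The hand-off between the capture thread and the SDL loop in the FFmpeg example is a mutex plus condition variable pattern. Stripped of FFmpeg and SDL, it can be sketched as a one-frame mailbox: the producer overwrites the slot, and the consumer waits in a `while` loop on a predicate, because a bare `pthread_cond_wait` can return spuriously or miss a signal sent before the wait started. `frame_slot` and the `slot_*` functions are hypothetical names used only for illustration.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical single-slot "latest frame" mailbox */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  cond;
    unsigned char  *data;    /* caller-provided storage of `size` bytes */
    size_t          size;
    bool            ready;   /* slot holds a frame the consumer has not taken */
    bool            closed;  /* producer has finished */
} frame_slot;

void slot_init(frame_slot *s, unsigned char *storage, size_t size)
{
    assert(s != NULL && storage != NULL);
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->cond, NULL);
    s->data = storage;
    s->size = size;
    s->ready = false;
    s->closed = false;
}

/* Producer: overwrite the slot with the newest frame (an untaken frame is dropped) */
void slot_put(frame_slot *s, const unsigned char *frame)
{
    pthread_mutex_lock(&s->lock);
    memcpy(s->data, frame, s->size);
    s->ready = true;
    pthread_cond_signal(&s->cond);
    pthread_mutex_unlock(&s->lock);
}

/* Producer: announce that no more frames will come and wake any waiter */
void slot_close(frame_slot *s)
{
    pthread_mutex_lock(&s->lock);
    s->closed = true;
    pthread_cond_broadcast(&s->cond);
    pthread_mutex_unlock(&s->lock);
}

/* Consumer: block until a frame or close; copy the frame out and return true,
 * or return false if the slot was closed with nothing pending */
bool slot_take(frame_slot *s, unsigned char *out)
{
    pthread_mutex_lock(&s->lock);
    while (!s->ready && !s->closed)          /* predicate loop */
        pthread_cond_wait(&s->cond, &s->lock);
    bool got = s->ready;
    if (got) {
        memcpy(out, s->data, s->size);
        s->ready = false;
    }
    pthread_mutex_unlock(&s->lock);
    return got;
}
```

Overwriting rather than queueing means a slow display simply skips frames instead of falling further and further behind the camera.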

浙公网安备 33010602011771号
