Monitoring etcd with kube-prometheus

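Some cloud-native applications expose a /metrics endpoint themselves. Older applications usually do not, so an exporter has to be deployed to expose metrics on their behalf before they can be monitored.

We create an Endpoints object that points at the service exposing the metrics. If the service is already deployed inside Kubernetes, the Endpoints object has probably been created already.

If we create an Endpoints object by hand, we also need to create a Service with the same name in the same namespace (and without a selector); Kubernetes then links the two automatically.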

kube-prometheus project:

https://github.com/prometheus-operator/kube-prometheus

Lab environment

The Kubernetes cluster was installed from binaries.

    [root@master01 ~]# kubectl get node -o wide
    NAME       STATUS   ROLES    AGE   VERSION    INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION               CONTAINER-RUNTIME
    master01   Ready    master   34d   v1.19.16   172.16.1.11   <none>        CentOS Linux 7 (Core)   5.15.5-1.el7.elrepo.x86_64   docker://20.10.11
    master02   Ready    master   34d   v1.19.16   172.16.1.12   <none>        CentOS Linux 7 (Core)   5.15.5-1.el7.elrepo.x86_64   docker://20.10.11
    master03   Ready    master   34d   v1.19.16   172.16.1.13   <none>        CentOS Linux 7 (Core)   5.15.5-1.el7.elrepo.x86_64   docker://20.10.11
    node01     Ready    <none>   34d   v1.19.16   172.16.1.14   <none>        CentOS Linux 7 (Core)   5.15.5-1.el7.elrepo.x86_64   docker://20.10.11
    node02     Ready    <none>   34d   v1.19.16   172.16.1.15   <none>        CentOS Linux 7 (Core)   5.15.5-1.el7.elrepo.x86_64   docker://20.10.11

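How kube-prometheus monitors etcd running on the hosts

How does a ServiceMonitor monitor components that run directly on the hosts?

1. A Service exposes the external service, so that something running outside the Kubernetes cluster becomes reachable through a Service.
2. kube-prometheus selects the target Service by label, then scrapes the data exposed at /metrics through that Service.

In plain terms: the Service acts as a bridge between the host and kube-prometheus, letting kube-prometheus reach the data the host exposes.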

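Inspect the data etcd exposes by hand

    # etcd must be accessed over HTTPS and client certificate authentication is enabled, so the certificate and key have to be supplied
    curl --cert /etc/etcd/ssl/etcd.pem --key /etc/etcd/ssl/etcd-key.pem https://172.16.1.12:2379/metrics -k | more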
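Paging through the whole response with more is fine for a first look, but often only one metric is of interest. The following is a minimal sketch, assuming a POSIX shell with awk available; the function name metric_value is hypothetical and is not part of etcd or kube-prometheus. It reads Prometheus text-format output on stdin and prints the value of each sample whose name matches exactly (samples without labels only).

```shell
# metric_value NAME: read Prometheus text-format metrics on stdin and print
# the value of each unlabelled sample whose name is exactly NAME.
# "# HELP" and "# TYPE" lines never match, because their first field is "#".
metric_value() {
  awk -v name="$1" '$1 == name { print $2 }'
}

# Possible usage against the etcd endpoint (same certificates as above):
#   curl -sk --cert /etc/etcd/ssl/etcd.pem --key /etc/etcd/ssl/etcd-key.pem \
#     https://172.16.1.12:2379/metrics | metric_value process_resident_memory_bytes
```

Samples that carry labels, such as name{label="x"}, are deliberately not handled here; a grep on the metric name is the simpler tool for those.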

Approach

Create the etcd Service

    # Key details to get right: 1. the Endpoints name and the Service name; 2. the labels; 3. the IPs; 4. the port number and port name (marked below)
    # Create the Endpoints and the Service
    ---
    apiVersion: v1
    kind: Endpoints
    metadata:
      labels:
        k8s-app: etcd1
      name: etcd
      namespace: kube-system
    subsets:
    - addresses:
      - ip: 172.16.1.11
      - ip: 172.16.1.12
      - ip: 172.16.1.13
      ports:
      - name: etcd        # name
        port: 2379        # port
        protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: etcd1
      name: etcd
      namespace: kube-system
    spec:
      ports:
      - name: etcd
        port: 2379
        protocol: TCP
        targetPort: 2379
      sessionAffinity: None
      type: ClusterIP
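Because a mismatch between the Endpoints name and the Service name is the easiest mistake to make here, a quick check before applying the manifest can help. This is only a sketch under stated assumptions: the manifest is saved to a file with one object per YAML document, kind: at the top level and metadata.name indented by exactly two spaces. The function name names_match is hypothetical, and the check is line-based rather than a real YAML parser.

```shell
# names_match FILE: succeed only if FILE declares an Endpoints object and a
# Service object whose metadata.name values are identical.
# Assumes "kind:" appears before "metadata:" in each document and that
# metadata.name is indented by exactly two spaces ("  name: ...").
names_match() {
  awk '
    /^---/    { kind = "" }                  # a new YAML document starts
    /^kind:/  { kind = $2 }                  # remember the current object kind
    /^  name:/ {
      if (kind == "Endpoints") ep  = $2
      if (kind == "Service")   svc = $2
    }
    END { exit !(ep != "" && ep == svc) }    # exit status 0 only on a match
  ' "$1"
}

# Possible usage (etcd-svc.yaml is a hypothetical file name):
#   names_match etcd-svc.yaml && kubectl apply -f etcd-svc.yaml
```

Port entries written as "- name: etcd" are ignored by the pattern because the dash sits where the check expects the letter n.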

Verify the Service

    [root@master01 ~]# kubectl get svc -n kube-system
    NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                        AGE
    etcd                                 ClusterIP   10.96.131.104   <none>        2379/TCP                       8m14s
    kube-controller-manager-monitoring   ClusterIP   10.96.187.77    <none>        10252/TCP                      20h
    kube-dns                             ClusterIP   10.96.0.2       <none>        53/UDP,53/TCP,9153/TCP         34d
    kubelet                              ClusterIP   None            <none>        10250/TCP,10255/TCP,4194/TCP   2d6h
    ratel                                NodePort    10.96.15.187    <none>        8888:29999/TCP                 8d
    scheduler                            ClusterIP   10.96.57.82     <none>        10251/TCP                      20h

    # Access etcd through the Service IP
    curl --cert /etc/etcd/ssl/etcd.pem --key /etc/etcd/ssl/etcd-key.pem https://10.96.131.104:2379/metrics -k | more

If metrics come back, the Service is set up correctly; if nothing comes back, it is not.

……

# HELP process_resident_memory_bytes Resident memory size in bytes.

# TYPE process_resident_memory_bytes gauge

process_resident_memory_bytes 1.70909696e+08

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.64147009048e+09

# HELP process_virtual_memory_bytes Virtual memory size in bytes.

# TYPE process_virtual_memory_bytes gauge

process_virtual_memory_bytes 1.1008647168e+10

# Only the tail of the output is shown here.
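The pass/fail rule for this step (metrics returned means the Service works) can also be checked without reading the output by eye. A minimal sketch, assuming a POSIX shell with grep; the function name looks_like_metrics is hypothetical. It treats a response as valid if it contains at least one "# TYPE" line, which the Prometheus text format uses to declare a metric family.

```shell
# looks_like_metrics: read a response body on stdin and succeed if it
# contains at least one "# TYPE ..." line, as in the etcd output.
looks_like_metrics() {
  grep -q '^# TYPE '
}

# Possible usage against the Service IP:
#   curl -sk --cert /etc/etcd/ssl/etcd.pem --key /etc/etcd/ssl/etcd-key.pem \
#     https://10.96.131.104:2379/metrics | looks_like_metrics \
#     && echo "Service OK" || echo "Service not reachable"
```

An empty response or a curl error message on stdout fails the check, which matches the "no data means it did not work" rule.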

Create the etcd ServiceMonitor

    # Store the certificates in a Secret so they can be mounted into Prometheus
    kubectl -n monitoring create secret generic etcd-certs --from-file=/etc/etcd/ssl/ca.pem --from-file=/etc/etcd/ssl/etcd.pem --from-file=/etc/etcd/ssl/etcd-key.pem

    # Edit prometheus-prometheus.yaml to mount the Secret created above into Prometheus. Add at the end:
    ……
    spec:
    ……
      secrets:
      - etcd-certs

    kubectl replace -f kube-prometheus/manifests/prometheus-prometheus.yaml

    [root@master01 ~]# kubectl get pod -n monitoring
    NAME                                   READY   STATUS    RESTARTS   AGE
    alertmanager-main-0                    2/2     Running   12         2d7h
    blackbox-exporter-6798fb5bb4-ltnvl     3/3     Running   21         2d21h
    grafana-696d8f4f9c-rvzrb               1/1     Running   6          2d6h
    kube-state-metrics-85ccd987fc-wzr7v    3/3     Running   19         2d7h
    node-exporter-5r52x                    2/2     Running   16         2d21h
    node-exporter-948d6                    2/2     Running   16         2d21h
    node-exporter-99bwl                    2/2     Running   12         2d21h
    node-exporter-kshxd                    2/2     Running   14         2d21h
    node-exporter-t4r2p                    2/2     Running   18         2d21h
    prometheus-adapter-67cfd8b5f6-m4spb    1/1     Running   7          2d7h
    prometheus-adapter-67cfd8b5f6-pcd9z    1/1     Running   6          2d7h
    prometheus-k8s-0                       2/2     Running   0          5m19s
    prometheus-k8s-1                       2/2     Running   0          5m44s
    prometheus-operator-7ddc6877d5-rwc2f   2/2     Running   13         2d21h

    [root@master01 ~]# kubectl exec -it -n monitoring prometheus-k8s-0 -- sh
    /prometheus $ ls /etc/prometheus/secrets/etcd-certs/
    ca.pem  etcd-key.pem  etcd.pem
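The file names seen inside the pod follow a simple rule: each --from-file entry becomes a Secret key named after the file's base name, and the Secret is mounted under /etc/prometheus/secrets/ in a directory named after the Secret. The sketch below only illustrates that mapping; the function name mounted_path is hypothetical.

```shell
# mounted_path SECRET FILE: print the path at which FILE is expected to
# appear inside the Prometheus container once SECRET is listed under
# spec.secrets. Only the base name of FILE is kept.
mounted_path() {
  printf '/etc/prometheus/secrets/%s/%s\n' "$1" "$(basename "$2")"
}

# Example: where the etcd client key ends up
mounted_path etcd-certs /etc/etcd/ssl/etcd-key.pem
```

These are the paths that the ServiceMonitor's tlsConfig has to reference.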

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        k8s-app: etcd1          # label of this ServiceMonitor
      name: etcd
      namespace: monitoring
    spec:
      endpoints:
      - interval: 30s
        port: etcd              # the port name, i.e. spec.ports.name in the Service
        scheme: https           # access scheme
        tlsConfig:
          caFile: /etc/prometheus/secrets/etcd-certs/ca.pem        # certificates live under /etc/prometheus/secrets, the default mount path
          certFile: /etc/prometheus/secrets/etcd-certs/etcd.pem
          keyFile: /etc/prometheus/secrets/etcd-certs/etcd-key.pem
      selector:
        matchLabels:
          k8s-app: etcd1
      namespaceSelector:
        matchNames:
        - kube-system           # namespace to match

    # etcd-serviceMonitor.yaml contains the YAML above
    kubectl apply -f etcd-serviceMonitor.yaml

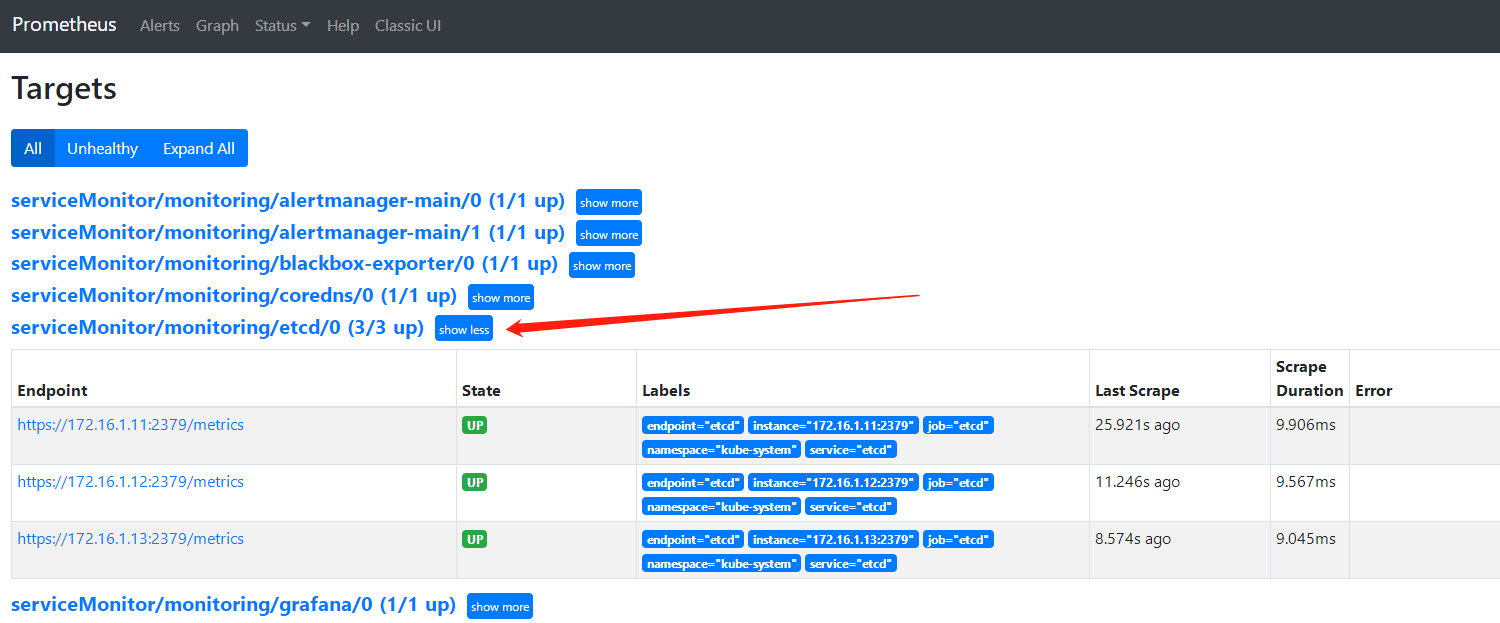

Final result:
