AI: Chapter 2 - Intelligent Agents

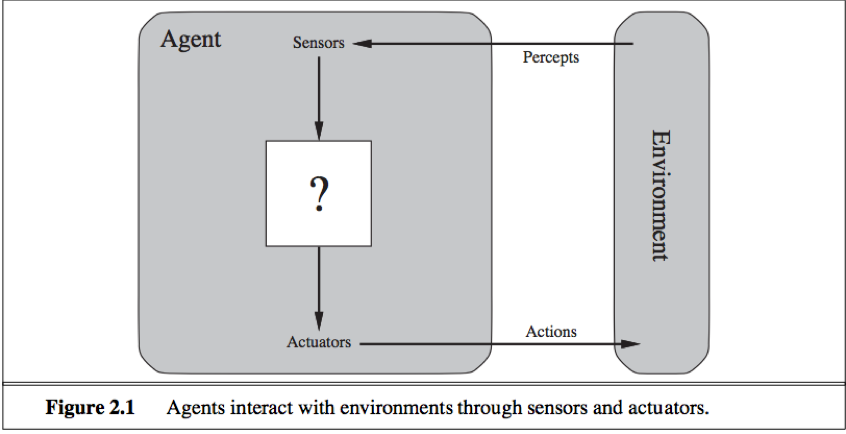

Agent

The agent function: an abstract mathematical description that maps any given percept sequence to an action. The table of the agent function is an external characterization of the agent.

The agent program: a concrete implementation of the agent function, running internally within some physical system.

Performance measure: evaluates any given sequence of environment states, capturing how desirable the agent’s behavior in an environment is.
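A minimal sketch of a performance measure, assuming a hypothetical two-square vacuum world (squares "A" and "B") and one possible measure that awards one point for each clean square at each time step. The point is that the measure scores a sequence of environment states, not the agent's intentions.

```python
from typing import Dict, List

# Hypothetical state encoding: square name -> "Clean" or "Dirty".
State = Dict[str, str]


def performance(state_sequence: List[State]) -> int:
    """Award one point for each clean square at each time step."""
    return sum(
        1
        for state in state_sequence
        for status in state.values()
        if status == "Clean"
    )


history = [
    {"A": "Dirty", "B": "Dirty"},  # t = 0: no clean squares
    {"A": "Clean", "B": "Dirty"},  # t = 1: one clean square
    {"A": "Clean", "B": "Clean"},  # t = 2: two clean squares
]
print(performance(history))  # 3
```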

Distinguish between rationality and omniscience

Omniscience: perfection maximizes actual performance. An omniscient agent knows the actual outcome of its actions and can act accordingly (impossible in reality).

Rationality maximizes expected performance.

Autonomy: To the extent that an agent relies on the prior knowledge of its designer rather than on its own percepts, we say that the agent lacks autonomy. An autonomous agent should learn what it can to compensate for partial or incorrect prior knowledge.

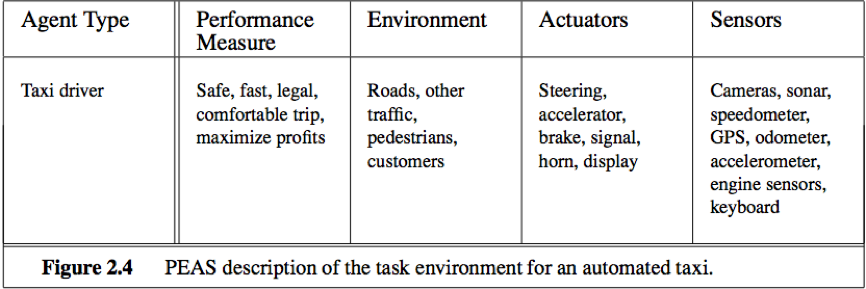

Task environments

In designing an agent, the first step must always be to specify the task environment as fully as possible.

PEAS (Performance measure, Environment, Actuators, Sensors) description of the task environment
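A PEAS description is essentially a record with four fields. The sketch below uses a Python dataclass; the entries for an automated taxi driver are illustrative, not an exhaustive specification, and the `PEAS` class name is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PEAS:
    """The four components of a task environment description."""
    performance: List[str]
    environment: List[str]
    actuators: List[str]
    sensors: List[str]


# Illustrative PEAS description for an automated taxi driver.
taxi = PEAS(
    performance=["safe", "fast", "legal", "comfortable trip", "maximize profits"],
    environment=["roads", "other traffic", "pedestrians", "customers"],
    actuators=["steering", "accelerator", "brake", "signal", "horn", "display"],
    sensors=["cameras", "sonar", "speedometer", "GPS", "odometer",
             "accelerometer", "engine sensors", "keyboard"],
)

# Print one line per PEAS component.
for field_name, values in vars(taxi).items():
    print(f"{field_name}: {', '.join(values)}")
```

Writing the four fields out explicitly is a cheap way to check that nothing about the task environment has been left unspecified before designing the agent.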

Some dimensions to categorize task environments:

1. Fully observable/partially observable:

If an agent’s sensors give it access to the complete state of the environment at each point in time, then we say that the task environment is fully observable.

An environment might be partially observable because of noisy and inaccurate sensors or because parts of the state are simply missing from the sensor data.

If the agent has no sensors at all then the environment is unobservable.
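The three cases differ only in what the sensors return. A minimal sketch, assuming a hypothetical two-square vacuum world whose state is the agent's location plus the dirt status of each square (all function names are illustrative):

```python
from typing import Dict, Tuple

# Hypothetical world state: agent location plus dirt status of each square.
world = {"location": "A", "status": {"A": "Dirty", "B": "Clean"}}


def full_percept(state: Dict) -> Dict:
    """Fully observable: the sensors report the complete state."""
    return {"location": state["location"], "status": dict(state["status"])}


def local_percept(state: Dict) -> Tuple[str, str]:
    """Partially observable: only the agent's own square is sensed."""
    loc = state["location"]
    return loc, state["status"][loc]


def no_percept(state: Dict) -> None:
    """Unobservable: the agent has no sensors at all."""
    return None


print(full_percept(world))   # {'location': 'A', 'status': {'A': 'Dirty', 'B': 'Clean'}}
print(local_percept(world))  # ('A', 'Dirty')
print(no_percept(world))     # None
```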

2. Single agent/multiagent:

If an object B’s behavior is best described as maximizing a performance measure whose value depends on agent A’s behavior, then agent A has to treat B as an agent; otherwise B can be treated merely as an object.

Example: chess is a competitive multiagent environment; taxi driving is a partially cooperative, partially competitive multiagent environment.

3. Deterministic/stochastic:

If the next state of the environment is completely determined by the current state and the action executed by the agent, we say the environment is deterministic, otherwise it is stochastic.

In principle, an agent need not worry about uncertainty in a fully observable, deterministic environment. We say an environment is uncertain if it is not fully observable or not deterministic.

In a stochastic environment, uncertainty about outcomes is quantified in terms of probabilities; a nondeterministic environment is one in which actions are characterized by their possible outcomes, but no probabilities are attached to them.
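The three kinds of environment correspond to three shapes of transition model. As a sketch under assumed toy states "A" and "B" (all names and the 0.9/0.1 split are hypothetical): a deterministic model returns one next state, a stochastic model returns a probability distribution over next states, and a nondeterministic model returns only the set of possible next states.

```python
import random
from typing import Dict, Set, Tuple

Key = Tuple[str, str]  # (state, action)


def deterministic_result(state: str, action: str) -> str:
    # Deterministic: the next state is a function of (state, action).
    return {("A", "Right"): "B", ("B", "Left"): "A"}.get((state, action), state)


# Stochastic: outcomes carry probabilities that sum to 1
# (e.g. the wheels slip 10% of the time in this made-up model).
stochastic_model: Dict[Key, Dict[str, float]] = {
    ("A", "Right"): {"B": 0.9, "A": 0.1},
}

# Nondeterministic: only the set of possible outcomes, no probabilities.
nondeterministic_model: Dict[Key, Set[str]] = {
    ("A", "Right"): {"A", "B"},
}


def sample_stochastic(state: str, action: str, rng: random.Random) -> str:
    """Draw one next state according to the stochastic model."""
    outcomes = stochastic_model[(state, action)]
    return rng.choices(list(outcomes), weights=list(outcomes.values()))[0]


rng = random.Random(0)
print(deterministic_result("A", "Right"))      # always 'B'
print(sample_stochastic("A", "Right", rng))    # 'B' with probability 0.9
print(nondeterministic_model[("A", "Right")])  # both outcomes are possible
```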

4. Episodic/sequential:

In an episodic task environment, the agent’s experience is divided into atomic episodes. In each episode the agent receives a percept and then performs a single action. The next episode does not depend on the actions taken in previous episodes.

In a sequential environment, the current decision could affect all future decisions.

5. Static/dynamic:

If the environment can change while an agent is deliberating (deciding on an action), we say the environment is dynamic for that agent; otherwise it is static.

If the environment itself does not change with the passage of time but the agent’s performance score does, we say the environment is semidynamic.

6. Discrete/continuous:

The discrete/continuous distinction applies to the state of the environment, to the way time is handled, and to the percepts and actions of the agent.

7. Known/unknown

The known/unknown distinction refers to the agent’s (or designer’s) state of knowledge about the “laws of physics” of the environment.

In a known environment, the outcomes (or outcome probabilities, if the environment is stochastic) for all actions are given.

In an unknown environment, the agent will have to learn how it works in order to make good decisions.

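The distinction between known and unknown environments is not the same as the one between fully and partially observable environments. A known environment can be partially observable (in solitaire, the rules are known but the face-down cards cannot be seen), and an unknown environment can be fully observable (in a new video game, the screen may show the entire game state, but the player does not yet know what the buttons do).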
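Putting the dimensions together, each task environment can be tagged with one value per dimension. The sketch below is illustrative: the classifications are the usual textbook-style ones, some entries depend on how the task is framed, and the known/unknown dimension is left out because it describes the agent's knowledge rather than the environment itself. `EnvProperties` and `is_uncertain` are hypothetical names.

```python
from typing import Dict, NamedTuple


class EnvProperties(NamedTuple):
    observable: str     # "fully" / "partially" / "unobservable"
    agents: str         # "single" / "multi"
    deterministic: str  # "deterministic" / "stochastic"
    episodic: str       # "episodic" / "sequential"
    static: str         # "static" / "semidynamic" / "dynamic"
    discrete: str       # "discrete" / "continuous"


ENVIRONMENTS: Dict[str, EnvProperties] = {
    "crossword puzzle": EnvProperties(
        "fully", "single", "deterministic", "sequential", "static", "discrete"),
    "chess with a clock": EnvProperties(
        "fully", "multi", "deterministic", "sequential", "semidynamic", "discrete"),
    "taxi driving": EnvProperties(
        "partially", "multi", "stochastic", "sequential", "dynamic", "continuous"),
}


def is_uncertain(props: EnvProperties) -> bool:
    # Uncertain if not fully observable or not deterministic.
    return props.observable != "fully" or props.deterministic != "deterministic"


for name, props in ENVIRONMENTS.items():
    print(f"{name:20} uncertain={is_uncertain(props)}")
```

Chess with a clock is tagged semidynamic because the board does not change while a player thinks, but the player's score is affected by the running clock.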

Structure of agents

Agent = architecture + program

The agent program implements the agent function.

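The agent program takes just the current percept as input, because nothing more is available from the environment; the agent function takes the entire percept history as input. If the agent’s actions need to depend on the entire percept sequence, the agent program has to remember the percepts itself.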
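One way to see the difference is a table-driven agent: the agent function is stored as a table indexed by whole percept sequences, while the agent program receives one percept per call and keeps the history itself. A minimal sketch, assuming hypothetical `(location, status)` percepts from a two-square vacuum world and a deliberately tiny table:

```python
from typing import Callable, Dict, List, Optional, Tuple

Percept = Tuple[str, str]  # (location, status)
Table = Dict[Tuple[Percept, ...], str]


def make_table_driven_agent(table: Table) -> Callable[[Percept], Optional[str]]:
    """Return an agent program implementing the agent function in `table`."""
    percepts: List[Percept] = []  # the program's own memory of the history

    def program(percept: Percept) -> Optional[str]:
        percepts.append(percept)
        # Look up the entire percept sequence; None if it is not tabulated.
        return table.get(tuple(percepts))

    return program


table: Table = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}
agent = make_table_driven_agent(table)
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
```

The table needs one entry per possible percept sequence, so it grows exponentially with the length of the history; this is why practical agent programs compute actions instead of looking them up.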

Four basic kinds of agent programs embody the principles underlying almost all intelligent systems:

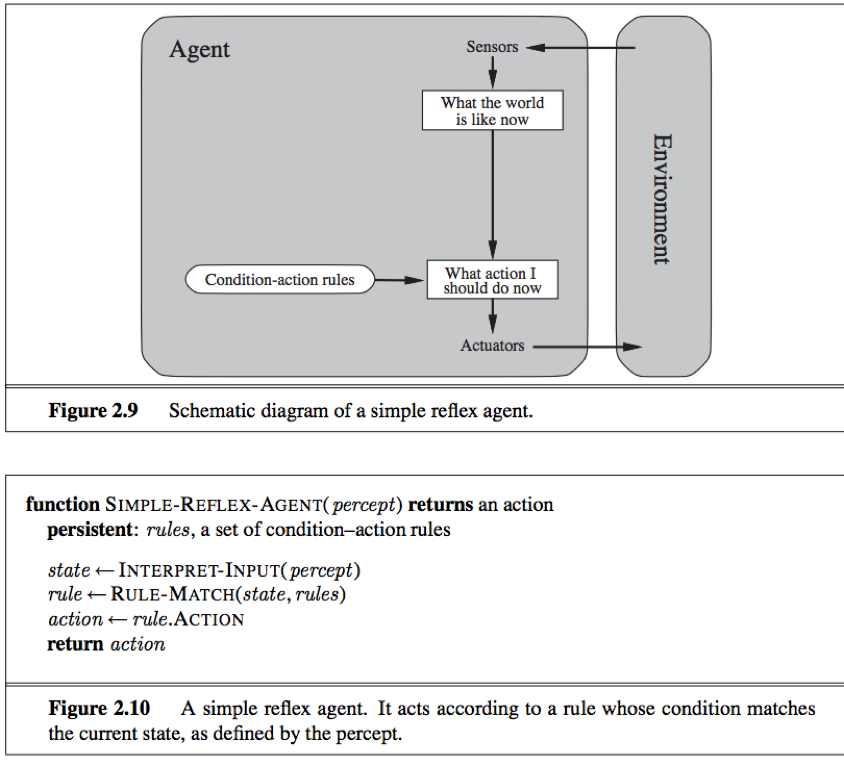

1. Simple reflex agents:

Simple reflex agents select actions on the basis of the current percept, ignoring the rest of the percept history. (They respond directly to percepts.)

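Simple, but of limited intelligence: a simple reflex agent works correctly only if the right decision can be made on the basis of the current percept alone, that is, only if the environment is fully observable.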
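A simple reflex agent is just a set of condition-action rules applied to the current percept. A minimal sketch for a hypothetical two-square vacuum world (squares "A" and "B", percepts of the form `(location, status)`):

```python
from typing import Tuple


def reflex_vacuum_agent(percept: Tuple[str, str]) -> str:
    """Condition-action rules that look only at the current percept."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    return "Left"  # location == "B"


for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(p, "->", reflex_vacuum_agent(p))
```

Because it has no memory, this agent keeps moving back and forth even after both squares are clean: it cannot know that the square it is not on has already been cleaned.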

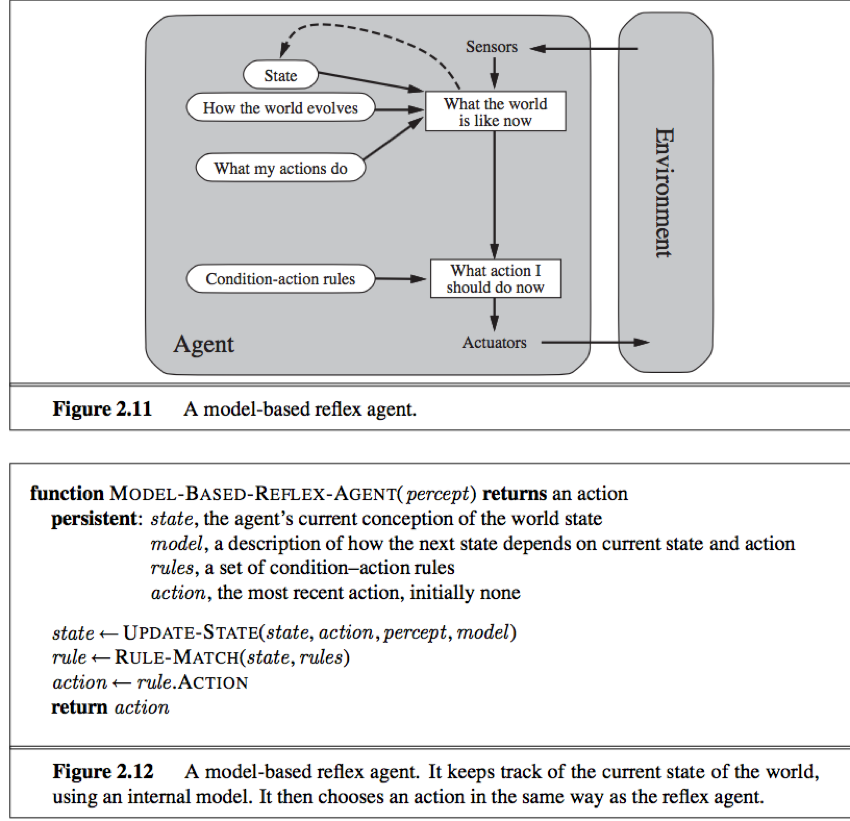

2. Model-based reflex agents

The knowledge about “how the world works” is called a model of the world, and an agent that uses such a model is called a model-based agent. (Model-based reflex agents maintain internal state to track aspects of the world that are not evident in the current percept.)

Based on the agent’s model of how the world works, the current percept is combined with the old internal state to generate the updated description of the current state.
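A minimal sketch of a model-based reflex agent, again assuming a hypothetical two-square vacuum world. The internal state records the last known status of each square; each new percept is merged into it, and a simple model of the action's effect ("sucking cleans the square") updates it further. The `NoOp` action and the class name are illustrative assumptions.

```python
from typing import Dict, Optional, Tuple

Percept = Tuple[str, str]  # (location, status)


class ModelBasedVacuumAgent:
    """Reflex agent whose rules consult an internal model of both squares."""

    def __init__(self) -> None:
        # None means "status unknown so far".
        self.model: Dict[str, Optional[str]] = {"A": None, "B": None}

    def update_state(self, percept: Percept) -> str:
        # Combine the current percept with the old internal state.
        location, status = percept
        self.model[location] = status
        return location

    def __call__(self, percept: Percept) -> str:
        location = self.update_state(percept)
        if self.model[location] == "Dirty":
            self.model[location] = "Clean"  # model of the effect of Suck
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"  # both squares known to be clean
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
print(agent(("B", "Clean")))  # NoOp
```

Unlike the simple reflex agent, it can stop once its internal state says both squares are clean, even though no single percept shows both squares.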

3. Goal-based agents

The agent program can combine some sort of goal information, which describes situations that are desirable, with the model to choose actions that achieve the goal. (Goal-based agents act to achieve their goals.)
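When no single action reaches the goal, a goal-based agent has to look ahead through its model to find a sequence of actions. A minimal sketch, using breadth-first search as one possible way to do this (the notes do not prescribe a method) over a hypothetical model mapping each state to `{action: next_state}`:

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional, Tuple

# Hypothetical model: state -> {action: next_state}.
Model = Dict[str, Dict[str, str]]


def plan(model: Model, start: str,
         goal_test: Callable[[str], bool]) -> Optional[List[str]]:
    """Breadth-first search for an action sequence that reaches a goal state."""
    frontier: Deque[Tuple[str, List[str]]] = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if goal_test(state):
            return actions
        for action, nxt in model.get(state, {}).items():
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None  # goal unreachable under this model


road_map: Model = {
    "Home": {"drive_north": "Mall", "drive_east": "Station"},
    "Station": {"drive_north": "Airport"},
    "Mall": {"drive_east": "Park"},
}
print(plan(road_map, "Home", lambda s: s == "Airport"))  # ['drive_east', 'drive_north']
```

Changing the goal only means passing a different `goal_test`; the model and the search stay the same, which is the flexibility goal-based agents gain over reflex rules.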

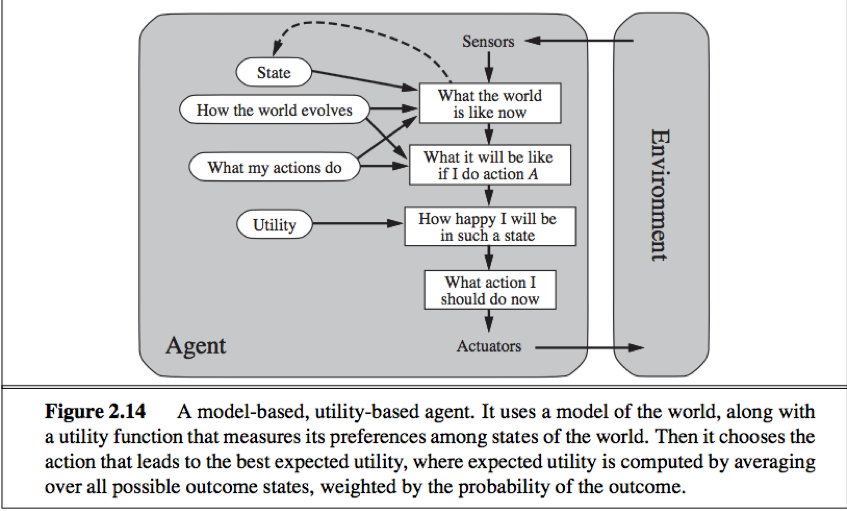

4. Utility-based agents

Utility function: an internalization of the performance measure.

A rational utility-based agent chooses the action that maximizes the expected utility of the action outcomes. (Utility-based agents try to maximize their own expected “happiness”.)
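Maximizing expected utility can be written as choosing the action $a$ that maximizes $\sum_{s'} P(s' \mid a)\,U(s')$. A minimal sketch of that choice, with a hypothetical outcome model and made-up utilities for two taxi routes:

```python
from typing import Callable, Dict

# Hypothetical outcome model: action -> {outcome: probability}.
OutcomeModel = Dict[str, Dict[str, float]]


def expected_utility(action: str, model: OutcomeModel,
                     utility: Callable[[str], float]) -> float:
    """Probability-weighted sum of the utilities of the action's outcomes."""
    return sum(p * utility(outcome) for outcome, p in model[action].items())


def choose_action(model: OutcomeModel, utility: Callable[[str], float]) -> str:
    """Pick the action with maximum expected utility."""
    return max(model, key=lambda a: expected_utility(a, model, utility))


routes: OutcomeModel = {
    "highway": {"on_time": 0.7, "late": 0.3},
    "back_roads": {"on_time": 0.5, "late": 0.5},
}
utilities = {"on_time": 10.0, "late": -5.0}


def utility(outcome: str) -> float:
    return utilities[outcome]


print(choose_action(routes, utility))  # highway
```

Here the highway has expected utility 0.7 × 10 + 0.3 × (−5) = 5.5 versus 2.5 for the back roads. Even if the highway turns out late on a given trip, choosing it was still rational: rationality maximizes expected, not actual, performance.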

Learning agents

Learning element: responsible for making improvements. It uses feedback from the critic on how the agent is doing and determines how the performance element should be modified to do better in the future.

Performance element: responsible for selecting external actions (it is what was previously considered the entire agent: it takes in percepts and decides on actions).

Critic: tells the learning element how well the agent is doing with respect to a fixed performance standard.

Problem generator: responsible for suggesting actions that will lead to new and informative experiences.
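The four components can be wired together on a toy task. The sketch below is one possible instantiation, not the only one: it assumes a hypothetical two-armed bandit, uses epsilon-greedy exploration as the problem generator, and uses an incremental average of rewards as the learning element. All class, method, and parameter names are illustrative.

```python
import random
from typing import Callable, Dict, List


class LearningAgent:
    """Learning-agent skeleton on a hypothetical multi-armed bandit task."""

    def __init__(self, actions: List[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.epsilon = epsilon  # how often the problem generator takes over
        self.estimates: Dict[str, float] = {a: 0.0 for a in actions}
        self.counts: Dict[str, int] = {a: 0 for a in actions}

    def performance_element(self) -> str:
        # Select the external action currently believed to be best.
        return max(self.estimates, key=self.estimates.get)

    def problem_generator(self) -> str:
        # Suggest an exploratory action to gain new, informative experience.
        return self.rng.choice(list(self.estimates))

    def critic(self, reward: float) -> float:
        # Fixed performance standard: here simply the raw reward.
        return reward

    def learning_element(self, action: str, feedback: float) -> None:
        # Update the performance element's knowledge (running mean of feedback).
        self.counts[action] += 1
        self.estimates[action] += (feedback - self.estimates[action]) / self.counts[action]

    def step(self, env_reward: Callable[[str], float]) -> str:
        explore = self.rng.random() < self.epsilon
        action = self.problem_generator() if explore else self.performance_element()
        self.learning_element(action, self.critic(env_reward(action)))
        return action


payout = {"left": 0.2, "right": 0.8}  # hypothetical success probabilities
env_rng = random.Random(42)
agent = LearningAgent(list(payout))
for _ in range(500):
    agent.step(lambda a: 1.0 if env_rng.random() < payout[a] else 0.0)
print(agent.estimates, agent.counts)
```

Without the problem generator, the agent would keep choosing whichever action happened to look best first; occasional exploration is what lets the learning element discover that "right" pays off more often, and each estimate tends toward its true payout rate as that action is tried more.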

How the components of agent programs work

We can place the representations of the environment that the agent inhabits along an axis of increasing complexity and expressiveness (the ability to express things concisely): atomic, factored, and structured.

Atomic representation: each state of the world is indivisible – it has no internal structure.

Factored representation: splits up each state into a fixed set of variables or attributes, each of which can have a value.

Structured representation: one in which objects and their various and varying relationships can be described explicitly.
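The same driving situation can be encoded at each level of expressiveness. A minimal sketch with hypothetical names and values: an atomic state is an opaque label that can only be compared for equality, a factored state is a fixed set of variable assignments, and a structured state is a set of relations between objects.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple

# Atomic: an opaque label with no internal structure.
atomic_state = "S17"

# Factored: a fixed set of variables, each with a value.
factored_state: Dict[str, object] = {
    "location": "CityA",
    "fuel": 0.75,
    "oil_warning": False,
}


# Structured: objects and explicit relationships among them.
@dataclass(frozen=True)
class Relation:
    name: str
    args: Tuple[str, ...]


structured_state: Set[Relation] = {
    Relation("In", ("truck1", "CityA")),
    Relation("Blocking", ("cow1", "truck1")),
    Relation("Loaded", ("package7", "truck1")),
}


def holds(state: Set[Relation], name: str, *args: str) -> bool:
    """Check whether a relation between specific objects is true in the state."""
    return Relation(name, args) in state


print(atomic_state == "S17")                                  # True
print(factored_state["fuel"])                                 # 0.75
print(holds(structured_state, "Blocking", "cow1", "truck1"))  # True
```

Only the structured form can say *which* object is blocking *which*; a factored encoding would need a separate variable for every possible pair of objects.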