# Coroutines with greenlet

from greenlet import greenlet

def eat(name):
    print('%s eat 1' % name)
    g2.switch('taibai')  # the first switch into a greenlet must pass the function's arguments
    print('%s eat 2' % name)
    g2.switch()

def play(name):
    print('%s play 1' % name)
    g1.switch()
    print('%s play 2' % name)

g1 = greenlet(eat)
g2 = greenlet(play)
g1.switch('taibai')

# Other thread methods

from threading import Thread
import threading
import time
from multiprocessing import Process
import os

def work():
    time.sleep(1)
    # print('child thread', threading.get_ident())  # e.g. 2608
    print(threading.current_thread().name)  # e.g. Thread-1 (shown as "Thread-1 (work)" on Python 3.10+)

if __name__ == '__main__':
    # Start a thread from the main process
    t = Thread(target=work)
    t.start()

    # print(threading.current_thread())        # main thread object, e.g. <_MainThread(MainThread, started 1376)>
    # print(threading.current_thread().name)   # main thread name: MainThread
    # print(threading.current_thread().ident)  # main thread ID, e.g. 1376
    # print(threading.get_ident())             # main thread ID, e.g. 1376

    time.sleep(3)
    # The worker sleeps for only 1 second, so by now it has finished and only the main thread is alive.
    # Called while the worker is still running, these would report both threads, e.g.
    # [<_MainThread(MainThread, started 13396)>, <Thread(Thread-1, started 572)>] and 2.
    print(threading.enumerate())
    print(threading.active_count())  # 1
    print('main thread / main process')
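The thread count reported by `threading.enumerate()` and `threading.active_count()` depends on when they are called. The following is a minimal sketch, not part of the original notes, that holds the worker alive with a `threading.Event` so the counts are deterministic. The function name `snapshot_threads` and the thread name `worker-1` are hypothetical, chosen only for illustration.

```python
import threading

def snapshot_threads():
    """Return (thread names while the worker is alive,
    extra live threads while it runs, extra live threads after join)."""
    release = threading.Event()   # lets the caller decide when the worker exits

    def work():
        release.wait()            # block until the caller releases the worker

    baseline = threading.active_count()
    t = threading.Thread(target=work, name="worker-1")  # hypothetical name
    t.start()

    names_alive = sorted(th.name for th in threading.enumerate())
    extra_alive = threading.active_count() - baseline

    release.set()                 # let the worker finish
    t.join()
    extra_after = threading.active_count() - baseline
    return names_alive, extra_alive, extra_after

if __name__ == "__main__":
    print(snapshot_threads())
```

Counts are reported relative to a baseline because the hosting environment may already run threads of its own.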

# Queues

import queue

# FIFO queue: first in, first out
q2 = queue.Queue()
q2.put('first')
q2.put('second')
q2.put('third')
print(q2.get())  # first
print(q2.get())  # second
print(q2.get())  # third

# LIFO queue: behaves like a stack
q1 = queue.LifoQueue()
q1.put(1)
q1.put(2)
q1.put(3)
print(q1.get())  # 3
print(q1.get())  # 2
print(q1.get())  # 1
# print(q1.get())  # would block: the queue is now empty
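A `get()` on an empty queue blocks by default. As a hedged sketch of one way around that, the standard library offers `get_nowait()` and `get(timeout=...)`, both of which raise `queue.Empty` instead of waiting forever. The helper name `drain` is an assumption made for this example.

```python
import queue

def drain(q):
    """Collect items until the queue is empty, without ever blocking."""
    items = []
    while True:
        try:
            items.append(q.get_nowait())  # equivalent to q.get(block=False)
        except queue.Empty:
            return items

stack = queue.LifoQueue()
for n in (1, 2, 3):
    stack.put(n)
print(drain(stack))  # [3, 2, 1]

try:
    stack.get(timeout=0.1)  # wait at most 0.1 s, then give up
except queue.Empty:
    print("queue is empty")
```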

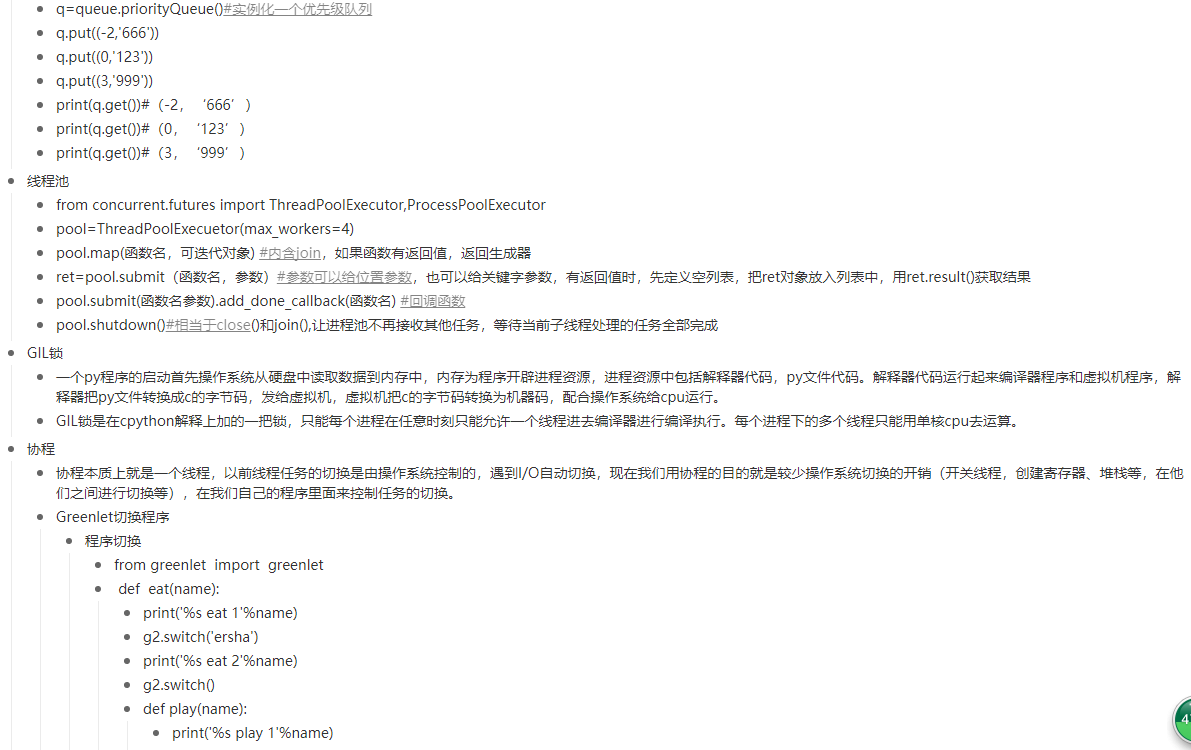

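# Priority queue

import queue

q = queue.PriorityQueue()  # create a priority queue; the smallest priority value comes out first
q.put((-1, '666'))
q.put((0, '999'))
q.put((3, 'hahaha'))
q.put((9, '123'))
print(q.get())  # (-1, '666')
print(q.get())  # (0, '999')
print(q.get())  # (3, 'hahaha')
print(q.get())  # (9, '123')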
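Because `PriorityQueue` compares whole tuples, two entries with the same priority fall back to comparing their payloads, which fails for unorderable objects such as dicts. A common workaround, sketched here under the assumption that insertion order should break ties, is to add a running counter as the second tuple element. The helpers `put_task` and `get_task` are hypothetical names.

```python
import itertools
import queue

_counter = itertools.count()  # unique, increasing tie-breaker

def put_task(pq, priority, task):
    # The counter guarantees the comparison never reaches `task`.
    pq.put((priority, next(_counter), task))

def get_task(pq):
    priority, _, task = pq.get()
    return priority, task

pq = queue.PriorityQueue()
put_task(pq, 1, {"job": "b"})
put_task(pq, 1, {"job": "a"})
put_task(pq, 0, {"job": "c"})
print(get_task(pq))  # (0, {'job': 'c'})
print(get_task(pq))  # (1, {'job': 'b'})
print(get_task(pq))  # (1, {'job': 'a'})
```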

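# Thread pool methods

import time
from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor

def func(i):
    print(i)
    time.sleep(1)
    return i ** 2

t_pool = ThreadPoolExecutor(max_workers=4)  # create a thread pool with at most 4 worker threads
# map submits all tasks at once and returns a lazy iterator (a generator);
# iterating over it waits for each result and yields them in input order
ret = t_pool.map(func, range(10))
print(ret, [i for i in ret])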
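Besides `map`, the pool also accepts individual tasks through `submit`, which returns a `Future`. The sketch below is an illustrative alternative rather than the original author's code: it collects results as tasks finish with `as_completed`, and uses a `with` block so the pool is shut down automatically. The names `square` and `squares_with_submit` are assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

def square(i):
    time.sleep(0.05)  # stand-in for a short blocking call
    return i ** 2

def squares_with_submit(values, max_workers=4):
    """Map each input value to its square using submit + as_completed."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Remember which input each future belongs to.
        futures = {pool.submit(square, v): v for v in values}
        for fut in as_completed(futures):  # yields futures in completion order
            results[futures[fut]] = fut.result()
    return results

if __name__ == "__main__":
    print(squares_with_submit(range(10)))
```

Unlike `map`, completion order is not input order, which is why the results are keyed by input value.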

# Comparing the run time of multithreading and multiprocessing for CPU-bound and I/O-bound work

import time
from multiprocessing import Process
from threading import Thread

def func():
    num = 0
    # time.sleep(1)  # enable this instead of the loop for the I/O-bound case
    for i in range(100000000):
        num += i

if __name__ == '__main__':
    # Time 10 processes
    p_s_t = time.time()
    p_list = []
    for i in range(10):
        p = Process(target=func)
        p_list.append(p)
        p.start()
    for pp in p_list:
        pp.join()
    p_e_t = time.time()
    p_dif_t = p_e_t - p_s_t

    # Time 10 threads
    t_s_t = time.time()
    t_list = []
    for i in range(10):
        t = Thread(target=func)
        t_list.append(t)
        t.start()
    for tt in t_list:
        tt.join()
    t_e_t = time.time()
    t_dif_t = t_e_t - t_s_t

    print("multiprocessing:", p_dif_t)
    print("multithreading:", t_dif_t)
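For the I/O-bound half of the comparison, a small sketch can show why threads help there. It assumes `time.sleep` is an acceptable stand-in for blocking I/O, since a sleeping thread releases the GIL, and it compares threads against a plain sequential loop rather than against processes. The function names are hypothetical.

```python
import time
from threading import Thread

def io_task(delay=0.2):
    time.sleep(delay)  # stand-in for blocking I/O; the GIL is released while sleeping

def run_sequential(n):
    """Run n tasks one after another and return the elapsed seconds."""
    start = time.perf_counter()
    for _ in range(n):
        io_task()
    return time.perf_counter() - start

def run_threaded(n):
    """Run n tasks in n threads and return the elapsed seconds."""
    start = time.perf_counter()
    threads = [Thread(target=io_task) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start

if __name__ == "__main__":
    print("sequential:", run_sequential(5))
    print("threaded:  ", run_threaded(5))
```

The sequential run takes roughly the sum of the delays, while the threaded run takes roughly one delay, because the waits overlap.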

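# For pure computation, switching between tasks actually makes the program slower

import time

def consumer():
    '''Task 1: receive data and process it'''
    while True:
        x = yield
        # time.sleep(1)  # note: yield only switches tasks; it does not save any I/O wait time
        print('processed data:', x)

def producer():
    '''Task 2: produce data'''
    g = consumer()
    next(g)  # advance consumer to its first yield
    for i in range(3):
    # for i in range(10000000):
        # Send a value to the waiting yield, then loop and send the next one.
        # The extra switching adds steps compared with plain serial execution, so it runs slower.
        g.send(i)
        print('sent data:', i)

start = time.time()
# yield saves each task's state, so the two tasks switch back and forth, which gives the effect of concurrency.
# PS: if every task prints something, the output visibly alternates between the two tasks.
producer()  # only this function is called in the current thread, but its send() calls switch to the other task
stop = time.time()
print(stop - start)

# Serial execution, for comparison.
# The original snippet calls producer() and consumer(res) here, but consumer() above takes no
# argument and producer() returns nothing. The two functions below are an assumed minimal reconstruction.
def serial_producer():
    return list(range(3))

def serial_consumer(res):
    for x in res:
        print('processed data:', x)

start = time.time()
res = serial_producer()
serial_consumer(res)
stop = time.time()
print(stop - start)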
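To make the back-and-forth switching visible without relying on printed output, the following sketch records each step in a shared list. It is an illustration only; `logging_consumer` and `logging_producer` are hypothetical names, not functions from the original notes.

```python
def logging_consumer(log):
    """Receive values through yield and record each one."""
    while True:
        x = yield
        log.append(("consumed", x))

def logging_producer(n):
    """Send n values to the consumer and return the interleaved event log."""
    log = []
    g = logging_consumer(log)
    next(g)                      # advance the generator to its first yield
    for i in range(n):
        g.send(i)                # control jumps into the consumer and back
        log.append(("sent", i))
    g.close()                    # stop the consumer's infinite loop
    return log

print(logging_producer(2))
# [('consumed', 0), ('sent', 0), ('consumed', 1), ('sent', 1)]
```

Each `send` runs the consumer up to its next `yield` before the producer continues, so the log alternates strictly between the two tasks while everything stays on a single thread.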

