Chapter 10: Advanced Kubernetes: Monitoring k8s Cluster Resources


References: https://www.jianshu.com/p/91f9d9ec374f

https://www.cnblogs.com/zealousness/p/11174365.html

1. Kubernetes monitoring metrics

Cluster monitoring

- Node resource utilization

- Number of nodes

- Running Pods

Pod monitoring

- Kubernetes metrics

- Container metrics

- Application metrics

2. Kubernetes monitoring solutions

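| Solution | Alerting | Characteristics | Best suited for |
| --- | --- | --- | --- |
| Zabbix | Y | Requires a lot of customization work | Most Internet companies |
| open-falcon | Y | Functionality is split into fine-grained modules, which makes it relatively complex | System and application monitoring |
| cAdvisor+Heapster+InfluxDB+Grafana | Y | Simple and easy to use | Container monitoring |
| cAdvisor/exporter+Prometheus+Grafana | Y | Highly extensible | Full coverage of containers, applications, and hosts |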

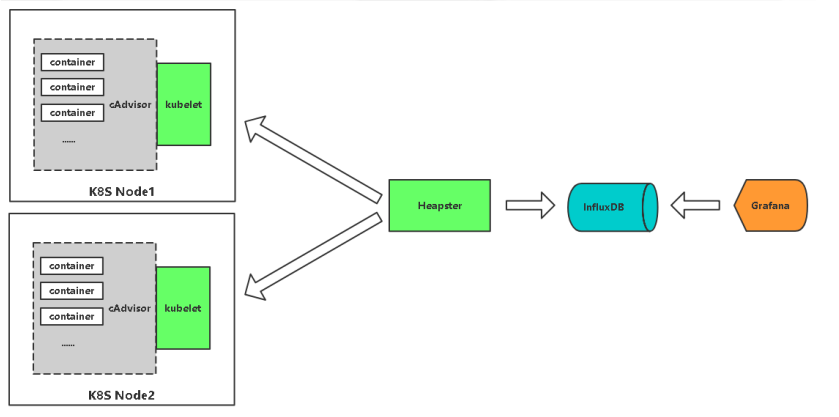

3. Heapster+InfluxDB+Grafana

Monitoring architecture

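1. cAdvisor: an open-source service from Google built specifically for monitoring containers. It is already integrated into k8s (data-collection agent).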

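cAdvisor is the best-known monitoring agent in Kubernetes. It runs on every Kubernetes node and collects monitoring data for the host and its containers (CPU, memory, filesystem, network, uptime). In newer versions, Kubernetes has built the cAdvisor functionality into the kubelet component, so each node's data can be accessed directly over HTTP.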
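The kubelet serves the embedded cAdvisor data in the Prometheus text format (on recent versions under the kubelet's `/metrics/cadvisor` path; check the exact path and port for your version). The minimal sketch below parses a few such lines offline so the shape of the data is visible. The sample lines, pod name, and values are invented for illustration, and the parser is deliberately simplified: it skips comments and ignores trailing timestamps, and it does not handle escaped quotes inside label values.

```python
import re
from typing import Dict, List, Tuple

# metric_name{label="value",...} value [timestamp]
LINE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)'
)
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')

Sample = Tuple[str, Dict[str, str], float]


def parse_metrics(text: str) -> List[Sample]:
    """Parse simplified Prometheus text-format lines into (name, labels, value)."""
    samples: List[Sample] = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines and HELP/TYPE comments
            continue
        m = LINE_RE.match(line)
        if m is None:
            continue
        labels = dict(LABEL_RE.findall(m.group("labels") or ""))
        samples.append((m.group("name"), labels, float(m.group("value"))))
    return samples


# Hypothetical excerpt of cAdvisor output; the values are made up.
SAMPLE = '''
# TYPE container_memory_working_set_bytes gauge
container_memory_working_set_bytes{namespace="kube-system",pod="heapster-abc",container="heapster"} 2.5e+07
container_cpu_usage_seconds_total{namespace="kube-system",pod="heapster-abc",container="heapster"} 12.5 1600000000000
'''

if __name__ == "__main__":
    for name, labels, value in parse_metrics(SAMPLE):
        print(name, labels.get("pod"), value)
```

For real monitoring you would use a proper Prometheus client parser; this sketch only shows what one cAdvisor data point carries (metric name, container/pod/namespace labels, value).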

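2. Heapster: a monitoring and performance-analysis tool for container clusters, with native support for Kubernetes and CoreOS (data collection). Note, however, that Heapster has been retired and is no longer maintained.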

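Heapster is a collector: it gathers the cAdvisor data from every node and aggregates it across the cluster. It can also group the data by Kubernetes resource type, such as Pod or Namespace, and report CPU, memory, network, and disk metrics for each group. The default metric aggregation interval is 1 minute. The data can also be exported to third-party tools such as InfluxDB.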
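To make "aggregate by Pod and Namespace" concrete, here is a minimal sketch of that roll-up step, assuming per-container readings have already been gathered from each node. The `ContainerSample` shape, the field names, and the units are hypothetical (not Heapster's internal model), and the 1-minute time window is not modeled: the sketch only sums one round of readings.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class ContainerSample:
    """One per-container reading as a node agent might report it (hypothetical shape)."""
    node: str
    namespace: str
    pod: str
    container: str
    cpu_millicores: float
    memory_bytes: int


PodKey = Tuple[str, str]  # (namespace, pod)
Totals = Dict[str, float]


def aggregate(samples: Iterable[ContainerSample]) -> Tuple[Dict[PodKey, Totals], Dict[str, Totals]]:
    """Sum container readings from all nodes into per-pod and per-namespace totals."""
    by_pod: Dict[PodKey, Totals] = defaultdict(lambda: {"cpu": 0.0, "memory": 0.0})
    by_ns: Dict[str, Totals] = defaultdict(lambda: {"cpu": 0.0, "memory": 0.0})
    for s in samples:
        # each reading counts toward both its pod and its namespace
        for bucket in (by_pod[(s.namespace, s.pod)], by_ns[s.namespace]):
            bucket["cpu"] += s.cpu_millicores
            bucket["memory"] += s.memory_bytes
    return dict(by_pod), dict(by_ns)
```

Because pods of one namespace can run on different nodes, the namespace totals only make sense after readings from all nodes are merged, which is why this step lives in a central collector rather than in the per-node agent.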

3. InfluxDB: an open-source time-series database (data storage).

4. Grafana: an open-source data visualization tool (data presentation).

Deploying the monitoring stack

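Because cAdvisor is already integrated into k8s, only the remaining components need to be deployed, in this order: InfluxDB -> Heapster -> Grafana. Heapster writes into InfluxDB and Grafana reads from it, so InfluxDB has to come first.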
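The ordering can be scripted. This is a minimal sketch that runs one `kubectl apply -f` per manifest, assuming the three files are named `influxdb.yaml`, `heapster.yaml`, and `grafana.yaml` (as in the listings below) and sit in the current directory. With `dry_run=True` (the default) it only returns the commands it would run.

```python
import subprocess
from typing import List, Sequence

# Dependency order: Heapster writes to InfluxDB, and Grafana reads from InfluxDB.
MANIFESTS = ["influxdb.yaml", "heapster.yaml", "grafana.yaml"]


def apply_in_order(files: Sequence[str], dry_run: bool = True) -> List[List[str]]:
    """Build (and, unless dry_run, execute) one `kubectl apply -f` per manifest, in order."""
    commands = [["kubectl", "apply", "-f", f] for f in files]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError and stops at the first failing manifest
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    for cmd in apply_in_order(MANIFESTS):
        print(" ".join(cmd))
```

Note that `kubectl apply` only submits the objects and does not wait for the Pods to become ready. If strict ordering matters, waiting between steps with something like `kubectl rollout status deployment/monitoring-influxdb -n kube-system` is one option.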

1. Deploy InfluxDB

```yaml
# cat influxdb.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-influxdb
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: influxdb
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: influxdb
    spec:
      containers:
      - name: influxdb
        #image: k8s.gcr.io/heapster-influxdb-amd64:v1.3.3
        image: statemood/heapster-influxdb-amd64:v1.3.3
        volumeMounts:
        - mountPath: /data
          name: influxdb-storage
      volumes:
      - name: influxdb-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-influxdb
  name: monitoring-influxdb
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - nodePort: 31001
    port: 8086
    targetPort: 8086
  selector:
    k8s-app: influxdb
```
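Because the Service above exposes InfluxDB on NodePort 31001, you can check that it is up through its HTTP `/query` endpoint (InfluxDB 1.x API). This is a minimal sketch: the node address is a placeholder to replace with one of your node IPs. After Heapster is running, `SHOW MEASUREMENTS` against its database should list the metrics it wrote. The database name `k8s` used in the usage note is an assumption (Heapster's usual default for the InfluxDB sink), so confirm it with `SHOW DATABASES` first.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def influx_query_url(node_ip: str, query: str, db: Optional[str] = None,
                     node_port: int = 31001) -> str:
    """Build an InfluxDB 1.x /query URL that goes through the NodePort Service."""
    params = {"q": query}
    if db is not None:
        params["db"] = db
    return f"http://{node_ip}:{node_port}/query?" + urllib.parse.urlencode(params)


def influx_query(node_ip: str, query: str, db: Optional[str] = None,
                 timeout: float = 5.0) -> dict:
    """Run the query with an HTTP GET and decode the JSON body (needs network access to the node)."""
    with urllib.request.urlopen(influx_query_url(node_ip, query, db), timeout=timeout) as resp:
        return json.load(resp)
```

Usage against a live cluster would look like `influx_query("<node-ip>", "SHOW DATABASES")`, followed by `influx_query("<node-ip>", "SHOW MEASUREMENTS", db="k8s")` once Heapster has written data.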

2. Deploy Heapster

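Heapster first gets the list of all nodes in the cluster from the apiserver. It then pulls the useful data from the kubelet on each node, and the kubelet itself gets its data from cAdvisor. Heapster pushes everything it collects to its configured storage backend, and it also supports visualizing the data.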
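The flow just described (discover nodes via the apiserver, scrape each kubelet, push to a sink) is a simple source-to-sink loop. The sketch below models one collection round with plain functions. `list_nodes`, `scrape_node`, and `sink` are hypothetical stand-ins, not Heapster's real interfaces. Skipping an unreachable node instead of aborting the round is a design choice of this sketch.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical stand-ins for the three stages:
#   list_nodes  -> asks the apiserver which nodes exist
#   scrape_node -> reads one node's kubelet (which gets its data from cAdvisor)
#   sink        -> writes to the configured backend (InfluxDB in this chapter)
ListNodes = Callable[[], Iterable[str]]
ScrapeNode = Callable[[str], Dict[str, float]]
Sink = Callable[[str, Dict[str, float]], None]


def collect_once(list_nodes: ListNodes, scrape_node: ScrapeNode, sink: Sink) -> List[str]:
    """Run one collection round and return the nodes that could not be scraped."""
    failed: List[str] = []
    for node in list_nodes():
        try:
            metrics = scrape_node(node)
        except OSError:
            failed.append(node)  # one unreachable kubelet should not stop the whole round
            continue
        sink(node, metrics)
    return failed
```

A real collector would call `collect_once` on a timer (Heapster's default resolution is the 1-minute interval mentioned earlier) and batch its writes to the sink.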

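Because Heapster needs to read data from the apiserver, it must be granted access. Here the heapster ServiceAccount is bound to the cluster-admin ClusterRole, which gives it full cluster-administrator rights.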
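After the manifest below is applied, you can confirm that the binding works by asking the apiserver what the `heapster` ServiceAccount is allowed to do. `kubectl auth can-i` with `--as=system:serviceaccount:<namespace>:<name>` impersonates that account. Impersonation requires that your own kubeconfig user has impersonation rights, which a cluster admin does. The helper names below are hypothetical.

```python
import subprocess
from typing import List


def can_i_command(verb: str, resource: str,
                  namespace: str = "kube-system", sa: str = "heapster") -> List[str]:
    """Build a `kubectl auth can-i` call that impersonates a ServiceAccount."""
    return ["kubectl", "auth", "can-i", verb, resource,
            f"--as=system:serviceaccount:{namespace}:{sa}"]


def service_account_can(verb: str, resource: str) -> bool:
    """Run the check. kubectl prints "yes" and exits 0 when the action is allowed."""
    result = subprocess.run(can_i_command(verb, resource), capture_output=True, text=True)
    return result.returncode == 0 and result.stdout.strip() == "yes"
```

For example, `service_account_can("list", "nodes")` should return `True` once the ClusterRoleBinding is in place.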

```yaml
# cat heapster.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
kind: ClusterRoleBinding
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 has been available since 1.8
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: heapster
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: heapster
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/heapster-amd64:v1.4.2
        imagePullPolicy: IfNotPresent
        command:
        - /heapster
        - --source=kubernetes:https://kubernetes.default
        - --sink=influxdb:http://monitoring-influxdb:8086
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster
```

3. Deploy Grafana

```yaml
# cat grafana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        #image: k8s.gcr.io/heapster-grafana-amd64:v4.4.3
        image: pupudaye/heapster-grafana-amd64:v4.4.3
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
        # The following env variables are required to make Grafana accessible via
        # the kubernetes api-server proxy. On production clusters, we recommend
        # removing these env variables, setup auth for grafana, and expose the grafana
        # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  type: NodePort
  ports:
  - nodePort: 30108
    port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
```

The Service's NodePort is set to a custom value, 30108, so Grafana is reachable on port 30108 of any node.
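If the page does not load in the browser, a quick first check is whether the NodePort accepts TCP connections at all. This minimal sketch only tests the TCP port, not Grafana's health. The node address you pass in is a placeholder for one of your own node IPs.

```python
import socket


def port_open(host: str, port: int = 30108, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

If `port_open("<node-ip>")` returns `False`, look at the Service and the node's firewall before looking at Grafana itself.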

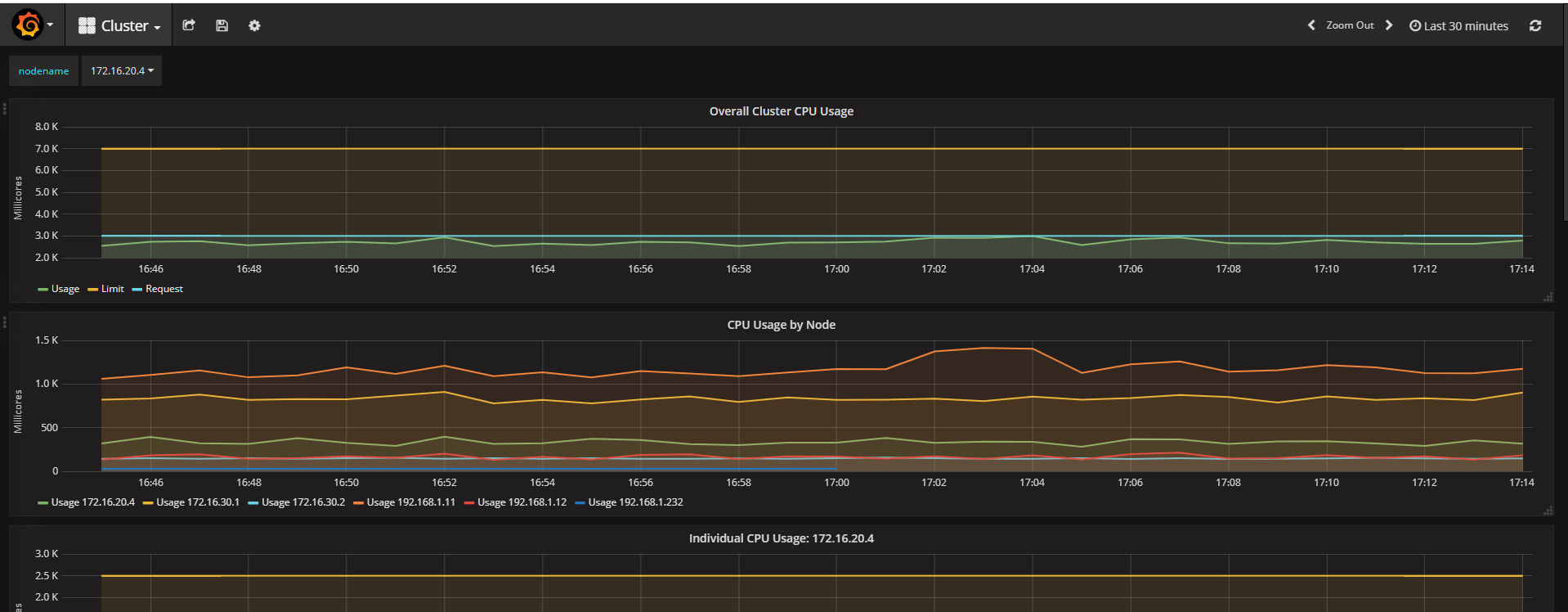

Grafana ships with built-in Cluster and Pods dashboards.

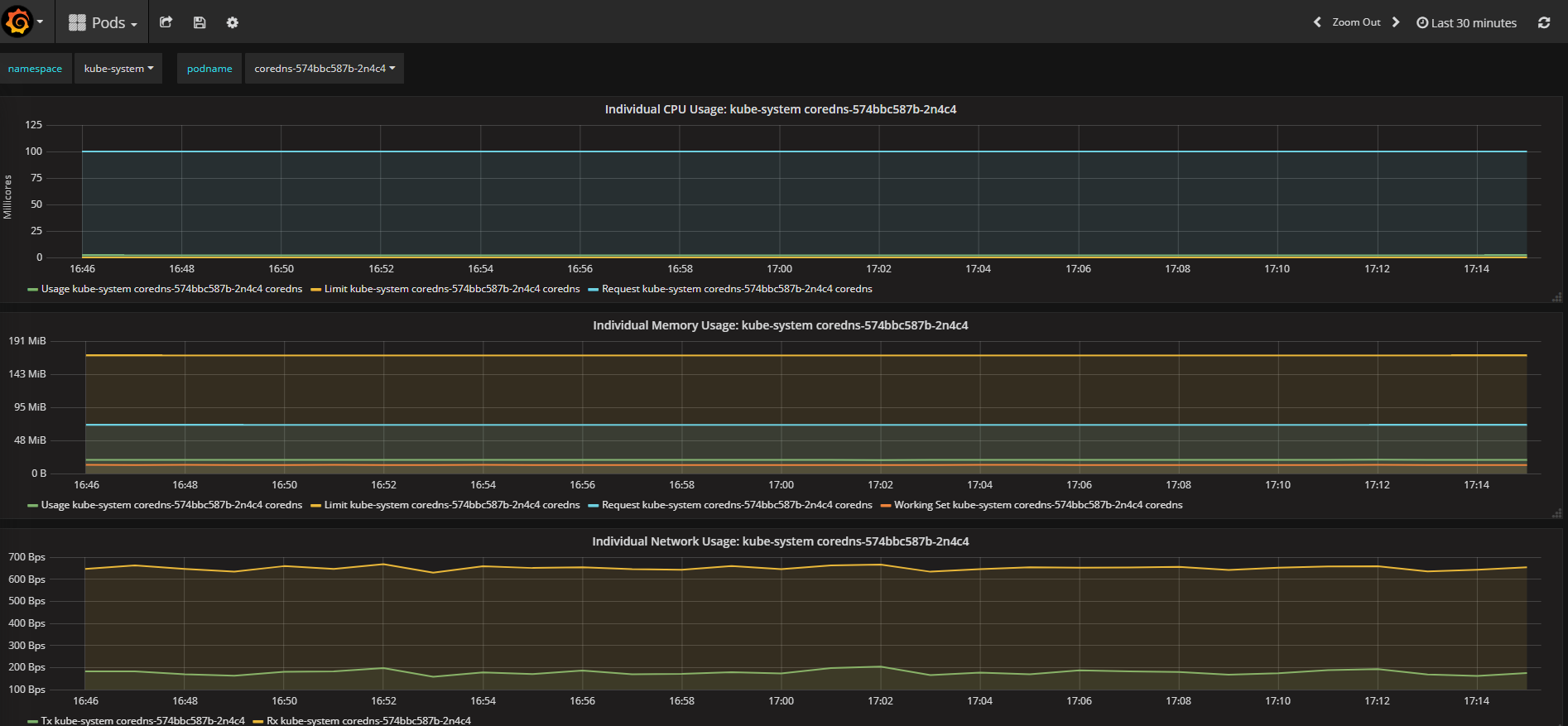

Pods
