2023 Data Collection and Fusion Technology Practice: Assignment 4


Gitee repository link: https://gitee.com/PicaPicasso/crawl_project.git

作业①

- 要求:

- 熟练掌握 Selenium 查找HTML元素、爬取Ajax网页数据、等待HTML元素等内容。

- 使用Selenium框架+ MySQL数据库存储技术路线爬取“沪深A股”、“上证A股”、“深证A股”3个板块的股票数据信息。

- 候选网站:东方财富网:http://quote.eastmoney.com/center/gridlist.html#hs_a_board

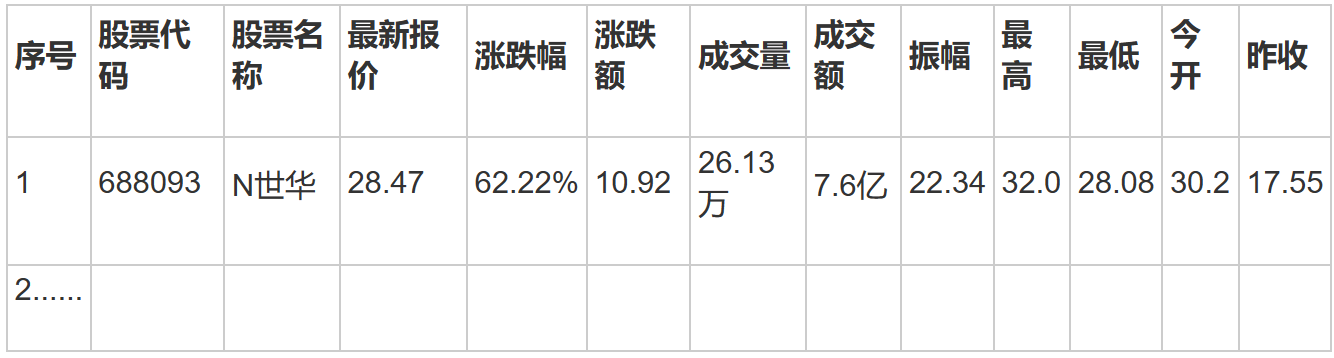

- 输出信息:MYSQL数据库存储和输出格式如下,表头应是英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计表头:

(1) Implementation

1. Selenium initialization

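```python
from selenium import webdriver
from selenium.webdriver import ChromeOptions
from selenium.webdriver.chrome.service import Service

# Reduce the risk of Selenium being detected: drop the "enable-automation" switch
option = ChromeOptions()
option.add_experimental_option('excludeSwitches', ['enable-automation'])

# Optional headless mode (no visible browser window):
# option.add_argument('--headless')
# option.add_argument('--disable-gpu')

# Selenium 4.10+ no longer accepts executable_path; pass the driver path through Service
driver = webdriver.Chrome(service=Service('chromedriver.exe'), options=option)
driver.maximize_window()
```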

2. Define the function that scrapes one page of data

```python
def spiderOnePage():
    time.sleep(3)  # give the Ajax-loaded table time to render
    # every data row of the quote table carries a class attribute
    trs = driver.find_elements(By.XPATH, '//table[@id="table_wrapper-table"]//tr[@class]')
    for tr in trs:
        tds = tr.find_elements(By.XPATH, './/td')
        num = tds[0].text
        stock_id = tds[1].text  # renamed from id to avoid shadowing the built-in
        name = tds[2].text
        Latest_quotation = tds[6].text
        Chg = tds[7].text
        up_down_amount = tds[8].text
        turnover = tds[9].text
        transaction_volume = tds[10].text
        amplitude = tds[11].text
        highest = tds[12].text
        lowest = tds[13].text
        today = tds[14].text
        yesterday = tds[15].text
        # Parameterized query: pymysql escapes the values itself, so quotes in the data cannot break the SQL
        cursor.execute('INSERT INTO stock VALUES (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)',
                       (num, stock_id, name, Latest_quotation, Chg, up_down_amount, turnover,
                        transaction_volume, amplitude, highest, lowest, today, yesterday))
    db.commit()
```
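The index-based extraction in `spiderOnePage()` can be checked without a browser. The sketch below is a hypothetical helper, `row_from_cells` (not part of the original code), that takes the cell texts of one table row and picks out the 13 values in the column order of the `stock` table, using the same `<td>` indices as the crawler. It assumes every data row has at least 16 cells and rejects shorter rows instead of raising an `IndexError` midway.

```python
# Column order of the stock table, paired with the <td> index the crawler reads it from
COLUMNS = [
    ('num', 0), ('id', 1), ('name', 2),
    ('Latest_quotation', 6), ('Chg', 7), ('up_down_amount', 8),
    ('turnover', 9), ('transaction_volume', 10), ('amplitude', 11),
    ('highest', 12), ('lowest', 13), ('today', 14), ('yesterday', 15),
]


def row_from_cells(cells):
    """Return the cell texts in stock-table column order.

    Hypothetical helper: `cells` is a list of strings, e.g. [td.text for td in tds].
    Raises ValueError if the row has too few cells (e.g. a placeholder row).
    """
    needed = max(index for _, index in COLUMNS) + 1
    if len(cells) < needed:
        raise ValueError(f'expected at least {needed} cells, got {len(cells)}')
    return tuple(cells[index] for _, index in COLUMNS)


if __name__ == '__main__':
    sample = [f'c{i}' for i in range(19)]  # dummy texts standing in for real cell contents
    print(row_from_cells(sample))
```

Because the helper returns a tuple, its result can be passed directly as the second argument of a parameterized `cursor.execute(...)` call.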

3. Navigate to the target pages with Selenium

```python
# Open East Money
driver.get('https://www.eastmoney.com/')

# Open the market-quotes center (行情中心): wait for its link, then load its href
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.XPATH, '/html/body/div[3]/div[8]/div/div[2]/div[1]/div[1]/a'))
).get_attribute('href'))

# Shanghai/Shenzhen/Beijing A-shares (沪深京A股)
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.ID, 'menu_hs_a_board'))).get_attribute('href'))
# Crawl two pages; spider() is defined in the full code below
spider(2)
driver.back()

# Shanghai A-shares (上证A股)
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.ID, 'menu_sh_a_board'))).get_attribute('href'))
spider(2)
driver.back()

# Shenzhen A-shares (深证A股)
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.ID, 'menu_sz_a_board'))).get_attribute('href'))
spider(2)
```
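Every navigation step relies on `WebDriverWait(driver, 10, 0.48).until(...)`: the condition is called every 0.48 s until it returns a truthy value, which `until()` then returns, or until 10 s have passed, at which point Selenium raises `TimeoutException`. By default it also ignores `NoSuchElementException` raised by the condition while polling. The pure-Python sketch below imitates that polling loop so the behaviour can be observed without a browser; `wait_until`, `WaitTimeout` and the use of `LookupError` as the ignored exception are illustrative stand-ins, not Selenium's API.

```python
import time


class WaitTimeout(Exception):
    """Raised when the condition never became truthy (stands in for TimeoutException)."""


def wait_until(condition, timeout=10.0, poll_frequency=0.48, ignored=(LookupError,)):
    """Call condition() every poll_frequency seconds until it returns a truthy value.

    Returns that value. Exceptions listed in `ignored` count as "not yet",
    similar to how WebDriverWait ignores NoSuchElementException by default.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            value = condition()
            if value:
                return value
        except ignored:
            pass
        if time.monotonic() > deadline:
            raise WaitTimeout(f'condition not met within {timeout} s')
        time.sleep(poll_frequency)


if __name__ == '__main__':
    start = time.monotonic()
    # The condition becomes truthy roughly 0.3 s after start
    print(wait_until(lambda: time.monotonic() - start > 0.3 and 'ready', timeout=2, poll_frequency=0.05))
```

Compared with the fixed `time.sleep(3)` calls, an explicit wait returns as soon as the element is present and only waits the full timeout when something is genuinely wrong.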

4. Database operations

Opening the connection

```python
# Connect to the crawl database and (re)create the stock table
try:
    db = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='123', database='crawl',
                         charset='utf8')
    cursor = db.cursor()
    cursor.execute('DROP TABLE IF EXISTS stock')
    sql = '''CREATE TABLE stock(num varchar(32), id varchar(12), name varchar(32), Latest_quotation varchar(32),
             Chg varchar(12), up_down_amount varchar(12), turnover varchar(16), transaction_volume varchar(16),
             amplitude varchar(16), highest varchar(32), lowest varchar(32), today varchar(32), yesterday varchar(32))'''
    cursor.execute(sql)
except Exception as e:
    print(e)
```
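The original INSERT statements were built with Python's `%` string formatting, which breaks as soon as a scraped value contains a quote and is open to SQL injection; passing the values as a separate argument lets the database driver escape them. The sketch below demonstrates the idea with Python's built-in `sqlite3` module standing in for MySQL, so it runs without a server. Two assumptions to note: `sqlite3` uses `?` placeholders where pymysql uses `%s`, and the three-column table is a hypothetical cut-down version of `stock`.

```python
import sqlite3

# In-memory database as a stand-in for the MySQL 'crawl' database
conn = sqlite3.connect(':memory:')
cur = conn.cursor()
cur.execute('CREATE TABLE stock(num TEXT, id TEXT, name TEXT)')

# A value with both kinds of quotes would break a '"%s"' % value style query
row = ('1', '600000', 'He said "hi" and it\'s fine')

# Parameterized insert: the driver binds the values, so no manual escaping is needed
cur.execute('INSERT INTO stock VALUES (?, ?, ?)', row)
conn.commit()

stored = cur.execute('SELECT num, id, name FROM stock').fetchone()
print(stored)
conn.close()
```

The stored row comes back exactly as inserted, quotes included, which is why manual escaping such as `replace('"', r'\"')` becomes unnecessary once queries are parameterized.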

Closing the connection

```python
try:
    cursor.close()
    db.close()
except Exception:
    pass  # nothing to close if the connection was never opened
```

5. Close the Selenium browser

```python
time.sleep(3)
driver.quit()
```

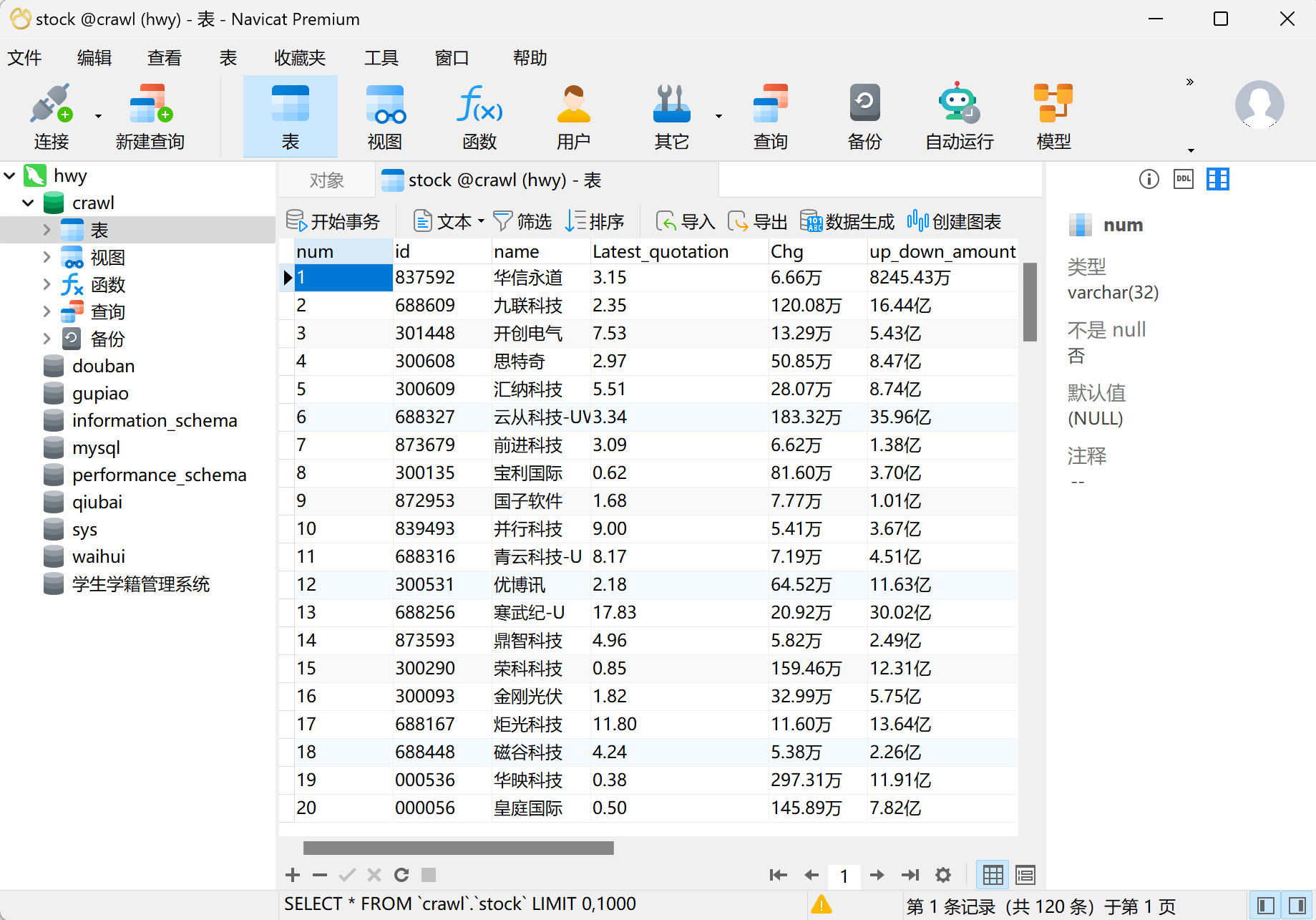

(The stored data, viewed in Navicat)

Full code:

```python
import time

import pymysql
from selenium import webdriver
from selenium.webdriver import ChromeOptions
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait

# Reduce the risk of Selenium being detected: drop the "enable-automation" switch
option = ChromeOptions()
option.add_experimental_option('excludeSwitches', ['enable-automation'])
# Optional headless mode (no visible browser window):
# option.add_argument('--headless')
# option.add_argument('--disable-gpu')

# Selenium 4.10+ no longer accepts executable_path; pass the driver path through Service
driver = webdriver.Chrome(service=Service('chromedriver.exe'), options=option)
driver.maximize_window()

# Connect to the crawl database and (re)create the stock table
try:
    db = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='123', database='crawl',
                         charset='utf8')
    cursor = db.cursor()
    cursor.execute('DROP TABLE IF EXISTS stock')
    sql = '''CREATE TABLE stock(num varchar(32), id varchar(12), name varchar(32), Latest_quotation varchar(32),
             Chg varchar(12), up_down_amount varchar(12), turnover varchar(16), transaction_volume varchar(16),
             amplitude varchar(16), highest varchar(32), lowest varchar(32), today varchar(32), yesterday varchar(32))'''
    cursor.execute(sql)
except Exception as e:
    print(e)


# Crawl page_num pages of the current board: scrape a page, then click "next page"
def spider(page_num):
    cnt = 0
    while cnt < page_num:
        spiderOnePage()
        driver.find_element(By.XPATH, '//a[@class="next paginate_button"]').click()
        cnt += 1
        time.sleep(2)


# Scrape one page of the quote table into the stock table
def spiderOnePage():
    time.sleep(3)  # give the Ajax-loaded table time to render
    trs = driver.find_elements(By.XPATH, '//table[@id="table_wrapper-table"]//tr[@class]')
    for tr in trs:
        tds = tr.find_elements(By.XPATH, './/td')
        num = tds[0].text
        stock_id = tds[1].text
        name = tds[2].text
        Latest_quotation = tds[6].text
        Chg = tds[7].text
        up_down_amount = tds[8].text
        turnover = tds[9].text
        transaction_volume = tds[10].text
        amplitude = tds[11].text
        highest = tds[12].text
        lowest = tds[13].text
        today = tds[14].text
        yesterday = tds[15].text
        # Parameterized query: pymysql escapes the values itself
        cursor.execute('INSERT INTO stock VALUES (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)',
                       (num, stock_id, name, Latest_quotation, Chg, up_down_amount, turnover,
                        transaction_volume, amplitude, highest, lowest, today, yesterday))
    db.commit()


# Open East Money, then the market-quotes center (行情中心)
driver.get('https://www.eastmoney.com/')
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.XPATH, '/html/body/div[3]/div[8]/div/div[2]/div[1]/div[1]/a'))
).get_attribute('href'))

# Shanghai/Shenzhen/Beijing A-shares (沪深京A股): crawl two pages
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.ID, 'menu_hs_a_board'))).get_attribute('href'))
spider(2)
driver.back()

# Shanghai A-shares (上证A股)
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.ID, 'menu_sh_a_board'))).get_attribute('href'))
spider(2)
driver.back()

# Shenzhen A-shares (深证A股)
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.ID, 'menu_sz_a_board'))).get_attribute('href'))
spider(2)

# Close the database connection and the browser
try:
    cursor.close()
    db.close()
except Exception:
    pass
time.sleep(3)
driver.quit()
```

(2) Reflections

This task was fairly routine; it mainly served as practice with basic Selenium operations.

作业②

- 要求:

- 熟练掌握 Selenium 查找HTML元素、实现用户模拟登录、爬取Ajax网页数据、等待HTML元素等内容。

- 使用Selenium框架+MySQL爬取中国mooc网课程资源信息(课程号、课程名称、学校名称、主讲教师、团队成员、参加人数、课程进度、课程简介)

- 候选网站:中国mooc网:https://www.icourse163.org

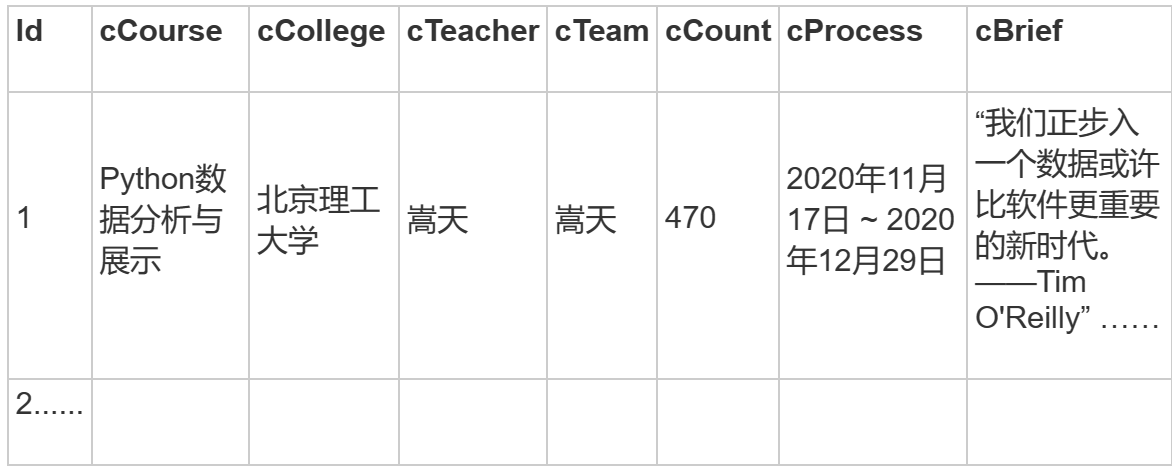

- 输出信息:MYSQL数据库存储和输出格式

(1) Implementation

1. Initialize Selenium

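```python
from selenium import webdriver
from selenium.webdriver import ChromeOptions
from selenium.webdriver.chrome.service import Service

# Reduce the risk of Selenium being detected: drop the "enable-automation" switch
option = ChromeOptions()
option.add_experimental_option('excludeSwitches', ['enable-automation'])

# Selenium 4.10+ no longer accepts executable_path; pass the driver path through Service
driver = webdriver.Chrome(service=Service('chromedriver.exe'), options=option)
driver.maximize_window()  # maximize the browser window
```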

2. Define the function that scrapes the page content

```python
# Scrape one page of course listings
def spiderOnePage():
    time.sleep(5)  # wait for the page to finish loading
    courses = driver.find_elements(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[1]/div')
    current_window_handle = driver.current_window_handle  # remember the listing tab
    for course in courses:
        cCourse = course.find_element(By.XPATH, './/h3').text                        # course name
        cCollege = course.find_element(By.XPATH, './/p[@class="_2lZi3"]').text       # university
        cTeacher = course.find_element(By.XPATH, './/div[@class="_1Zkj9"]').text     # lead instructor
        cCount = course.find_element(By.XPATH, './/div[@class="jvxcQ"]/span').text   # number of participants
        cProcess = course.find_element(By.XPATH, './/div[@class="jvxcQ"]/div').text  # course progress

        # Clicking a course card opens its detail page in a new tab
        course.click()
        driver.switch_to.window(driver.window_handles[1])
        time.sleep(5)

        # Course description, with a fallback for detail pages laid out differently
        cBrief = driver.find_element(By.XPATH, '//*[@id="j-rectxt2"]').text
        if len(cBrief) == 0:
            cBriefs = driver.find_elements(By.XPATH, '//*[@id="content-section"]/div[4]/div//*')
            cBrief = ''.join(c.text for c in cBriefs)
        cBrief = cBrief.strip()

        # Teaching team: collect names, clicking the slider's "next" arrow until it disappears
        nameList = []
        while True:
            for Teacher in driver.find_elements(By.XPATH, '//div[@class="um-list-slider_con_item"]'):
                nameList.append(Teacher.find_element(By.XPATH, './/h3[@class="f-fc3"]').text.strip())
            nextButton = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_next f-pa"]')
            if not nextButton:
                break
            nextButton[0].click()
            time.sleep(3)
        cTeam = ','.join(nameList)

        driver.close()  # close the detail tab
        driver.switch_to.window(current_window_handle)  # return to the listing tab
        try:
            # Parameterized query: pymysql escapes quotes itself, so no manual escaping is needed
            cursor.execute('INSERT INTO mooc VALUES (%s,%s,%s,%s,%s,%s,%s)',
                           (cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief))
            db.commit()
        except Exception as e:
            print(e)
```

3. Navigate to the target pages with Selenium (compared with the previous task, the main addition here is simulated login)

```python
# Open China University MOOC
driver.get('https://www.icourse163.org/')

# Click the login button
WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.XPATH, '//a[@class="f-f0 navLoginBtn"]'))).click()
# The login form is inside an iframe with a dynamic id, so locate it by its frameborder attribute
iframe = WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.XPATH, '//*[@frameborder="0"]')))
driver.switch_to.frame(iframe)

# Enter the account and password, then click the login button
driver.find_element(By.XPATH, '//*[@id="phoneipt"]').send_keys("replace with your own phone number")
time.sleep(2)
driver.find_element(By.XPATH, '//*[@class="j-inputtext dlemail"]').send_keys("replace with your own password")
time.sleep(2)
driver.find_element(By.ID, 'submitBtn').click()
driver.switch_to.default_content()  # leave the iframe before searching the main page again
WebDriverWait(driver, 10).until(lambda d: d.execute_script('return document.readyState') == 'complete')

# Follow the link to the course listing page
driver.get(WebDriverWait(driver, 10, 0.48).until(
    EC.presence_of_element_located((By.XPATH, '//*[@id="app"]/div/div/div[1]/div[1]/div[1]/span[1]/a'))
).get_attribute('href'))

spiderOnePage()  # scrape the first page
count = 1

# Turn pages while the "next page" link has its enabled class, up to two pages in total
next_page = driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div/a[10]')
while next_page.get_attribute('class') == '_3YiUU ':
    if count == 2:
        break
    count += 1
    next_page.click()
    spiderOnePage()
    next_page = driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div/a[10]')
```
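The listing-page loop stops either when the "next page" link loses its enabled class `'_3YiUU '` or when the page limit is reached. The sketch below isolates that control flow with fake page objects so it can run without a browser. `FakeSite`, `FakeLink`, `paginate` and the `'disabled'` class value are hypothetical stand-ins; only the class string `'_3YiUU '` and the loop shape come from the crawler.

```python
class FakeSite:
    """Pretends to be the course listing: pages 1..last_page, starting on page 1."""

    def __init__(self, last_page):
        self.last_page, self.current = last_page, 1

    def next_link(self):
        return FakeLink(self, self.current)


class FakeLink:
    """Stands in for the WebElement of the 'next page' link."""

    def __init__(self, site, page):
        self.site, self.page = site, page

    def get_attribute(self, name):
        # The link keeps its enabled class on every page except the last one
        if name == 'class':
            return '_3YiUU ' if self.page < self.site.last_page else 'disabled'
        return None

    def click(self):
        self.site.current += 1


def paginate(site, scrape, max_pages):
    """Scrape the first page, then follow 'next' while it is enabled and under max_pages."""
    scrape(site.current)
    count = 1
    next_page = site.next_link()
    while next_page.get_attribute('class') == '_3YiUU ':
        if count == max_pages:
            break
        count += 1
        next_page.click()
        scrape(site.current)
        next_page = site.next_link()  # re-locate the link after each page change, as the crawler does
    return count
```

For example, `paginate(FakeSite(last_page=5), visited.append, max_pages=2)` scrapes pages 1 and 2 and then stops at the limit, while a site with fewer pages than the limit stops when the link's class changes.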

4. Database operations

Opening the connection

```python
# Connect to MySQL and (re)create the mooc table
try:
    db = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='123', database='crawl', charset='utf8')
    cursor = db.cursor()
    cursor.execute('DROP TABLE IF EXISTS mooc')
    sql = '''CREATE TABLE mooc(cCourse varchar(64), cCollege varchar(64), cTeacher varchar(16), cTeam varchar(256),
             cCount varchar(16), cProcess varchar(32), cBrief varchar(2048))'''
    cursor.execute(sql)
except Exception as e:
    print(e)
```

Closing the connection

```python
try:
    cursor.close()
    db.close()
except Exception:
    pass  # nothing to close if the connection was never opened
```

5. Close the Selenium browser

```python
time.sleep(3)
driver.quit()
```

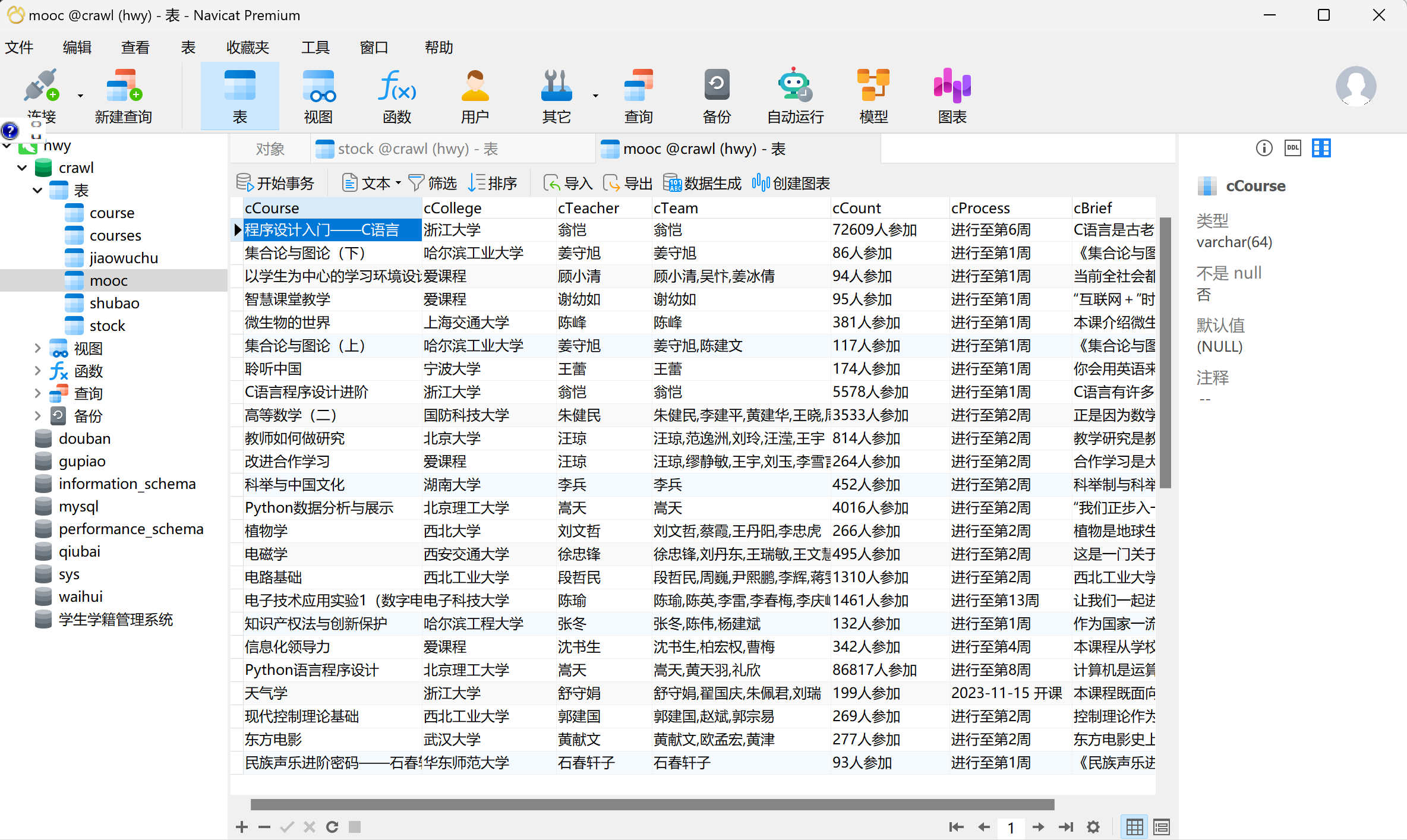

(The stored data, viewed in Navicat)


<details>

<summary>Click to view the full code</summary>

```python
import time

import pymysql
from selenium import webdriver
from selenium.webdriver import ChromeOptions
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait


def spider_mooc_courses():
    # Reduce the risk of Selenium being detected: drop the "enable-automation" switch
    option = ChromeOptions()
    option.add_experimental_option('excludeSwitches', ['enable-automation'])
    # Selenium 4.10+ no longer accepts executable_path; pass the driver path through Service
    driver = webdriver.Chrome(service=Service('chromedriver.exe'), options=option)
    driver.maximize_window()  # maximize the browser window

    # Connect to MySQL and (re)create the mooc table
    try:
        db = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='123', database='crawl', charset='utf8')
        cursor = db.cursor()
        cursor.execute('DROP TABLE IF EXISTS mooc')
        sql = '''CREATE TABLE mooc(cCourse varchar(64), cCollege varchar(64), cTeacher varchar(16), cTeam varchar(256),
                 cCount varchar(16), cProcess varchar(32), cBrief varchar(2048))'''
        cursor.execute(sql)
    except Exception as e:
        print(e)

    # Scrape one page of course listings
    def spiderOnePage():
        time.sleep(5)  # wait for the page to finish loading
        courses = driver.find_elements(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[1]/div')
        current_window_handle = driver.current_window_handle
        for course in courses:
            cCourse = course.find_element(By.XPATH, './/h3').text                        # course name
            cCollege = course.find_element(By.XPATH, './/p[@class="_2lZi3"]').text       # university
            cTeacher = course.find_element(By.XPATH, './/div[@class="_1Zkj9"]').text     # lead instructor
            cCount = course.find_element(By.XPATH, './/div[@class="jvxcQ"]/span').text   # number of participants
            cProcess = course.find_element(By.XPATH, './/div[@class="jvxcQ"]/div').text  # course progress

            # Clicking a course card opens its detail page in a new tab
            course.click()
            driver.switch_to.window(driver.window_handles[1])
            time.sleep(5)

            # Course description, with a fallback for detail pages laid out differently
            cBrief = driver.find_element(By.XPATH, '//*[@id="j-rectxt2"]').text
            if len(cBrief) == 0:
                cBriefs = driver.find_elements(By.XPATH, '//*[@id="content-section"]/div[4]/div//*')
                cBrief = ''.join(c.text for c in cBriefs)
            cBrief = cBrief.strip()

            # Teaching team: collect names, clicking the slider's "next" arrow until it disappears
            nameList = []
            while True:
                for Teacher in driver.find_elements(By.XPATH, '//div[@class="um-list-slider_con_item"]'):
                    nameList.append(Teacher.find_element(By.XPATH, './/h3[@class="f-fc3"]').text.strip())
                nextButton = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_next f-pa"]')
                if not nextButton:
                    break
                nextButton[0].click()
                time.sleep(3)
            cTeam = ','.join(nameList)

            driver.close()  # close the detail tab
            driver.switch_to.window(current_window_handle)  # return to the listing tab
            try:
                cursor.execute('INSERT INTO mooc VALUES (%s,%s,%s,%s,%s,%s,%s)',
                               (cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief))
                db.commit()
            except Exception as e:
                print(e)

    # Open China University MOOC and click the login button
    driver.get('https://www.icourse163.org/')
    WebDriverWait(driver, 10, 0.48).until(
        EC.presence_of_element_located((By.XPATH, '//a[@class="f-f0 navLoginBtn"]'))).click()
    # The login form is inside an iframe with a dynamic id, so locate it by its frameborder attribute
    iframe = WebDriverWait(driver, 10, 0.48).until(
        EC.presence_of_element_located((By.XPATH, '//*[@frameborder="0"]')))
    driver.switch_to.frame(iframe)

    # Enter the account and password, then click the login button
    driver.find_element(By.XPATH, '//*[@id="phoneipt"]').send_keys("replace with your own phone number")
    time.sleep(2)
    driver.find_element(By.XPATH, '//*[@class="j-inputtext dlemail"]').send_keys("replace with your own password")
    time.sleep(2)
    driver.find_element(By.ID, 'submitBtn').click()
    driver.switch_to.default_content()  # leave the iframe before searching the main page again
    WebDriverWait(driver, 10).until(lambda d: d.execute_script('return document.readyState') == 'complete')

    # Follow the link to the course listing page
    driver.get(WebDriverWait(driver, 10, 0.48).until(
        EC.presence_of_element_located((By.XPATH, '//*[@id="app"]/div/div/div[1]/div[1]/div[1]/span[1]/a'))
    ).get_attribute('href'))

    spiderOnePage()  # scrape the first page
    count = 1
    # Turn pages while the "next page" link has its enabled class, up to two pages in total
    next_page = driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div/a[10]')
    while next_page.get_attribute('class') == '_3YiUU ':
        if count == 2:
            break
        count += 1
        next_page.click()
        spiderOnePage()
        next_page = driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div/a[10]')

    # Close the database connection and the browser
    try:
        cursor.close()
        db.close()
    except Exception:
        pass
    time.sleep(3)
    driver.quit()


spider_mooc_courses()
```

</details>

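(2) Reflections

- When simulating login with Selenium, clicking the login button I had located pops up an iframe. The driver must first switch its "focus" to that frame: the iframe is a separate document from the main page, so to look up elements inside it you have to call switch_to.frame() first, and only then can the input boxes be found.

- Switching into the iframe has a second pitfall. Normally an ID attribute is unique, so it locates exactly one element, but this site generates the iframe's ID dynamically and it changes on every load. I therefore located the iframe by its frameborder attribute instead.

- When crawling the teaching team, a large team is not listed all at once, so the slider inside the page has to be paged through. Unlike the pagination on the 国家精品课程 (national quality courses) listing page, where the class attribute of the "next page" link changes, here the "next" element simply disappears when there is no next page. Looking it up with find_elements handles this: when nothing matches it returns an empty list instead of raising an exception the way find_element does.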
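The teaching-team loop described in the last point can be sketched without a browser. Below, a hypothetical `FakeSlider` shows a few names per slide and, like `driver.find_elements`, returns an empty list from `find_next_buttons()` on the last slide instead of raising; `collect_names` mirrors the "collect, look for next, click or stop" loop. All names here are illustrative, and the assumption that each slide shows a distinct group of four names is made up for the example.

```python
class FakeSlider:
    """Pretends to be the teacher slider: a few names per slide, 'next' hidden on the last slide."""

    def __init__(self, names, per_slide=4):
        self.slides = [names[i:i + per_slide] for i in range(0, len(names), per_slide)] or [[]]
        self.index = 0

    def visible_names(self):
        return self.slides[self.index]

    def find_next_buttons(self):
        # Like driver.find_elements: an empty list when the element is absent, never an exception
        return [self] if self.index < len(self.slides) - 1 else []

    def click(self):
        self.index += 1


def collect_names(slider):
    """Gather names slide by slide until the 'next' arrow is gone; return them comma-joined."""
    names = []
    while True:
        names.extend(slider.visible_names())
        next_buttons = slider.find_next_buttons()
        if not next_buttons:  # arrow gone: this was the last slide
            break
        next_buttons[0].click()
    return ','.join(names)
```

Checking the list for emptiness keeps the stop condition in ordinary control flow; the find_element alternative would need a `try`/`except NoSuchElementException` around every lookup.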

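Assignment ③

- Requirements:

- Get familiar with big-data services and with using Xshell.

- Complete the tasks in the document 华为云_大数据实时分析处理实验手册-Flume日志采集实验(部分)v2.docx (Huawei Cloud big-data real-time analysis lab manual, Flume log collection experiment, partial, v2), i.e. the five tasks below; see the document for the detailed steps.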

(1)作业内容

-

环境搭建:

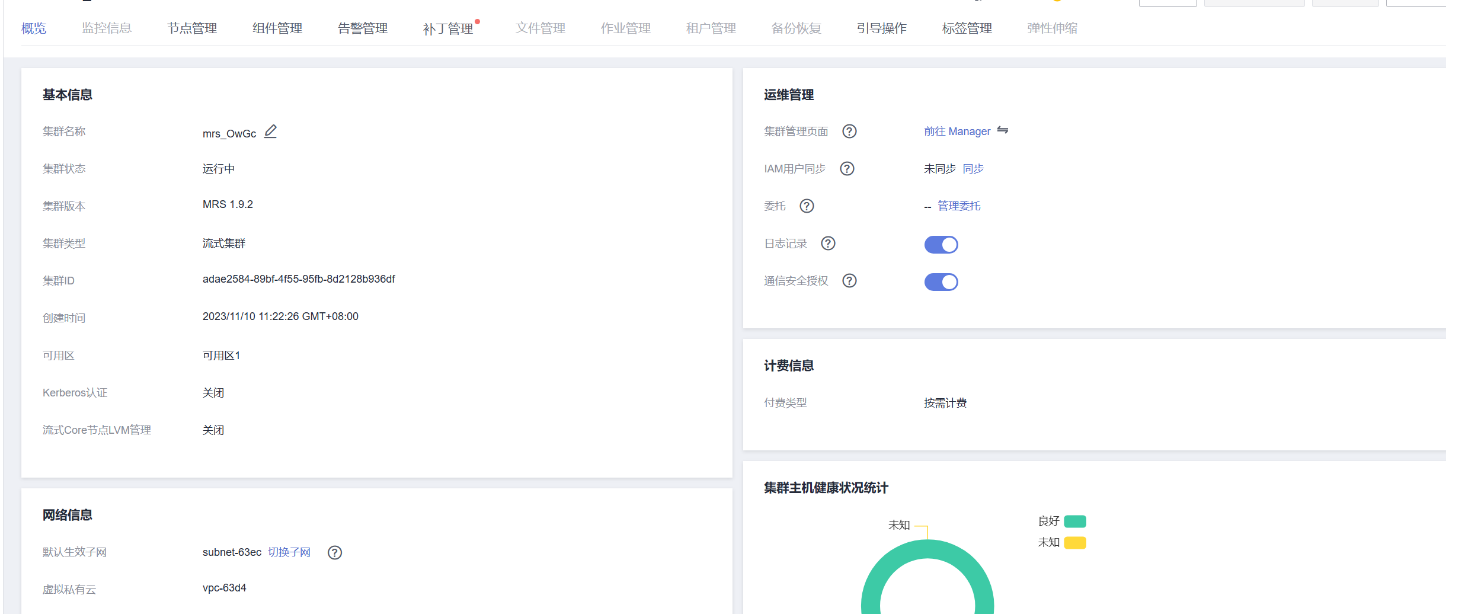

- 任务一:开通MapReduce服务

- 任务一:开通MapReduce服务

-

实时分析开发实战:

-

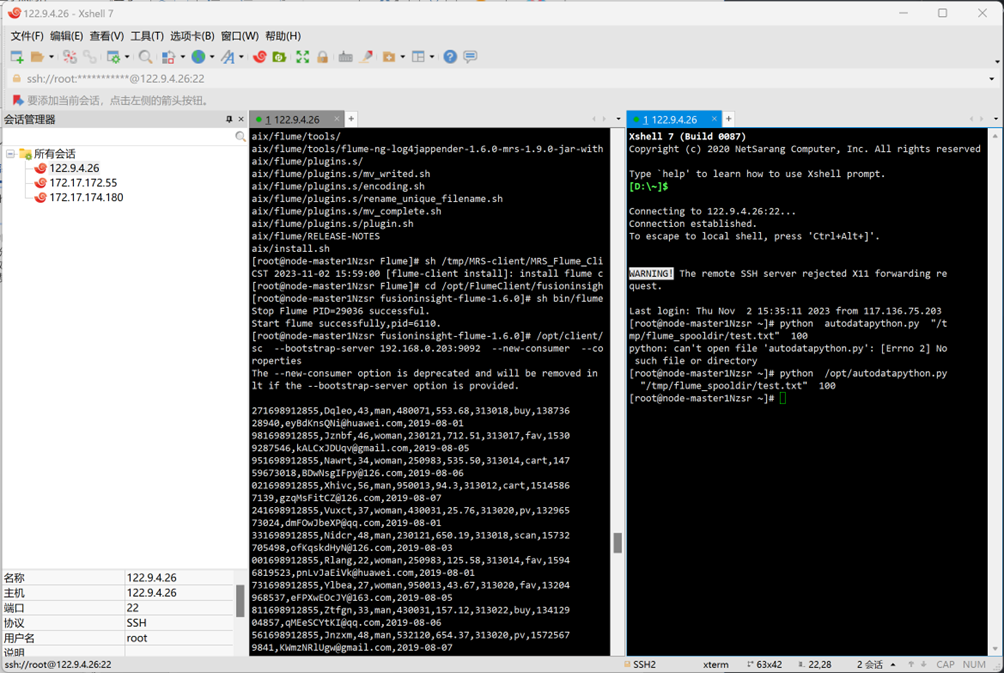

任务一:Python脚本生成测试数据

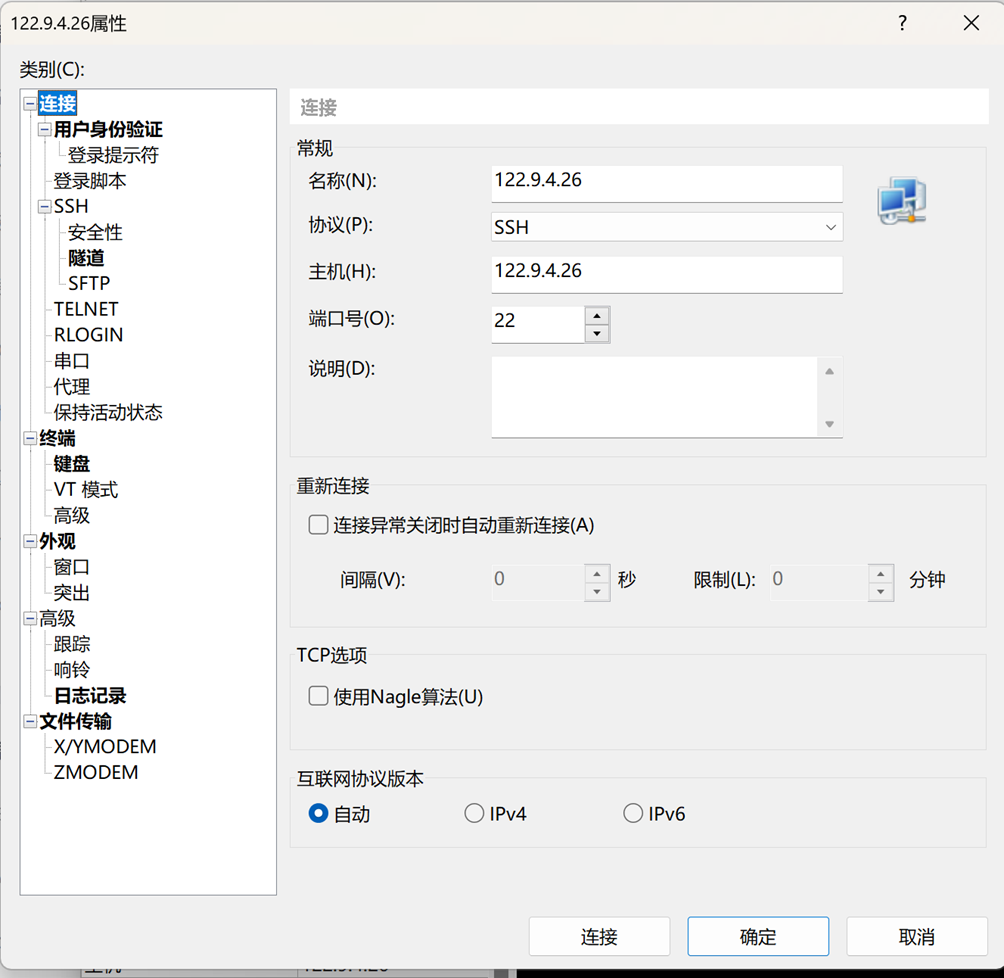

使用Xshell 7连接服务器:

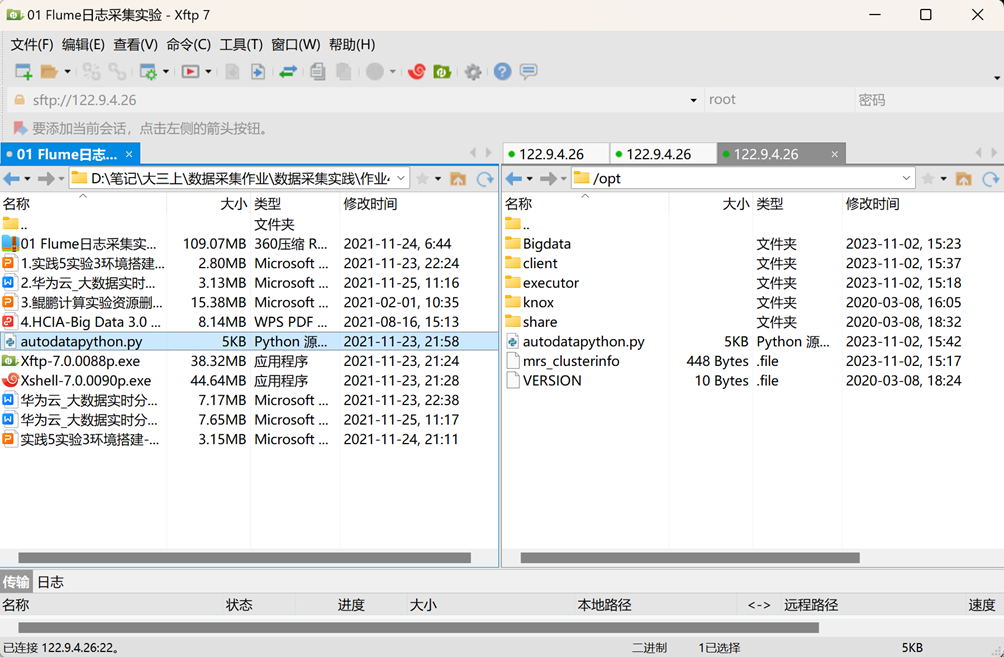

用xftp7将本地的autodatapython.py文件上传至服务器/opt目录下即可

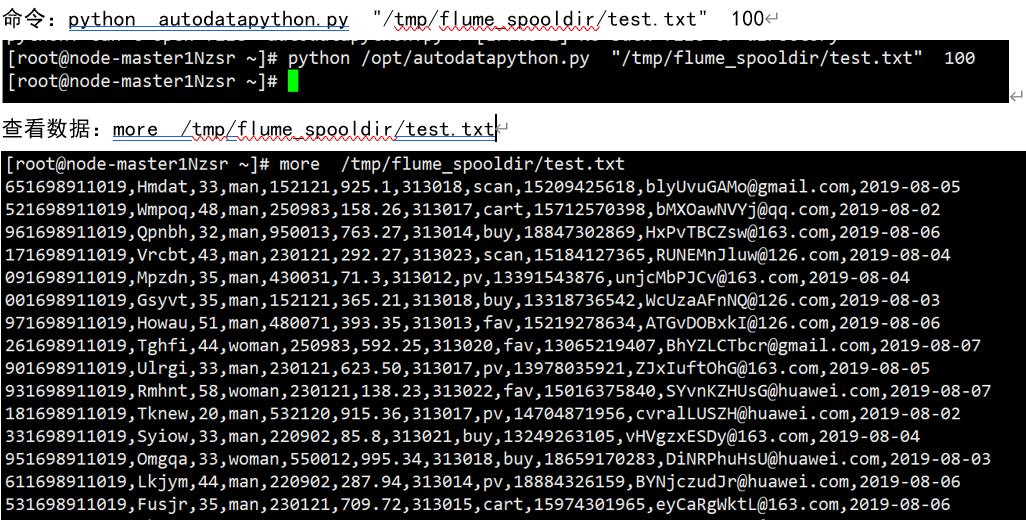

测试执行

-

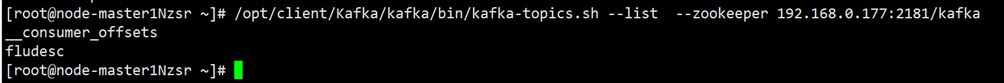

任务二:配置Kafka

-

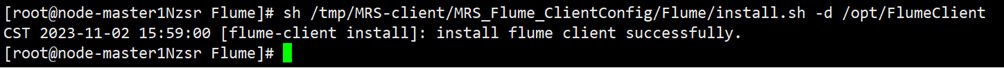

任务三: 安装Flume客户端

-

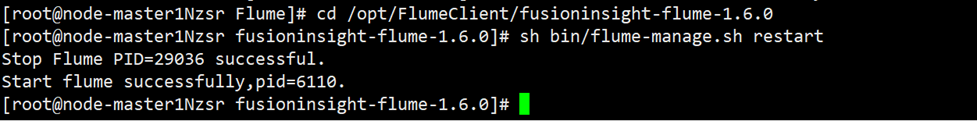

任务四:配置Flume采集数据

-

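(2) Reflections

The underlying principles are still a bit hazy to me, but I got a first look at connecting to a server with Xshell and some hands-on practice with a big-data application.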