CS229: Kernels

review

-

A decision boundary with the greatest possible geometric margin.

-

functional margin/geometric margin

maximizing the margin as an optimization problem

-

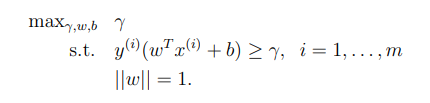

maximize the geometric margin \gamma in the worst case
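To make the two margins concrete, here is a minimal sketch, assuming the usual definitions: the functional margin is y^{(i)}(w^{T} x^{(i)}+b), and the geometric margin is the functional margin divided by \|w\|, taken over the worst (smallest) example. The toy data and the helper names `functional_margins` and `geometric_margin` are made up for illustration.

```python
import numpy as np

def functional_margins(w, b, X, y):
    """Functional margin y_i * (w^T x_i + b) for every example."""
    return y * (X @ w + b)

def geometric_margin(w, b, X, y):
    """Smallest geometric margin over the training set (the worst case)."""
    return np.min(functional_margins(w, b, X, y)) / np.linalg.norm(w)

# Toy data (made up for illustration): two points per class.
X = np.array([[2.0, 2.0], [3.0, 3.0], [0.0, 0.0], [-1.0, 0.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])
w = np.array([1.0, 1.0])
b = -2.0

gamma = geometric_margin(w, b, X, y)
# Rescaling (w, b) changes the functional margin but not the geometric one.
gamma_scaled = geometric_margin(10 * w, 10 * b, X, y)
print(gamma, gamma_scaled)
```

Both printed values agree, which is why the optimization can fix the scale of w and b without changing the resulting boundary.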

-

-

choosing proper w and b defines a hyperplane

-

in this case, the problem has the same result as:

-

-

Suppose w can be represented as a linear combination of the training examples

-

each coefficient actually contains the label y^{(i)}, i.e. w=\sum_{i} \alpha_{i} y^{(i)} x^{(i)}

-

extra constraint

-

representer theorem

-

actually, this can be derived by the Lagrangian for our optimization problem

-

-

Intuition:

-

gradient descent: if \theta starts at zero, then after every iteration \theta is still a linear combination of the training examples.

-

w fixes the orientation of the decision boundary, while b determines its position

-

-

using the linear representation turns the problem into an optimization that depends only on inner products of feature vectors.
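A small numerical check of this idea, assuming w has the form \sum_{i} \alpha_{i} y^{(i)} x^{(i)}: the score w^{T} x+b can then be computed from the inner products \langle x^{(i)}, x\rangle alone. The coefficients `alpha` below are random placeholders, not the solution of any dual problem.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))          # 5 training examples, 3 features
y = np.array([1.0, -1.0, 1.0, 1.0, -1.0])
alpha = rng.uniform(size=5)          # hypothetical coefficients, not a solved dual
b = 0.5
x_new = rng.normal(size=3)

# Explicit weight vector: w = sum_i alpha_i * y_i * x_i
w = (alpha * y) @ X
score_primal = w @ x_new + b

# Same score using only the inner products <x_i, x_new>
score_inner = np.sum(alpha * y * (X @ x_new)) + b

print(score_primal, score_inner)
```

The second form never touches w directly, which is what later allows the inner product to be swapped for a kernel.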

-

a simpler form: the dual optimization problem

-

using Lagrangian

-

-

KKT conditions are indeed satisfied

-

-

Apply kernel trick

-

Write algorithm in terms of inner product

-

define a mapping from x to \phi(x), the high-dimensional feature vector.

-

find a way to compute K(x, z)=\phi(x)^{T} \phi(z)

-

replace \langle x, z\rangle with K(x, z) (so you don't need to compute \phi(x), which may be expensive)

-

computing K(x, z) needs only O(n) time, while computing \phi(x) may need O(n^{2})
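The cost gap can be seen in a short sketch. It assumes the standard example kernel K(x, z)=(x^{T} z)^{2}, whose feature map \phi(x) lists all n^{2} products x_{i} x_{j}; the function names `phi` and `K` are chosen here for illustration.

```python
import numpy as np

def phi(x):
    """Explicit feature map: all n^2 products x_i * x_j (O(n^2) to build)."""
    return np.outer(x, x).ravel()

def K(x, z):
    """Kernel K(x, z) = (x^T z)^2, computed in O(n) without building phi."""
    return (x @ z) ** 2

x = np.array([1.0, 2.0, 3.0])
z = np.array([4.0, 5.0, 6.0])

explicit = phi(x) @ phi(z)   # inner product in the 9-dimensional feature space
implicit = K(x, z)           # same number from a 3-dimensional inner product
print(explicit, implicit)
```

Both values are equal, so an algorithm written purely in terms of inner products can use `K` and never materialize `phi`.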

-

-

other tricks

-

adding a constant c, as in K(x, z)=(x^{T} z+c)^{2}, controls the relative weighting between the first-order and the second-order terms

-

changing the exponent 2 to d, as in K(x, z)=(x^{T} z+c)^{d}, includes all monomials up to order d
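As a check on the role of c, the sketch below assumes the kernel (x^{T} z+c)^{2} and writes out one feature map that reproduces it: the second-order terms x_{i} x_{j}, the first-order terms scaled by \sqrt{2c}, and the constant c. The names `phi_c` and `poly_kernel` are illustrative.

```python
import numpy as np

def phi_c(x, c):
    """Feature map for (x^T z + c)^2: second-order, first-order, constant terms."""
    return np.concatenate([np.outer(x, x).ravel(), np.sqrt(2 * c) * x, [c]])

def poly_kernel(x, z, c=1.0, d=2):
    """Polynomial kernel (x^T z + c)^d."""
    return (x @ z + c) ** d

x = np.array([1.0, 2.0, 3.0])
z = np.array([4.0, 5.0, 6.0])
c = 1.0

explicit_c = phi_c(x, c) @ phi_c(z, c)
implicit_c = poly_kernel(x, z, c=c, d=2)
print(explicit_c, implicit_c)
```

A larger c increases the \sqrt{2c} factor on the first-order features, which is the sense in which c reweights them against the second-order ones.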

-

how to generate a valid kernel

-

Intuition: think of the kernel function as a measure of similarity between two vectors

-

K(x, z)=\exp \left(-\frac{\|x-z\|^{2}}{2 \sigma^{2}}\right)

-

Gaussian kernel

-

corresponding to an infinite dimensional feature mapping
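A direct transcription of the Gaussian kernel formula above, as a minimal sketch; the default `sigma=1.0` and the sample points are arbitrary choices for illustration.

```python
import numpy as np

def gaussian_kernel(x, z, sigma=1.0):
    """K(x, z) = exp(-||x - z||^2 / (2 * sigma^2))."""
    diff = x - z
    return np.exp(-(diff @ diff) / (2 * sigma ** 2))

a = np.array([0.0, 0.0])
near = np.array([0.1, 0.0])
far = np.array([10.0, 0.0])

k_same = gaussian_kernel(a, a)      # identical points
k_near = gaussian_kernel(a, near)   # close points
k_far = gaussian_kernel(a, far)     # distant points
print(k_same, k_near, k_far)
```

The value is 1 for identical points and decays toward 0 as the points move apart, which matches the similarity intuition.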

-

-

how to judge whether a kernel is valid

-

Kernel Matrix

-

symmetric

-

positive semi-definite

-

these two conditions are necessary and sufficient (Mercer's theorem)
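The two conditions can be tested numerically on a sample of points. This is only a sketch: the helper names `kernel_matrix` and `looks_valid` are made up here, and passing the check on one finite sample does not prove a kernel is valid, although failing it does show the kernel is invalid.

```python
import numpy as np

def kernel_matrix(kernel, X):
    """Kernel matrix with entries K_ij = kernel(x_i, x_j)."""
    m = X.shape[0]
    return np.array([[kernel(X[i], X[j]) for j in range(m)] for i in range(m)])

def looks_valid(Kmat, tol=1e-8):
    """True if Kmat is symmetric and positive semi-definite up to tol."""
    symmetric = np.allclose(Kmat, Kmat.T)
    eigvals = np.linalg.eigvalsh((Kmat + Kmat.T) / 2)
    return bool(symmetric and eigvals.min() >= -tol)

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 2))

gaussian = lambda x, z: np.exp(-np.sum((x - z) ** 2) / 2.0)
negated_linear = lambda x, z: -(x @ z)   # deliberately not a valid kernel

ok_gaussian = looks_valid(kernel_matrix(gaussian, X))
ok_negated = looks_valid(kernel_matrix(negated_linear, X))
print(ok_gaussian, ok_negated)
```

The negated linear kernel is symmetric but has negative eigenvalues, so it fails the positive semi-definite condition.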

-

-

-

linear kernel: \phi(x) = x

soft margin SVM

-

used when the data is a little noisy (not linearly separable) and you don't want to force every point to be separated correctly

-

-

ensuring that most examples have functional margin at least 1.

-

makes the decision boundary less sensitive to a single data point
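A minimal sketch of the soft margin objective, assuming the common L1-regularized form \frac{1}{2}\|w\|^{2}+C \sum_{i} \xi_{i} with slack \xi_{i}=\max (0,1-y^{(i)}(w^{T} x^{(i)}+b)). The function name `soft_margin_objective`, the value of `C`, and the toy data (including one deliberately mislabeled point) are illustrative assumptions.

```python
import numpy as np

def soft_margin_objective(w, b, X, y, C=1.0):
    """Return (objective, slack) for the L1 soft margin SVM at a given (w, b)."""
    margins = y * (X @ w + b)
    # Slack is zero when the functional margin is at least 1.
    slack = np.maximum(0.0, 1.0 - margins)
    return 0.5 * (w @ w) + C * slack.sum(), slack

# Four well-separated points plus one outlier labeled -1 on the positive side.
X = np.array([[2.0, 2.0], [3.0, 3.0], [0.0, 0.0], [-1.0, 0.0], [1.5, 1.5]])
y = np.array([1.0, 1.0, -1.0, -1.0, -1.0])
w = np.array([1.0, 1.0])
b = -2.0

objective, slack = soft_margin_objective(w, b, X, y, C=1.0)
print(objective, slack)
```

Only the outlier gets nonzero slack, so it is penalized in the objective instead of forcing the boundary to move; a smaller C makes that penalty cheaper.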

Summary

At the beginning, we want to separate data with two labels by finding the best decision boundary. Assuming that the dataset is linearly separable, we define the functional margin and the geometric margin, and derive an algorithm called the optimal margin classifier. To solve the optimization problem, we learn Lagrange duality and the KKT conditions as the necessary mathematical tools. When the original dataset is not linearly separable, we choose a suitable kernel function and apply the kernel trick to map the original features into a high-dimensional space, then find a hyperplane that separates them there, which results in a non-linear decision boundary in the original feature space. Kernels may be linear or Gaussian, and may correspond to an infinite-dimensional feature mapping. Though computing this mapping explicitly can be expensive, we can still compute the kernel function at a cost of O(n).
