数据采集与融合技术实践作业四

102102136 陈耕

作业1:

(1)作业内容

实验要求:

熟练掌握 Selenium 查找HTML元素、爬取Ajax网页数据、等待HTML元素等内容。

使用Selenium框架+ MySQL数据库存储技术路线爬取“沪深A股”、“上证A股”、“深证A股”3个板块的股票数据信息。

候选网站:

东方财富网:http://quote.eastmoney.com/center/gridlist.html#hs_a_board

输出信息:

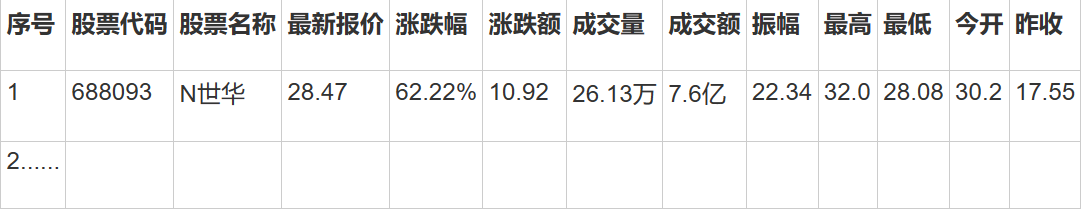

MYSQL数据库存储和输出格式如下,表头应是英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计表头:

码云链接:

https://gitee.com/chen-pai/box/tree/master/实践课第四次作业

代码内容:

'''

MYSQL中需要创建的表的内容:

create database mydb;

use mydb;

create table stocks

(id varchar(128),

bCode varchar(128),

bName varchar(128),

bLatestPrice varchar(128),

bUpDownRange varchar(128),

bUpDownPrice varchar(128),

bTurnover varchar(128),

bTurnoverNum varchar(128),

bAmplitude varchar(128),

bHighest varchar(128),

bLowest varchar(128),

bToday varchar(128),

bYesterday varchar(128));

'''

#以下是具体的代码实现

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

import time

import pymysql

from selenium.webdriver.common.by import By

class getStocks:

headers = {

"User-Agent":"Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US;rv:1.9pre)"

"Gecko/2008072421 Minefield/3.0.2pre"

}

num = 1

def startUp(self,url):

#建立浏览器对象

chrome_options = Options()

chrome_options.add_argument('--headless')

chrome_options.add_argument('--disable-gpu')

self.driver = webdriver.Chrome(options=chrome_options)

try:

#与mysql建立连接

self.con = pymysql.connect(host="localhost", port=3306, user="root", passwd="看不了一点", db="mydb",

charset="utf8")

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from stocks")

except Exception as err:

print(err)

self.driver.get(url)

def closeUp(self):

try:

self.con.commit()

self.con.close()

self.driver.close()

except Exception as err:

print(err)

def insertDB(self,id,bCode,bName,bLatestPrice,bUpDownRange,bUpDownPrice,bTurnover,bTurnoverNum,bAmplitude,bHighest,bLowest,bToday,bYesterday):

try:

print(id,bCode,bName,bLatestPrice,bUpDownRange,bUpDownPrice,bTurnover,bTurnoverNum,bAmplitude,bHighest,bLowest,bToday,bYesterday)

self.cursor.execute("insert into stocks (id,bCode,bName,bLatestPrice,bUpDownRange,bUpDownPrice,bTurnover,"

"bTurnoverNum,bAmplitude,bHighest,bLowest,bToday,bYesterday) values "

"(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",

(id,bCode,bName,bLatestPrice,bUpDownRange,bUpDownPrice,bTurnover,bTurnoverNum,bAmplitude,bHighest,bLowest,bToday,bYesterday))

except Exception as err:

print(err)

def processSpider(self):

try:

time.sleep(1)

#输出当前url

print(self.driver.current_url)

trs =self.driver.find_elements(By.XPATH,"//div[@class='listview full']/table[@id='table_wrapper-table']/tbody/tr")

#print(trs)

for tr in trs:

#xpath获取信息

id = tr.find_element(By.XPATH,"./td[position()=1]").text

bCode = tr.find_element(By.XPATH,"./td[position()=2]/a").text

bName = tr.find_element(By.XPATH,"./td[position()=3]/a").text

bLatestPrice = tr.find_element(By.XPATH,"./td[position()=5]/span").text

bUpDownRange = tr.find_element(By.XPATH,"./td[position()=6]/span").text

bUpDownPrice = tr.find_element(By.XPATH,"./td[position()=7]/span").text

bTurnover = tr.find_element(By.XPATH,"./td[position()=8]").text

bTurnoverNum = tr.find_element(By.XPATH,"./td[position()=9]").text

bAmplitude = tr.find_element(By.XPATH,"./td[position()=10]").text

bHighest = tr.find_element(By.XPATH,"./td[position()=11]/span").text

bLowest = tr.find_element(By.XPATH,"./td[position()=12]/span").text

bToday = tr.find_element(By.XPATH,"./td[position()=13]/span").text

bYesterday = tr.find_element(By.XPATH,"./td[position()=14]").text

self.insertDB(id,bCode,bName,bLatestPrice,bUpDownRange,bUpDownPrice,bTurnover,bTurnoverNum,bAmplitude,bHighest,bLowest,bToday,bYesterday)

# 翻页操作

try:

self.driver.find_element(By.XPATH,"//div[@class='dataTables_wrapper']//div[@class='dataTables_paginate paging_input']//a[@class='next paginate_button disabled']")

except:

nextPage = self.driver.find_element(By.XPATH,"//div[@class='dataTables_wrapper']//div[@class='dataTables_paginate paging_input']//a[@class='next paginate_button']")

time.sleep(10)

self.num += 1

if(self.num<4):

nextPage.click()

self.processSpider()

except Exception as err:

print(err)

def executeSpider(self, url):

print("Spider starting......")

self.startUp(url)

print("Spider processing......")

#分别爬取三种股票

print("沪深A股")

self.processSpider()

self.num =1

url = "http://quote.eastmoney.com/center/gridlist.html#sh_a_board"

self.driver.get(url)

print("上证A股")

self.processSpider()

self.num = 1

url = "http://quote.eastmoney.com/center/gridlist.html#sz_a_board"

self.driver.get(url)

print("深证A股")

self.processSpider()

print("Spider closing......")

self.closeUp()

url = "http://quote.eastmoney.com/center/gridlist.html#hs_a_board"

spider = getStocks()

spider.executeSpider(url)

具体实现:

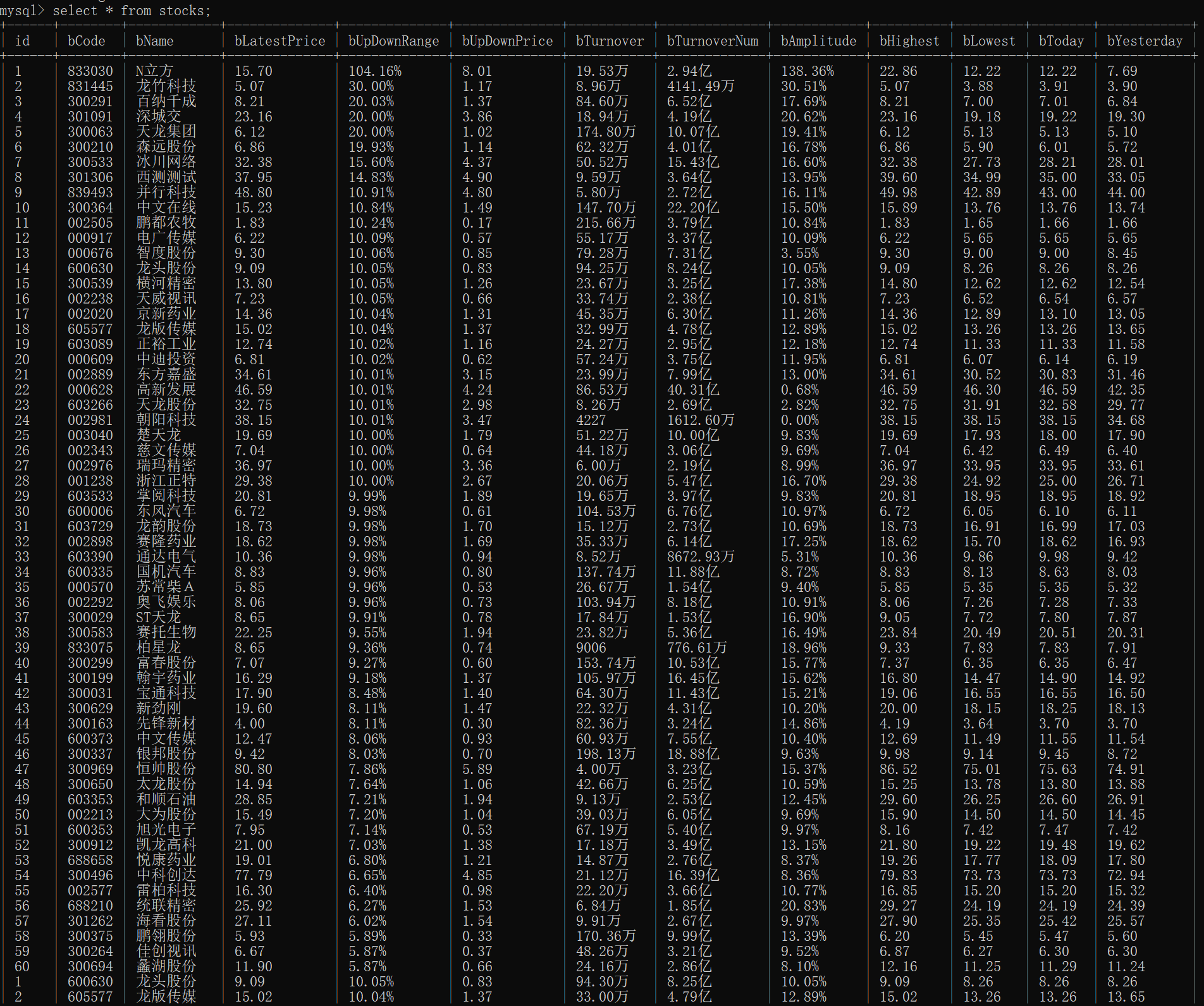

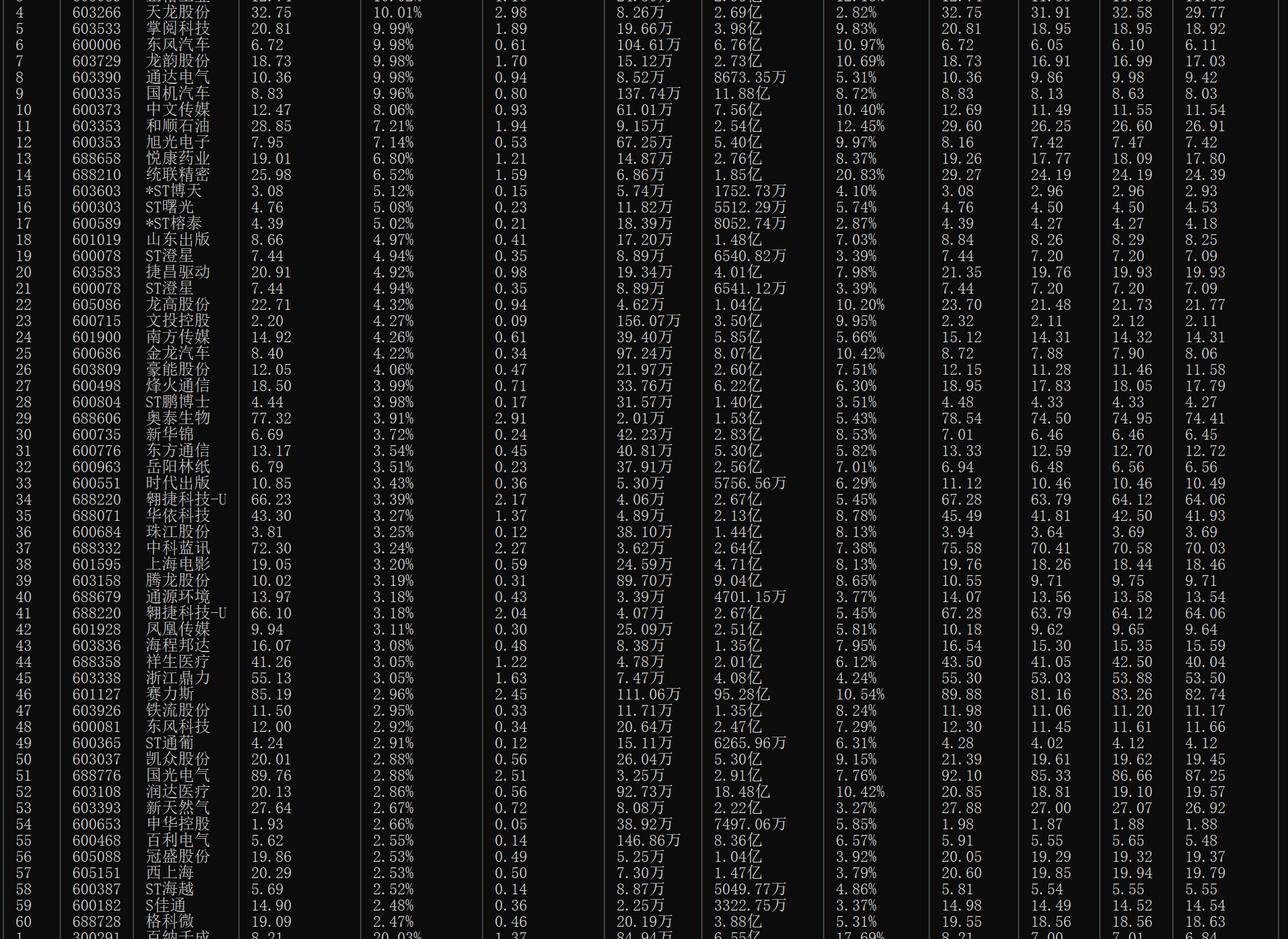

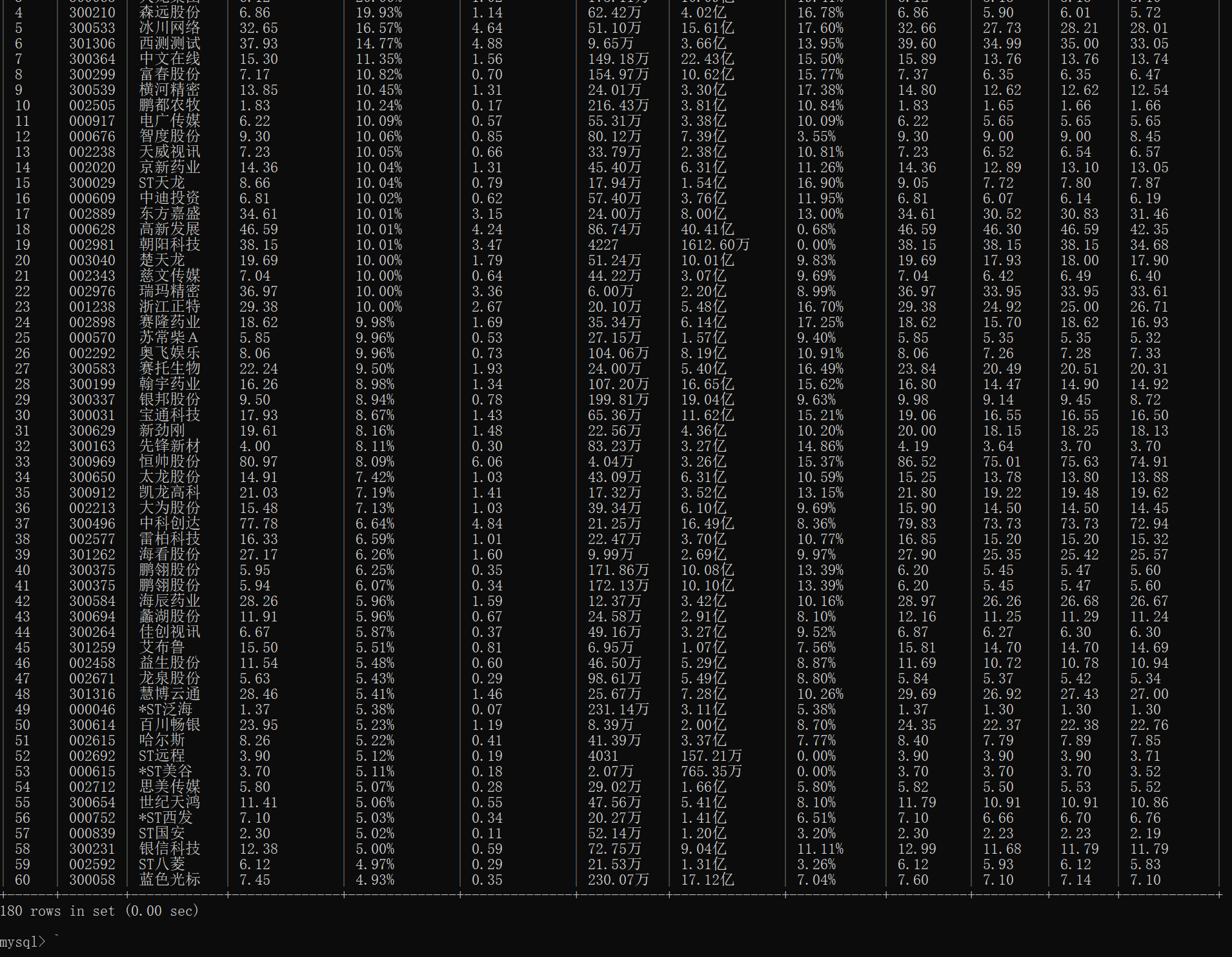

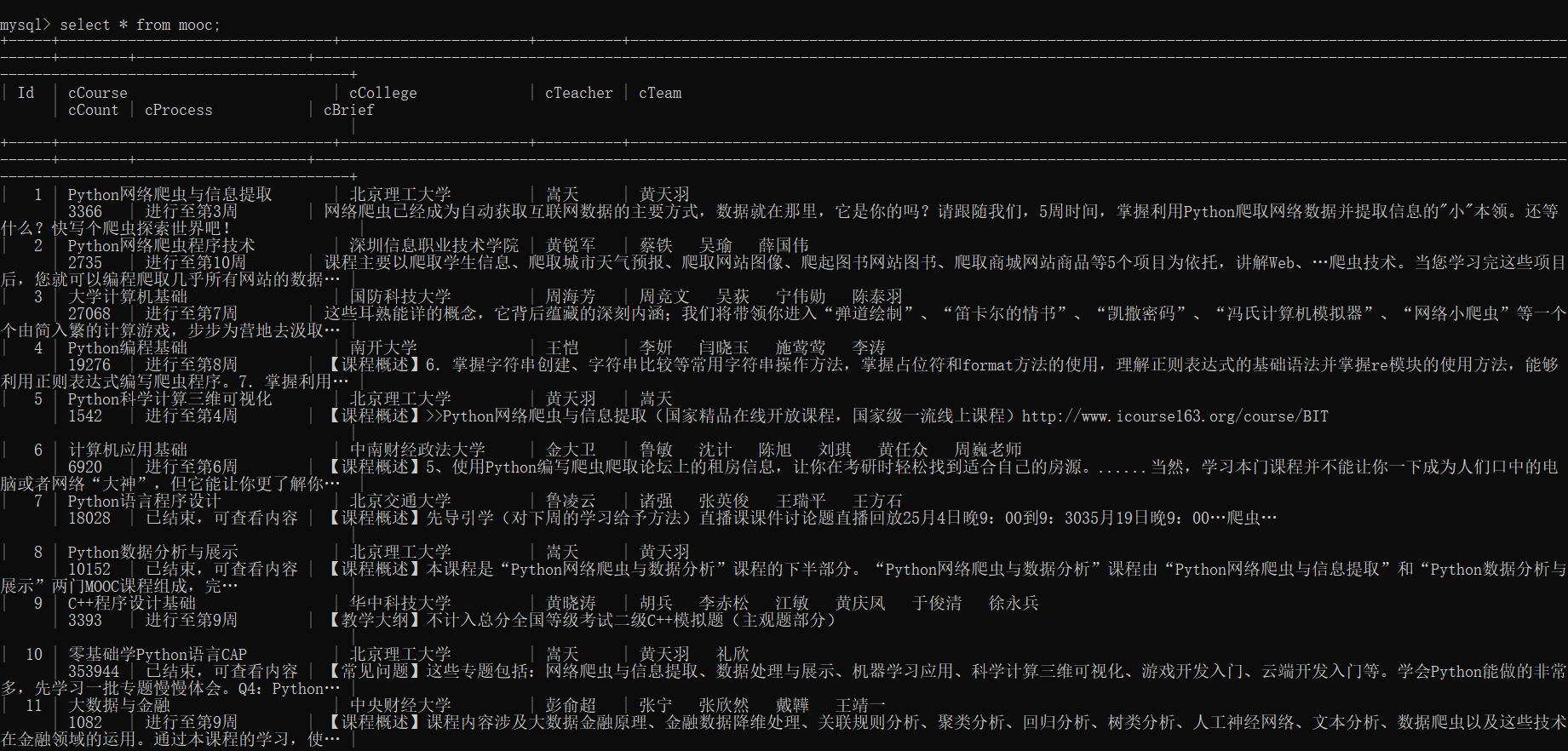

在MYSQL中查看:

(2)心得体会:

更加熟悉了selenium框架,对元素的定位也更加熟练

作业2:

(1)作业内容

实验要求:

熟练掌握 Selenium 查找HTML元素、实现用户模拟登录、爬取Ajax网页数据、等待HTML元素等内容。

使用Selenium框架+MySQL爬取中国mooc网课程资源信息(课程号、课程名称、学校名称、主讲教师、团队成员、参加人数、课程进度、课程简介)

候选网站

中国mooc网:https://www.icourse163.org

输出信息:

MYSQL数据库存储和输出格式

码云链接:

https://gitee.com/chen-pai/box/tree/master/实践课第四次作业

代码内容:

# -*- coding: utf-8 -*-

import sqlite3

import urllib.request

import pymysql

from bs4 import BeautifulSoup

from selenium import webdriver

import time

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

import os

import pickle

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.keys import Keys

rows = []

def findElement(html):

soup = BeautifulSoup(html, 'html.parser')

global rows

try:

# 尽量精确查找

row=''

div_elements = soup.select('div[class="m-course-list"]>div>div')

for div_element in div_elements:

cCourse = cCollege = cTeacher = cTeam = cCount = cProcess = cBrief = ""

course_name = div_element.select_one('span[class="u-course-name f-thide"]')

if course_name is not None:

cCourse = course_name.text.strip()

else:

continue

college_name = div_element.select_one('a[class="t21 f-fc9"]')

if college_name is not None:

cCollege = college_name.text.strip()

else:

continue

teacher_name = div_element.select_one('a[class="f-fc9"]')

if teacher_name is not None:

cTeacher = teacher_name.text.strip()

else:

continue

team_name = div_element.select_one('span[class="f-fc9"]')

if team_name is not None:

cTeam = team_name.text.replace('、', ' ').strip()

else:

continue

count_num = div_element.select_one('span[class="hot"]')

if count_num is not None:

cCount = count_num.text.replace('人参加', '')

else:

continue

process_info = div_element.select_one('span[class="txt"]')

if process_info is not None:

cProcess = process_info.text

else:

continue

brief_info = div_element.select_one('span[class="p5 brief f-ib f-f0 f-cb"]')

if brief_info is not None:

cBrief = brief_info.text

else:

continue

row = cCourse + "-" + cCollege + "-" + cTeacher + "-" + cTeam + "-" + str(

cCount) + "-" + cProcess + "-" + cBrief

rows.append(row)

except Exception as err:

print(err)

return rows

def saveData():

global rows

con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="chen1101", db="mydb",

charset="utf8")

cursor =con.cursor(pymysql.cursors.DictCursor)

#删除已有表

cursor.execute("drop table mooc")

#新建表

cursor.execute('''

CREATE TABLE mooc (

Id INT NOT NULL AUTO_INCREMENT PRIMARY KEY,

cCourse VARCHAR(45),

cCollege VARCHAR(45),

cTeacher VARCHAR(45),

cTeam VARCHAR(255),

cCount VARCHAR(45),

cProcess VARCHAR(45),

cBrief VARCHAR(255)

);

''')

# 向表中插入数据

for row in rows:

print(row)

data = row.split('-')

cursor.execute(

"INSERT INTO mooc (cCourse,cCollege,cTeacher,cTeam,cCount,cProcess,cBrief) VALUES (%s, %s, %s, %s, %s, %s, %s)",

(data[0], data[1], data[2], data[3], data[4], data[5], data[6])

)

con.commit()

try:

# chrome_options = Options()

# chrome_options.add_argument('--headless')

# chrome_options.add_argument('--disable-gpu')

# driver = webdriver.Chrome(options=chrome_options)

driver = webdriver.Chrome()

url = 'https://www.icourse163.org/'

driver.get(url)

# 等待页面成功加载

wait = WebDriverWait(driver, 10)

element = wait.until(EC.visibility_of_element_located((By.CSS_SELECTOR, ".navLoginBtn")))

# 找到登录按钮并点击

loginbutton = driver.find_element(By.CSS_SELECTOR, ".navLoginBtn")

loginbutton.click()

# 等待登录页面加载成功

time.sleep(2)

html = driver.page_source

# 定位到iframe

iframe = driver.find_element(By.TAG_NAME, "iframe")

# 句柄切换进iframe

driver.switch_to.frame(iframe)

# 找到手机号输入框并输入内容

phone_input = driver.find_element(By.ID, "phoneipt").send_keys("18960617360")

# 找到密码输入框并输入内容

psd_input = driver.find_element(By.CSS_SELECTOR, ".j-inputtext").send_keys("看不了一点")

# 找到登录按钮并点击

loginbutton = driver.find_element(By.ID, "submitBtn")

loginbutton.click()

# 等待登录成功页面加载完成

time.sleep(5)

# 跳出iframe

driver.switch_to.default_content()

# 等待页面成功加载

wait = WebDriverWait(driver, 10)

element = wait.until(EC.visibility_of_element_located((By.CSS_SELECTOR, ".inputtxt")))

# 找到输入框并输入内容

phone_input = driver.find_element(By.CSS_SELECTOR, ".inputtxt")

phone_input.send_keys("爬虫")

# 点击搜索按钮

searchbutton = driver.find_element(By.CSS_SELECTOR, ".j-searchBtn")

searchbutton.click()

time.sleep(2)

for i in range(10):

# 等待页面成功加载

wait = WebDriverWait(driver, 10) # 设置显式等待时间为10秒

element = wait.until(

EC.visibility_of_element_located((By.CSS_SELECTOR, ".th-bk-main-gh"))) # 等待元素可见

html = driver.page_source

findElement(html)

# 实现翻页

time.sleep(1)

nextbutton = driver.find_element(By.CSS_SELECTOR, ".th-bk-main-gh")

nextbutton.click()

saveData()

# 关闭浏览器

print('over')

driver.quit()

# saveData()

except Exception as err:

print(err)

具体实现:

(2)心得体会:

具体的难点是实现mooc的登录。需要找到sent账号、密码的框和点击登陆的按钮,最后发现需要用iframe进行转换,然后才能实现输入和点击。另外找寻合适的方法来爬取课程资源信息也是很重要的

作业3:

(1)作业内容

实验要求:

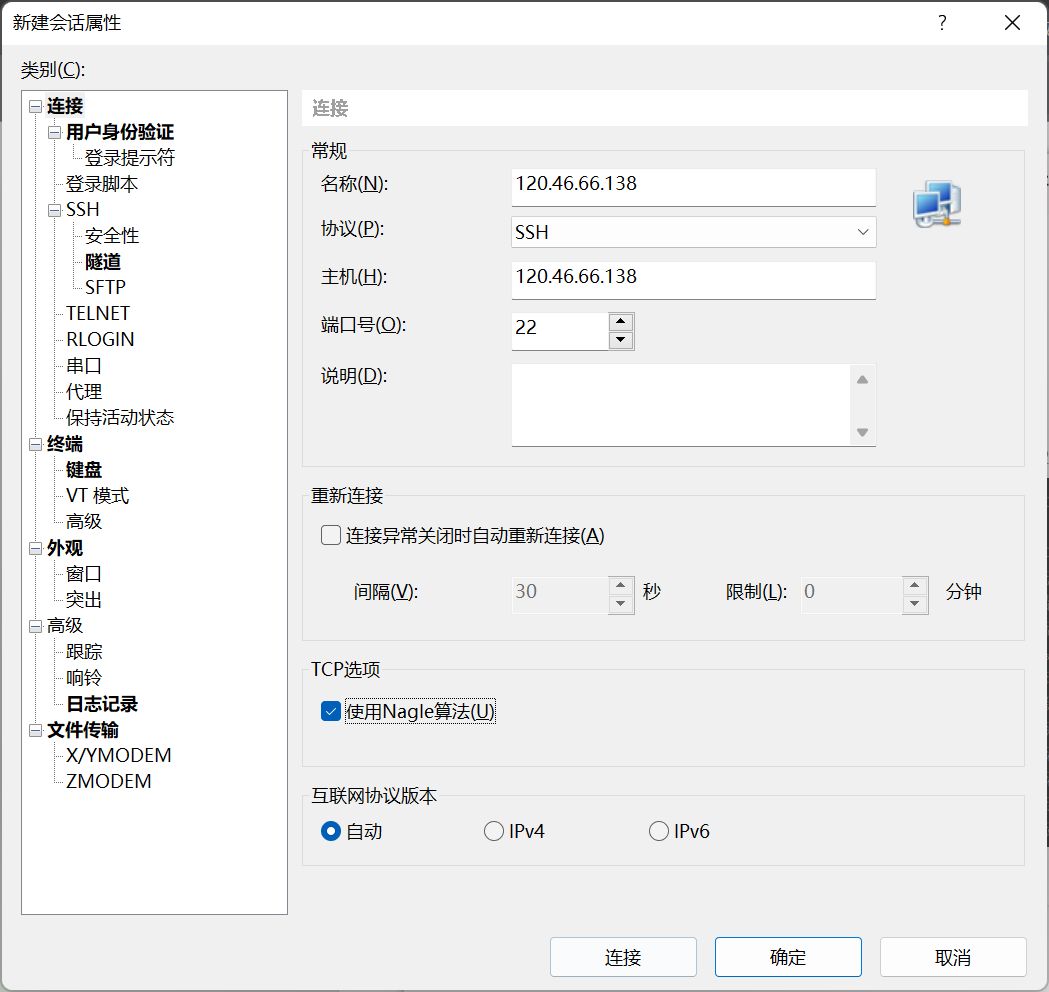

掌握大数据相关服务,熟悉 Xshell 的使用

• 完成文档 华为云_大数据实时分析处理实验手册-Flume 日志采集实验(部

分)v2.docx 中的任务,即为下面 5 个任务,具体操作见文档。

环境搭建:

任务一:开通MapReduce服务

按照ppt进行操作

实时分析开发实战:

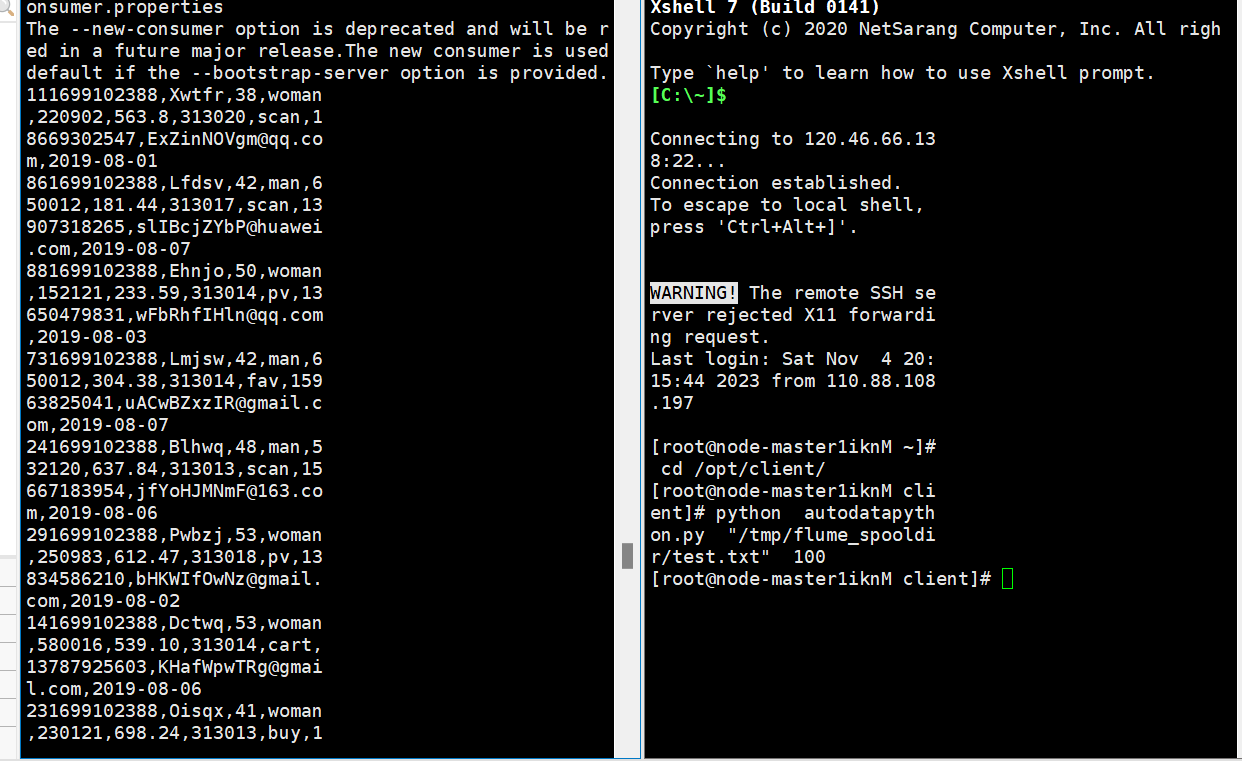

任务一:Python脚本生成测试数据

使用Xshell 7连接服务器:

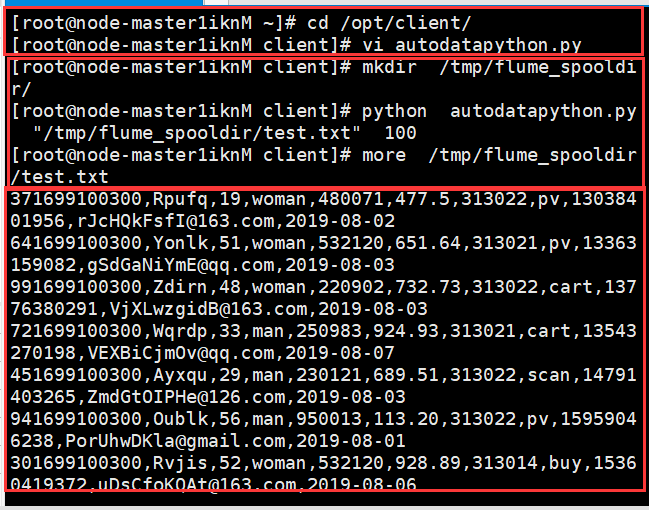

进入/opt/client/目录,使用vi命令编写Python脚本

使用mkdir命令在/tmp下创建目录flume_spooldir,我们把Python脚本模拟生成的数据放到此目录下,后面Flume就监控这个文件下的目录,以读取数据

执行Python命令,测试生成100条数据

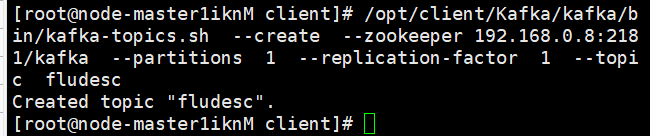

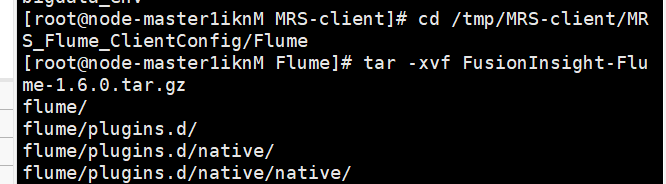

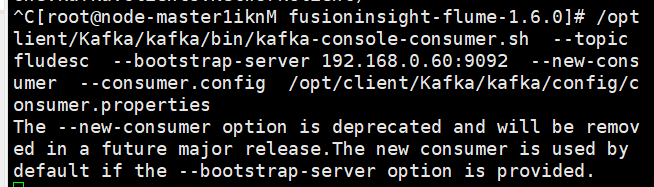

任务二:配置Kafka

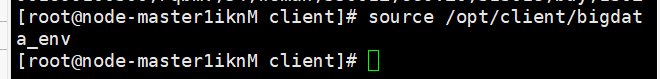

首先设置环境变量,执行source命令,使变量生效

在kafka中创建topic

查看topic信息

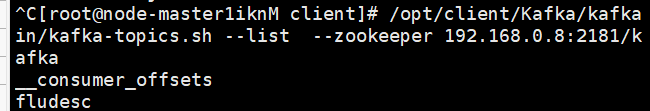

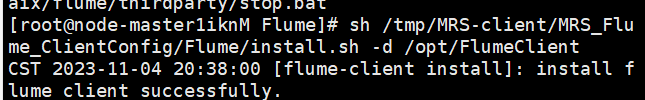

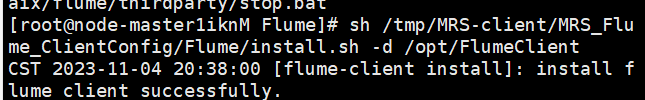

任务三: 安装Flume客户端

打开flume服务界面

点击下载客户端

解压下载的flume客户端文件

校验文件包

解压“MRS_Flume_ClientConfig.tar”文件

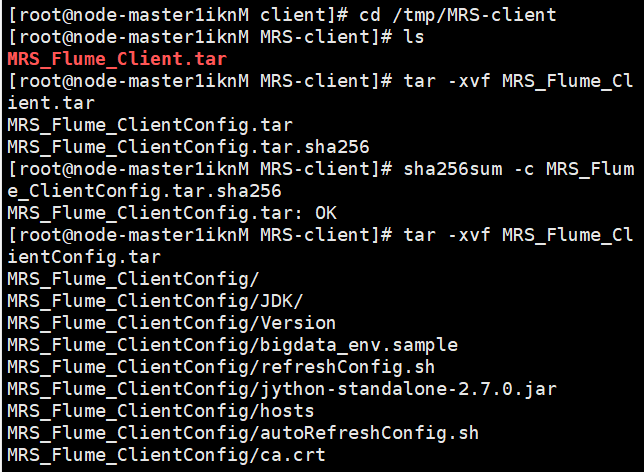

安装Flume环境变量

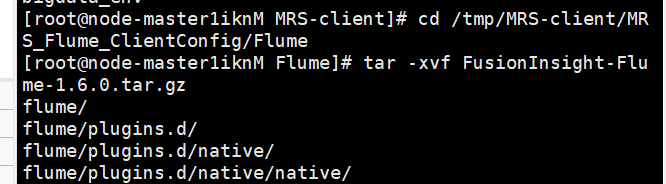

解压Flume客户端

安装Flume客户端

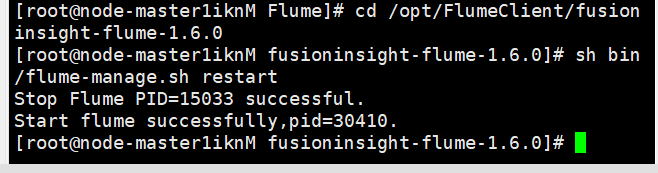

重启Flume服务

任务四:配置Flume采集数据

修改配置文件

步骤2创建消费者消费kafka中的数据

(2)心得体会:

通过华为云平台与Xshell进行实验,了解了Python脚本生成测试数据、配置Kafka、安装Flume客户端、配置Flume采集数据等过程,通过按照word一步一步操作,但是刚接触还是不熟练,希望今后能有更多的了解,也体会到服务器的高效、可靠和安全性。