数据采集与融合技术实践作业三

102102136 陈耕

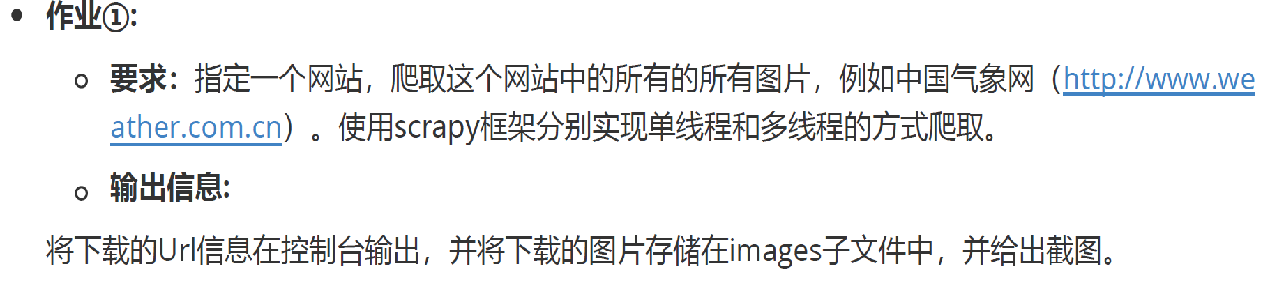

作业1:

(1)作业内容

实验要求:

码云链接:

https://gitee.com/chen-pai/box/tree/master/实践课第三次作业/实验3

代码内容:

items.py

class WeatherItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

img = scrapy.Field()

pass

settings.py

(单线程与多线程的区别即需将其中CONCURRENT_REQUESTS的默认值从8改为32。)

BOT_NAME = "weather"

SPIDER_MODULES = ["weather.spiders"]

NEWSPIDER_MODULE = "weather.spiders"

USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36"

LOG_ERROR = "ERROR"

ROBOTSTXT_OBEY = False

CONCURRENT_REQUESTS = 32

ITEM_PIPELINES = {

# "weather.pipelines.WeatherPipeline": 300,

"weather.pipelines.imgsPipeLine": 300,

}

MEDIA_ALLOW_REDIRECTS=True

# 设置存储路径

IMAGES_STORE='./images'

REQUEST_FINGERPRINTER_IMPLEMENTATION = "2.7"

TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

FEED_EXPORT_ENCODING = "utf-8"

pipelines.py

from itemadapter import ItemAdapter

import scrapy

import time

from scrapy.pipelines.images import ImagesPipeline

class imgsPipeLine(ImagesPipeline):

#根据图片地址进行图片数据的请求

def get_media_requests(self, item, info):

yield scrapy.Request(item['img'])

#指定图片存储的路径

def file_path(self, request, response=None, info=None, *, item=None):

imgName=request.url.split('/')[-1]

return imgName

def item_completed(self, results, item, info):

return item#返回给下一个即将被执行的管道

scrapy_weather.py

import scrapy

from weather.items import WeatherItem

class ScraptWeatherSpider(scrapy.Spider):

name = "scrapt_weather"

allowed_domains = ["www.weather.com.cn"]

start_urls = ["http://www.weather.com.cn"]

# def download(self,img):

def parse(self, response):

# print(response.text)

imgs = response.xpath("//img/@src").extract()#获取图片地址

# print(imgs,type(imgs[0]))

for img in imgs:

pipe = WeatherItem() #创建传输通道

pipe['img'] = img

# print(img)

yield pipe #传输到管道

pass

具体实现:

(2)心得体会:

对scrapy的框架有了更深入的了解,更了解到scrapy是异步的以及scrapt的多线程实现。

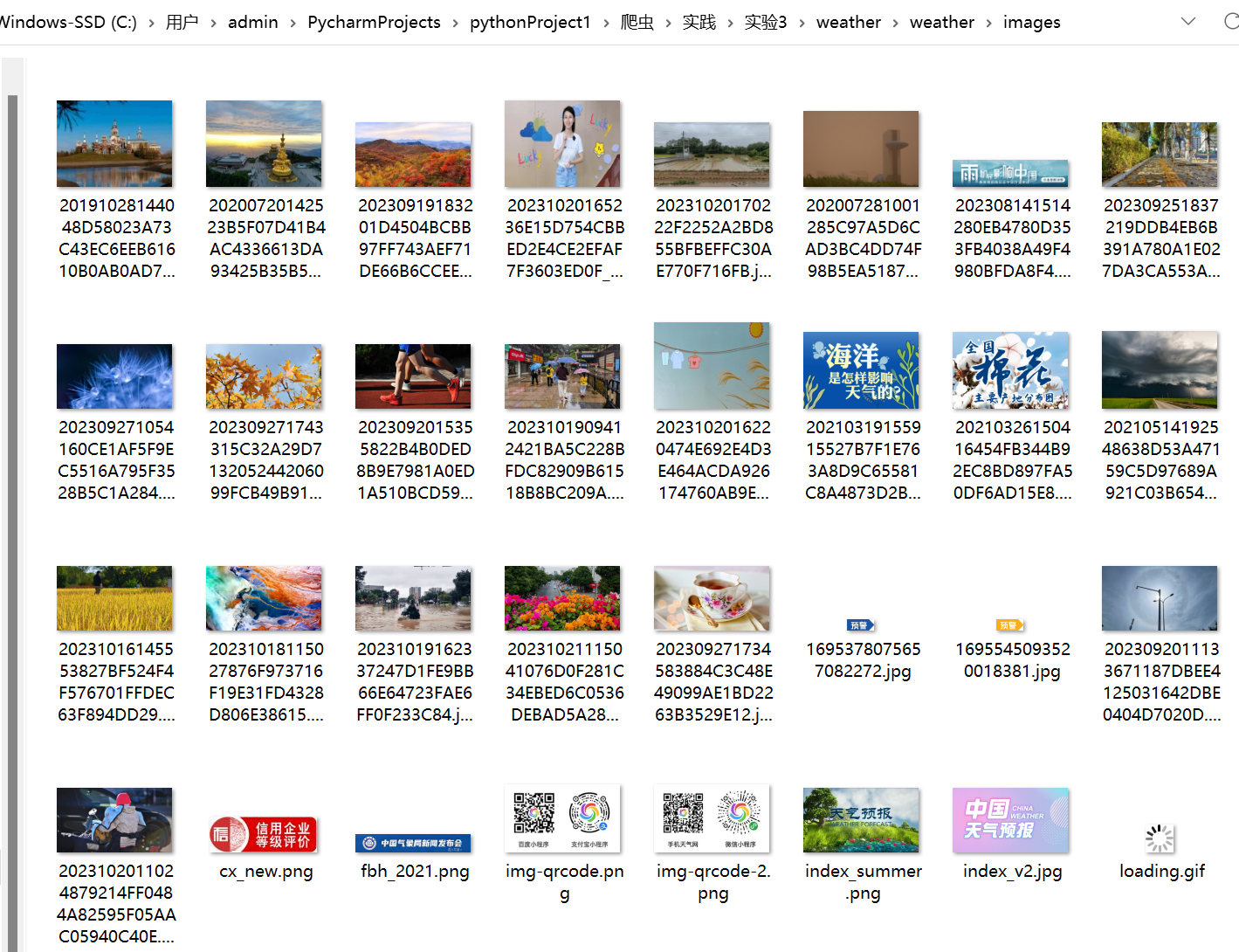

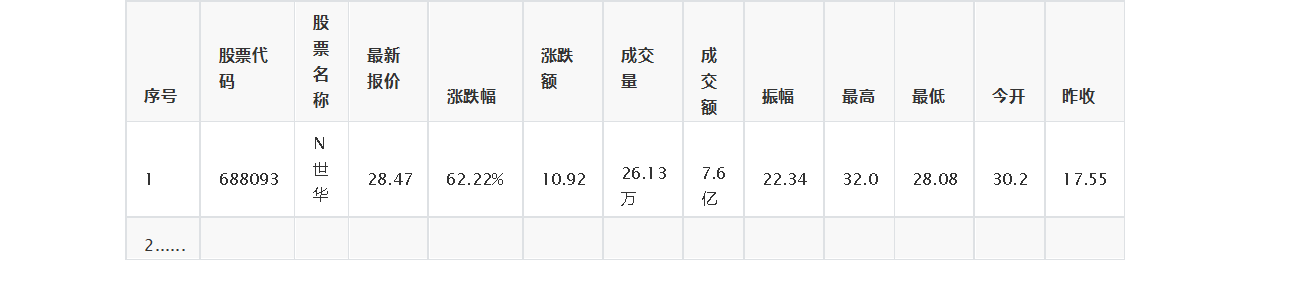

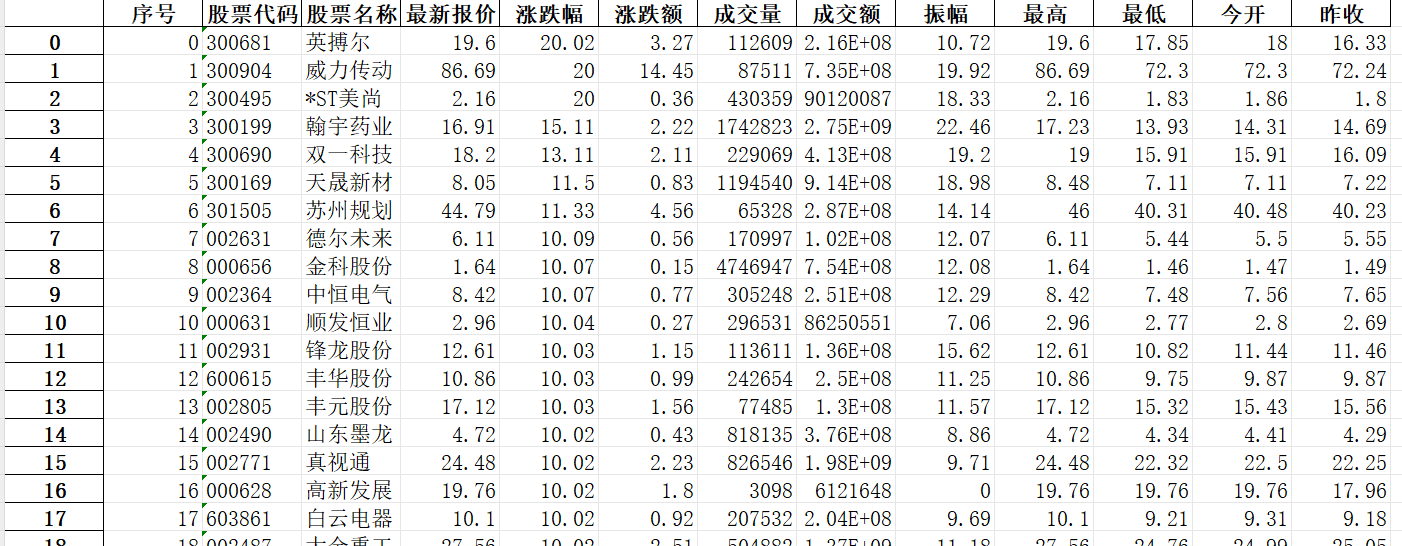

作业2:

(1)作业内容

实验要求:

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取股票相关信息。

候选网站

东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock/

输出信息:

码云链接:

https://gitee.com/chen-pai/box/tree/master/实践课第三次作业/实验3

代码内容:

items.py

import scrapy

class Demo2Item(scrapy.Item):

pass

pipelines.py

class Demo2Pipeline:

def process_item(self, item, spider):

return item

scrapy_stock.py

from typing import Iterable

import scrapy

from scrapy.http import Request

import json

import pandas as pd

class StockSpider(scrapy.Spider):

name = "scrapy_stock"

allowed_domains = ["eastmoney.com"]

start_urls = ["http://38.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112406848566904145428_1697696179672&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1697696179673"]

def parse(self, response):

# 提取括号内的JSON数据部分

start_index = response.text.find('(') + 1

end_index = response.text.rfind(')')

json_data = response.text[start_index:end_index]

# 解析JSON数据

json_obj = json.loads(json_data)

data = json_obj['data']['diff']

# # print(data,type(data))

goods_list = []

name = ['f12','f14','f2','f3','f4','f5','f6','f7','f15','f16','f17','f18']

count=0

for li in data:

list =[]

list.append(count)

for n in name:

list.append(li[n])

count += 1

goods_list.append(list)

df = pd.DataFrame(data=goods_list,columns=['序号','股票代码','股票名称','最新报价','涨跌幅','涨跌额','成交量','成交额','振幅','最高','最低','今开','昨收'])

df.set_index('序号')

print(df)

df.to_excel("stocks.xlsx")

具体实现:

(2)心得体会:

还是在上次的股票基础上稍加改造,需要注意的是动态网页,只能通过抓包的链接来进行爬取

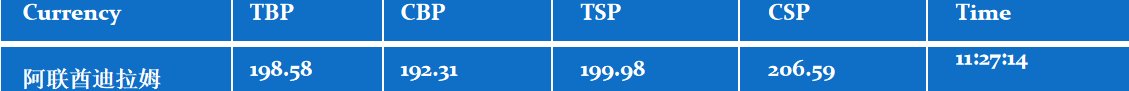

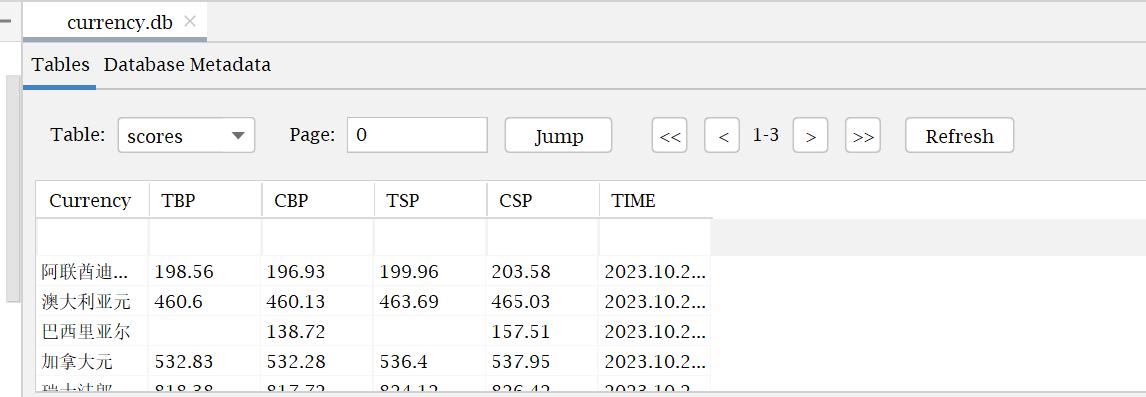

作业3:

(1)作业内容

实验要求:

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

候选网站

招商银行网:https://www.boc.cn/sourcedb/whpj/

输出信息:

码云链接:

https://gitee.com/chen-pai/box/tree/master/实践课第三次作业/实验3

代码内容:

items.py

class Demo3Item(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

currency = scrapy.Field()

tbp = scrapy.Field()

cbp = scrapy.Field()

tsp = scrapy.Field()

csp = scrapy.Field()

time = scrapy.Field()

settings.py

(单线程与多线程的区别即需将其中CONCURRENT_REQUESTS的默认值从8改为32。)

BOT_NAME = "demo3"

SPIDER_MODULES = ["demo3.spiders"]

NEWSPIDER_MODULE = "demo3.spiders"

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {

'demo3.pipelines.writeDB':300,

}

REQUEST_FINGERPRINTER_IMPLEMENTATION = "2.7"

TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

FEED_EXPORT_ENCODING = "utf-8"

pipelines.py

import sqlite3

class Demo3Pipeline:

def process_item(self, item, spider):

return item

class writeDB:

def open_spider(self, spider):

self.fp = sqlite3.connect('currency.db')

# 建表的sql语句

sql_text_1 = '''CREATE TABLE scores

(Currency TEXT,

TBP TEXT,

CBP TEXT,

TSP TEXT,

CSP TEXT,

TIME TEXT);'''

# 执行sql语句

self.fp.execute(sql_text_1)

self.fp.commit()

def close_spider(self, spider):

self.fp.close()

def process_item(self, item, spider):

sql_text_1 = "INSERT INTO scores VALUES('" + item['currency'] + "', '" + item['tbp'] + "', '" + item[

'cbp'] + "', '" + item['tsp'] + "', '" + item['csp'] + "', '" + item['time'] + "')"

# 执行sql语句

self.fp.execute(sql_text_1)

self.fp.commit()

return item

currency.py

import scrapy

from ..items import Demo3Item

from bs4 import BeautifulSoup

import json

import re

class C_Spider(scrapy.Spider):

name = "currency"

allowed_domains = ["boc.cn"]

start_urls = ["https://www.boc.cn/sourcedb/whpj/"]

count = 0

def parse(self, response):

bs_obj = BeautifulSoup(response.body, features='lxml')

t = bs_obj.find_all('table')[1]

all_tr = t.find_all('tr')

all_tr.pop(0)

for r in all_tr:

item = Demo3Item()

all_td = r.find_all('td')

item['currency'] = all_td[0].text

item['tbp'] = all_td[1].text

item['cbp'] = all_td[2].text

item['tsp'] = all_td[3].text

item['csp'] = all_td[4].text

item['time'] = all_td[6].text

print(all_td)

yield item

self.count += 1

url = 'http://www.boc.cn/sourcedb/whpj/index_{}.html'.format(self.count)

if self.count != 5:

yield scrapy.Request(url=url, callback=self.parse)

具体实现:

(2)心得体会:

再次巩固了scrapy框架基础的知识