综合设计——多源异构数据采集与融合应用综合实践

职途启航

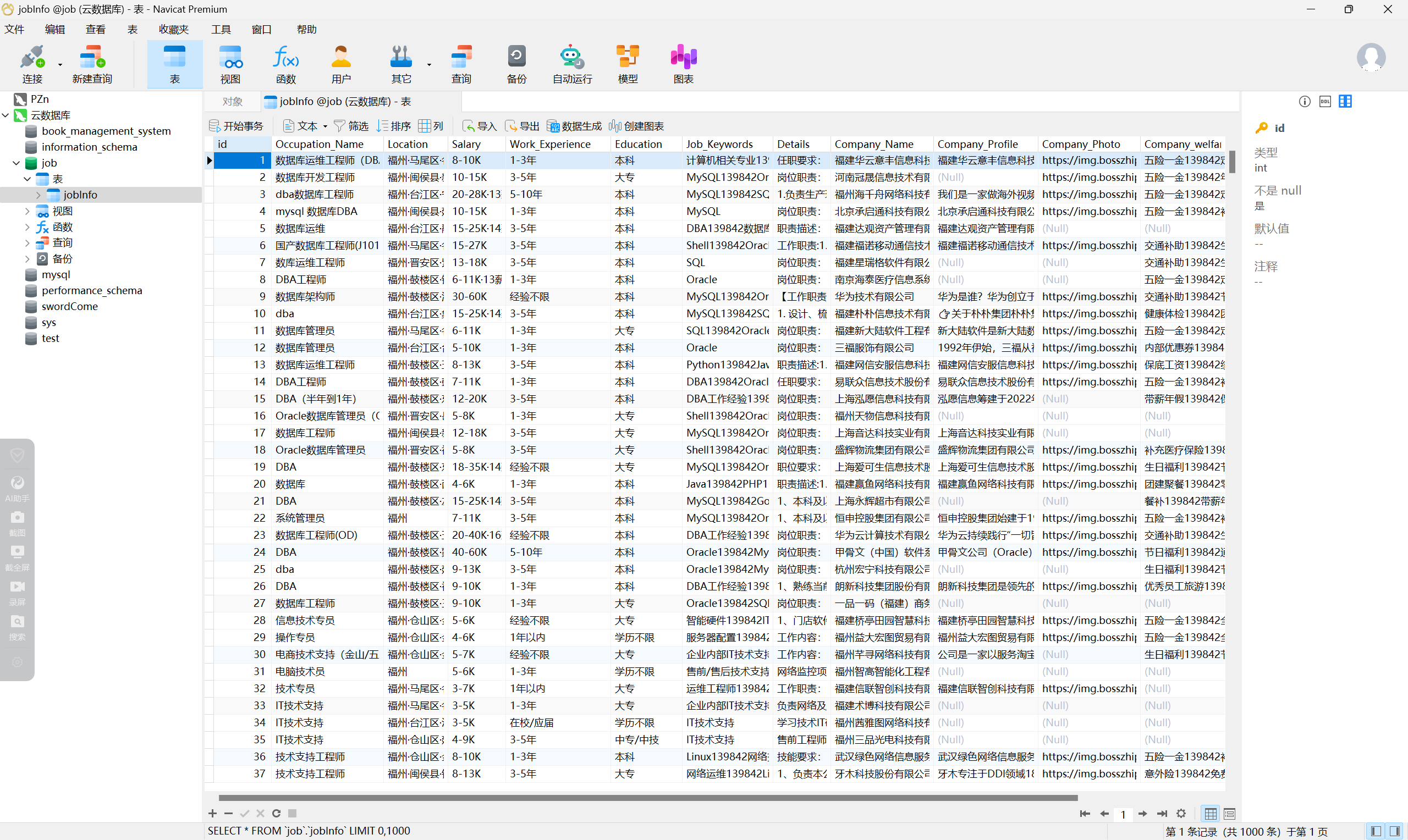

| 数据采集项目实践 | https://edu.cnblogs.com/campus/fzu/2024DataCollectionandFusiontechnology |

|---|---|

| 组名、项目简介 | 组名:数据矿工,项目需求:爬取招聘网站的求助信息、编辑信息匹配系统等,项目目标:根据求职者的个人信息为其推荐最合适的工作、根据全国各省的各行业信息为求职者提供合适的参考城市、项目开展技术路线:数据库操作:使用 pymysql 库与 MySQL 数据库进行交互,执行 SQL 查询和获取数据、Flask Web 框架:使用了 Flask 作为 Web 应用框架,用于创建 Web 服务和 API 端点 、WSGI 服务器 :pywsgi 作为 WSGI 服务器来运行 Flask 应用 |

| 团队成员学号 | 102202132、102202131、102202143、102202111、102202122、102202136 |

| 这个项目的目标 | 根据求职者的个人信息为其推荐最合适的工作 |

| 其他参考文献 | Flask Web框架官方文档 https://flask.palletsprojects.com/en/latest/ PyMySQL官方文档 https://github.com/PyMySQL/PyMySQL 讯飞星火大模型API文档 https://www.xfyun.cn/doc/spark/Web.html 数据库设计和优化 https://db-book.com/ 云服务器和数据库部署 https://cloud.tencent.com/ |

gitee地址:https://gitee.com/zheng-bingzhi/2022-level-data-collection/tree/master/职业规划与就业分析平台

1项目概述

互联网的发展成就了众多招聘APP,这些APP为求职者提供了更多的就业选择,但随着行业的细分化,许多求职者甚至不清楚以自己的技能和工作经历适合什么工作岗位。俗话说良禽择木而栖,不同的城市主要的发展行业不同,这也就导致相同行业在不同城市的薪资待遇和发展前景截然不同,因此选择一个好城市也十分重要。本项目便是为求职者匹配合适的招聘信息和为求职者提供各省份间的各行业发展,为求职者提供参考。

2个人分工

爬虫代码,AI智能推荐工作接口代码的编写与云服务器数据库的搭建

2.1爬虫代码

2.1.1scrapy代码

from job.items import JobItem

class ScrapyjobSpider(scrapy.Spider):

name = "scrapyJob"

allowed_domains = ["www.zhipin.com"]

base_url = 'https://www.zhipin.com/web/geek/job?city=101230100&position=100604&page={}'

def start_requests(self):

url = self.base_url.format(1)

yield scrapy.Request(url,callback=self.parse)

def parse(self, response):

jobs = response.xpath("//ul[@class='job-list-box']/li")

for job in jobs:

b = 0

t = ''

item = JobItem()

item['Occupation_Name'] = job.xpath(".//span[@class='job-name']//text()").extract_first()

item['Location'] = job.xpath(".//span[@class='job-area']//text()").extract_first()

item['Salary'] = job.xpath(".//span[@class='salary']//text()").extract_first()

item['Work_Experience'] = job.xpath(".//ul[@class='tag-list']/li[1]//text()").extract_first()

item['Education'] = job.xpath(".//ul[@class='tag-list']/li[2]//text()").extract_first()

tags = job.xpath(".//div[@class='job-card-footer clearfix']//ul[@class='tag-list']//li")

for tag in tags:

if b==0:

t = tag.xpath(".//text()").extract_first()

b = b + 1

else:

t = t + '139842'+tag.xpath(".//text()").extract_first()

item['Job_Keywords'] = t

part_url = job.xpath(".//a[@class='job-card-left']/@href").extract_first()

detail_url =response.urljoin(part_url)

yield scrapy.Request(

url=detail_url,

callback=self.parse_detail,

meta={'item' : item}

)

def parse_detail(self,response):

a = 0

w = ''

t = ''

item =response.meta['item']

texts = response.xpath("//div[@class='job-detail-section']//div[@class='job-sec-text']//text()")

for text in texts:

t = t + text.extract()

item['Details'] = t

welfares = response.xpath(".//div[@class='job-tags']//span")

for welfare in welfares:

if a == 0:

w = welfare.xpath(".//text()").extract_first()

a = a + 1

else:

w = w + '139842' + welfare.xpath(".//text()").extract_first()

item['Company_welfare'] = w

item['Company_Name'] = response.xpath("//li[@class='company-name']//text()").extract()[1]

part_url = response.xpath("//a[@class='look-all'][@ka='job-cominfo']/@href").extract_first()

image_url = response.urljoin(part_url)

yield scrapy.Request(

url = image_url,

callback=self.parse_image,

meta={'item' : item}

)

def parse_image(self,response):

a = 0

item = response.meta['item']

img_url = ''

t = ''

images = -1

if len(response.xpath("//ul[@class='swiper-wrapper swiper-wrapper-row']//li")) > 0:

images = response.xpath("//ul[@class='swiper-wrapper swiper-wrapper-row']//li")

if len(response.xpath("//ul[@class='swiper-wrapper swiper-wrapper-col']//li")) > 0:

images = response.xpath("//ul[@class='swiper-wrapper swiper-wrapper-col']//li")

texts = response.xpath("//div[@class='job-sec']//div[@class='text fold-text']//text()")

for text in texts:

t = t + text.extract()

item['Company_Profile'] = t

if images != -1:

for image in images:

if a ==0:

img_url = image.xpath(".//img/@src").extract_first()

a = a + 1

else:

img_url = img_url +'139842'+image.xpath(".//img/@src").extract_first()

item['Company_Photo'] = img_url

yield item

2.1.2item代码

class JobItem(scrapy.Item):

Occupation_Name = scrapy.Field()

Location= scrapy.Field()

Salary= scrapy.Field()

Work_Experience= scrapy.Field()

Education= scrapy.Field()

Job_Keywords= scrapy.Field()

Details= scrapy.Field()

Company_Name= scrapy.Field()

Company_Profile= scrapy.Field()

Company_welfare= scrapy.Field()

Company_Photo= scrapy.Field()

2.1.3middlewares代码

import logging

from scrapy.http import HtmlResponse

from selenium import webdriver

import random

from scrapy import signals

from selenium import webdriver

from selenium.webdriver.edge.options import Options

from scrapy.http import HtmlResponse

class jobDownloaderMiddleware:

def process_request(self, request, spider):

# 设置Edge的无头模式

options = Options()

options.add_argument('--headless') # 添加无头模式参数

# 设置代理(如果你需要的话)

# options.add_argument('--proxy-server=http://v161.kdltps.com:15818')

# 初始化Edge驱动

driver = webdriver.Edge()

driver.get(request.url)

time.sleep(6) # 等待页面加载

data = driver.page_source

driver.quit() # 关闭驱动

# 返回响应

return HtmlResponse(url=request.url, body=data.encode('utf-8'), encoding='utf-8', request=request)

# class ProxyDownloaderMiddleware:

# _proxy = ('v161.kdltps.com', '15818')

#

# def process_request(self, request, spider):

#

# # 用户名密码认证

# username = "t13280720858826"

# password = "iendiyt1"

# request.meta['proxy'] = "http://%(user)s:%(pwd)s@%(proxy)s/" % {"user": username, "pwd": password, "proxy": ':'.join(ProxyDownloaderMiddleware._proxy)}

#

# # 白名单认证

# # request.meta['proxy'] = "http://%(proxy)s/" % {"proxy": proxy}

#

# request.headers["Connection"] = "close"

# return None

#

# def process_exception(self, request, exception, spider):

# """捕获407异常"""

# if "'status': 407" in exception.__str__(): # 不同版本的exception的写法可能不一样,可以debug出当前版本的exception再修改条件

# from scrapy.resolver import dnscache

# dnscache.__delitem__(ProxyDownloaderMiddleware._proxy[0]) # 删除proxy host的dns缓存

# return exception

class RandomUserAgentMiddleware():

def __init__(self):

self.user_agents = [

'Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)',

'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.2 (KHTML, like Gecko) Chrome/22.0.1216.0 Safari/537.2',

'Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:15.0) Gecko/20100101 Firefox/15.0.1',

"Mozilla/5.0(WindowsNT6.1;WOW64)AppleWebKit/537.36(KHTML,likeGecko)Chrome/39.0.2171.95Safari/537.36OPR/26.0.1656.60"

"Opera/8.0(WindowsNT5.1;U;en)",

"Mozilla/5.0(WindowsNT5.1;U;en;rv:1.8.1)Gecko/20061208Firefox/2.0.0Opera9.50",

"Mozilla/4.0(compatible;MSIE6.0;WindowsNT5.1;en)Opera9.50",

"Mozilla/5.0(WindowsNT6.1;WOW64;rv:34.0)Gecko/20100101Firefox/34.0",

"Mozilla/5.0(X11;U;Linuxx86_64;zh-CN;rv:1.9.2.10)Gecko/20100922Ubuntu/10.10(maverick)Firefox/3.6.10",

"Mozilla/5.0(Macintosh;U;IntelMacOSX10_6_8;en-us)AppleWebKit/534.50(KHTML,likeGecko)Version/5.1Safari/534.50",

"Mozilla/5.0(Windows;U;WindowsNT6.1;en-us)AppleWebKit/534.50(KHTML,likeGecko)Version/5.1Safari/534.50",

"Mozilla/5.0(WindowsNT6.1;WOW64)AppleWebKit/534.57.2(KHTML,likeGecko)Version/5.1.7Safari/534.57.2",

"Mozilla/5.0(WindowsNT6.1;WOW64)AppleWebKit/537.36(KHTML,likeGecko)Chrome/39.0.2171.71Safari/537.36",

"Mozilla/5.0(X11;Linuxx86_64)AppleWebKit/537.11(KHTML,likeGecko)Chrome/23.0.1271.64Safari/537.11",

"Mozilla/5.0(Windows;U;WindowsNT6.1;en-US)AppleWebKit/534.16(KHTML,likeGecko)Chrome/10.0.648.133Safari/534.16",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;Maxthon2.0)",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;TencentTraveler4.0)",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1)",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;TheWorld)",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;Trident/4.0;SE2.XMetaSr1.0;SE2.XMetaSr1.0;.NETCLR2.0.50727;SE2.XMetaSr1.0)",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;360SE)",

"Mozilla/5.0(WindowsNT6.1;WOW64)AppleWebKit/537.36(KHTML,likeGecko)Chrome/30.0.1599.101Safari/537.36",

"Mozilla/5.0(WindowsNT6.1;WOW64;Trident/7.0;rv:11.0)likeGecko",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;AvantBrowser)",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1)",

"Mozilla/5.0(compatible;MSIE9.0;WindowsNT6.1;Trident/5.0;)",

"Mozilla/4.0(compatible;MSIE8.0;WindowsNT6.0;Trident/4.0)",

"Mozilla/4.0(compatible;MSIE7.0;WindowsNT6.0)",

"Mozilla/4.0(compatible;MSIE6.0;WindowsNT5.1)",

'Mozilla/5.0(WindowsNT6.1;WOW64)AppleWebKit/536.11(KHTML,likeGecko)Chrome/20.0.1132.11TaoBrowser/2.0Safari/536.11',

"Mozilla/5.0(WindowsNT6.1;WOW64)AppleWebKit/537.1(KHTML,likeGecko)Chrome/21.0.1180.71Safari/537.1LBBROWSER",

"Mozilla/5.0(compatible;MSIE9.0;WindowsNT6.1;WOW64;Trident/5.0;SLCC2;.NETCLR2.0.50727;.NETCLR3.5.30729;.NETCLR3.0.30729;MediaCenterPC6.0;.NET4.0C;.NET4.0E;LBBROWSER)",

"Mozilla/4.0(compatible;MSIE6.0;WindowsNT5.1;SV1;QQDownload732;.NET4.0C;.NET4.0E;LBBROWSER)",

"Mozilla/5.0(compatible;MSIE9.0;WindowsNT6.1;WOW64;Trident/5.0;SLCC2;.NETCLR2.0.50727;.NETCLR3.5.30729;.NETCLR3.0.30729;MediaCenterPC6.0;.NET4.0C;.NET4.0E;QQBrowser/7.0.3698.400)",

"Mozilla/4.0(compatible;MSIE6.0;WindowsNT5.1;SV1;QQDownload732;.NET4.0C;.NET4.0E)",

"Mozilla/5.0(WindowsNT5.1)AppleWebKit/535.11(KHTML,likeGecko)Chrome/17.0.963.84Safari/535.11SE2.XMetaSr1.0",

]

def process_request(self, request, spider):

request.headers['User-Agent'] = random.choice(self.user_agents)

class RandomDelayMiddleware(object):

def __init__(self, delay):

self.delay = delay

@classmethod

def from_crawler(cls, crawler):

delay = crawler.spider.settings.get("DOWNLOAD_DELAY", 1) # setting里设置的时间,注释默认为1s

if not isinstance(delay, int):

raise ValueError("RANDOM_DELAY need a int")

return cls(delay)

def process_request(self, request, spider):

# delay = random.randint(0, self.delay)

delay = random.uniform(0, self.delay)

delay = float("%.1f" % delay)

logging.debug("### time is random delay: %s s ###" % delay)

time.sleep(delay)

2.1.4pipelines代码

import os

class JobPipeline:

def __init__(self):

# 以追加模式打开文件,如果文件不存在则创建

self.file_path = '技术项目管理/福州/项目专员.csv'

self.file = open(self.file_path, 'a', newline='', encoding='utf-8-sig')

# 准备列名列表

self.fieldnames = ['Occupation_Name', 'Location', 'Salary', 'Work_Experience', 'Education',

'Job_Keywords', 'Details', 'Company_Name', 'Company_Profile', 'Company_Photo',

'Company_welfare']

# 创建一个 csv.DictWriter 对象,指定列名

self.writer = csv.DictWriter(self.file, fieldnames=self.fieldnames)

# 检查文件是否为空,如果为空则写入列名

if os.path.getsize(self.file_path) == 0:

self.writer.writeheader()

# 将文件指针移动到文件末尾,以便后续写入数据

self.file.seek(0, os.SEEK_END)

def process_item(self, item, spider):

# 将 item 数据写入 CSV 文件

self.writer.writerow(item)

return item

def __del__(self):

# 关闭文件

self.file.close()

2.2智能推荐工作接口代码

2.2.1导入模块和设置CORS

import re

from flask_cors import CORS

import pymysql

import requests

from flask import Blueprint, Flask, request

match_bp = Blueprint('match', __name__)

CORS(match_bp)

2.2.2定义路由和函数

def recommendCareerAdviceFun():

2.2.3初始化变量和数据库连接

itemCount = 0

returnList = []

connection = pymysql.connect(host='81.70.22.101',

user='root',

password='yabdylm',

database='job',

charset='utf8mb4',

cursorclass=pymysql.cursors.DictCursor)

2.2.4获取请求参数

expectedSalary = request.form.get("expected_salary")

resume = request.form.get("resume")

requirements = request.form.get("requirements")

workExperience = request.form.get("work_experience")

2.2.5查询数据库并准备数据

cursor.execute(

"SELECT id, Occupation_Name, Location, Salary, Work_Experience, Education, Job_Keywords, Details FROM jobInfo")

results = cursor.fetchall()

data_list = [] # 初始化data_list

for result in results:

i = result['id']

o = result['Occupation_Name']

l = result['Location']

s = result['Salary']

w = result['Work_Experience']

e = result['Education']

# j = result['Job_Keywords']

item = f'id:{i},职业名称:{o},地点:{l},薪资:{s},工作经验:{w},学历:{e}'.replace('"','')

data_list.append(item)

2.2.6 调用讯飞星火大模型API进行工作推荐

# ...(省略了部分代码)

url = "https://spark-api-open.xf-yun.com/v1/chat/completions"

data = {

"max_tokens": 4096,

"top_k": 6,

"temperature": 1,

"messages": messages,

"model": "generalv3.5",

"stream": True

}

header = {

"Authorization": "Bearer VSjYPSByEwNEwDLkdJFS:WWkBQcXnjCoaNJYzgAXD"

}

response = requests.post(url, headers=header, json=data, stream=True)

# ...(省略了部分代码)

2.2.7解析API响应并提取推荐工作

matches = re.findall(pattern, full_content)

for match in matches:

returnList.append(match)

returnCount = returnCount + 1

print(match)

if returnCount >= 10:

break

if returnCount >= 10:

break

2.2.8根据推荐ID查询详细信息

for item in returnList:

with connection.cursor() as cursor:

cursor.execute(

f"SELECT Occupation_Name, Location, Salary, Company_Name, small_kind from jobInfo where id = {item}")

result = cursor.fetchone()

result_dict = {

"Occupation_Name": result['Occupation_Name'],

"Location": result['Location'],

"Salary": result['Salary'],

"Company_Name": result['Company_Name'],

"occupation_Type": result['small_kind'],

"recruitment_id": item

}

results_list.append(result_dict)

2.2.9返回响应

response = {

"code": 200,

"message": "Success",

"data": results_list

}

response_json = json.dumps(response, ensure_ascii=False)

return response_json

2.3云服务器数据库

3个人体会

技术实践与学习:通过实际编写爬虫代码和智能推荐接口,我对Scrapy框架、Flask Web框架以及数据库操作有了更深入的理解和实践。这些技术的应用不仅加深了我对理论知识的理解,也提升了我解决实际问题的能力。

团队合作:在项目中,我深刻体会到团队合作的重要性。每个成员都有自己的分工,但同时也需要相互沟通和协作,以确保项目的顺利进行。这种团队合作精神对于未来无论是在工作还是生活中都是非常重要的。

项目管理:项目的成功不仅取决于技术实现,还涉及到项目管理的方方面面,如时间管理、资源分配、风险控制等。在这个项目中,我学会了如何规划项目进度,如何协调团队成员的工作,以及如何应对突发情况。

问题解决:在项目实施过程中,我们遇到了许多预料之外的问题,比如数据采集的反爬虫机制、数据库的优化等。这些问题的解决过程锻炼了我的问题解决能力,也让我学会了如何快速学习新知识和技能。

创新思维:项目中的智能推荐系统让我意识到创新思维的重要性。通过结合讯飞星火大模型API,我们能够为用户提供更加个性化和精准的工作推荐,这种创新的尝试对于提升项目价值非常关键。

持续学习:技术是不断发展的,通过这个项目,我更加明确了持续学习的重要性。无论是学习新的编程语言、框架,还是深入了解数据库设计和优化,都需要不断学习和实践。

实践经验:最后,这个项目让我意识到理论知识和实践经验的结合是多么重要。理论知识为我们提供了解决问题的工具和方法,而实践经验则让我们能够更好地应用这些工具和方法。