Some Spark workers fail to start in a multi-instance setup: java.lang.OutOfMemoryError

Problem description:

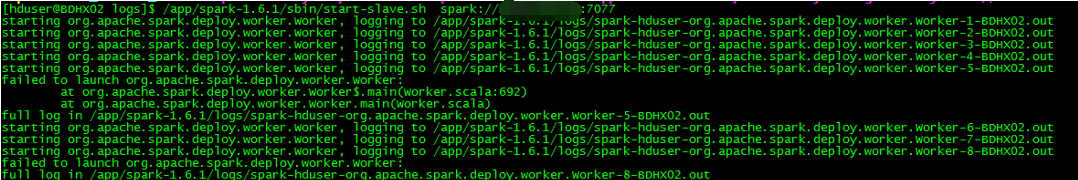

Multiple workers are configured on a single machine (8 in this example). On startup, some of them come up and others do not.

[hduser@BDHX02 logs]$ cat /app/spark-1.6.1/logs/spark-hduser-org.apache.spark.deploy.worker.Worker-7-BDHX02.out

Spark Command: /usr/java/jdk1.7.0_75/bin/java -cp /app/spark-1.6.1/lib/:/app/devlib/spark-csv_2.10-1.2.0.jar:/app/spark-1.6.1/lib/:/app/devlib/spark-avro_2.10-2.0.1.jar:/app/spark-1.6.1/lib/:/app/devlib/commons-csv-1.1.jar:/app/spark-1.6.1/lib/:/app/devlib/mysql.jar:/app/spark-1.6.1/lib/:/app/devlib/ojdbc6.jar:/app/R-3.1.2/library/rJava/jri/JRIEngine.jar:/app/R-3.1.2/library/rJava/jri/REngine.jar:/app/R-3.1.2/library/rJava/jri/JRI.jar:/app/spark-1.6.1/lib/:/app/devlib/:/app/spark-1.6.1/conf/:/app/spark-1.6.1/lib/spark-assembly-1.6.1-hadoop2.6.0.jar:/app/spark-1.6.1/lib/datanucleus-core-3.2.10.jar:/app/spark-1.6.1/lib/datanucleus-rdbms-3.2.9.jar:/app/spark-1.6.1/lib/datanucleus-api-jdo-3.2.6.jar:/app/hadoop-2.6.0/etc/hadoop/ -Djava.net.preferIPv4Stack=true -Xms1g -Xmx1g -XX:MaxPermSize=256m org.apache.spark.deploy.worker.Worker --webui-port 8087 spark://xx.xx.xx.xx:7077

========================================

Exception in thread "main" java.lang.OutOfMemoryError: unable to create new native thread

at java.lang.Thread.start0(Native Method)

at java.lang.Thread.start(Thread.java:714)

at java.util.concurrent.ThreadPoolExecutor.addWorker(ThreadPoolExecutor.java:949)

at java.util.concurrent.ThreadPoolExecutor.execute(ThreadPoolExecutor.java:1360)

at org.apache.spark.rpc.netty.Dispatcher$$anonfun$1.apply$mcVI$sp(Dispatcher.scala:198)

at scala.collection.immutable.Range.foreach$mVc$sp(Range.scala:141)

at org.apache.spark.rpc.netty.Dispatcher.<init>(Dispatcher.scala:197)

at org.apache.spark.rpc.netty.NettyRpcEnv.<init>(NettyRpcEnv.scala:54)

at org.apache.spark.rpc.netty.NettyRpcEnvFactory.create(NettyRpcEnv.scala:447)

at org.apache.spark.rpc.RpcEnv$.create(RpcEnv.scala:53)

at org.apache.spark.deploy.worker.Worker$.startRpcEnvAndEndpoint(Worker.scala:711)

at org.apache.spark.deploy.worker.Worker$.main(Worker.scala:692)

at org.apache.spark.deploy.worker.Worker.main(Worker.scala)

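The root cause of this exception is that too many threads have been created, and the number of threads that can be created is limited. That number can be estimated with the following formula: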

(MaxProcessMemory - JVMMemory - ReservedOsMemory) / (ThreadStackSize) = Number of threads

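MaxProcessMemory: the maximum memory available to a single process

JVMMemory: the memory allocated to the JVM

ReservedOsMemory: the memory reserved for the operating system

ThreadStackSize: the size of each thread's stack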

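In Java, when you create a thread, the virtual machine creates a Thread object in JVM memory and also creates an operating system thread. The memory for that OS thread does not come from JVMMemory but from what is left over in the process (MaxProcessMemory - JVMMemory - ReservedOsMemory).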

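Let us illustrate the formula with a concrete example:

MaxProcessMemory: 2 GB on 32-bit Windows

JVMMemory: 64 MB, the default for a program launched from Eclipse

ReservedOsMemory: typically about 130 MB

ThreadStackSize: about 325 KB, the default stack size on 32-bit JDK 1.6

Substituting these values (all in KB):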

(2*1024*1024-64*1024-130*1024)/325 = 5841

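The formula gives 5841, which is close to the 5602 observed in practice (the difference arises because ReservedOsMemory cannot be determined precisely).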
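The arithmetic above can be reproduced with a short Python sketch. The function name `estimate_max_threads` and its parameter names are illustrative only and are not part of any JVM API; all values are in KB and come from the example, except the 1 GB heap, which is an assumed value added for comparison.

```python
def estimate_max_threads(max_process_kb, jvm_kb, reserved_os_kb, thread_stack_kb):
    """Estimate how many native thread stacks fit in the memory left outside the JVM."""
    available_kb = max_process_kb - jvm_kb - reserved_os_kb
    if available_kb <= 0 or thread_stack_kb <= 0:
        return 0
    return available_kb // thread_stack_kb


# Values from the 32-bit Windows example, all in KB.
MAX_PROCESS_KB = 2 * 1024 * 1024   # 2 GB per process
RESERVED_OS_KB = 130 * 1024        # about 130 MB
THREAD_STACK_KB = 325              # about 325 KB per thread

# 64 MB heap, as in the example.
small_heap = estimate_max_threads(MAX_PROCESS_KB, 64 * 1024, RESERVED_OS_KB, THREAD_STACK_KB)
# 1 GB heap (assumed value, for comparison only).
large_heap = estimate_max_threads(MAX_PROCESS_KB, 1024 * 1024, RESERVED_OS_KB, THREAD_STACK_KB)

print(small_heap)  # 5841
print(large_heap)  # 2816
```

With the larger heap the estimate drops by roughly half, because every KB given to the JVM is a KB that can no longer hold a thread stack.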

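The formula leads to this conclusion: the more memory you give the JVM, the fewer threads you can create, and the more likely you are to hit java.lang.OutOfMemoryError: unable to create new native thread.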

Solutions:

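1, If a bug in the program creates many unneeded threads or fails to release threads promptly, that bug must be fixed; changing parameters will not solve the problem.

2, If the program really does need a large number of threads and the current settings are insufficient, you can increase the number of threads that can be created by adjusting three factors: MaxProcessMemory, JVMMemory, and ThreadStackSize:

a, MaxProcessMemory: use a 64-bit operating system

b, JVMMemory: reduce the memory allocated to the JVM

c, ThreadStackSize: reduce the stack size of each thread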

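In this case, however, the error message above really means that Linux refused to create more processes (Linux counts every thread against the per-user process limit); it does not mean that the system is short of memory. To fix it, Linux must be allowed to create more processes, which means raising the Linux maximum number of processes.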
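Because all workers run as the same user, they share one process/thread budget. The following Python sketch reads the current limit with the standard `resource` module and checks whether a given number of workers would fit. The helper name `fits_within_nproc` and the figures of 150 threads per worker and 100 threads already running are hypothetical, chosen only to illustrate the check; they were not measured on the machine in this post.

```python
import resource


def fits_within_nproc(soft_limit, threads_in_use, workers, threads_per_worker):
    """Return True if starting `workers` JVMs keeps the user at or under the limit."""
    if soft_limit == resource.RLIM_INFINITY:
        return True
    needed = threads_in_use + workers * threads_per_worker
    return needed <= soft_limit


if __name__ == "__main__":
    # RLIMIT_NPROC is what `ulimit -u` reports as "max user processes".
    soft, hard = resource.getrlimit(resource.RLIMIT_NPROC)
    print("soft nproc:", soft, "hard nproc:", hard)
    # Hypothetical figures: 8 workers, 150 threads each, 100 threads already running.
    print(fits_within_nproc(soft, 100, 8, 150))
```

With a limit of 1024 and these assumed figures, the first few workers fit and the later ones do not, which matches the symptom of only some workers starting.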

(1) Check the current Linux maximum number of processes

[hduser@BDHX01 logs]$ ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 4133794

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 10240

cpu time (seconds, -t) unlimited

max user processes (-u) 1024

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited
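To see how close a user already is to the `max user processes` value shown above, the threads owned by that user can be counted from `/proc`. This is a minimal, Linux-only sketch; the function names are illustrative, and it relies on the `Uid:` and `Threads:` fields of `/proc/<pid>/status`.

```python
import os


def parse_status(text):
    """Extract (real_uid, thread_count) from the text of /proc/<pid>/status."""
    uid = None
    threads = None
    for line in text.splitlines():
        if line.startswith("Uid:"):
            uid = int(line.split()[1])       # first number is the real UID
        elif line.startswith("Threads:"):
            threads = int(line.split()[1])
    return uid, threads


def count_user_threads(uid, proc_root="/proc"):
    """Sum the thread counts of all processes owned by `uid`."""
    total = 0
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue                         # not a PID directory
        try:
            with open(os.path.join(proc_root, entry, "status")) as handle:
                owner, threads = parse_status(handle.read())
        except OSError:
            continue                         # the process exited during the scan
        if owner == uid and threads is not None:
            total += threads
    return total


if __name__ == "__main__" and os.path.isdir("/proc"):
    print(count_user_threads(os.getuid()))
```

Running it as the user that starts the workers, before and after a failed start, gives a rough idea of how many threads each worker adds.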

(2) Temporarily raise the maximum number of processes

(After the change, if you switch to the root user and check, max user processes shows 4133794.)

[hduser@BDHX01 logs]$ ulimit -u 65535

[hduser@BDHX01 logs]$ ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 4133794

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 10240

cpu time (seconds, -t) unlimited

max user processes (-u) 65535

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited
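The same temporary change can be made from inside a launcher process instead of the shell. The sketch below uses `resource.setrlimit` from the Python standard library; the helper names are hypothetical. It only ever raises the soft limit and never goes past the hard limit, since an unprivileged process cannot exceed it.

```python
import resource


def target_soft_limit(requested, current_soft, hard):
    """Pick a new soft limit: never lower the current one, never exceed the hard limit."""
    inf = resource.RLIM_INFINITY
    if current_soft == inf:
        return inf                       # already unlimited, nothing to raise
    wanted = max(requested, current_soft)
    if hard == inf:
        return wanted
    return min(wanted, hard)


def raise_soft_nproc(requested):
    """Raise RLIMIT_NPROC for this process and for children started afterwards."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NPROC)
    new_soft = target_soft_limit(requested, soft, hard)
    resource.setrlimit(resource.RLIMIT_NPROC, (new_soft, hard))
    return new_soft
```

A launcher could call `raise_soft_nproc(65535)` before spawning the workers. Like `ulimit -u`, the change applies only to that process and its children and is lost when the process exits.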

(3) Temporarily raise the maximum number of open file handles

[root@BDHX01 java]# ulimit -n 65535

[root@BDHX01 java]# ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 4133794

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 65535

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 10240

cpu time (seconds, -t) unlimited

max user processes (-u) 4133794

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

(4) Permanently raise the Linux maximum number of processes

sudo vi /etc/security/limits.d/90-nproc.conf

* soft nproc 60000

root soft nproc unlimited

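Permanently raise the maximum number of file handles a user can open. The default is 1024, which is usually not enough; the typical symptom is that files cannot be opened (for example, a "Too many open files" error).

Solution: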

sudo vi /etc/security/limits.conf

bdata soft nofile 65536

bdata hard nofile 65536
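When editing these files it is easy to add an entry for the wrong account. The following Python sketch parses limits.conf-style lines and lists the entries that would match a given user. It is a simplified illustration with hypothetical helper names: it handles only exact user names and the `*` wildcard, not `@group` domains, and `SAMPLE` simply repeats the lines shown above.

```python
def parse_limits(text):
    """Parse limits.conf-style lines into (domain, type, item, value) tuples."""
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and blank lines
        if not line:
            continue
        fields = line.split()
        if len(fields) == 4:
            entries.append(tuple(fields))
    return entries


def entries_for_user(entries, user, item):
    """Keep entries for `item` whose domain is the user itself or the `*` wildcard."""
    return [e for e in entries if e[2] == item and e[0] in (user, "*")]


SAMPLE = """
* soft nproc 60000
root soft nproc unlimited
bdata soft nofile 65536
bdata hard nofile 65536
"""

entries = parse_limits(SAMPLE)
print(entries_for_user(entries, "hduser", "nproc"))   # [('*', 'soft', 'nproc', '60000')]
print(entries_for_user(entries, "hduser", "nofile"))  # []
```

In this sample the `nofile` entries name `bdata`, so they would not match the `hduser` account that runs the workers in the transcripts; use the account that actually starts the Spark processes.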

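Another option is to set the limit in the login user's environment, for example with a ulimit command in the user's shell profile.

Reference links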

http://www.cnblogs.com/qifengle-2446/p/6424377.html

http://www.jianshu.com/p/23ee9db2a620