Airline Customer Value Analysis and Customer Churn Analysis

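import pandas as pd
datafile='air_data.csv'  #raw airline member data
resultfile='explore.csv'  #exploration summary
data=pd.read_csv(datafile,encoding='utf-8')
#percentiles=[] and include='all' summarize every column, numeric or not
explore=data.describe(percentiles=[],include='all').T
#count is the number of non-null values, so nulls = total rows - count
explore['null']=len(data)-explore['count']
explore=explore[['null','max','min']]
explore.columns=['Null count','Max','Min']
explore.to_csv(resultfile)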

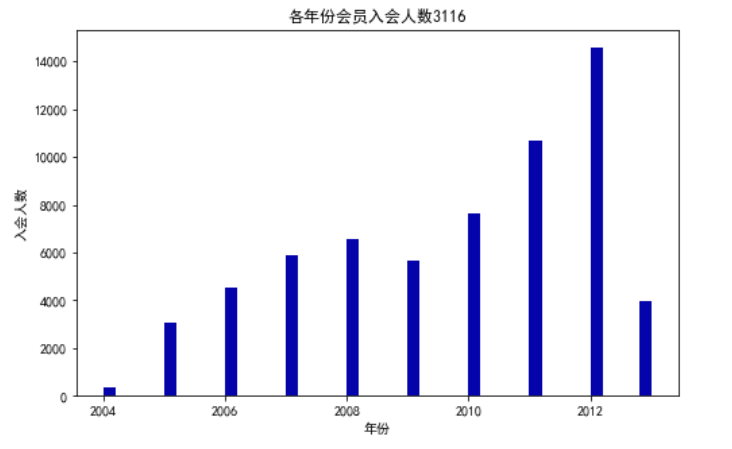

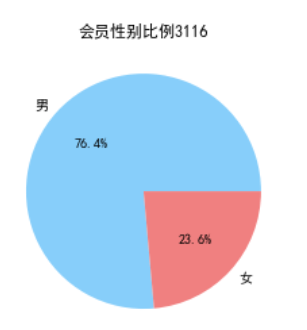

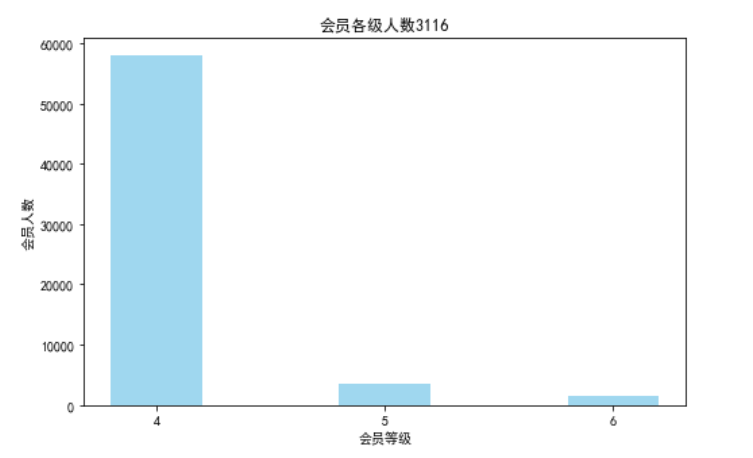

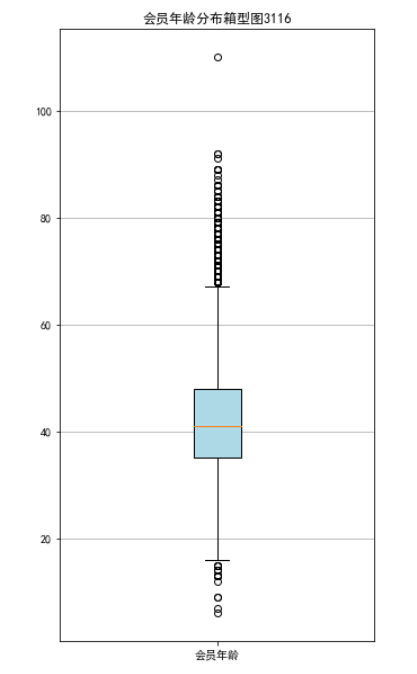
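This minimal sketch cross-checks the null-count logic above on a made-up DataFrame. The column values are invented and do not come from air_data.csv. `len(data) - count` from `describe()` gives the same numbers as `isna().sum()`, which is a more direct way to count missing values per column.

```python
import numpy as np
import pandas as pd

# Invented data: one numeric and one text column, both with gaps
data = pd.DataFrame({
    "AGE": [31, np.nan, 45, 52, np.nan],
    "CITY": ["Guangzhou", None, "Beijing", "Shanghai", "Beijing"],
})

explore = data.describe(percentiles=[], include="all").T
# describe()'s "count" holds the number of non-null values per column
explore["null"] = len(data) - explore["count"]

print(explore["null"])
print(data.isna().sum())
```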

from datetime import datetime
import matplotlib.pyplot as plt
#SimHei lets Matplotlib render Chinese characters; set it before drawing
plt.rcParams['font.sans-serif']=['SimHei']
plt.rcParams['axes.unicode_minus']=False
#number of members joining in each year
ffp=data['FFP_DATE'].apply(lambda x:datetime.strptime(x,'%Y/%m/%d'))
ffp_year=ffp.map(lambda x:x.year)
fig=plt.figure(figsize=(8,5))
plt.hist(ffp_year,bins='auto',color='#0504aa')
plt.xlabel('Year')
plt.ylabel('Members joining')
plt.title('Members joining per year 3116')
plt.show()
plt.close()
#gender ratio ('男' = male, '女' = female in the GENDER column)
gender_counts=data['GENDER'].value_counts()
male=gender_counts['男']
female=gender_counts['女']
fig=plt.figure(figsize=(7,4))
plt.pie([male,female],labels=['Male','Female'],colors=['lightskyblue','lightcoral'],autopct='%1.1f%%')
plt.title('Member gender ratio 3116')
plt.show()
plt.close()
#number of members in tiers 4, 5 and 6
tier_counts=data['FFP_TIER'].value_counts()
lv_four=tier_counts[4]
lv_five=tier_counts[5]
lv_six=tier_counts[6]
fig=plt.figure(figsize=(8,5))
plt.bar(x=range(3),height=[lv_four,lv_five,lv_six],width=0.4,alpha=0.8,color='skyblue')
plt.xticks(range(3),['4','5','6'])
plt.xlabel('Membership tier')
plt.ylabel('Members')
plt.title('Members per tier 3116')
plt.show()
plt.close()
#age distribution; missing ages are dropped
age=data['AGE'].dropna()
age=age.astype('int64')
fig=plt.figure(figsize=(5,10))
#Matplotlib 3.9+ renames labels= to tick_labels=
plt.boxplot(age,patch_artist=True,labels=['Member age'],boxprops={'facecolor':'lightblue'})
plt.title('Box plot of member age 3116')
plt.grid(axis='y')
plt.show()
plt.close()

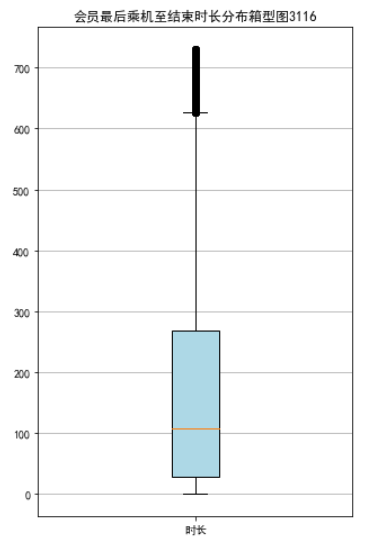

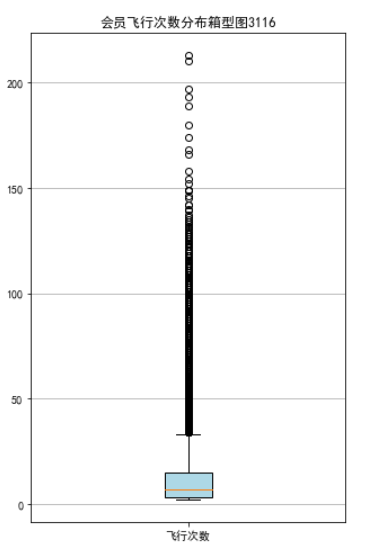

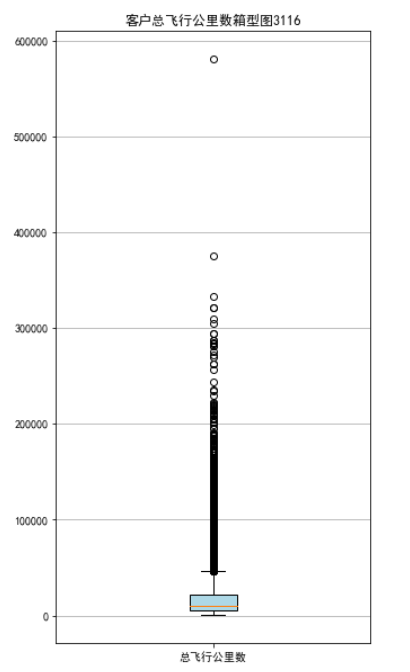

lte=data['LAST_TO_END']
fc=data['FLIGHT_COUNT']
sks=data['SEG_KM_SUM']
#time from a member's last flight to the end of the observation period
fig=plt.figure(figsize=(5,8))
plt.boxplot(lte,patch_artist=True,labels=['Duration'],boxprops={'facecolor':'lightblue'})
plt.title('Box plot of time from last flight to end of period 3116')
plt.grid(axis='y')
plt.show()
plt.close()
#number of flights
fig=plt.figure(figsize=(5,8))
plt.boxplot(fc,patch_artist=True,labels=['Flight count'],boxprops={'facecolor':'lightblue'})
plt.title('Box plot of member flight count 3116')
plt.grid(axis='y')
plt.show()
plt.close()
#total distance flown
fig=plt.figure(figsize=(5,10))
plt.boxplot(sks,patch_artist=True,labels=['Total km'],boxprops={'facecolor':'lightblue'})
plt.title('Box plot of total kilometres flown 3116')
plt.grid(axis='y')
plt.show()
plt.close()

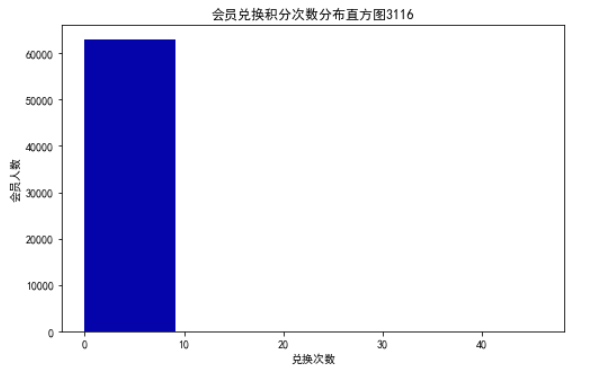

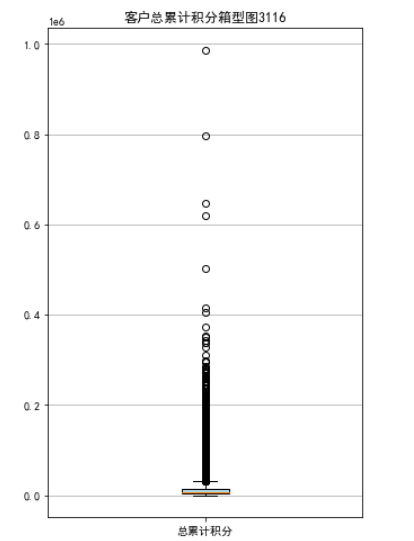
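This sketch puts a number on what these boxplots draw as outliers. It computes the share of values beyond Matplotlib's default whisker limits, Q1 - 1.5·IQR and Q3 + 1.5·IQR. The helper `boxplot_outlier_share` and the sample flight counts are hypothetical and serve only as illustration.

```python
import pandas as pd


def boxplot_outlier_share(s: pd.Series, whis: float = 1.5) -> float:
    """Fraction of values outside [Q1 - whis*IQR, Q3 + whis*IQR], i.e. the
    points a Matplotlib boxplot with its default whis=1.5 draws as fliers."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    lower, upper = q1 - whis * iqr, q3 + whis * iqr
    return float(((s < lower) | (s > upper)).mean())


# Hypothetical flight counts with one extreme member
fc = pd.Series([2, 3, 3, 4, 5, 5, 6, 7, 8, 120])
print(boxplot_outlier_share(fc))
```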

#number of points redemptions per member
ec=data['EXCHANGE_COUNT']
fig=plt.figure(figsize=(8,5))
plt.hist(ec,bins=5,color='#0504aa')
plt.xlabel('Redemptions')
plt.ylabel('Members')
plt.title('Histogram of points redemptions per member 3116')
plt.show()
plt.close()
#total accumulated points
ps=data['Points_Sum']
fig=plt.figure(figsize=(5,8))
plt.boxplot(ps,patch_artist=True,labels=['Total points'],boxprops={'facecolor':'lightblue'})
plt.title('Box plot of total accumulated points 3116')
plt.grid(axis='y')
plt.show()
plt.close()

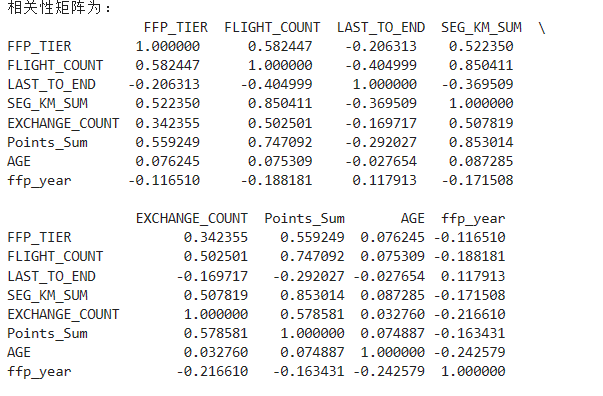

import seaborn as sns
#copy() so that adding columns does not trigger SettingWithCopyWarning
data_corr=data[['FFP_TIER','FLIGHT_COUNT','LAST_TO_END','SEG_KM_SUM','EXCHANGE_COUNT','Points_Sum']].copy()
#missing ages are filled with 0 so the column can be cast to int
age1=data['AGE'].fillna(0)
data_corr['AGE']=age1.astype('int64')
data_corr['ffp_year']=ffp_year
dt_corr=data_corr.corr(method='pearson')
print('Correlation matrix:\n',dt_corr)
#font settings must come before drawing
plt.rcParams['font.sans-serif']=['SimHei']
plt.rcParams['axes.unicode_minus']=False
plt.subplots(figsize=(10,10))
sns.heatmap(dt_corr,annot=True,vmax=1,square=True,cmap='Blues')
plt.title('Heat map 3116')
plt.show()
plt.close()

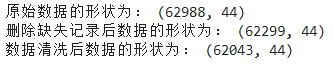
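`DataFrame.corr` already ignores missing values pairwise, so filling missing ages with 0 is not needed for the computation. Filling with 0 also effectively adds members aged 0. The example below uses invented numbers to show how far that can move a Pearson coefficient.

```python
import numpy as np
import pandas as pd

# Invented data: AGE has two missing values
df = pd.DataFrame({
    "AGE": [25, 30, np.nan, 40, 45, np.nan],
    "FLIGHT_COUNT": [2, 4, 5, 8, 10, 3],
})

# pandas drops NaN pairwise when computing the Pearson correlation
r_pairwise = df.corr(method="pearson").loc["AGE", "FLIGHT_COUNT"]

# Filling the gaps with 0 first treats missing ages as age 0
r_filled = df.fillna(0).corr(method="pearson").loc["AGE", "FLIGHT_COUNT"]

print(round(r_pairwise, 3), round(r_filled, 3))
```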

import numpy as np
import pandas as pd
datafile='air_data.csv'
cleanedfile='data_cleaned.csv'
airline_data=pd.read_csv(datafile,encoding='utf-8')
print('Shape of the raw data:',airline_data.shape)
#keep only records where both annual fare totals are present
airline_notnull=airline_data.loc[airline_data['SUM_YR_1'].notnull() & airline_data['SUM_YR_2'].notnull(),:]
print('Shape after dropping records with missing fares:',airline_notnull.shape)
#at least one year with a non-zero fare
index1=airline_notnull['SUM_YR_1']!=0
index2=airline_notnull['SUM_YR_2']!=0
#positive total distance and non-zero average discount
index3=(airline_notnull['SEG_KM_SUM']>0) & (airline_notnull['avg_discount']!=0)
#ages above 100 are treated as invalid
index4=airline_notnull['AGE']>100
airline=airline_notnull[(index1|index2)&index3& ~index4]
print('Shape after cleaning:',airline.shape)
airline.to_csv(cleanedfile)
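The cleaning rules can be checked on a few invented rows that include only the columns the filter reads. Each row is built to break exactly one rule, except rows 0 and 2, which should survive.

```python
import pandas as pd

# Invented records; only the columns the cleaning rules look at
df = pd.DataFrame({
    "SUM_YR_1":     [100.0, 0.0, 0.0,   50.0, 50.0, 80.0,  None],
    "SUM_YR_2":     [0.0,   0.0, 30.0,  20.0, 20.0, 60.0,  10.0],
    "SEG_KM_SUM":   [500,   300, 600,   0,    800,  900,   400],
    "avg_discount": [0.8,   0.7, 0.9,   0.6,  0.0,  0.6,   0.5],
    "AGE":          [35,    40,  28,    45,   50,   110,   33],
})

# Same rules as above: both fares present, at least one non-zero fare,
# positive distance, non-zero discount, age not above 100
notnull = df.loc[df["SUM_YR_1"].notnull() & df["SUM_YR_2"].notnull(), :]
index1 = notnull["SUM_YR_1"] != 0
index2 = notnull["SUM_YR_2"] != 0
index3 = (notnull["SEG_KM_SUM"] > 0) & (notnull["avg_discount"] != 0)
index4 = notnull["AGE"] > 100
cleaned = notnull[(index1 | index2) & index3 & ~index4]

print(cleaned.index.tolist())
```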

import pandas as pd
import numpy as np
cleanedfile='data_cleaned.csv'
airline=pd.read_csv(cleanedfile,encoding='utf-8')
#the six raw columns behind the LRFMC attributes
airline_selection=airline[['FFP_DATE','LOAD_TIME','LAST_TO_END','FLIGHT_COUNT','SEG_KM_SUM','avg_discount']]
print('First 5 rows of the selected attributes:\n',airline_selection.head())

#L: membership length in 30-day months, from FFP_DATE to LOAD_TIME
L=pd.to_datetime(airline_selection['LOAD_TIME'])-pd.to_datetime(airline_selection['FFP_DATE'])
L=(L.dt.days/30).rename('L')
#R, F, M, C are LAST_TO_END, FLIGHT_COUNT, SEG_KM_SUM and avg_discount
airline_features=pd.concat([L,airline_selection.iloc[:,2:]],axis=1)
print('First 5 rows of the LRFMC attributes:\n',airline_features.head())
from sklearn.preprocessing import StandardScaler
#z-score standardization of the five LRFMC attributes
data=StandardScaler().fit_transform(airline_features)
np.savez('airline_scale.npz',data)
print('First 5 rows of the standardized LRFMC attributes:\n',data[:5,:])

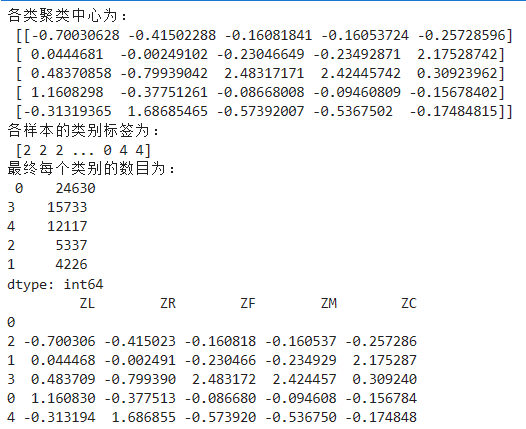
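The L attribute is the number of days between `FFP_DATE` and `LOAD_TIME`, divided by 30. This minimal sketch uses invented dates and assumes the dataset's year/month/day format. It shows that `.dt.days` yields the same whole-day counts as splitting the string form of the timedelta.

```python
import pandas as pd

# Invented dates in year/month/day format
sel = pd.DataFrame({
    "FFP_DATE": ["2006/11/2", "2010/3/15", "2013/1/1"],
    "LOAD_TIME": ["2014/3/31", "2014/3/31", "2014/3/31"],
})
delta = (pd.to_datetime(sel["LOAD_TIME"], format="%Y/%m/%d")
         - pd.to_datetime(sel["FFP_DATE"], format="%Y/%m/%d"))

# Whole days via the string form of the timedelta (e.g. "454 days ...")
days_from_str = delta.astype("str").str.split().str[0].astype("int")
# Whole days read directly from the timedelta
days = delta.dt.days

# L in 30-day months
L = (days / 30).rename("L")
print(L)
```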

import pandas as pd
import numpy as np
from sklearn.cluster import KMeans
airline_scale=np.load('airline_scale.npz')['arr_0']
k=5  #number of customer groups
kmeans_model=KMeans(n_clusters=k,random_state=123)
fit_kmeans=kmeans_model.fit(airline_scale)
kmeans_cc=kmeans_model.cluster_centers_
print('Cluster centers:\n',kmeans_cc)
kmeans_labels=kmeans_model.labels_
print('Cluster label of each sample:\n',kmeans_labels)
r1=pd.Series(kmeans_model.labels_).value_counts()
print('Number of samples in each cluster:\n',r1)
#row i of cluster_centers_ is the center of label i, so the default index 0..k-1 already matches the labels;
#re-indexing with labels_.drop_duplicates() would order them by first appearance and mislabel the rows
cluster_center=pd.DataFrame(kmeans_model.cluster_centers_,columns=['ZL','ZR','ZF','ZM','ZC'])
print(cluster_center)

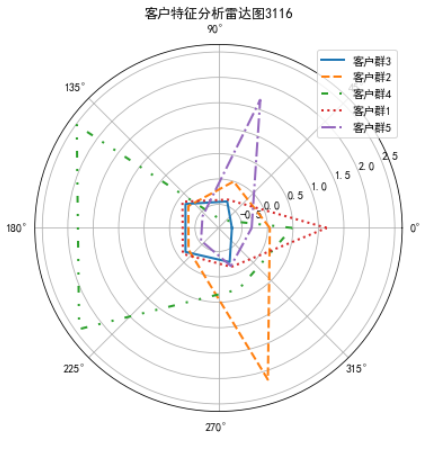
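In scikit-learn, row `i` of `cluster_centers_` is the center of the points with label `i`. By contrast, `drop_duplicates()` on `labels_` returns labels in order of first appearance. This sketch uses synthetic blobs from `make_blobs` as a stand-in for the airline data, and the `n_init=10` setting is an assumption. It checks that each center equals the mean of its own cluster.

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic stand-in for the standardized LRFMC matrix: 5 features, 5 groups
X, _ = make_blobs(n_samples=500, n_features=5, centers=5, random_state=123)
model = KMeans(n_clusters=5, n_init=10, random_state=123).fit(X)

# Default index 0..4 lines up with the labels
centers = pd.DataFrame(model.cluster_centers_, columns=["ZL", "ZR", "ZF", "ZM", "ZC"])
# Mean of the samples carrying each label, sorted by label 0..4
means = pd.DataFrame(X).groupby(model.labels_).mean()

# Labels in order of first appearance, which is what drop_duplicates() yields
first_seen = pd.unique(model.labels_)
print(first_seen)
```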

%matplotlib inline
import matplotlib.pyplot as plt
plt.rcParams['font.sans-serif']=['SimHei']
plt.rcParams['axes.unicode_minus']=False
labels=['ZL','ZR','ZF','ZM','ZC']
legen=['Customer group '+str(i+1) for i in cluster_center.index]
lstype=['-','--',(0,(3,5,1,5,1,5)),':','-.']
#append ZL again as a last column so that every polygon closes
cluster_center=pd.concat([cluster_center,cluster_center[['ZL']]],axis=1)
centers=cluster_center.to_numpy()
n=len(labels)
#one angle per attribute, plus the first angle repeated to close the polygon
angle=np.linspace(0,2*np.pi,n,endpoint=False)
angle=np.concatenate((angle,[angle[0]]))
fig=plt.figure(figsize=(8,6))
ax=fig.add_subplot(111,polar=True)
for i in range(len(centers)):
    ax.plot(angle,centers[i],linestyle=lstype[i],linewidth=2,label=legen[i])
#label the five axes; the repeated closing angle is left out so angles and labels have the same length
ax.set_thetagrids(angle[:-1]*180/np.pi,labels)
plt.title('Customer feature radar chart 3116')
plt.legend()
plt.show()
plt.close()

Customer churn analysis:

import pandas as pd
#read the data
input_file = 'data_cleaned.csv'
#writing .xls needs the xlwt engine, which pandas 2.0 removed; on newer pandas use .xlsx here and in the later steps
output_file = 'selected.xls'
data = pd.read_csv(input_file)
#construct features
data['单位里程票价'] = (data['SUM_YR_1'] + data['SUM_YR_2'])/data['SEG_KM_SUM']  #fare per kilometre
data['单位里程积分'] = (data['P1Y_BP_SUM'] + data['L1Y_BP_SUM'])/data['SEG_KM_SUM']  #points per kilometre
data['飞行次数比例'] = data['L1Y_Flight_Count'] / data['P1Y_Flight_Count']  #ratio of second-year to first-year flight count
#keep only long-standing customers (more than 6 flights)
data = data[data['FLIGHT_COUNT'] > 6]
#select features
data = data[['FFP_TIER','飞行次数比例','AVG_INTERVAL',
             'avg_discount','EXCHANGE_COUNT','Eli_Add_Point_Sum','单位里程票价','单位里程积分']]
#export
data.to_excel(output_file,index=False)

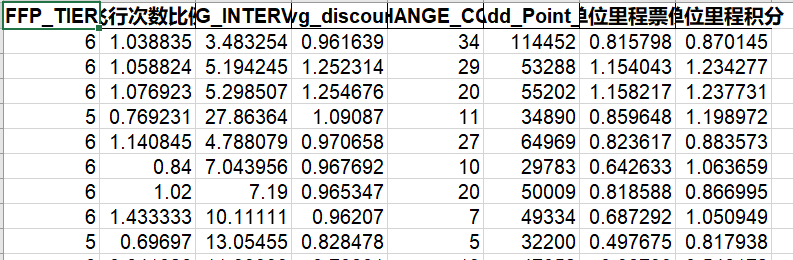

生成的部分数据如下:
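Here is a minimal sketch of the three derived features for three invented members. It also shows a case the code above does not handle explicitly. With pandas, a member with no first-year flights gets an infinite flight-count ratio, and 0/0 would give NaN. The thresholds in the next step label `inf` as not churned, while `NaN` matches no rule.

```python
import numpy as np
import pandas as pd

# Invented member rows with only the columns the churn features need
df = pd.DataFrame({
    "SUM_YR_1": [1200.0, 300.0, 800.0],
    "SUM_YR_2": [800.0, 500.0, 0.0],
    "SEG_KM_SUM": [10000, 4000, 5000],
    "P1Y_BP_SUM": [900, 200, 600],
    "L1Y_BP_SUM": [600, 300, 0],
    "P1Y_Flight_Count": [5, 0, 6],
    "L1Y_Flight_Count": [4, 8, 2],
})

fare_per_km = (df["SUM_YR_1"] + df["SUM_YR_2"]) / df["SEG_KM_SUM"]
points_per_km = (df["P1Y_BP_SUM"] + df["L1Y_BP_SUM"]) / df["SEG_KM_SUM"]
# Second-year / first-year flights; a zero first year gives inf instead of an error
flight_ratio = df["L1Y_Flight_Count"] / df["P1Y_Flight_Count"]

print(fare_per_km.tolist(), points_per_km.tolist(), flight_ratio.tolist())
```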

import pandas as pd
input_file = 'selected.xls'
output_file = 'classfication.xls'
data = pd.read_excel(input_file)
data['客户类型'] = None
for i in range(len(data)):
    ratio = data.loc[i,'飞行次数比例']
    #second-year/first-year flight ratio below 50%: churned
    if ratio < 0.5:
        data.loc[i,'客户类型'] = 0  #0 = churned
    #ratio in [0.5, 0.9): at risk of churning
    elif 0.5 <= ratio < 0.9:
        data.loc[i,'客户类型'] = 1  #1 = at risk
    #ratio of at least 90%: retained
    elif ratio >= 0.9:
        data.loc[i,'客户类型'] = 2  #2 = retained
#export
data.to_excel(output_file,index=False)

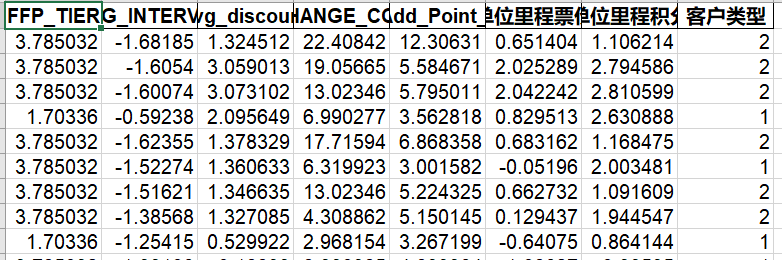

生成的部分数据如下:
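The row-by-row loop can also be written as one vectorized step. This sketch uses `np.select` with the same thresholds on made-up ratios. A ratio below 0.5 gives 0, a ratio in [0.5, 0.9) gives 1, and a ratio of at least 0.9 gives 2. On the real table, the result would be assigned to `data['客户类型']`.

```python
import numpy as np
import pandas as pd

# Made-up flight-count ratios, including inf (no first-year flights) and NaN
ratio = pd.Series([0.2, 0.5, 0.75, 0.9, 1.4, np.inf, np.nan])

conditions = [ratio < 0.5, (ratio >= 0.5) & (ratio < 0.9), ratio >= 0.9]
# 0 = churned, 1 = at risk, 2 = retained; NaN ratios match no rule
labels = np.select(conditions, [0, 1, 2], default=np.nan)

print(labels)
```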

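import pandas as pd
#read the data
input_file = 'classfication.xls'
output_file = 'std.xls'
data = pd.read_excel(input_file)
#drop the flight-count ratio: it defines 客户类型, so keeping it as a feature would leak the label
data = data[['FFP_TIER','AVG_INTERVAL','avg_discount','EXCHANGE_COUNT',
             'Eli_Add_Point_Sum','单位里程票价','单位里程积分','客户类型']].copy()
#z-score standardization of every feature column (FFP_TIER through 单位里程积分); the label 客户类型 is left unchanged
feature_cols = data.columns[:-1]
data[feature_cols] = (data[feature_cols] - data[feature_cols].mean(axis = 0)) / data[feature_cols].std(axis = 0)
#export
data.to_excel(output_file,index=False)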

Part of the generated data is shown below:

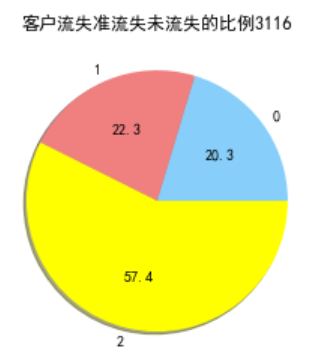
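The standardization here differs from the LRFMC step in one detail. `DataFrame.std` uses the sample standard deviation (`ddof=1`), whereas `StandardScaler` divides by the population standard deviation (`ddof=0`). The two z-scores differ only by the constant factor sqrt((n-1)/n), which is negligible for thousands of rows. Here is a sketch on invented values:

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

x = pd.DataFrame({"AVG_INTERVAL": [10.0, 20.0, 30.0, 40.0]})  # invented values

z_pandas = (x - x.mean()) / x.std()           # pandas std: sample std, ddof=1
z_sklearn = StandardScaler().fit_transform(x)  # population std, ddof=0

n = len(x)
# The two results differ only by the constant factor sqrt((n - 1) / n)
print(z_pandas.to_numpy(), z_sklearn)
```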

Plot a pie chart:

import pandas as pd
import matplotlib.pyplot as plt
datafile='std.xls'
data=pd.read_excel(datafile)
type_counts=data['客户类型'].value_counts()
ls=type_counts.loc[0]   #churned
zls=type_counts.loc[1]  #at risk
wls=type_counts.loc[2]  #retained
fig=plt.figure(figsize=(7,4))
plt.rcParams['font.sans-serif']=['SimHei']
plt.rcParams['axes.unicode_minus']=False
plt.pie([ls,zls,wls],labels=['0 churned','1 at risk','2 retained'],colors=['lightskyblue','lightcoral','yellow'],autopct='%1.1f%%',shadow=True)
plt.title('Share of churned, at-risk and retained customers 3116')
plt.show()
plt.close()

Applying the SVM algorithm:

Determining the parameters:

#the prediction step imports X_test and y_test from a module named model, so this code
#(together with the final model below) is presumably saved as model.py
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC
import joblib
#read the data
input_file = 'std.xls'
output_file = 'loss.pkl'
data = pd.read_excel(input_file)
#split into training and test sets (80/20); without random_state the split changes on every run
X = data.loc[:,:'单位里程积分'].values
y = data.loc[:,'客户类型'].values
X_train,X_test,y_train,y_test = train_test_split(X,y,train_size = 0.8)
#grid search with 5-fold cross-validation for the best SVC parameters
svc = SVC(kernel='rbf')
params = {'gamma':[0.1,1.0,10.0],
          'C':[1.0,10.0,100.0]}
grid_search = GridSearchCV(svc,params,cv=5)
grid_search.fit(X_train,y_train)
print('Test-set accuracy of the best model: {:.2f}'.format(grid_search.score(X_test,y_test)))
print('Best parameters: {}'.format(grid_search.best_params_))

The final choice is C = 1.0 and gamma = 1.0.

The final model:

#retrain on the training set with the chosen parameters and save the model
svc = SVC(kernel='rbf',C=1.0,gamma=1.0)
svc.fit(X_train,y_train)
joblib.dump(svc,output_file)
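`train_test_split` is called above without `random_state`, so each run can pick a different split and possibly different best parameters. This sketch shows a repeatable variant on synthetic data from `make_classification`, standing in for the seven standardized features and three classes. It fixes `random_state`, stratifies the split by class, and takes the refit `best_estimator_` instead of retraining by hand. The seeds and sample sizes are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

# Synthetic 3-class stand-in with 7 features
X, y = make_classification(n_samples=600, n_features=7, n_informative=5,
                           n_classes=3, random_state=0)

# Fixed random_state makes the split repeatable; stratify keeps class shares equal
X_train, X_test, y_train, y_test = train_test_split(
    X, y, train_size=0.8, stratify=y, random_state=0)

params = {"gamma": [0.1, 1.0, 10.0], "C": [1.0, 10.0, 100.0]}
grid_search = GridSearchCV(SVC(kernel="rbf"), params, cv=5)
grid_search.fit(X_train, y_train)

print(grid_search.best_params_, round(grid_search.score(X_test, y_test), 2))
# With the default refit=True, best_estimator_ is already trained on all of X_train
final_model = grid_search.best_estimator_
```

`final_model` could then be saved with `joblib.dump` in the same way as `svc` above.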

Making predictions with the model:

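import joblib
#importing model runs the whole model script again (split, grid search, training, saving loss.pkl)
from model import X_test,y_test
#load the model
model = joblib.load('loss.pkl')
#predict the class of the first test sample and compare it with the true label
y_predict = model.predict([X_test[0]])
print(y_predict)
print(y_test[0])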
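Predicting a single row says little about model quality. This sketch scores the whole test set with `accuracy_score`, `confusion_matrix` and `classification_report`. It trains on synthetic data from `make_classification` so that it runs on its own. With the real data, `X_test`, `y_test` and the model loaded from `loss.pkl` would take their place.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic stand-in for the standardized churn features and labels 0/1/2
X, y = make_classification(n_samples=600, n_features=7, n_informative=5,
                           n_classes=3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.8, random_state=0)
model = SVC(kernel="rbf", C=1.0, gamma=1.0).fit(X_train, y_train)

# predict() accepts the whole 2-D test matrix, not only one row
y_predict = model.predict(X_test)
acc = accuracy_score(y_test, y_predict)
# Rows: true class (0 churned, 1 at risk, 2 retained); columns: predicted class
cm = confusion_matrix(y_test, y_predict, labels=[0, 1, 2])

print(acc)
print(cm)
print(classification_report(y_test, y_predict, labels=[0, 1, 2], zero_division=0))
```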