BP (backpropagation) neural network

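```python
import math

import numpy as np
import pandas as pd
from pandas import DataFrame

# Training data: nine samples with two inputs (x1, x2) and one target (y)
y = [0.14, 0.64, 0.28, 0.33, 0.12, 0.03, 0.02, 0.11, 0.08]
x1 = [0.29, 0.50, 0.00, 0.21, 0.10, 0.06, 0.13, 0.24, 0.28]
x2 = [0.23, 0.62, 0.53, 0.53, 0.33, 0.15, 0.03, 0.23, 0.03]
# Threshold (bias) input. The weight-update rules in train() assume it is
# always -1, so every sample uses -1 here.
theata = [-1] * 9

# Each column of x is one sample: [x1, x2, theata]
x = np.array([x1, x2, theata])

# Weights input -> hidden (3 x 4) and hidden -> output (5 x 1), all initialised to 0.5
W_mid = DataFrame(0.5, index=['input1', 'input2', 'theata'], columns=['mid1', 'mid2', 'mid3', 'mid4'])
W_out = DataFrame(0.5, index=['input1', 'input2', 'input3', 'input4', 'theata'], columns=['a'])

def sigmoid(x):  # activation (squashing) function
    return 1 / (1 + math.exp(-x))
```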
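The training code relies on the identity σ'(z) = σ(z)(1 − σ(z)), which is why `train` multiplies by a `res * (1 - res)` factor instead of differentiating the exponential again. A quick numerical check of that identity with a central finite difference follows; the step size `h` and the test points are arbitrary choices for illustration, and the helper names are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    return 1 / (1 + math.exp(-z))

def sigmoid_grad_numeric(z: float, h: float = 1e-5) -> float:
    # Central finite difference: (s(z + h) - s(z - h)) / (2h)
    return (sigmoid(z + h) - sigmoid(z - h)) / (2 * h)

def sigmoid_grad_identity(z: float) -> float:
    # Closed form used by backpropagation: s(z) * (1 - s(z))
    s = sigmoid(z)
    return s * (1 - s)

for z in [-3.0, -0.5, 0.0, 1.2, 4.0]:
    print(z, sigmoid_grad_numeric(z), sigmoid_grad_identity(z))
```

The two columns agree to many decimal places. At z = 0 the closed form gives exactly 0.25, the largest slope the sigmoid reaches.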

```python
# Train the network on a single sample (one step of per-sample gradient descent)
def train(W_out, W_mid, data, real):
    # Inputs to the hidden layer and inputs to the output layer
    Net_in = DataFrame(data, index=['input1', 'input2', 'theata'], columns=['a'])
    Out_in = DataFrame(0.0, index=['input1', 'input2', 'input3', 'input4', 'theata'], columns=['a'])
    Out_in.loc['theata'] = -1  # threshold input of the output neuron

    # Weight changes for the hidden and output layers
    W_mid_delta = DataFrame(0.0, index=['input1', 'input2', 'theata'], columns=['mid1', 'mid2', 'mid3', 'mid4'])
    W_out_delta = DataFrame(0.0, index=['input1', 'input2', 'input3', 'input4', 'theata'], columns=['a'])

    # Hidden-layer outputs
    for i in range(0, 4):
        Out_in.iloc[i] = sigmoid(sum(W_mid.iloc[:, i] * Net_in.iloc[:, 0]))

    # Output-layer output (the network output)
    res = sigmoid(sum(Out_in.iloc[:, 0] * W_out.iloc[:, 0]))

    # Error
    error = abs(res - real)

    # yita = learning rate
    yita = 0.85
    # Local gradient of the output neuron
    delta_out = res * (1 - res) * (real - res)

    # Output-layer weight changes (the threshold row uses the fixed input -1)
    W_out_delta.iloc[:, 0] = yita * delta_out * Out_in.iloc[:, 0]
    W_out_delta.iloc[4, 0] = -(yita * delta_out)

    # Hidden-layer weight changes, computed with the output weights from
    # before this step's update (standard backpropagation)
    for i in range(0, 4):
        delta_mid = Out_in.iloc[i, 0] * (1 - Out_in.iloc[i, 0]) * W_out.iloc[i, 0] * delta_out
        W_mid_delta.iloc[:, i] = yita * delta_mid * Net_in.iloc[:, 0]
        W_mid_delta.iloc[2, i] = -(yita * delta_mid)

    W_out = W_out + W_out_delta  # update output-layer weights
    W_mid = W_mid + W_mid_delta  # update hidden-layer weights

    return W_out, W_mid, res, error
```
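Written out, the updates in `train` are the delta rule for a network with one hidden layer. With $\eta$ = `yita`, target $t$ = `real`, network output $o$ = `res`, hidden outputs $h_j$ (rows of `Out_in`), inputs $x_i$ (rows of `Net_in`), and $\theta$ denoting the weights on the threshold inputs fixed at $-1$ (the `theata` rows):

$$
\begin{aligned}
\delta_o &= o\,(1-o)\,(t-o), \\
\Delta w_{jo} &= \eta\,\delta_o\,h_j, \qquad \Delta\theta_o = -\eta\,\delta_o, \\
\delta_j &= h_j\,(1-h_j)\,w_{jo}\,\delta_o, \\
\Delta w_{ij} &= \eta\,\delta_j\,x_i, \qquad \Delta\theta_j = -\eta\,\delta_j .
\end{aligned}
$$

In standard backpropagation, the $w_{jo}$ inside $\delta_j$ is the output weight before the current step's update.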

```python
# Forward pass only: compute the network output for one input vector
def reault(data, W_out, W_mid):
    Net_in = DataFrame(data, index=['input1', 'input2', 'theata'], columns=['a'])
    Out_in = DataFrame(0.0, index=['input1', 'input2', 'input3', 'input4', 'theata'], columns=['a'])
    Out_in.loc['theata'] = -1

    # Hidden-layer outputs
    for i in range(0, 4):
        Out_in.iloc[i] = sigmoid(sum(W_mid.iloc[:, i] * Net_in.iloc[:, 0]))

    # Output-layer output (the network output)
    res = sigmoid(sum(Out_in.iloc[:, 0] * W_out.iloc[:, 0]))
    return res

# One pass (epoch) over the nine training samples; x[:, i] is sample i
for i in range(0, 9):
    W_out, W_mid, res, error = train(W_out, W_mid, x[:, i], y[i])
```
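The loop above makes only one pass over the nine samples. Also, because every hidden weight starts at 0.5, the four hidden neurons compute the same output and receive the same update, so they stay identical. A minimal NumPy sketch of the same per-sample delta rule, repeated over many epochs from a small random initialisation, follows. The epoch count (2000), the seed, the uniform(-0.5, 0.5) initialisation, and the helper names `train_epochs` and `predict` are assumptions for illustration, not part of the original.

```python
import numpy as np

# Same data as above: nine samples, inputs (x1, x2), targets y
x1 = np.array([0.29, 0.50, 0.00, 0.21, 0.10, 0.06, 0.13, 0.24, 0.28])
x2 = np.array([0.23, 0.62, 0.53, 0.53, 0.33, 0.15, 0.03, 0.23, 0.03])
y = np.array([0.14, 0.64, 0.28, 0.33, 0.12, 0.03, 0.02, 0.11, 0.08])

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def train_epochs(epochs=2000, eta=0.85, seed=0):
    """Hypothetical helper: per-sample delta-rule training of a 2-4-1 net
    whose threshold inputs are fixed at -1 (epochs, seed, init are illustrative)."""
    rng = np.random.default_rng(seed)
    W_mid = rng.uniform(-0.5, 0.5, size=(3, 4))  # rows: x1, x2, threshold
    W_out = rng.uniform(-0.5, 0.5, size=5)       # rows: h1..h4, threshold
    X = np.column_stack([x1, x2, -np.ones_like(x1)])  # append threshold input -1
    for _ in range(epochs):
        for xi, t in zip(X, y):
            h = np.append(sigmoid(xi @ W_mid), -1.0)  # hidden outputs + threshold input
            o = sigmoid(h @ W_out)
            delta_o = o * (1 - o) * (t - o)
            # Hidden deltas use the output weights before this step's update
            delta_h = h[:4] * (1 - h[:4]) * W_out[:4] * delta_o
            W_out += eta * delta_o * h             # threshold row gets -eta * delta_o
            W_mid += eta * np.outer(xi, delta_h)   # threshold row gets -eta * delta_h
    return W_mid, W_out

def predict(W_mid, W_out, a, b):
    # Hypothetical helper: forward pass for one (x1, x2) pair
    h = np.append(sigmoid(np.array([a, b, -1.0]) @ W_mid), -1.0)
    return float(sigmoid(h @ W_out))

W_mid, W_out = train_epochs()
print(predict(W_mid, W_out, 0.38, 0.49), predict(W_mid, W_out, 0.29, 0.47))
```

Appending the constant −1 to both the input vector and the hidden outputs lets a single matrix product handle the threshold weights, which is exactly how the `theata` rows behave in the DataFrame version.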

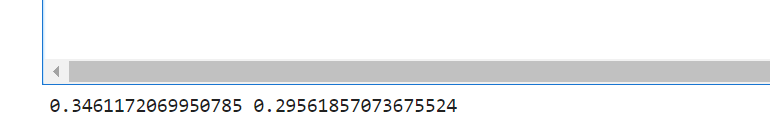

```python
# Predict two new samples; the third component is the threshold input -1
res1 = reault([0.38, 0.49, -1], W_out, W_mid)
res2 = reault([0.29, 0.47, -1], W_out, W_mid)
print(res1, res2)
```

import numpy as np

#定义sigmoid函数

def sigmoid(x, deriv = False):

if(deriv == True):

return x*(1-x)

else:

return 1/(1+np.exp(-x))

#input dataset

X = np.array([[0.29,0.23],

[0.50,0.62],

[0.00,0.53],

[0.21,0.53],

[0.10,0.33],

[0.06,0.15],

[0.13,0.03],

[0.24,0.23],

[0.28,0.03],

[0.38,0.49],

[0.29,0.49]])

#output dataset

y = np.array([[0.14,0.64,0.28,0.33,0.12,0.03,0.02,0.11,0.08,-1,-1]]).T

#初始化权重

weight01 = 2*np.random.random((2,4)) - 1

weight12 = 2*np.random.random((4,2)) - 1

weight23 = 2*np.random.random((2,1)) - 1

#初始化偏倚

b1 = 2*np.random.random((1,4)) - 1

b2 = 2*np.random.random((1,2)) - 1

b3 = 2*np.random.random((1,1)) - 1

bias1=np.array([b1[0],b1[0],b1[0],b1[0],b1[0],b1[0],b1[0],b1[0],b1[0],b1[0],b1[0]])

bias2=np.array([b2[0],b2[0],b2[0],b2[0],b2[0],b2[0],b2[0],b2[0],b2[0],b2[0],b2[0]])

bias3=np.array([b3[0],b3[0],b3[0],b3[0],b3[0],b3[0],b3[0],b3[0],b3[0],b3[0],b3[0]])

#开始训练

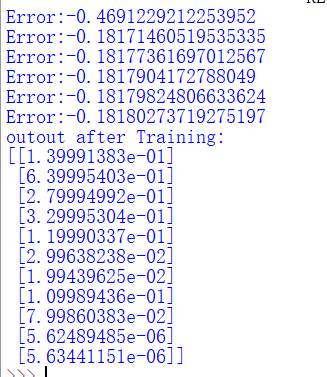

for j in range(60000):

I0 = X

O0=I0

I1=np.dot(O0 ,weight01)+bias1

O1=sigmoid(I1)

I2=np.dot(O1,weight12)+bias2

O2=sigmoid(I2)

I3=np.dot(O2,weight23)+bias3

O3=sigmoid(I3)

f3_error = y-O3

if(j%10000) == 0:

print ("Error:"+str(np.mean(f3_error)))

f3_delta = f3_error*sigmoid(O3,deriv = True)

f2_error = f3_delta.dot(weight23.T)

f2_delta = f2_error*sigmoid(O2,deriv = True)

f1_error = f2_delta.dot(weight12.T)

f1_delta = f1_error*sigmoid(O1,deriv = True)

weight23 += O2.T.dot(f3_delta) #调整权重

weight12 += O1.T.dot(f2_delta)

weight01 += O0.T.dot(f1_delta)

bias3 += f3_delta #调整偏倚

bias2 += f2_delta

bias1 += f1_delta

print ("outout after Training:")

print (O3)
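The backward pass in the second script is written by hand, so a finite-difference gradient check is a cheap way to confirm it. The sketch below rebuilds the 2-4-2-1 forward pass on the nine training samples, computes the gradient of an assumed loss $E = \tfrac12\sum (y - O_3)^2$ with the same delta formulas as the training loop, and compares each analytic gradient entry with a central difference. The loss definition, the seed, and the helper names (`forward`, `loss`, `analytic_grads`, `numeric_grad`) are assumptions for illustration. Under this loss, `weight23 += O2.T.dot(f3_delta)` is a step of size 1 along minus the gradient, which is why the analytic gradients below carry a leading minus sign.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

X = np.array([[0.29, 0.23], [0.50, 0.62], [0.00, 0.53], [0.21, 0.53], [0.10, 0.33],
              [0.06, 0.15], [0.13, 0.03], [0.24, 0.23], [0.28, 0.03]])
y = np.array([[0.14, 0.64, 0.28, 0.33, 0.12, 0.03, 0.02, 0.11, 0.08]]).T

rng = np.random.default_rng(1)
params = {
    "weight01": rng.uniform(-1, 1, (2, 4)), "bias1": rng.uniform(-1, 1, (1, 4)),
    "weight12": rng.uniform(-1, 1, (4, 2)), "bias2": rng.uniform(-1, 1, (1, 2)),
    "weight23": rng.uniform(-1, 1, (2, 1)), "bias3": rng.uniform(-1, 1, (1, 1)),
}

def forward(p):
    O1 = sigmoid(X @ p["weight01"] + p["bias1"])
    O2 = sigmoid(O1 @ p["weight12"] + p["bias2"])
    O3 = sigmoid(O2 @ p["weight23"] + p["bias3"])
    return O1, O2, O3

def loss(p):
    # Assumed loss: E = 1/2 * sum of squared errors over all samples
    return 0.5 * np.sum((y - forward(p)[2]) ** 2)

def analytic_grads(p):
    # Same deltas as the training loop; dE/dW is minus the loop's update
    O1, O2, O3 = forward(p)
    f3_delta = (y - O3) * O3 * (1 - O3)
    f2_delta = (f3_delta @ p["weight23"].T) * O2 * (1 - O2)
    f1_delta = (f2_delta @ p["weight12"].T) * O1 * (1 - O1)
    return {
        "weight23": -(O2.T @ f3_delta), "bias3": -f3_delta.sum(axis=0, keepdims=True),
        "weight12": -(O1.T @ f2_delta), "bias2": -f2_delta.sum(axis=0, keepdims=True),
        "weight01": -(X.T @ f1_delta), "bias1": -f1_delta.sum(axis=0, keepdims=True),
    }

def numeric_grad(p, name, idx, h=1e-6):
    # Central difference on the single entry p[name][idx]
    plus = {k: v.copy() for k, v in p.items()}
    minus = {k: v.copy() for k, v in p.items()}
    plus[name][idx] += h
    minus[name][idx] -= h
    return (loss(plus) - loss(minus)) / (2 * h)

grads = analytic_grads(params)
for name in params:
    print(name, grads[name][0, 0], numeric_grad(params, name, (0, 0)))
```

If the two columns disagree beyond a few significant digits, the hand-written deltas (or the bias update shapes) are the first place to look.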