Analysis of the Layers and Parameters of a Convolutional Neural Network

Reference (original blog post): https://blog.csdn.net/weixin_41457494/article/details/86238443

import torch
from torch.autograd import Variable
import torch.nn as nn
import torch.nn.functional as F

# Neural network parameter analysis
"""
Neural network parameter analysis
"""

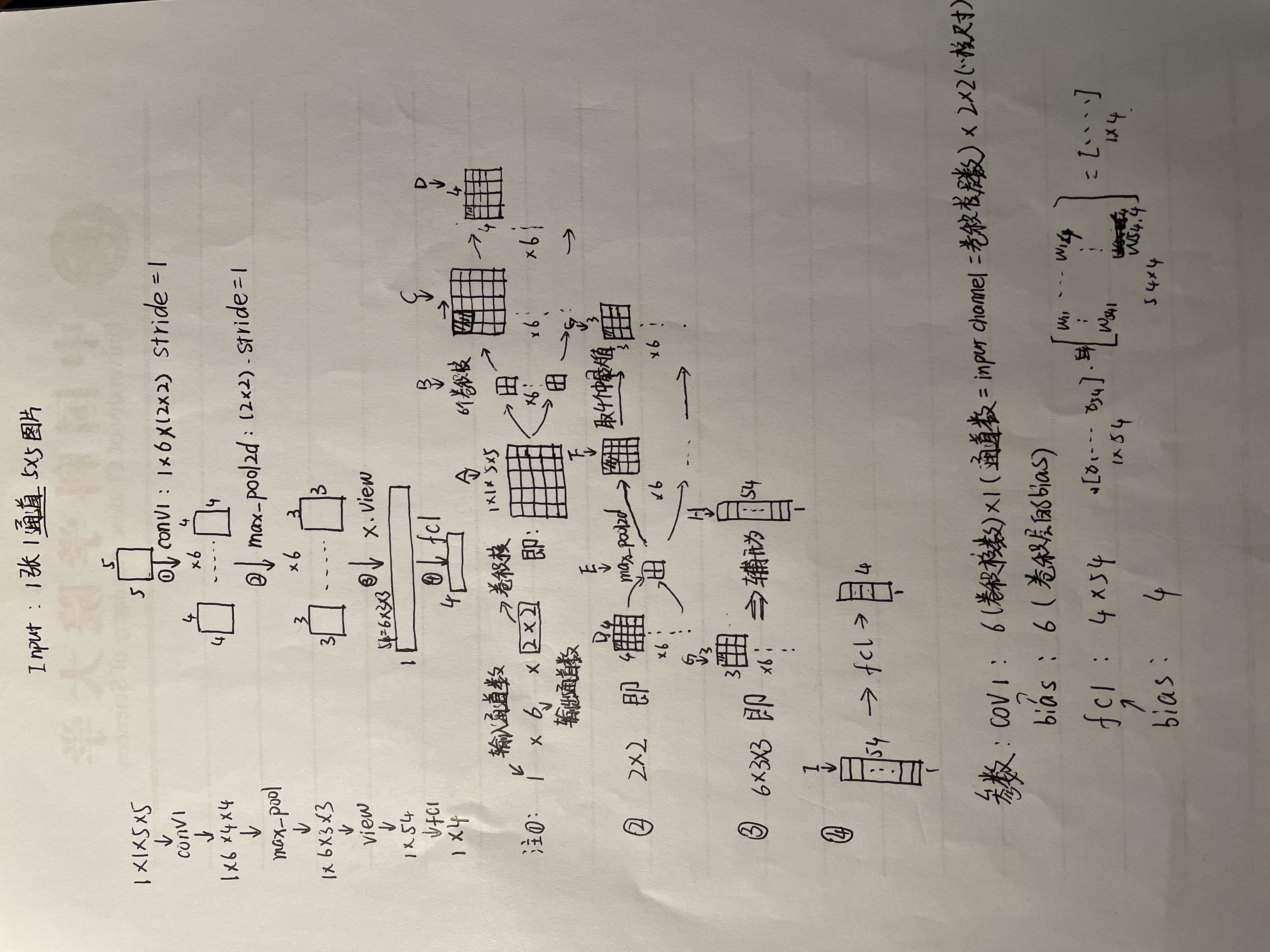

class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        # 1 input image channel, 6 output channels, 2x2 square convolution
        # kernel
        self.conv1 = nn.Conv2d(1, 6, 2)
        # an affine operation: y = Wx + b
        # 6 channels * 3 * 3 spatial positions remain after conv + pooling on a 5x5 input
        self.fc1 = nn.Linear(6 * 3 * 3, 4)

    def forward(self, x):
        print("x.size:{}".format(x.size()))
        # ReLU after the convolution, then max pooling over a (2, 2) window with stride 1
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2), stride=1)
        print('================')
        print("x.size:{}".format(x.size()))
        # Flatten everything except the batch dimension before the fully connected layer
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        return x

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features
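The comment on `fc1` only states the number `6 * 3 * 3`; it comes from tracking the spatial size through `forward`. For a square input of side n, a window of size k with stride s and padding p yields floor((n + 2p - k) / s) + 1 positions per side (assuming dilation 1). `nn.Conv2d` defaults to stride 1 and padding 0, while `F.max_pool2d` defaults its stride to the kernel size, which is why `forward` passes `stride=1` explicitly. The following pure-Python sketch, using a hypothetical helper `out_size` (not part of PyTorch), applies that formula to the 1 x 1 x 5 x 5 input used in the `__main__` block:

```python
def out_size(n, k, s=1, p=0):
    """Output side length of a conv/pool window (hypothetical helper; dilation 1 assumed)."""
    return (n + 2 * p - k) // s + 1

side = 5                    # input is 1 x 1 x 5 x 5
side = out_size(side, k=2)  # conv1: 2x2 kernel, stride 1, no padding -> 4
side = out_size(side, k=2)  # max_pool2d: 2x2 window, stride=1 -> 3
channels = 6                # conv1 has 6 output channels
flat = channels * side * side
print(side, flat)  # 3 54
```

If `stride=1` were dropped from the pooling call, the pool would use stride 2, giving `out_size(4, 2, s=2) == 2` and only 6 * 2 * 2 = 24 flattened features, so `fc1` would fail with a shape-mismatch error.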

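if __name__ == '__main__':
    net = Net()
    # print("net:{}".format(net))
    # print(net)
    params = list(net.parameters())
    print("The network has {} parameter tensors\n".format(len(params)))
    print("Initial conv kernel weights: {}\n".format(params[0]))
    print("Initial conv kernel bias: {}\n".format(params[1]))
    print("Initial fully connected layer weights: {}\n".format(params[2]))
    print("Initial fully connected layer bias: {}\n".format(params[3]))
    print("Size of the conv kernel weights: {}\n".format(params[0].size()))
    print("Size of the conv kernel bias: {}\n".format(params[1].size()))
    print("Size of the fully connected layer weights: {}\n".format(params[2].size()))
    print("Size of the fully connected layer bias: {}\n".format(params[3].size()))
    # Since PyTorch 0.4, Variable is deprecated and simply returns a tensor;
    # torch.randn(1, 1, 5, 5) could be passed to net directly.
    input = Variable(torch.randn(1, 1, 5, 5))  # batch 1, 1 channel, 5x5 image
    output = net(input)
    print("output.size:{}".format(output.size()))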
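The script prints four parameter tensors. Their sizes follow directly from the layer definitions: `nn.Conv2d` stores its weight as (out_channels, in_channels, kH, kW) plus one bias per output channel, and `nn.Linear` stores its weight as (out_features, in_features) plus one bias per output feature (PyTorch computes y = xWᵀ + b). A small sketch that counts the learnable values from those shapes; the `shapes` table is written out by hand here rather than read from the model:

```python
from math import prod

# Shapes of the four parameter tensors, in the order net.parameters() yields them
shapes = {
    "conv1.weight": (6, 1, 2, 2),  # (out_channels, in_channels, kH, kW)
    "conv1.bias": (6,),            # one bias per output channel
    "fc1.weight": (4, 6 * 3 * 3),  # (out_features, in_features)
    "fc1.bias": (4,),              # one bias per output feature
}

# Number of scalar values in each tensor, then the total
counts = {name: prod(shape) for name, shape in shapes.items()}
total = sum(counts.values())
for name, n in counts.items():
    print(f"{name}: {n}")
print("total:", total)  # total: 250
```

With PyTorch available, `sum(p.numel() for p in net.parameters())` should give the same total of 250 for this network.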