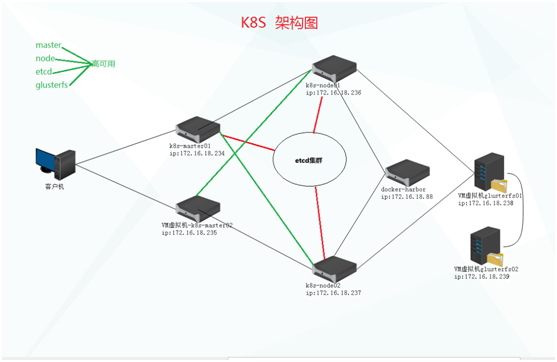

k8s测试集群部署

1. 关闭selinux ,清防火墙规则 安装yum install ntpdate lrzsz unzip git

net-tools

master:

生成密钥免密登陆

ssh-keygen

ssh-copy-id -i .ssh/id_rsa.pub root@172.16.18.235

ssh-copy-id -i .ssh/id_rsa.pub root@172.16.18.236

ssh-copy-id -i .ssh/id_rsa.pub root@172.16.18.237

host解析设置

[root@bogon ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.18.234 k8s-master01

172.16.18.235 k8s-master02

172.16.18.236 k8s-node01

172.16.18.237 k8s-node02

设置所有主机名

hostnamectl set-hostname k8s-master01

hostnamectl set-hostname k8s-master02

hostnamectl set-hostname k8s-node01

hostnamectl set-hostname k8s-node02

hosts文件批量分发

for i in 235 236 237 ;do scp /etc/hosts root@172.16.18.$i:/etc/hosts ;done

所有节点同步时间

ntpdate cn.pool.ntp.org

所有节点目录:

mkdir /opt/kubernetes/{bin,cfg,ssl} -p

node01 node02 安装docker

mkdir /etc/docker

[root@k8s-node01 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": [ "https://registry.docker-cn.com"]

}

scp /etc/docker/daemon.json root@172.16.18.236:/etc/docker/daemon.json

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

yum install docker-ce

# systemctl start docker

# systemctl enable docker

master:

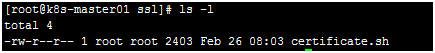

cfssl 生成证书

mkdir ssl

cd ssl

安装证书生成工具 cfssl :

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

rz certificate.sh

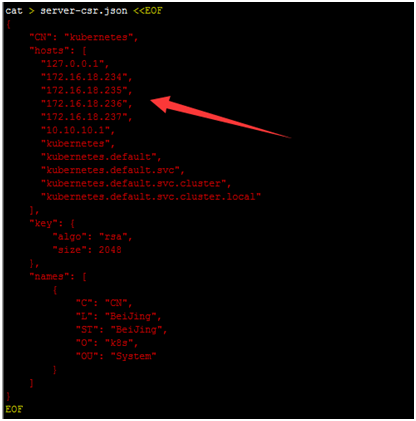

执行一遍注意 脚本里面的内容//注意箭头集群节点IP

ls | grep -v pem | xargs -i rm {}

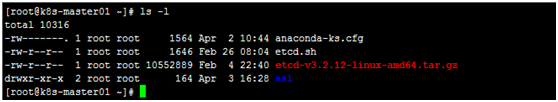

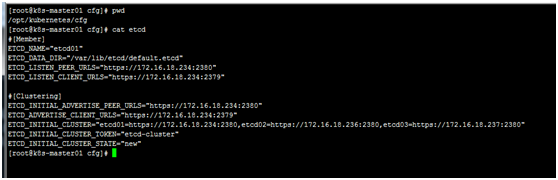

部署etcd集群(234,236,237)

mkdir /opt/kubernetes/{bin,cfg,ssl} -p

[root@k8s-master01 etcd-v3.2.12-linux-amd64]# mv etcd etcdctl /opt/kubernetes/bin/

[root@k8s-master01 bin]# ls

etcd etcdctl

[root@k8s-master01 cfg]# vi /usr/lib/systemd/system/etcd.service

[root@k8s-master01 cfg]# vi /usr/lib/systemd/system/etcd.service

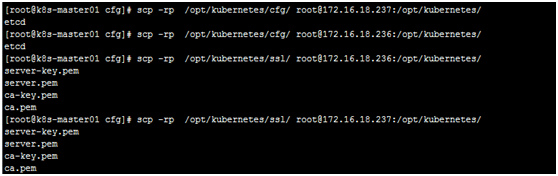

copyMaster的etcd配置文件到node

scp -rp /opt/kubernetes/bin/ root@172.16.18.236:/opt/kubernetes/

scp -rp /opt/kubernetes/bin/ root@172.16.18.237:/opt/kubernetes/

2个node节点操作 修改etcd配置文件

cd /opt/kubernetes/cfg

vi etcd

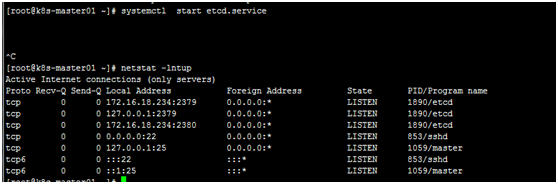

systemctl start etcd

systemctl enable etcd

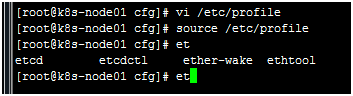

/etc/profile 最后加一条

PATH=$PATH:/opt/kubernetes/bin

健康检查 在node /opt/kubernetes/ssl/ 执行

etcdctl \

--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \

--endpoints="https://172.16.18.234:2379,https://172.16.18.236:2379,https://172.16.18.237:2379" \

cluster-health

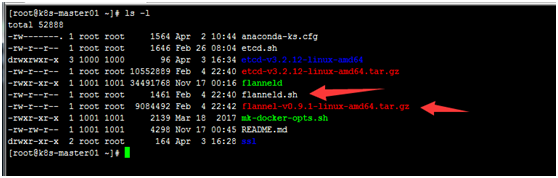

部署 Flannel 网络

master操作:

上传软件

node操作:

[root@k8s-node01 bin]# vi /opt/kubernetes/cfg/flanneld

[root@k8s-node01 bin]# cat /opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=https://172.16.18.234:2379,https://172.16.18.236:2379,https://172.16.18.237:2379 -etcd-cafile=/opt/kubernetes/ssl/ca.pem -etcd-certfile=/opt/kubernetes/ssl/server.pem -etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"

在node上执行

在ssl目录下执行

/opt/kubernetes/bin/etcdctl \

--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \

--endpoints="https://172.16.18.234:2379,https://172.16.18.236:2379,https://172.16.18.237:2379" \

set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

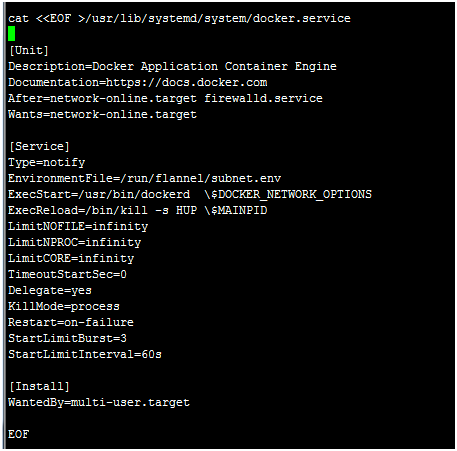

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

systemctl restart docker

[root@k8s-node01 system]# scp -rp /usr/lib/systemd/system/flanneld.service root@172.16.18.237:/usr/lib/systemd/system/

[root@k8s-node01 system]# scp -rp /opt/kubernetes/cfg/flanneld root@172.16.18.237:/opt/kubernetes/cfg/

在执行一遍 上述之前的脚本文件docker

ping互相ping通docker 0

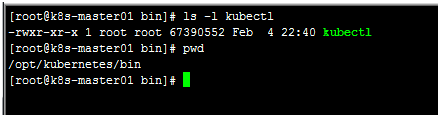

创建Node节点kubeconfig文件

cp /opt/kubernetes/bin/kubectl /usr/local/bin/

# 创建 TLS Bootstrapping Token

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

# 创建kubelet bootstrapping kubeconfig

export KUBE_APISERVER="https://172.16.18.234:6443"

# 设置集群参数 (进到root/ssl/)

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# 设置客户端认证参数

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

# 设置上下文参数

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# 设置默认上下文

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# 创建kube-proxy kubeconfig文件

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

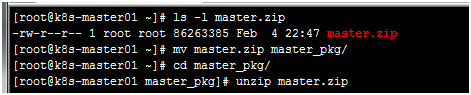

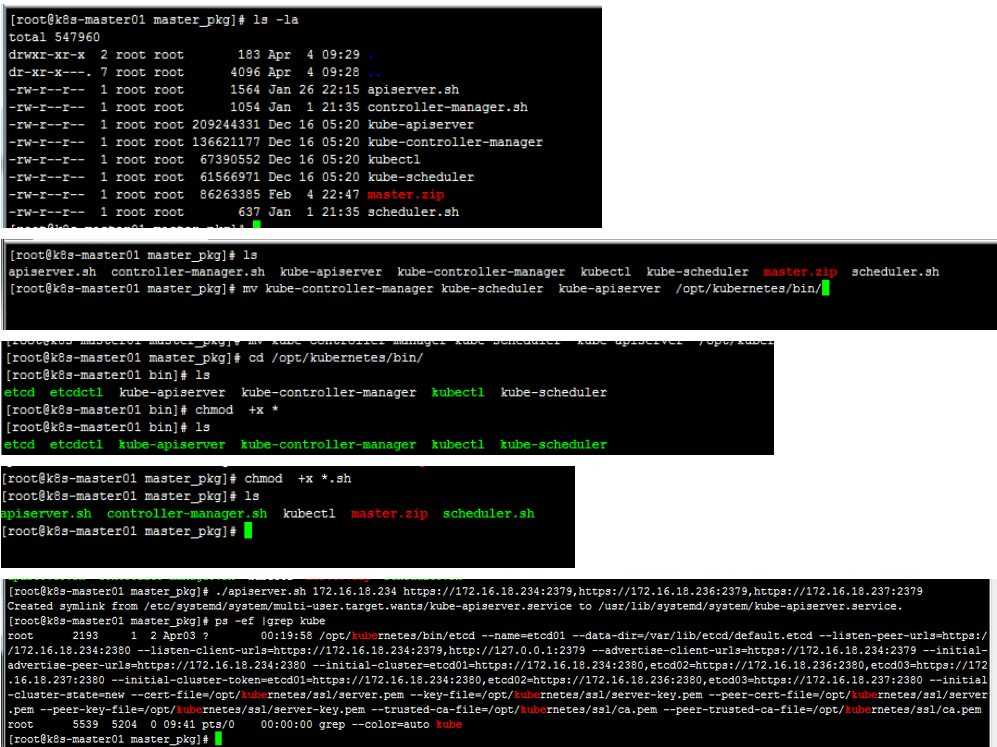

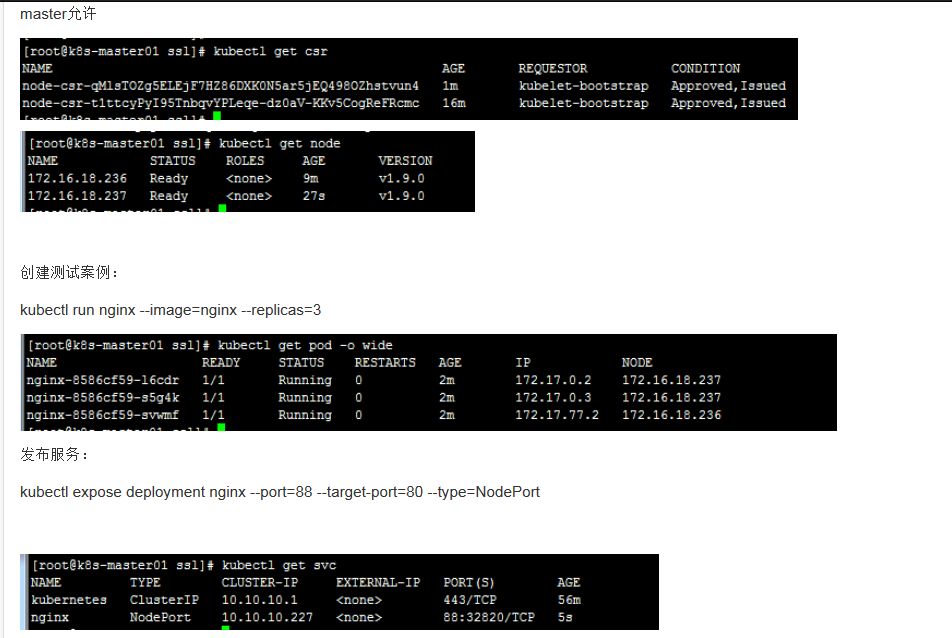

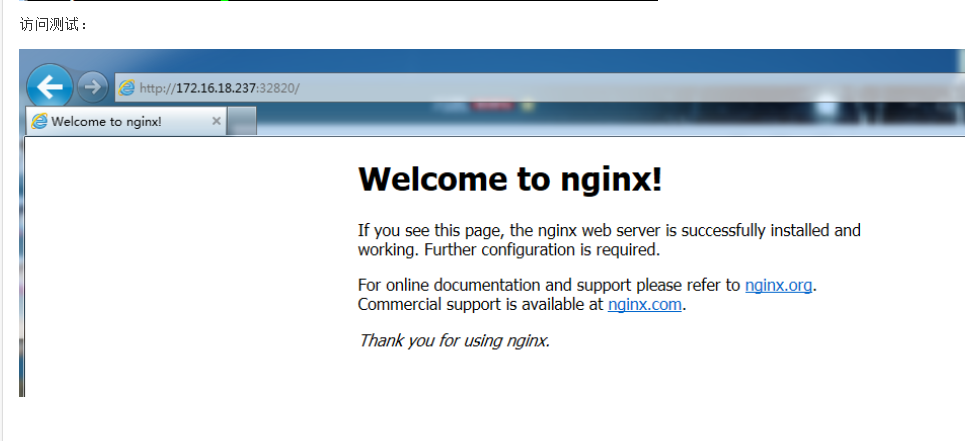

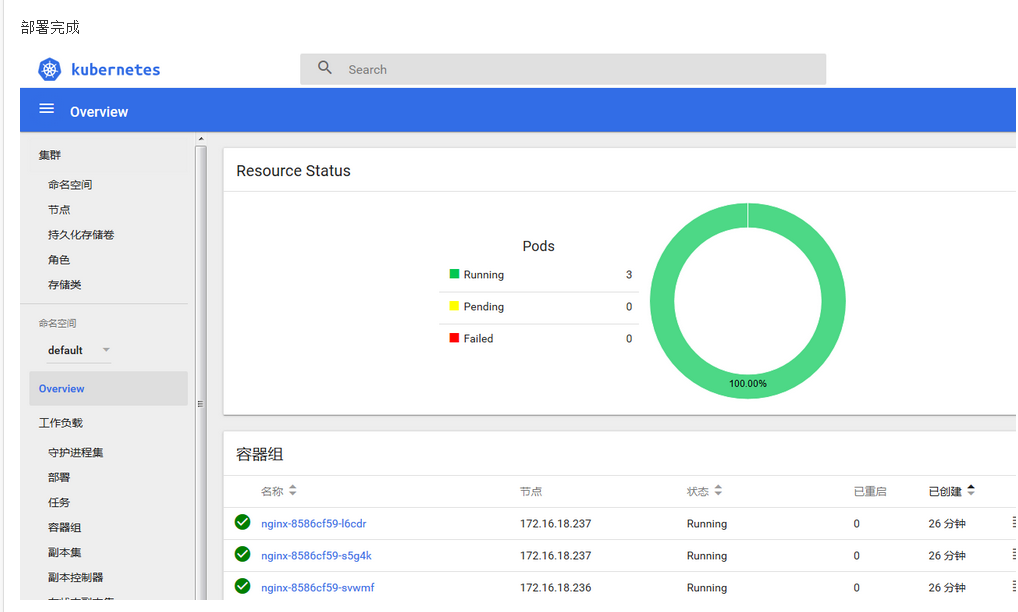

部署master

unzip master.zip

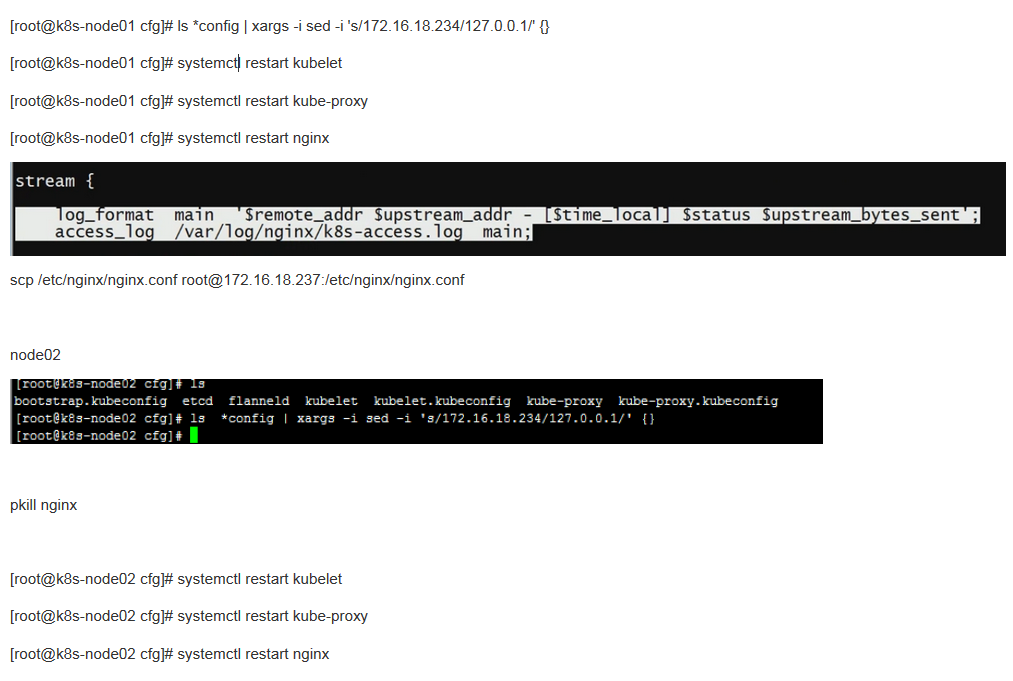

COPY到另个node节点

scp -rp /opt/kubernetes/bin root@172.16.18.237:/opt/kubernetes

scp -rp /opt/kubernetes/cfg root@172.16.18.237:/opt/kubernetes

scp /usr/lib/systemd/system/kubelet.service root@172.16.18.237:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kube-proxy.service root@172.16.18.237:/usr/lib/systemd/system/

node02

vi /opt/kubernetes/cfg/kubelet

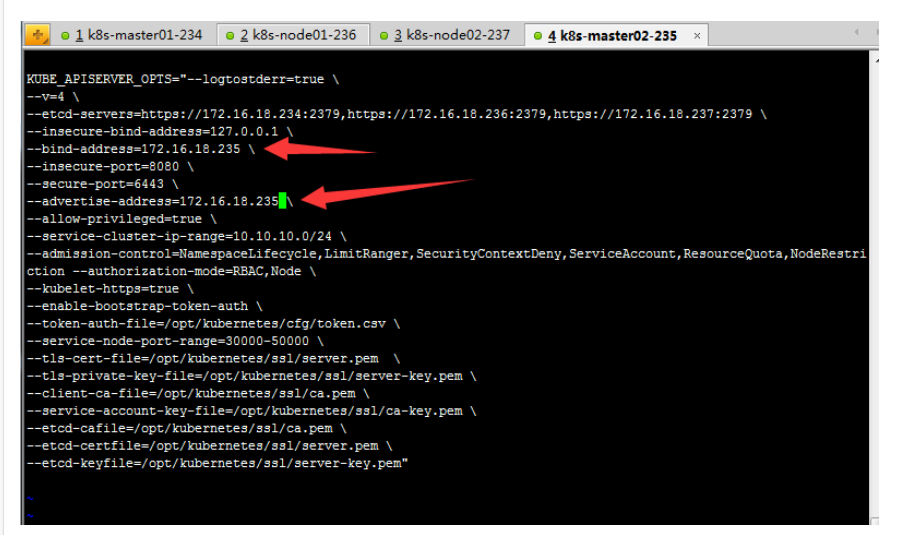

master 高可用

[root@k8s-master01 /]# scp -rp /opt/kubernetes/ root@172.16.18.235:/opt/

[root@k8s-master01 /]#

scp /usr/lib/systemd/system/{kube-apiserver,kube-scheduler,kube-controller-manager}.service root@172.16.18.235:/usr/lib/systemd/system

[root@k8s-master02 cfg]# vi kube-apiserver

2个node安装nginx 配置负载均衡

cat > /etc/yum.repos.d/nginx.repo (( EOF

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/\$basearch/

gpgcheck=0

EOF

yum install nginx

[root@k8s-node01 /]# vi /etc/nginx/nginx.conf

user nginx;

worker_processes 1;

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

##########################

stream {

upstream k8s-apiserver {

server 172.16.18.234:6443;

server 172.16.18.235:6443;

}

server{

listen 127.0.0.1:6443;

proxy_pass k8s-apiserver;

}

}

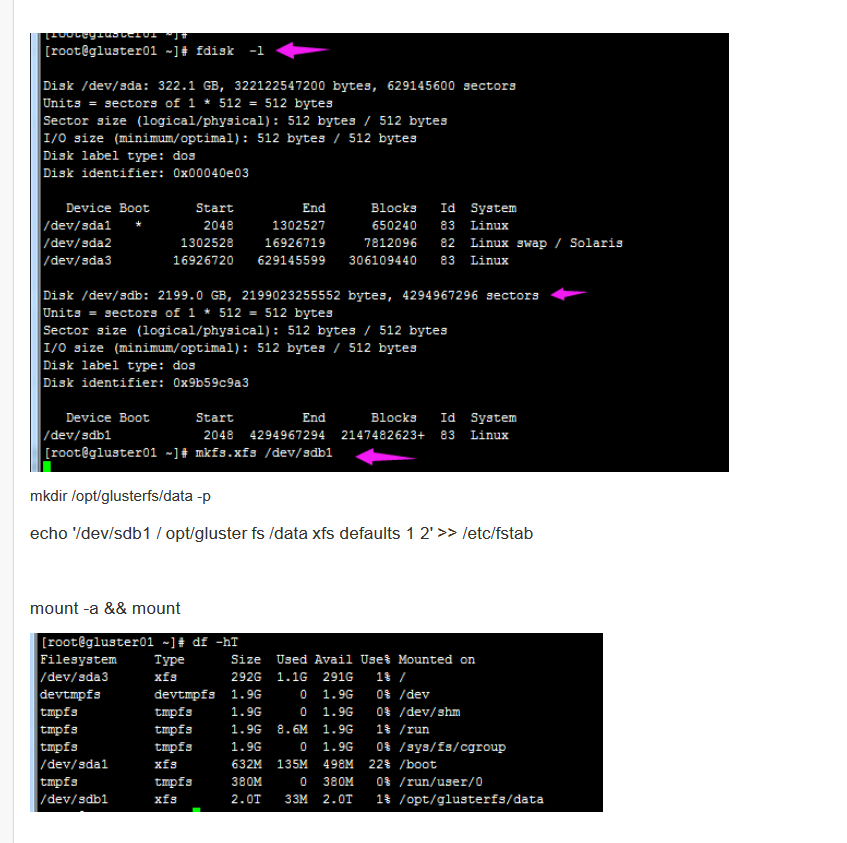

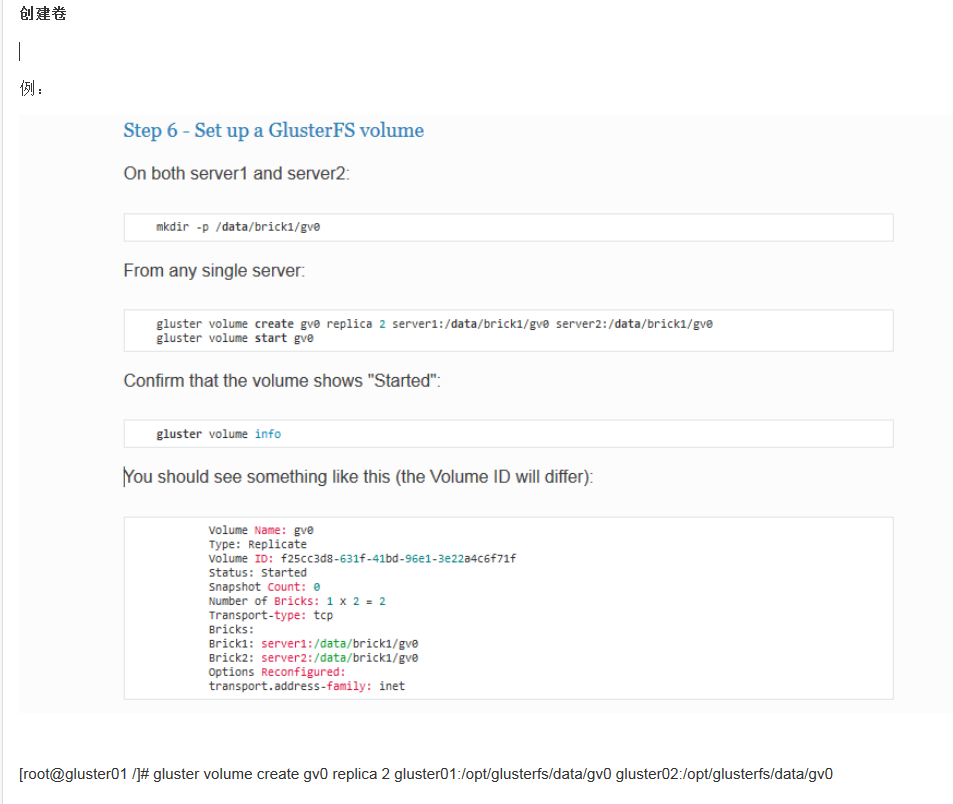

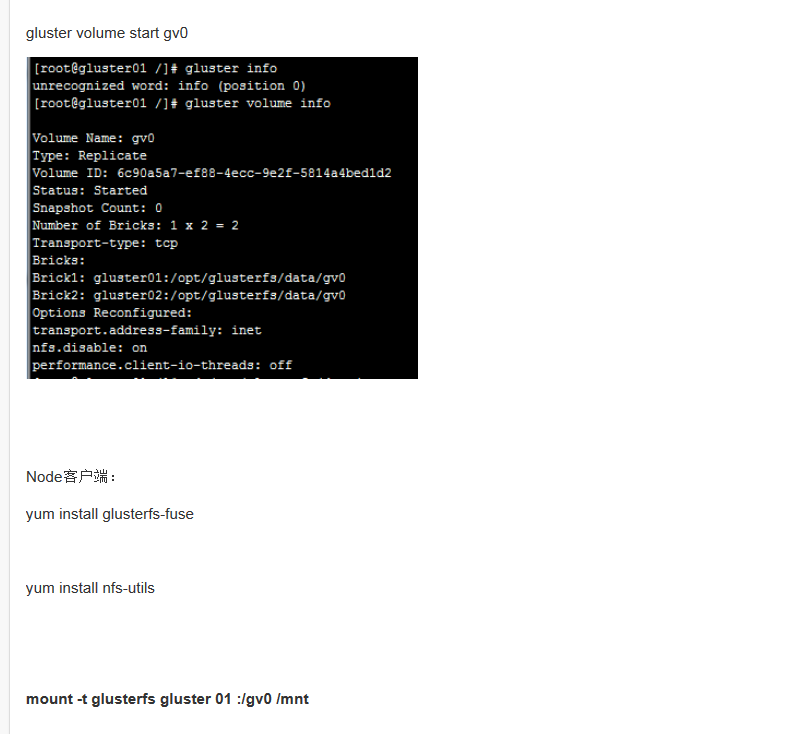

安装存储 glusterfs

2T硬盘 双节点高可用

18.238,18.239

yum install wget ntpdate lrzsz unzip git net-tools vim

ntpdate cn.pool.ntp.org

cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum clean all

yum makecache

浙公网安备 33010602011771号

浙公网安备 33010602011771号