[MATLAB Deep Learning] Convolutional Neural Networks

Convolutional Neural Networks

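Deep neural networks matter because they open the door to complex nonlinear models and to a systematic way of processing knowledge hierarchically. Many hand-crafted techniques have been developed for extracting image features: SIFT, HoG, Textons, Spin image, RIFT, GLOH, and others. The distinguishing advantage of a convolutional neural network is that it learns to extract features automatically.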

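The convolution layer generates new images called feature maps, which accentuate the distinctive features of the original image. The values of the convolution filter matrices are determined by the training process.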

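Pooling is a typical technique that is also used in many other image-processing methods. The pooling layer compensates, to some extent, for off-center and tilted objects. Because pooling reduces the image size, it also helps lighten the computational load and prevent overfitting.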

MNIST Example

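MNIST provides 60,000 images for training and 10,000 images for testing, each a 28×28 black-and-white (grayscale) image. This example uses only the 10,000 images of the `t10k` file, split 8:2 into training and test sets. The network structure is shown in the table below: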

| Layer | Remark | Activation function |
| --- | --- | --- |
| Input | 28×28 nodes | |
| Convolution | 20 convolution filters (9×9) | ReLU |
| Pooling | 1 mean pooling (2×2) | |
| Hidden | 100 nodes | ReLU |
| Output | 10 nodes | Softmax |
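
The layer sizes in the table determine the array shapes that appear in the code comments further down. The following is a minimal sketch of that arithmetic only, assuming a 'valid' convolution (no padding) and non-overlapping 2×2 pooling; the variable names are illustrative and do not appear in the original code.

```matlab
% Shape walk-through for the network in the table (no training involved).
inputSize  = 28;    % 28x28 input image
filterSize = 9;     % 9x9 convolution filters
numFilters = 20;    % number of convolution filters
poolSize   = 2;     % 2x2 mean pooling

convSize = inputSize - filterSize + 1;       % 'valid' convolution output side
poolOut  = convSize / poolSize;              % side length after pooling
flatLen  = poolOut * poolOut * numFilters;   % inputs to the hidden layer

fprintf('conv: %dx%dx%d, pool: %dx%dx%d, flattened: %d\n', ...
    convSize, convSize, numFilters, poolOut, poolOut, numFilters, flatLen);
```

The flattened length is the number of columns of the pooling-to-hidden weight matrix, which is why W5 is created as a 100×2000 matrix in the script below.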

The training function is defined as follows:

function [W1, W5, Wo] = MnistConv(W1, W5, Wo, X, D)

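% W1, W5 and Wo are the convolution filter matrix, the pooling-to-hidden weight matrix and the hidden-to-output weight matrix

% Trains the network with backpropagation and the minibatch method

% Uses momentum to adjust the weights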

alpha = 0.01;

beta = 0.95;

momentum1 = zeros(size(W1));

momentum5 = zeros(size(W5));

momentumo = zeros(size(Wo));

N = length(D);

% minibatch

bsize = 100;

blist = 1:bsize:(N-bsize+1);

% One epoch loop

%

for batch = 1:length(blist)

dW1 = zeros(size(W1));

dW5 = zeros(size(W5));

dWo = zeros(size(Wo));

% Mini-batch loop

% Sum the 100 weight updates; they are averaged after this loop

begin = blist(batch);

for k = begin:begin+bsize-1

% Forward pass = inference

%

x = X(:, :, k); % Input, 28x28

y1 = Conv(x, W1); % Convolution, 20x20x20

y2 = ReLU(y1); %

y3 = Pool(y2); % Pooling, 10x10x20

y4 = reshape(y3, [], 1); % Flatten, 2000x1

v5 = W5*y4; % ReLU, 100x1

y5 = ReLU(v5); %

v = Wo*y5; % Softmax, 10x1

y = Softmax(v); %

% One-hot encoding

%

d = zeros(10, 1);

d(sub2ind(size(d), D(k), 1)) = 1;

% Backpropagation

%

e = d - y; % Output layer

delta = e;

e5 = Wo' * delta; % Hidden(ReLU) layer

delta5 = (y5 > 0) .* e5;

e4 = W5' * delta5; % Pooling layer

e3 = reshape(e4, size(y3));

e2 = zeros(size(y2));

W3 = ones(size(y2)) / (2*2);

for c = 1:20

e2(:, :, c) = kron(e3(:, :, c), ones([2 2])) .* W3(:, :, c);

end

delta2 = (y2 > 0) .* e2; % ReLU layer

delta1_x = zeros(size(W1)); % Convolutional layer

for c = 1:20

delta1_x(:, :, c) = conv2(x(:, :), rot90(delta2(:, :, c), 2), 'valid');

end

dW1 = dW1 + delta1_x;

dW5 = dW5 + delta5*y4';

dWo = dWo + delta *y5';

end

% Update weights

%

dW1 = dW1 / bsize;

dW5 = dW5 / bsize;

dWo = dWo / bsize;

% Momentum

momentum1 = alpha*dW1 + beta*momentum1;

W1 = W1 + momentum1;

momentum5 = alpha*dW5 + beta*momentum5;

W5 = W5 + momentum5;

momentumo = alpha*dWo + beta*momentumo;

Wo = Wo + momentumo;

end

end
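
The momentum update at the end of the training function accumulates past updates, so the effective step can grow well beyond `alpha`. As a rough illustration only, assuming a constant averaged update (`dW = 1` is a made-up value, not taken from training), the momentum term approaches the geometric-series limit `alpha / (1 - beta)`:

```matlab
% Momentum with the same alpha and beta as MnistConv, applied to a scalar.
alpha = 0.01;
beta  = 0.95;
dW    = 1;          % hypothetical constant averaged weight update

momentum = 0;
for step = 1:500
    momentum = alpha*dW + beta*momentum;   % same rule as in MnistConv
end

limit = alpha / (1 - beta);                % geometric-series limit
fprintf('momentum after 500 steps: %.6f (limit %.6f)\n', momentum, limit);
```

With these settings the limit is 20 times `alpha`, which is one reason a small learning rate such as 0.01 is paired with a large `beta`.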

The convolution function is defined as:

function y = Conv(x, W)

% Takes the input image and the convolution filter matrix; returns the feature maps

%

[wrow, wcol, numFilters] = size(W);

[xrow, xcol, ~ ] = size(x);

yrow = xrow - wrow + 1;

ycol = xcol - wcol + 1;

y = zeros(yrow, ycol, numFilters);

for k = 1:numFilters

filter = W(:, :, k);

filter = rot90(squeeze(filter), 2);

y(:, :, k) = conv2(x, filter, 'valid');

end

end
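
`Conv` rotates each filter by 180 degrees before calling `conv2`. Because `conv2` itself flips the kernel, the two flips cancel and the result is a plain sliding-window correlation of the filter with the image. The sketch below checks this on small made-up arrays (`x` and `w` are illustrative values, not MNIST data or trained filters):

```matlab
x = magic(5);        % hypothetical 5x5 "image"
w = [1 0; 0 -1];     % hypothetical 2x2 filter

% Same call pattern as in Conv.
viaConv2 = conv2(x, rot90(w, 2), 'valid');

% Direct sliding-window correlation for comparison.
[wr, wc] = size(w);
direct = zeros(size(x, 1) - wr + 1, size(x, 2) - wc + 1);
for i = 1:size(direct, 1)
    for j = 1:size(direct, 2)
        patch = x(i:i+wr-1, j:j+wc-1);
        direct(i, j) = sum(sum(patch .* w));
    end
end

fprintf('max abs difference: %g\n', max(abs(viaConv2(:) - direct(:))));
```

The output of a 'valid' call has side length input minus filter plus one, here 4, which matches the 28 - 9 + 1 = 20 used in the network.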

The pooling function is defined as:

function y = Pool(x)

%

% 2x2 mean pooling

%

%

[xrow, xcol, numFilters] = size(x);

y = zeros(xrow/2, xcol/2, numFilters);

for k = 1:numFilters

filter = ones(2) / (2*2); % for mean

image = conv2(x(:, :, k), filter, 'valid');

y(:, :, k) = image(1:2:end, 1:2:end);

end

end
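
To see what `Pool` and the matching backpropagation step in `MnistConv` do, the following sketch applies the same operations to a single 4×4 channel. The input values and the error matrix `e3` are made up for illustration, and the pooling is inlined so the snippet runs on its own.

```matlab
% Forward: 2x2 mean pooling, as in Pool.
x = [ 1  2  5  6;
      3  4  7  8;
      9 10 13 14;
     11 12 15 16];
s = conv2(x, ones(2) / 4, 'valid');   % mean of every 2x2 window
y = s(1:2:end, 1:2:end);              % keep the non-overlapping windows

% Backward: spread each pooled error evenly over its 2x2 window,
% as MnistConv does with kron and W3.
e3 = [4 8; 12 16];                    % hypothetical error at the pooling output
e2 = kron(e3, ones(2)) .* (ones(4) / 4);

disp(y);
disp(e2);
```

Each entry of `e3` is divided equally among the four inputs that produced it, so the total error is preserved when it is passed back through the pooling layer.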

The training and test script is as follows:

clear all

Images = loadMNISTImages('./MNIST/t10k-images.idx3-ubyte');

Images = reshape(Images, 28, 28, []);

Labels = loadMNISTLabels('./MNIST/t10k-labels.idx1-ubyte');

Labels(Labels == 0) = 10; % 0 --> 10

rng(1);

% Learning

%

W1 = 1e-2*randn([9 9 20]);

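W5 = (2*rand(100, 2000) - 1) * sqrt(6) / sqrt(360 + 2000); % note: 360 does not match the 100 hidden nodes; left unchanged so the reported result still applies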

Wo = (2*rand( 10, 100) - 1) * sqrt(6) / sqrt( 10 + 100);

X = Images(:, :, 1:8000);

D = Labels(1:8000);

for epoch = 1:3

epoch

[W1, W5, Wo] = MnistConv(W1, W5, Wo, X, D);

end

save('MnistConv.mat');

% Test

%

X = Images(:, :, 8001:10000);

D = Labels(8001:10000);

acc = 0;

N = length(D);

for k = 1:N

x = X(:, :, k); % Input, 28x28

y1 = Conv(x, W1); % Convolution, 20x20x20

y2 = ReLU(y1); %

y3 = Pool(y2); % Pool, 10x10x20

y4 = reshape(y3, [], 1); % 2000

v5 = W5*y4; % ReLU, 100

y5 = ReLU(v5); %

v = Wo*y5; % Softmax, 10

y = Softmax(v); %

[~, i] = max(y);

if i == D(k)

acc = acc + 1;

end

end

acc = acc / N;

fprintf('Accuracy is %f\n', acc);

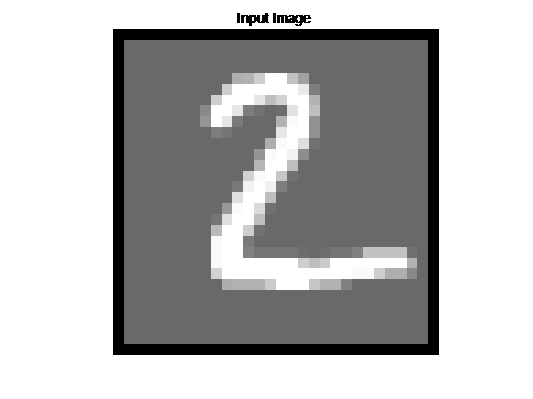

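After three epochs, the script prints `Accuracy is 0.946500` and saves the file MnistConv.mat. The following code examines how an image actually evolves through each layer of the convolutional neural network: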

clear all

load('MnistConv.mat')

k = 2;

x = X(:, :, k);

y1 = Conv(x, W1); % Convolution, 20x20x20

y2 = ReLU(y1); %

y3 = Pool(y2); % Pool, 10x10x20

y4 = reshape(y3, [], 1); % 2000

v5 = W5*y4; % ReLU, 100

y5 = ReLU(v5); %

v = Wo*y5; % Softmax, 10

y = Softmax(v); %

figure;

display_network(x(:));

title('Input Image')

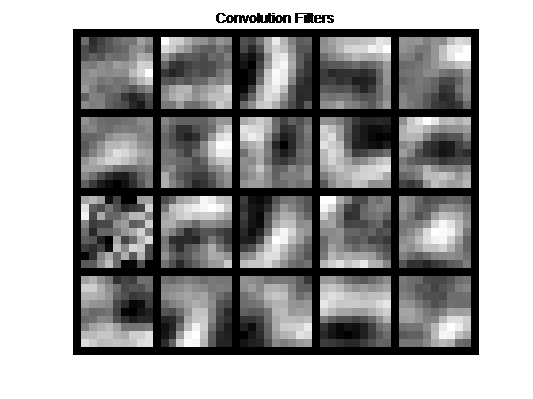

convFilters = zeros(9*9, 20);

for i = 1:20

filter = W1(:, :, i);

convFilters(:, i) = filter(:);

end

figure

display_network(convFilters);

title('Convolution Filters')

fList = zeros(20*20, 20);

for i = 1:20

feature = y1(:, :, i);

fList(:, i) = feature(:);

end

figure

display_network(fList);

title('Features [Convolution]')

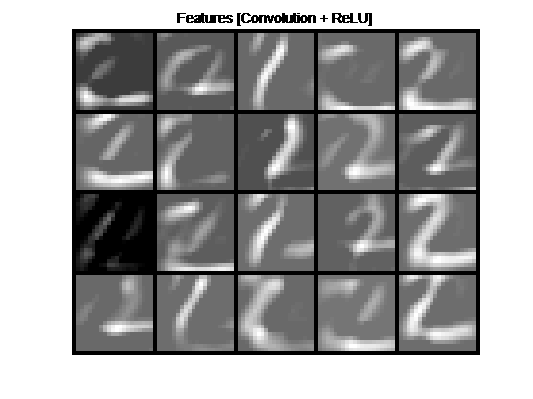

fList = zeros(20*20, 20);

for i = 1:20

feature = y2(:, :, i);

fList(:, i) = feature(:);

end

figure

display_network(fList);

title('Features [Convolution + ReLU]')

fList = zeros(10*10, 20);

for i = 1:20

feature = y3(:, :, i);

fList(:, i) = feature(:);

end

figure

display_network(fList);

title('Features [Convolution + ReLU + MeanPool]')

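The output is as follows:

Figure 1

Figure 2

Figure 2 shows the 20 trained convolution filters. The larger the value, the brighter the shade.

Figure 3

Figure 3 shows the result of the image processing performed by the convolution layer.

Figure 4

Figure 4 shows the feature maps after the convolution layer's ReLU function has been applied. The dark pixels of the previous images are removed, so the characters in the current images are mostly white pixels. One cell, however, has gone completely dark. That is not a good sign, because that filter failed to capture any feature of the input image "2".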
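
A feature map that is entirely dark after ReLU can also be found numerically rather than by eye. The sketch below uses a synthetic stand-in for `y2` (random values with one channel zeroed on purpose), so the index it reports is an artifact of the example and not a result from the trained network; `deadMaps` is an illustrative name.

```matlab
% Synthetic stand-in for the 20x20x20 post-ReLU feature maps.
y2 = rand(20, 20, 20);     % rand never returns exactly 0
y2(:, :, 7) = 0;           % hypothetical "dead" feature map

% A map is dead if every pixel in it is zero.
isDead   = squeeze(all(all(y2 == 0, 1), 2));   % 20x1 logical
deadMaps = find(isDead);

fprintf('dead feature maps: %s\n', mat2str(deadMaps'));
```

Running the same two lines on the real `y2` from the script above would list the filters that produced no activation for the chosen input.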

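Figure 5

Figure 5 shows the final result of the feature-extraction network. These images are flattened into a one-dimensional vector and passed to the classification network as its input.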

The helper functions used in the code above are as follows:

function images = loadMNISTImages(filename)

%loadMNISTImages returns a [#pixels]x[number of MNIST images] matrix (784xN);

%each column is one raw MNIST image rescaled to [0,1]

fp = fopen(filename, 'rb');

assert(fp ~= -1, ['Could not open ', filename, '']);

magic = fread(fp, 1, 'int32', 0, 'ieee-be');

assert(magic == 2051, ['Bad magic number in ', filename, '']);

numImages = fread(fp, 1, 'int32', 0, 'ieee-be');

numRows = fread(fp, 1, 'int32', 0, 'ieee-be');

numCols = fread(fp, 1, 'int32', 0, 'ieee-be');

images = fread(fp, inf, 'unsigned char=>unsigned char');

images = reshape(images, numCols, numRows, numImages);

images = permute(images,[2 1 3]);

fclose(fp);

% Reshape to #pixels x #examples

images = reshape(images, size(images, 1) * size(images, 2), size(images, 3));

% Convert to double and rescale to [0,1]

images = double(images) / 255;

end
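
`loadMNISTImages` reads four big-endian 32-bit integers (magic number, image count, rows, columns) before the pixel bytes. As a minimal sketch, assuming a throwaway file in the temp directory rather than the real MNIST data, the snippet below writes such a header and reads it back with the same `fread` arguments:

```matlab
% Write a tiny synthetic IDX3 file: header plus two blank 28x28 images.
fname = [tempname '.idx3-ubyte'];                    % hypothetical scratch file
fp = fopen(fname, 'w');
fwrite(fp, [2051 2 28 28], 'int32', 0, 'ieee-be');   % magic, count, rows, cols
fwrite(fp, zeros(28*28*2, 1), 'uint8');              % pixel bytes
fclose(fp);

% Read the header back the way loadMNISTImages does.
fp = fopen(fname, 'r');
header = fread(fp, 4, 'int32', 0, 'ieee-be')';
pixels = fread(fp, inf, 'uint8');
fclose(fp);
delete(fname);

fprintf('magic=%d, images=%d, rows=%d, cols=%d, pixel bytes=%d\n', ...
    header, numel(pixels));
```

Reading with the default byte order on a little-endian machine would yield a wrong magic number, which is what the `assert(magic == 2051, ...)` check in the loader guards against.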

function labels = loadMNISTLabels(filename)

%loadMNISTLabels returns a [number of MNIST images]x1 matrix containing

%the labels for the MNIST images

fp = fopen(filename, 'rb');

assert(fp ~= -1, ['Could not open ', filename, '']);

magic = fread(fp, 1, 'int32', 0, 'ieee-be');

assert(magic == 2049, ['Bad magic number in ', filename, '']);

numLabels = fread(fp, 1, 'int32', 0, 'ieee-be');

labels = fread(fp, inf, 'unsigned char');

assert(size(labels,1) == numLabels, 'Mismatch in label count');

fclose(fp);

end

function [h, array] = display_network(A, opt_normalize, opt_graycolor, cols, opt_colmajor)

% This function visualizes filters in matrix A. Each column of A is a

% filter. We will reshape each column into a square image and visualizes

% on each cell of the visualization panel.

% All other parameters are optional, usually you do not need to worry

% about it.

% opt_normalize: whether we need to normalize the filter so that all of

% them can have similar contrast. Default value is true.

% opt_graycolor: whether we use gray as the heat map. Default is true.

% cols: how many columns are there in the display. Default value is the

% squareroot of the number of columns in A.

% opt_colmajor: you can switch convention to row major for A. In that

% case, each row of A is a filter. Default value is false.

warning off all

if ~exist('opt_normalize', 'var') || isempty(opt_normalize)

opt_normalize= true;

end

if ~exist('opt_graycolor', 'var') || isempty(opt_graycolor)

opt_graycolor= true;

end

if ~exist('opt_colmajor', 'var') || isempty(opt_colmajor)

opt_colmajor = false;

end

% rescale

A = A - mean(A(:));

if opt_graycolor, colormap(gray); end

% compute rows, cols

[L M]=size(A);

sz=sqrt(L);

buf=1;

if ~exist('cols', 'var')

if floor(sqrt(M))^2 ~= M

n=ceil(sqrt(M));

while mod(M, n)~=0 && n<1.2*sqrt(M), n=n+1; end

m=ceil(M/n);

else

n=sqrt(M);

m=n;

end

else

n = cols;

m = ceil(M/n);

end

array=-ones(buf+m*(sz+buf),buf+n*(sz+buf));

if ~opt_graycolor

array = 0.1.* array;

end

if ~opt_colmajor

k=1;

for i=1:m

for j=1:n

if k>M,

continue;

end

clim=max(abs(A(:,k)));

if opt_normalize

array(buf+(i-1)*(sz+buf)+(1:sz),buf+(j-1)*(sz+buf)+(1:sz))=reshape(A(:,k),sz,sz)/clim;

else

array(buf+(i-1)*(sz+buf)+(1:sz),buf+(j-1)*(sz+buf)+(1:sz))=reshape(A(:,k),sz,sz)/max(abs(A(:)));

end

k=k+1;

end

end

else

k=1;

for j=1:n

for i=1:m

if k>M,

continue;

end

clim=max(abs(A(:,k)));

if opt_normalize

array(buf+(i-1)*(sz+buf)+(1:sz),buf+(j-1)*(sz+buf)+(1:sz))=reshape(A(:,k),sz,sz)/clim;

else

array(buf+(i-1)*(sz+buf)+(1:sz),buf+(j-1)*(sz+buf)+(1:sz))=reshape(A(:,k),sz,sz);

end

k=k+1;

end

end

end

% 'EraseMode' was removed from MATLAB graphics in R2014b; both branches of the original if/else made the same call

h=imagesc(array,[-1 1]);

axis image off

drawnow;

warning on all

end

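function rng(x)

% Shim for MATLAB releases older than R2011a, which lack the built-in rng; on newer releases leave this function out so the built-in rng is used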

randn('seed', x)

rand('seed', x)

end

function y = Softmax(x)

ex = exp(x);

y = ex / sum(ex);

end
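
The `Softmax` above exponentiates its input directly, which overflows to `Inf` for large values and then returns `NaN`. A common remedy, shown here as a sketch and not as part of the original code, is to subtract the maximum before exponentiating; the result is mathematically the same. `softmaxNaive` and `softmaxStable` are illustrative names.

```matlab
softmaxNaive  = @(x) exp(x) / sum(exp(x));                      % same formula as Softmax
softmaxStable = @(x) exp(x - max(x)) / sum(exp(x - max(x)));    % shifted variant

v = [1000; 1001; 1002];        % hypothetical large pre-activations

yNaive  = softmaxNaive(v);     % exp(1000) overflows to Inf, Inf/Inf is NaN
yStable = softmaxStable(v);    % finite values that sum to 1

disp(yNaive');
disp(yStable');
```

For the small pre-activations produced by this network the two versions agree, so the shift only matters when the inputs to the output layer become large.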

function y = ReLU(x)

y = max(0, x);

end