1-Elasticsearch - Cluster Deployment in Practice

before

This post demonstrates how to build a single-machine cluster on Windows and a multi-machine cluster on CentOS.

Let's go through them one at a time.

Single-machine cluster on Windows

win10 + elasticsearch-6.5.4 + kibana-6.5.4

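In a single-machine deployment all nodes share the same IP (127.0.0.1), so each node is told apart by its own HTTP port and its own transport (cluster) port.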

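A single-machine cluster on Windows is simple to set up and easy to understand, which makes it a quick way to get started and learn how an ES cluster behaves.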

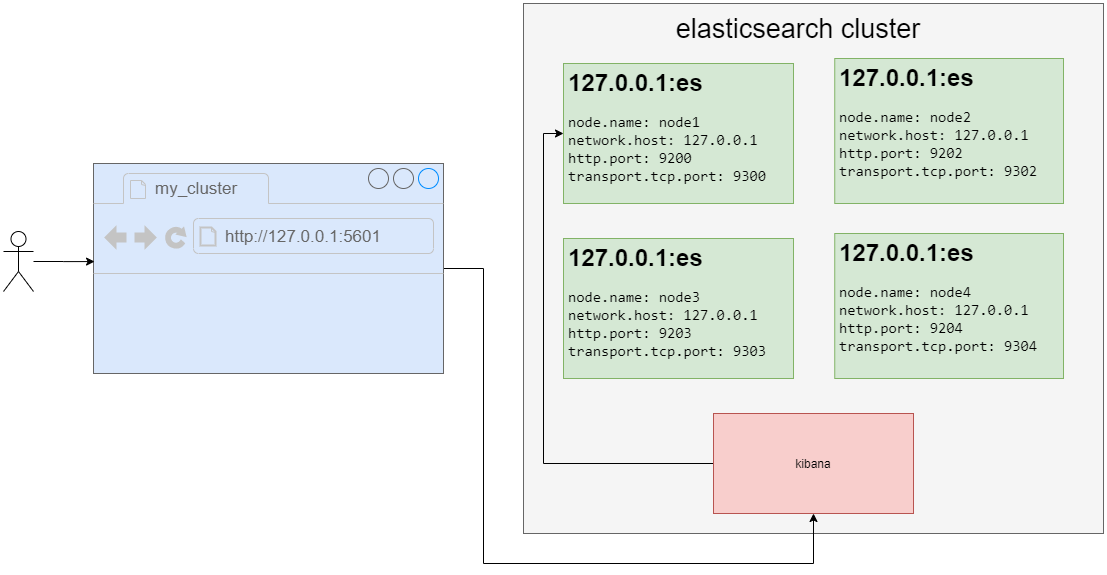

架构如下:

You also need a Java environment; see: https://www.cnblogs.com/Neeo/articles/11954283.html

Directory structure

Note that you can also inspect the cluster with the elasticsearch-head plugin; for installation, see: https://www.cnblogs.com/Neeo/articles/9146695.html#chrome.

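I use Kibana here to visualize the cluster. Also, since Elasticsearch is fairly resource-hungry, it is perfectly reasonable to run fewer nodes depending on your machine; there is no need to insist on four. Running it from an SSD gives the best results!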

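For easier management, I created an es_cluster directory in the root of drive C, extracted the Elasticsearch and Kibana archives into it, and renamed them into the following structure: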

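One more note: this only builds the cluster and does not install the IK analysis plugin. If you need it, install it into the plugins directory inside each ES directory; for details, see the IK analyzer installation guide.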

Cluster configuration

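Now let's add unicast discovery settings to the cluster: open the \config\elasticsearch.yml file in each node's directory. The configuration of each node is listed below. (Everything in the original file is commented out, so it is effectively empty; I wrote each node's settings and pasted them in without touching the comments, which makes it easy to restore the file if something goes wrong.)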

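- elasticsearch1 node: the cluster name is my_es1 and the transport (cluster) port is 9300; the node name is node1, it listens on local port 9200, and it is eligible to become master and to store data (these are the defaults, so they are not written out).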

cluster.name: my_es1

node.name: node1

network.host: 127.0.0.1

http.port: 9200

transport.tcp.port: 9300

discovery.zen.ping.unicast.hosts: ["127.0.0.1:9300", "127.0.0.1:9302", "127.0.0.1:9303", "127.0.0.1:9304"]

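- elasticsearch2 node: the cluster name is my_es1 and the transport port is 9302; the node name is node2, it listens on local port 9202, and it is eligible to become master and to store data.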

cluster.name: my_es1

node.name: node2

network.host: 127.0.0.1

http.port: 9202

transport.tcp.port: 9302

node.master: true

node.data: true

discovery.zen.ping.unicast.hosts: ["127.0.0.1:9300", "127.0.0.1:9302", "127.0.0.1:9303", "127.0.0.1:9304"]

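- elasticsearch3 node: the cluster name is my_es1 and the transport port is 9303; the node name is node3, it listens on local port 9203, and it is eligible to become master and to store data.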

cluster.name: my_es1

node.name: node3

network.host: 127.0.0.1

http.port: 9203

transport.tcp.port: 9303

discovery.zen.ping.unicast.hosts: ["127.0.0.1:9300", "127.0.0.1:9302", "127.0.0.1:9303", "127.0.0.1:9304"]

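- elasticsearch4 node: the cluster name is my_es1 and the transport port is 9304; the node name is node4, it listens on local port 9204, and it can only store data; it cannot be elected master.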

cluster.name: my_es1

node.name: node4

network.host: 127.0.0.1

http.port: 9204

transport.tcp.port: 9304

node.master: false

node.data: true

discovery.zen.ping.unicast.hosts: ["127.0.0.1:9300", "127.0.0.1:9302", "127.0.0.1:9303", "127.0.0.1:9304"]

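As the configuration above shows, all nodes share the cluster name my_es1, but because they run on the same local machine, each node must have its own node name (and its own ports). Start them one by one: they introduce themselves to each other through the unicast host list, discover one another, and form the my_es1 cluster. Which node becomes master depends on which master-eligible node starts first!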
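The four configurations above differ only in the node name, the port pair, and the role flags, while the cluster name and the unicast host list are shared. As a minimal sketch, assuming Python 3 is available, the helpers below (render_node_config, check_unique and the NODES table are hypothetical names, not part of Elasticsearch) generate the four files and check that no node name or port is used twice, which is the main thing that can go wrong on a single machine. They write node.master and node.data explicitly for every node, which is equivalent to relying on the defaults for node1.

```python
"""Generate elasticsearch.yml contents for the four-node single-machine cluster.

Hypothetical helper: the NODES table mirrors the settings described above.
"""

CLUSTER_NAME = "my_es1"
HOST = "127.0.0.1"

# (node name, HTTP port, transport port, master-eligible, holds data)
NODES = [
    ("node1", 9200, 9300, True, True),
    ("node2", 9202, 9302, True, True),
    ("node3", 9203, 9303, True, True),
    ("node4", 9204, 9304, False, True),
]


def unicast_hosts(nodes):
    """Every node lists all transport addresses so the nodes can find each other."""
    return ", ".join(f'"{HOST}:{tcp_port}"' for _, _, tcp_port, _, _ in nodes)


def render_node_config(node, nodes):
    """Return the YAML text for one node."""
    name, http_port, tcp_port, master, data = node
    lines = [
        f"cluster.name: {CLUSTER_NAME}",
        f"node.name: {name}",
        f"network.host: {HOST}",
        f"http.port: {http_port}",
        f"transport.tcp.port: {tcp_port}",
        f"node.master: {str(master).lower()}",
        f"node.data: {str(data).lower()}",
        f"discovery.zen.ping.unicast.hosts: [{unicast_hosts(nodes)}]",
    ]
    return "\n".join(lines) + "\n"


def check_unique(nodes):
    """On one machine, node names and ports must not collide."""
    names = [n[0] for n in nodes]
    ports = [port for n in nodes for port in (n[1], n[2])]
    if len(set(names)) != len(names):
        raise ValueError("duplicate node.name")
    if len(set(ports)) != len(ports):
        raise ValueError("duplicate port")


if __name__ == "__main__":
    check_unique(NODES)
    for i, node in enumerate(NODES, start=1):
        print(f"# C:\\es_cluster\\elasticsearch{i}\\config\\elasticsearch.yml")
        print(render_node_config(node, NODES))
```

Generating the files from one table keeps the unicast list identical on every node, so adding or removing a node only means editing NODES.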

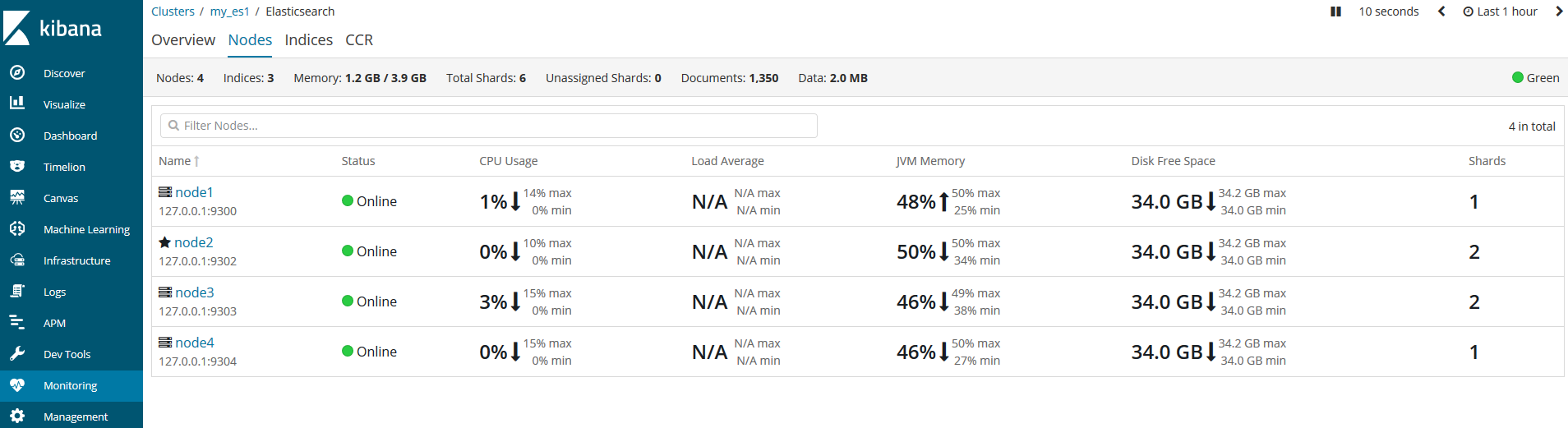

Testing with Kibana

Open Kibana and use Monitoring in the left-hand menu to check the cluster's health.

Because I started node2 first, node2 became the master node.

Cluster deployment on CentOS

centos7.9 + elasticsearch-6.8.15

This section shows how to build an ES cluster on three servers, installing Elasticsearch from the RPM package.

The cluster architecture is as follows:

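Note that cluster deployment differs slightly across ES versions, operating systems, and installation methods, so pay close attention to which combination you are using.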

Also make sure the servers can communicate with each other.

Next, we install and configure each of the three nodes.

For installing the elasticsearch-head plugin, see: https://www.cnblogs.com/Neeo/articles/9146695.html#chrome

I also provide the elasticsearch-6.8.15 RPM package. Link: https://pan.baidu.com/s/1Gn0rx4Q0Fa0IwudcMmI08A Extraction code: 8c57

node-1

Install Java

[root@cs tmp]# pwd

/tmp

[root@cs tmp]# yum install java-1.8.0-openjdk.x86_64 -y

[root@cs tmp]# java -version

openjdk version "1.8.0_292"

OpenJDK Runtime Environment (build 1.8.0_292-b10)

OpenJDK 64-Bit Server VM (build 25.292-b10, mixed mode)

Install Elasticsearch

[root@cs tmp]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.8.15.rpm

[root@cs tmp]# rpm -ivh elasticsearch-6.8.15.rpm

[root@cs tmp]# systemctl daemon-reload

[root@cs tmp]# rm -rf elasticsearch-6.8.15.rpm

[root@cs tmp]# rpm -qc elasticsearch

/etc/elasticsearch/elasticsearch.yml

/etc/elasticsearch/jvm.options

/etc/elasticsearch/log4j2.properties

/etc/elasticsearch/role_mapping.yml

/etc/elasticsearch/roles.yml

/etc/elasticsearch/users

/etc/elasticsearch/users_roles

/etc/init.d/elasticsearch

/etc/sysconfig/elasticsearch

/usr/lib/sysctl.d/elasticsearch.conf

/usr/lib/systemd/system/elasticsearch.service

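Note that the Elasticsearch service must run as a non-root user. The RPM installation already creates an elasticsearch user by default, so all that is left here is to create a data directory manually and give that user ownership of the relevant paths: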

mkdir -p /data/elasticsearch

chown -R elasticsearch:elasticsearch /data/elasticsearch/

chmod -R g+s /data/elasticsearch/

chown -R elasticsearch:elasticsearch /etc/elasticsearch/

chown -R elasticsearch:elasticsearch /etc/init.d/elasticsearch

chown -R elasticsearch:elasticsearch /etc/sysconfig/elasticsearch

chown -R elasticsearch:elasticsearch /var/log/elasticsearch/

chown -R elasticsearch:elasticsearch /usr/share/elasticsearch/

chown -R elasticsearch:elasticsearch /usr/lib/sysctl.d/elasticsearch.conf

chown -R elasticsearch:elasticsearch /usr/lib/systemd/system/elasticsearch.service
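A quick way to confirm that the chown commands above took effect is to compare each path's owner with the service user. This is a minimal sketch for Linux using only the standard os and pwd modules; wrong_owner and owner_name are hypothetical helpers, and ES_PATHS lists only the directories from the commands above.

```python
"""Report Elasticsearch paths that are not owned by the service user (Linux)."""
import os
import pwd

# Directories from the chown commands above.
ES_PATHS = [
    "/data/elasticsearch",
    "/etc/elasticsearch",
    "/var/log/elasticsearch",
    "/usr/share/elasticsearch",
]


def owner_name(uid):
    """Map a numeric uid to a user name, falling back to the number itself."""
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:
        return str(uid)


def wrong_owner(paths, user="elasticsearch"):
    """Return (path, owner) pairs for paths not owned by `user`.

    A path that does not exist is reported with owner None.
    """
    problems = []
    for path in paths:
        try:
            uid = os.stat(path).st_uid
        except FileNotFoundError:
            problems.append((path, None))
            continue
        owner = owner_name(uid)
        if owner != user:
            problems.append((path, owner))
    return problems


if __name__ == "__main__":
    for path, owner in wrong_owner(ES_PATHS):
        print(f"{path}: owner is {owner!r}, expected 'elasticsearch'")
```

An empty result means every listed directory belongs to the elasticsearch user.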

Required settings

For the cluster to start properly later on, some system limits and kernel parameters need to be configured in advance.

Modify the system limits:

[root@cs tmp]# vim /etc/security/limits.conf

# Maximum number of open file handles

* soft nofile 65535

* hard nofile 65535

# Maximum number of processes per user

* soft nproc 4096

* hard nproc 4096

# Maximum locked-in-memory address space; unlimited means no limit

* soft memlock unlimited

* hard memlock unlimited
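Before starting Elasticsearch, you can check from a fresh login shell whether the new limits are in effect (limits.conf is applied at login, so log in again after editing it). A minimal sketch using Python's standard resource module; the thresholds are the values written above, and check_limits and limit_ok are hypothetical helpers. Keep in mind that a service started by systemd takes its limits from its unit file rather than from limits.conf, which is why the memlock limit is also set through the systemd override further down.

```python
"""Compare the current shell's limits with the values set in limits.conf (Linux).

Assumption: 65535 open files and 4096 processes are the minimums written above.
"""
import resource

MIN_NOFILE = 65535
MIN_NPROC = 4096


def limit_ok(soft, minimum):
    """A soft limit passes if it is unlimited or at least `minimum`."""
    return soft == resource.RLIM_INFINITY or soft >= minimum


def check_limits():
    """Return human-readable problems for the calling process (empty = OK)."""
    problems = []
    nofile, _ = resource.getrlimit(resource.RLIMIT_NOFILE)
    nproc, _ = resource.getrlimit(resource.RLIMIT_NPROC)
    memlock, _ = resource.getrlimit(resource.RLIMIT_MEMLOCK)
    if not limit_ok(nofile, MIN_NOFILE):
        problems.append(f"nofile soft limit {nofile} < {MIN_NOFILE}")
    if not limit_ok(nproc, MIN_NPROC):
        problems.append(f"nproc soft limit {nproc} < {MIN_NPROC}")
    if memlock != resource.RLIM_INFINITY:
        problems.append(f"memlock soft limit {memlock} is not unlimited")
    return problems


if __name__ == "__main__":
    print(check_limits() or "limits look fine for this shell")
```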

Modify the kernel parameters:

[root@cs tmp]# vim /etc/sysctl.conf

# Maximum number of memory map areas a process may have (Elasticsearch requires at least 262144)

vm.max_map_count = 262144

[root@cs tmp]# sysctl -p # apply the modified kernel parameters

vm.max_map_count = 262144
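The live kernel value can also be read directly from /proc, which is handy for a pre-flight check on each server. A minimal sketch, assuming Linux; read_max_map_count and max_map_count_ok are hypothetical helpers:

```python
"""Check vm.max_map_count against the 262144 minimum set above (Linux only)."""
from pathlib import Path

MIN_MAX_MAP_COUNT = 262144
PROC_PATH = Path("/proc/sys/vm/max_map_count")


def read_max_map_count(path=PROC_PATH):
    """Read the live kernel value; it only changes after sysctl -p (or a reboot)."""
    return int(path.read_text().strip())


def max_map_count_ok(value, minimum=MIN_MAX_MAP_COUNT):
    return value >= minimum


if __name__ == "__main__":
    value = read_max_map_count()
    status = "OK" if max_map_count_ok(value) else "too low"
    print(f"vm.max_map_count = {value} ({status})")
```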

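To keep memory locking from failing, the systemd service must also be allowed to lock memory. Run systemctl edit, add the following in the editor that opens, then reload systemd: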

[root@cs tmp]# sudo systemctl edit elasticsearch

[Service]

LimitMEMLOCK=infinity

[root@cs tmp]# sudo systemctl daemon-reload
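Once the service has been started (further down), you can confirm that bootstrap.memory_lock actually took effect: the nodes info API reports it per node via GET _nodes?filter_path=**.mlockall. A minimal standard-library sketch; BASE_URL assumes node-1's address from this post, and nodes_without_mlock and fetch_nodes_info are hypothetical helpers:

```python
"""Verify that bootstrap.memory_lock took effect on every node.

Assumes the cluster answers at BASE_URL (node-1's address in this post).
"""
import json
from urllib.request import urlopen

BASE_URL = "http://10.0.0.201:9200"


def nodes_without_mlock(nodes_info):
    """Return node IDs whose process.mlockall is not true.

    `nodes_info` is the parsed JSON of GET _nodes?filter_path=**.mlockall,
    shaped like {"nodes": {"<node id>": {"process": {"mlockall": true}}}}.
    """
    return [
        node_id
        for node_id, info in nodes_info.get("nodes", {}).items()
        if not info.get("process", {}).get("mlockall", False)
    ]


def fetch_nodes_info(base_url=BASE_URL):
    with urlopen(f"{base_url}/_nodes?filter_path=**.mlockall", timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    bad = nodes_without_mlock(fetch_nodes_info())
    print("memory locked on all nodes" if not bad else f"mlockall false on: {bad}")
```

If a node reports mlockall as false, the LimitMEMLOCK override above is the first thing to check.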

Final preparation before starting

Edit the Elasticsearch configuration file (back it up, empty it, then write the new settings):

[root@cs tmp]# cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

[root@cs tmp]# > /etc/elasticsearch/elasticsearch.yml

[root@cs tmp]# vim /etc/elasticsearch/elasticsearch.yml

cluster.name: my_cluster

node.name: node-1

node.master: true

node.data: true

path.data: /data/elasticsearch

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: true

network.host: 10.0.0.201,127.0.0.1

http.port: 9200

discovery.zen.ping.unicast.hosts: ["10.0.0.201", "10.0.0.202", "10.0.0.203"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# discovery.zen.minimum_master_nodes: 2
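The three CentOS nodes use the same file except for node.name and the first address in network.host. As a minimal sketch (render is a hypothetical helper; the IPs and paths are the ones used in this post), the files can be produced from a single table so that the unicast list stays identical on every node:

```python
"""Render elasticsearch.yml for the three CentOS nodes in this post.

Hypothetical helper: IPs, names and paths are the ones used above.
"""

NODES = {"node-1": "10.0.0.201", "node-2": "10.0.0.202", "node-3": "10.0.0.203"}


def render(node_name, nodes=NODES, cluster="my_cluster", enable_quorum=False):
    """Return the YAML text for one node; only node.name and its IP differ."""
    ip = nodes[node_name]
    hosts = ", ".join(f'"{host}"' for host in nodes.values())
    # 2 = majority of the three master-eligible nodes; left commented out
    # by default, as recommended in the caveats at the end of this post.
    quorum_line = "discovery.zen.minimum_master_nodes: 2"
    lines = [
        f"cluster.name: {cluster}",
        f"node.name: {node_name}",
        "node.master: true",
        "node.data: true",
        "path.data: /data/elasticsearch",
        "path.logs: /var/log/elasticsearch",
        "bootstrap.memory_lock: true",
        f"network.host: {ip},127.0.0.1",
        "http.port: 9200",
        f"discovery.zen.ping.unicast.hosts: [{hosts}]",
        "http.cors.enabled: true",
        'http.cors.allow-origin: "*"',
        quorum_line if enable_quorum else "# " + quorum_line,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    for name in NODES:
        print(f"# ---- {name}: /etc/elasticsearch/elasticsearch.yml")
        print(render(name))
```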

Start the ES service, wait a moment, and then check the cluster status:

[root@cs tmp]# systemctl restart elasticsearch

[root@cs tmp]# systemctl status elasticsearch

[root@cs tmp]# curl http://10.0.0.201:9200

{

"name" : "node-1",

"cluster_name" : "my_cluster",

"cluster_uuid" : "sPQePmgpSi6c9AQkpWm6oA",

"version" : {

"number" : "6.8.15",

"build_flavor" : "default",

"build_type" : "rpm",

"build_hash" : "c9a8c60",

"build_date" : "2021-03-18T06:33:32.588487Z",

"build_snapshot" : false,

"lucene_version" : "7.7.3",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

[root@cs tmp]# curl http://10.0.0.201:9200/_cat/nodes

10.0.0.201 14 96 28 0.41 0.11 0.06 mdi * node-1
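Instead of reading the JSON by eye, you can check that a node reports the expected cluster name and version. A minimal standard-library sketch; check_root and fetch_root are hypothetical helpers, and the expected values are the ones used in this post:

```python
"""Check that a node's root endpoint reports the expected cluster and version."""
import json
from urllib.request import urlopen


def check_root(body, cluster="my_cluster", version="6.8.15"):
    """Return a list of mismatches for the parsed JSON of GET / (empty = OK)."""
    problems = []
    if body.get("cluster_name") != cluster:
        problems.append(f"cluster_name is {body.get('cluster_name')!r}")
    number = body.get("version", {}).get("number")
    if number != version:
        problems.append(f"version is {number!r}")
    return problems


def fetch_root(url):
    with urlopen(url, timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    body = fetch_root("http://10.0.0.201:9200")
    print(body["name"], check_root(body) or "OK")
```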

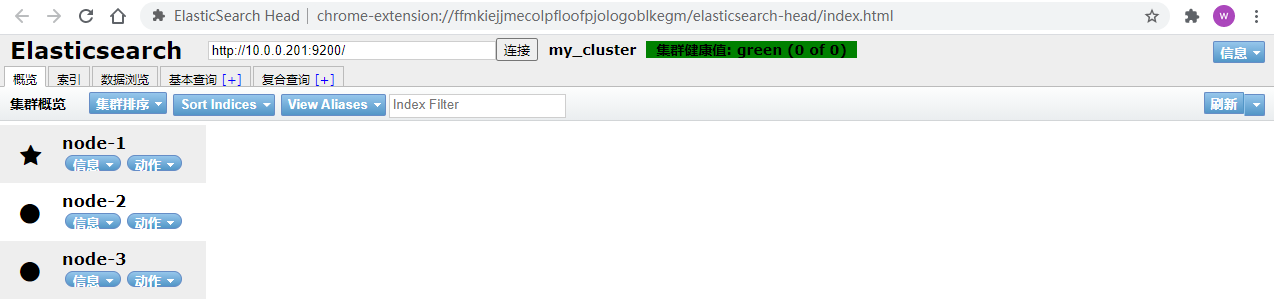

OK,节点1配置好了,此时,可以浏览器通过elasticsearch-head插件访问看看了:

node-2

Install Java

[root@cs tmp]# pwd

/tmp

[root@cs tmp]# yum install java-1.8.0-openjdk.x86_64 -y

[root@cs tmp]# java -version

openjdk version "1.8.0_292"

OpenJDK Runtime Environment (build 1.8.0_292-b10)

OpenJDK 64-Bit Server VM (build 25.292-b10, mixed mode)

Install Elasticsearch

[root@cs tmp]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.8.15.rpm

[root@cs tmp]# rpm -ivh elasticsearch-6.8.15.rpm

[root@cs tmp]# systemctl daemon-reload

[root@cs tmp]# rm -rf elasticsearch-6.8.15.rpm

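Note that the Elasticsearch service must run as a non-root user. The RPM installation already creates an elasticsearch user by default, so all that is left here is to create a data directory manually and give that user ownership of the relevant paths: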

mkdir -p /data/elasticsearch

chown -R elasticsearch:elasticsearch /data/elasticsearch/

chmod -R g+s /data/elasticsearch/

chown -R elasticsearch:elasticsearch /etc/elasticsearch/

chown -R elasticsearch:elasticsearch /etc/init.d/elasticsearch

chown -R elasticsearch:elasticsearch /etc/sysconfig/elasticsearch

chown -R elasticsearch:elasticsearch /var/log/elasticsearch/

chown -R elasticsearch:elasticsearch /usr/share/elasticsearch/

chown -R elasticsearch:elasticsearch /usr/lib/sysctl.d/elasticsearch.conf

chown -R elasticsearch:elasticsearch /usr/lib/systemd/system/elasticsearch.service

Required settings

For the cluster to start properly later on, some system limits and kernel parameters need to be configured in advance.

Modify the system limits:

[root@cs tmp]# vim /etc/security/limits.conf

# Maximum number of open file handles

* soft nofile 65535

* hard nofile 65535

# Maximum number of processes per user

* soft nproc 4096

* hard nproc 4096

# Maximum locked-in-memory address space; unlimited means no limit

* soft memlock unlimited

* hard memlock unlimited

Modify the kernel parameters:

[root@cs tmp]# vim /etc/sysctl.conf

# Maximum number of memory map areas a process may have (Elasticsearch requires at least 262144)

vm.max_map_count = 262144

[root@cs tmp]# sysctl -p # apply the modified kernel parameters

vm.max_map_count = 262144

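To keep memory locking from failing, the systemd service must also be allowed to lock memory. Run systemctl edit, add the following in the editor that opens, then reload systemd: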

[root@cs tmp]# sudo systemctl edit elasticsearch

[Service]

LimitMEMLOCK=infinity

[root@cs tmp]# sudo systemctl daemon-reload

Final preparation before starting

Edit the Elasticsearch configuration file (back it up, empty it, then write the new settings):

[root@cs tmp]# cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

[root@cs tmp]# > /etc/elasticsearch/elasticsearch.yml

[root@cs tmp]# vim /etc/elasticsearch/elasticsearch.yml

cluster.name: my_cluster

node.name: node-2

node.master: true

node.data: true

path.data: /data/elasticsearch

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: true

network.host: 10.0.0.202,127.0.0.1

http.port: 9200

discovery.zen.ping.unicast.hosts: ["10.0.0.201", "10.0.0.202", "10.0.0.203"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# discovery.zen.minimum_master_nodes: 2

Start the ES service, wait a moment, and then check the cluster status:

[root@cs tmp]# systemctl restart elasticsearch

[root@cs tmp]# systemctl status elasticsearch

[root@cs tmp]# curl http://10.0.0.202:9200

{

"name" : "node-2",

"cluster_name" : "my_cluster",

"cluster_uuid" : "sPQePmgpSi6c9AQkpWm6oA",

"version" : {

"number" : "6.8.15",

"build_flavor" : "default",

"build_type" : "rpm",

"build_hash" : "c9a8c60",

"build_date" : "2021-03-18T06:33:32.588487Z",

"build_snapshot" : false,

"lucene_version" : "7.7.3",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

[root@cs tmp]# curl http://10.0.0.202:9200/_cat/nodes

10.0.0.201 20 96 0 0.00 0.03 0.05 mdi * node-1

10.0.0.202 18 96 0 0.44 0.21 0.11 mdi - node-2
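Both node-1 and node-2 report the same cluster_uuid (sPQePmgpSi6c9AQkpWm6oA), which is a simple way to confirm that they joined one cluster rather than forming two separate ones. A minimal sketch that collects the UUID from each node; NODE_URLS assumes the three addresses from this post (list only the nodes that are already running), and same_cluster and collect_uuids are hypothetical helpers:

```python
"""Confirm that several nodes report the same cluster_uuid."""
import json
from urllib.request import urlopen

# Assumption: the three CentOS nodes from this post; adjust to your addresses.
NODE_URLS = [
    "http://10.0.0.201:9200",
    "http://10.0.0.202:9200",
    "http://10.0.0.203:9200",
]


def same_cluster(uuids_by_node):
    """True if every node reported one and the same cluster_uuid."""
    return len(set(uuids_by_node.values())) == 1


def collect_uuids(urls=NODE_URLS):
    """Map each node's name to the cluster_uuid from its root endpoint."""
    result = {}
    for url in urls:
        with urlopen(url, timeout=10) as resp:
            body = json.load(resp)
        result[body["name"]] = body["cluster_uuid"]
    return result


if __name__ == "__main__":
    uuids = collect_uuids()
    print(uuids)
    print("one cluster" if same_cluster(uuids) else "nodes disagree on cluster_uuid")
```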

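OK, node 2 is configured, and you can check it in the browser through the elasticsearch-head plugin. Because node 1 and node 2 belong to the same cluster, querying either of them returns the cluster information: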

node-3

Install Java

[root@cs tmp]# pwd

/tmp

[root@cs tmp]# yum install java-1.8.0-openjdk.x86_64 -y

[root@cs tmp]# java -version

openjdk version "1.8.0_292"

OpenJDK Runtime Environment (build 1.8.0_292-b10)

OpenJDK 64-Bit Server VM (build 25.292-b10, mixed mode)

Install Elasticsearch

[root@cs tmp]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.8.15.rpm

[root@cs tmp]# rpm -ivh elasticsearch-6.8.15.rpm

[root@cs tmp]# systemctl daemon-reload

[root@cs tmp]# rm -rf elasticsearch-6.8.15.rpm

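Note that the Elasticsearch service must run as a non-root user. The RPM installation already creates an elasticsearch user by default, so all that is left here is to create a data directory manually and give that user ownership of the relevant paths: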

mkdir -p /data/elasticsearch

chown -R elasticsearch:elasticsearch /data/elasticsearch/

chmod -R g+s /data/elasticsearch/

chown -R elasticsearch:elasticsearch /etc/elasticsearch/

chown -R elasticsearch:elasticsearch /etc/init.d/elasticsearch

chown -R elasticsearch:elasticsearch /etc/sysconfig/elasticsearch

chown -R elasticsearch:elasticsearch /var/log/elasticsearch/

chown -R elasticsearch:elasticsearch /usr/share/elasticsearch/

chown -R elasticsearch:elasticsearch /usr/lib/sysctl.d/elasticsearch.conf

chown -R elasticsearch:elasticsearch /usr/lib/systemd/system/elasticsearch.service

Required settings

For the cluster to start properly later on, some system limits and kernel parameters need to be configured in advance.

Modify the system limits:

[root@cs tmp]# vim /etc/security/limits.conf

# Maximum number of open file handles

* soft nofile 65535

* hard nofile 65535

# Maximum number of processes per user

* soft nproc 4096

* hard nproc 4096

# Maximum locked-in-memory address space; unlimited means no limit

* soft memlock unlimited

* hard memlock unlimited

Modify the kernel parameters:

[root@cs tmp]# vim /etc/sysctl.conf

# Maximum number of memory map areas a process may have (Elasticsearch requires at least 262144)

vm.max_map_count = 262144

[root@cs tmp]# sysctl -p # apply the modified kernel parameters

vm.max_map_count = 262144

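To keep memory locking from failing, the systemd service must also be allowed to lock memory. Run systemctl edit, add the following in the editor that opens, then reload systemd: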

[root@cs tmp]# sudo systemctl edit elasticsearch

[Service]

LimitMEMLOCK=infinity

[root@cs tmp]# sudo systemctl daemon-reload

Final preparation before starting

Edit the Elasticsearch configuration file (back it up, empty it, then write the new settings):

[root@cs tmp]# cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

[root@cs tmp]# > /etc/elasticsearch/elasticsearch.yml

[root@cs tmp]# vim /etc/elasticsearch/elasticsearch.yml

cluster.name: my_cluster

node.name: node-3

node.master: true

node.data: true

path.data: /data/elasticsearch

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: true

network.host: 10.0.0.203,127.0.0.1

http.port: 9200

discovery.zen.ping.unicast.hosts: ["10.0.0.201", "10.0.0.202", "10.0.0.203"]

http.cors.enabled: true

http.cors.allow-origin: "*"

# discovery.zen.minimum_master_nodes: 2

Start the ES service, wait a moment, and then check the cluster status:

[root@cs tmp]# systemctl restart elasticsearch

[root@cs tmp]# systemctl status elasticsearch

[root@cs tmp]# curl http://10.0.0.203:9200

{

"name" : "node-3",

"cluster_name" : "my_cluster",

"cluster_uuid" : "sPQePmgpSi6c9AQkpWm6oA",

"version" : {

"number" : "6.8.15",

"build_flavor" : "default",

"build_type" : "rpm",

"build_hash" : "c9a8c60",

"build_date" : "2021-03-18T06:33:32.588487Z",

"build_snapshot" : false,

"lucene_version" : "7.7.3",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

[root@cs tmp]# curl http://10.0.0.203:9200/_cat/nodes

10.0.0.201 17 96 0 0.02 0.02 0.05 mdi * node-1

10.0.0.203 18 96 20 0.44 0.19 0.09 mdi - node-3

10.0.0.202 21 96 0 0.00 0.04 0.06 mdi - node-2
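The _cat/nodes output is easy to check programmatically: the master column shows * for the elected master, and the node.role value mdi means master-eligible, data and ingest. A minimal sketch that parses the default ES 6.x column order seen above (ip, heap.percent, ram.percent, cpu, load_1m, load_5m, load_15m, node.role, master, name); parse_cat_nodes, summarize and CatNode are hypothetical names, and OUTPUT is the output shown above:

```python
"""Parse default _cat/nodes output (Elasticsearch 6.x column order)."""
from typing import NamedTuple


class CatNode(NamedTuple):
    ip: str
    roles: str  # e.g. "mdi" = master-eligible, data, ingest
    is_master: bool
    name: str


def parse_cat_nodes(text):
    nodes = []
    for line in text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines between rows
        fields = line.split(maxsplit=9)  # name is last and may contain spaces
        nodes.append(CatNode(fields[0], fields[7], fields[8] == "*", fields[9]))
    return nodes


def summarize(nodes, expected):
    """Return (name of the elected master or None, sorted missing node names)."""
    masters = [n.name for n in nodes if n.is_master]
    missing = sorted(set(expected) - {n.name for n in nodes})
    return (masters[0] if masters else None), missing


OUTPUT = """\
10.0.0.201 17 96 0 0.02 0.02 0.05 mdi * node-1
10.0.0.203 18 96 20 0.44 0.19 0.09 mdi - node-3
10.0.0.202 21 96 0 0.00 0.04 0.06 mdi - node-2
"""

if __name__ == "__main__":
    nodes = parse_cat_nodes(OUTPUT)
    master, missing = summarize(nodes, ["node-1", "node-2", "node-3"])
    print(f"master: {master}, missing: {missing or 'none'}")
```

With that sample it reports node-1 as the master and no missing nodes. If you query the API yourself, requesting explicit columns with the h parameter (for example _cat/nodes?h=ip,node.role,master,name) makes the parsing independent of the default column order, at the cost of adjusting the field indices.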

OK, node 3 is configured, and you can check it in the browser through the elasticsearch-head plugin:

That's it: all three nodes are configured, and the cluster is complete.

Some caveats

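Note: while you are configuring the nodes one by one, keep discovery.zen.minimum_master_nodes: 2 commented out. With this setting enabled, the node you just started keeps pinging the other master-eligible nodes so that a master can be elected, and if those nodes are not up yet, it simply keeps pinging... So leave it commented out at first, and enable it once the whole cluster is running without problems.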
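The value 2 is not arbitrary: in Zen discovery (ES 6.x), minimum_master_nodes should be a majority of the master-eligible nodes, (master-eligible nodes / 2) + 1, so that the two sides of a network partition cannot both elect a master (split brain). With three master-eligible nodes that gives 2; the Windows cluster above also has three master-eligible nodes (node1 to node3), so the same value applies there. A minimal sketch of the rule (minimum_master_nodes is a hypothetical helper name):

```python
"""Quorum for discovery.zen.minimum_master_nodes (Zen discovery, ES 6.x).

Rule: (number of master-eligible nodes // 2) + 1, i.e. a strict majority.
"""


def minimum_master_nodes(master_eligible):
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} master-eligible node(s) -> minimum_master_nodes: {minimum_master_nodes(n)}")
```

Note that with only two master-eligible nodes the quorum is also 2, so losing either one stops master election; three is the smallest count that tolerates the loss of one master-eligible node.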

Corrections are welcome. That's all; see also:

Elasticsearch tuning: a summary of practical experience | Deploying an Elasticsearch 7.10.2 cluster on CentOS 7 (RPM installation) | ElasticSearch 7.1 cluster setup based on RPM

浙公网安备 33010602011771号
