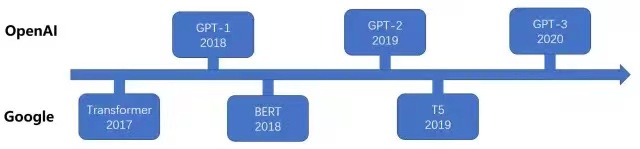

Transformer Family

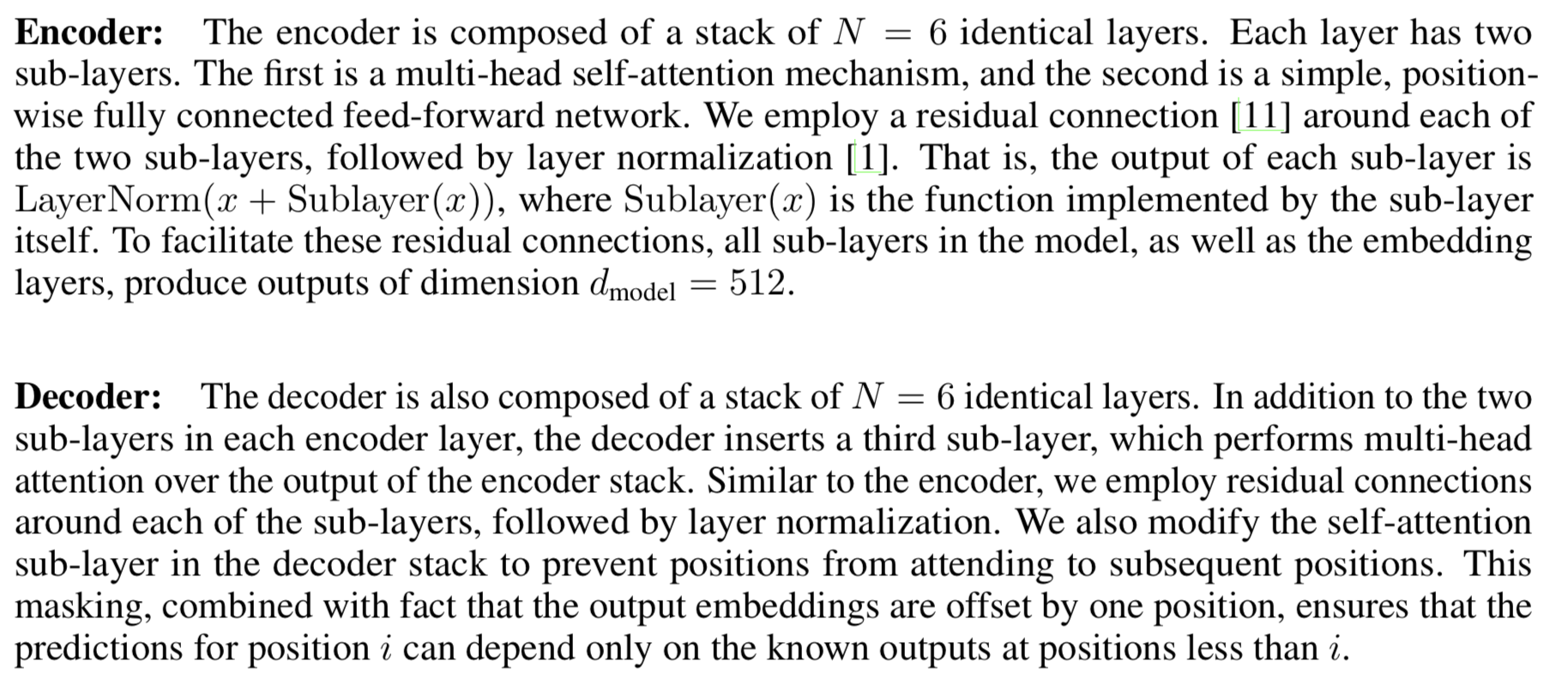

Transformer

Overview

paper: Attention Is All You Need

time: 2017.6

company: Google

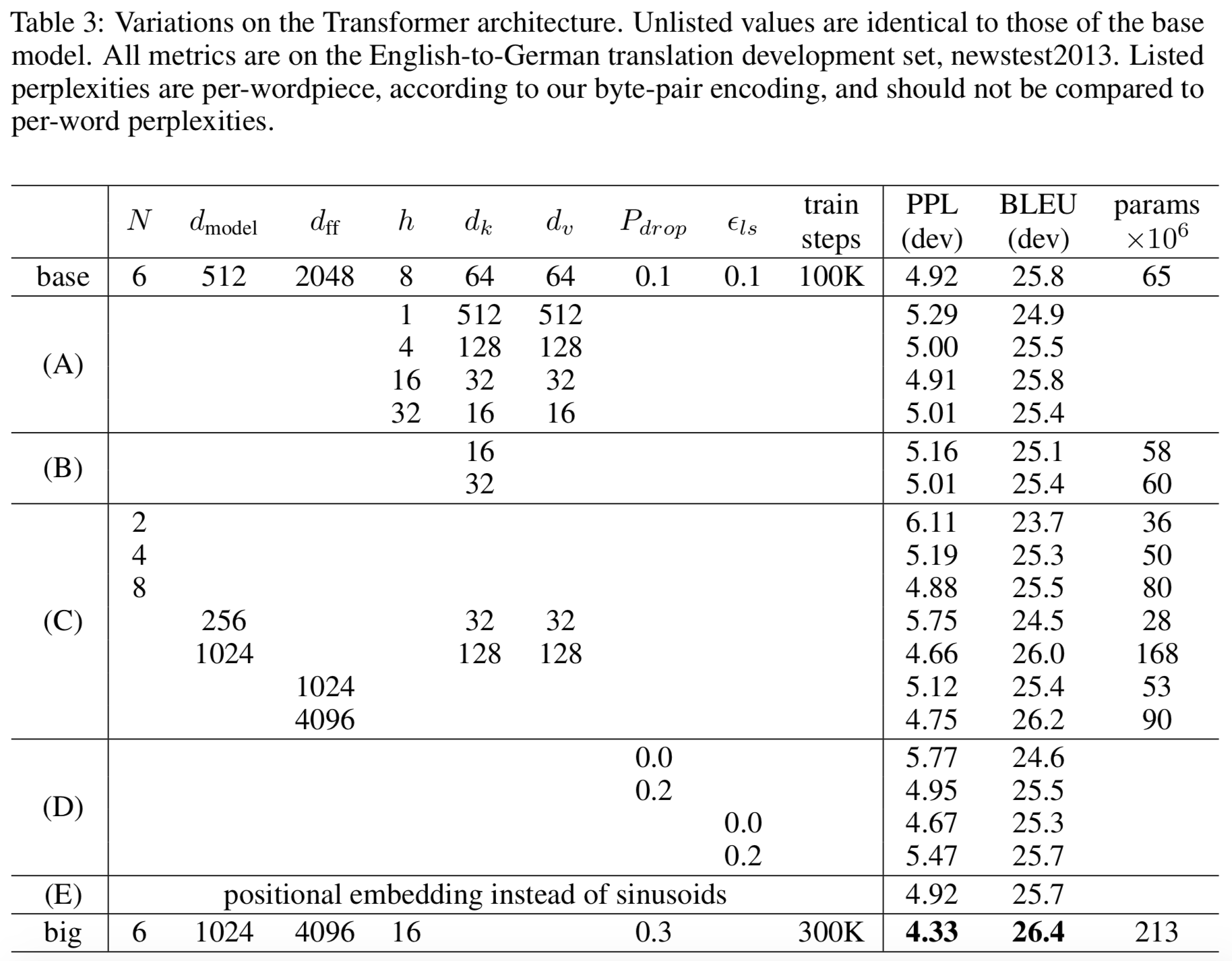

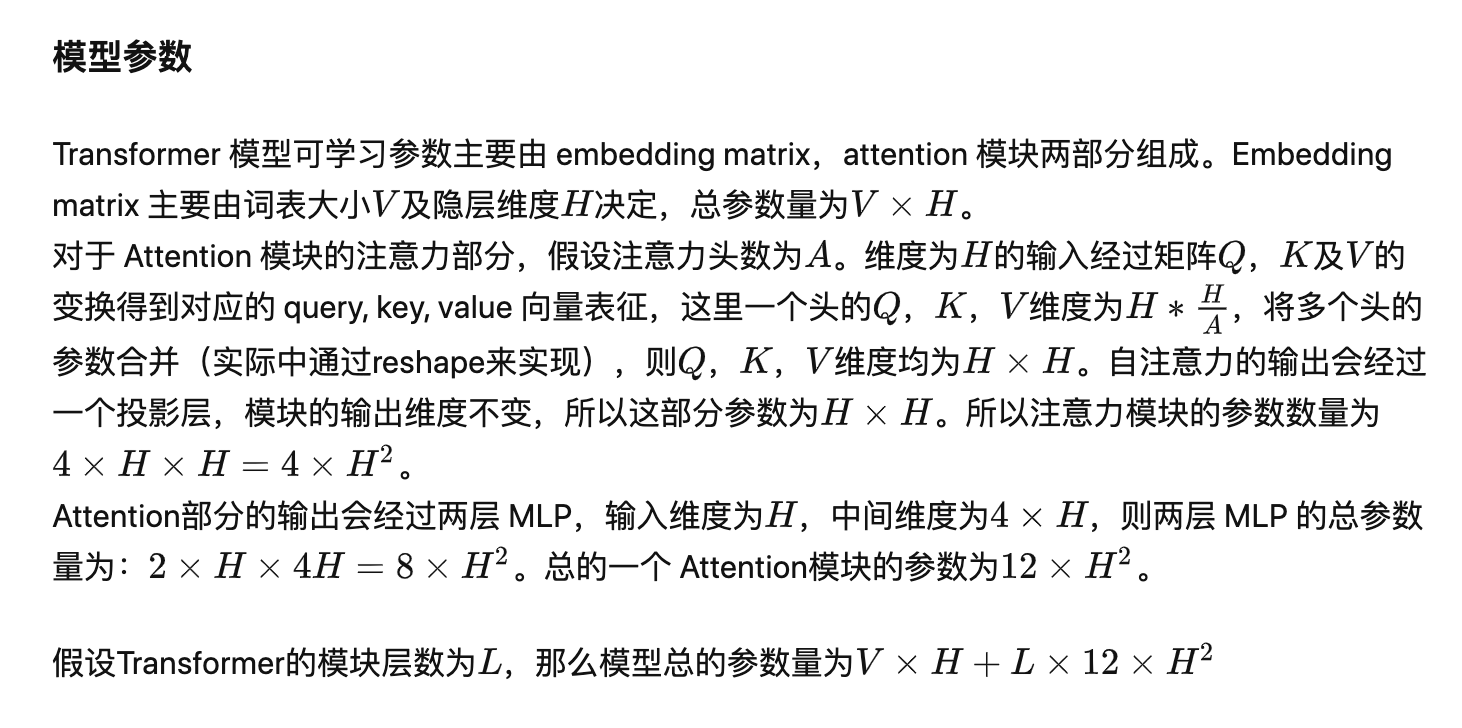

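Model parameters

$37000 \times 512 + 12 \times 12 \times 512^2 = 56{,}692{,}736$ (the original paper reports 65,000,000)

Here $37000 \times 512$ is the shared token-embedding matrix (a vocabulary of about 37000 BPE tokens with $d_{model} = 512$). The term $12 \times 12 \times 512^2$ approximates the 12 layers (6 encoder, 6 decoder) at $12\,d_{model}^2$ weights each: $4\,d_{model}^2$ for the attention projections plus $2 \times 512 \times 2048 = 8\,d_{model}^2$ for the feed-forward network.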
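The estimate above can be reproduced with a short script. This is a minimal sketch that assumes the base configuration from the paper: d_model = 512, d_ff = 2048, 6 encoder and 6 decoder layers, about 37000 shared BPE tokens, and embeddings tied with the pre-softmax projection. `note_estimate` follows the formula in this note. `weights_only` is a hypothetical helper that also counts the cross-attention block in each decoder layer, which brings the weight-only total to about 63M, closer to the reported 65M. Both helpers ignore biases and LayerNorm parameters, which add less than 0.1M for this configuration.

```python
# Rough parameter count for the Transformer base model (Vaswani et al., 2017).
# Assumptions: ~37000 shared BPE tokens, d_model = 512, d_ff = 2048,
# 6 encoder + 6 decoder layers, token embeddings tied with the output
# projection (counted once), sinusoidal positional encodings (no parameters).
# Biases and LayerNorm parameters are ignored.

VOCAB = 37000
D_MODEL = 512
D_FF = 2048
N_LAYERS = 6  # layers per stack


def note_estimate(vocab: int = VOCAB, d: int = D_MODEL) -> int:
    """The note's formula: embeddings + 12 layers x 12 * d^2 weights."""
    return vocab * d + 12 * 12 * d * d


def weights_only(vocab: int = VOCAB, d: int = D_MODEL,
                 d_ff: int = D_FF, n: int = N_LAYERS) -> int:
    """Weight matrices only, with decoder cross-attention included."""
    attn = 4 * d * d                  # W_Q, W_K, W_V, W_O
    ffn = 2 * d * d_ff                # d -> d_ff -> d
    encoder_layer = attn + ffn        # self-attention + FFN (12 d^2)
    decoder_layer = 2 * attn + ffn    # masked self-attn + cross-attn + FFN (16 d^2)
    return vocab * d + n * encoder_layer + n * decoder_layer


print(note_estimate())  # 56692736
print(weights_only())   # 62984192
```

The only difference between the two counts is the extra $4\,d_{model}^2$ per decoder layer for attending to the encoder output.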

BERT

Overview

paper: BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

time: 2018.10

company: Google

Model parameters

Multi-Head Attention
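The following is a minimal sketch of the parameter count for one multi-head attention block. It assumes the BERT-base configuration (hidden size H = 768, A = 12 heads) and counts the Q, K, V and output projections with biases. Splitting into heads does not change the count, because each head uses a $d_{head} = H / A$ slice of the same $H \times H$ projections. The helper name `mha_params` is hypothetical.

```python
def mha_params(hidden: int, num_heads: int, bias: bool = True) -> int:
    """Parameters of one multi-head attention block (Q, K, V and output projections)."""
    assert hidden % num_heads == 0, "hidden size must split evenly across heads"
    d_head = hidden // num_heads
    # Each of Q, K, V stacks num_heads projections of shape hidden x d_head,
    # i.e. one hidden x hidden matrix; the output projection is hidden x hidden too.
    per_proj = hidden * (num_heads * d_head) + (hidden if bias else 0)
    return 4 * per_proj


print(mha_params(768, 12))  # 2362368
```

Changing `num_heads` (as long as it divides `hidden`) leaves the result unchanged, which is why BERT's parameter count depends on H and L but not on A.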

Position-wise Feed-Forward Networks
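The next sketch counts the position-wise feed-forward parameters and the total for BERT-base. It assumes the released `bert-base-uncased` configuration: vocabulary 30522, 512 positions, 2 segment types, H = 768, 12 layers, and intermediate size 4H = 3072. The pre-training heads (MLM and NSP) are excluded. The total comes out at about 109.5M, consistent with the 110M reported in the BERT paper. The function names `ffn_params` and `bert_params` are hypothetical.

```python
def ffn_params(hidden: int, intermediate: int) -> int:
    """Two linear layers hidden -> intermediate -> hidden, with biases."""
    return hidden * intermediate + intermediate + intermediate * hidden + hidden


def bert_params(vocab: int = 30522, hidden: int = 768, layers: int = 12,
                intermediate: int = 3072, max_pos: int = 512,
                type_vocab: int = 2) -> int:
    """Encoder parameters of a BERT-style model (no MLM/NSP heads)."""
    layer_norm = 2 * hidden                                  # gamma and beta
    # Token, position and segment embeddings, followed by one LayerNorm.
    embeddings = (vocab + max_pos + type_vocab) * hidden + layer_norm
    attention = 4 * (hidden * hidden + hidden)               # Q, K, V, output
    # Each layer: attention + LayerNorm + FFN + LayerNorm.
    layer = attention + layer_norm + ffn_params(hidden, intermediate) + layer_norm
    pooler = hidden * hidden + hidden                        # dense layer on [CLS]
    return embeddings + layers * layer + pooler


print(ffn_params(768, 3072))  # 4722432
print(bert_params())          # 109482240
```

The FFN accounts for about two thirds of each layer. The embeddings contribute about 23.8M of the total, so the 12 encoder layers add roughly 85M.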

T5

Overview

paper: Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer

time: 2019

company: Google

Model parameters

GPT-1

Overview

paper: Improving Language Understanding by Generative Pre-Training

time: 2018.6

company: OpenAI

Model parameters

GPT-2

Overview

paper: Language Models are Unsupervised Multitask Learners

time: 2019.2

company: OpenAI

Model parameters

GPT-3

Overview

paper: Language Models are Few-Shot Learners

time: 2020.5

company: OpenAI