【动手学深度学习】51 序列模型

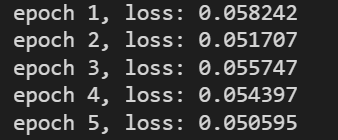

%matplotlib inline import torch from torch import nn from d2l import torch as d2l T = 1000 # 总共产生1000个点 time = torch.arange(1, T + 1, dtype=torch.float32) #生成数据并加入噪音 x = torch.sin(0.01 * time) + torch.normal(0, 0.2, (T,)) d2l.plot(time, [x], 'time', 'x', xlim=[1, 1000], figsize=(6, 3)) #用马尔可夫方法,此处设置用每4个数据预测第5个数据的自回归模型 #标签yi=xi ,输入Xi=[(xi-1)~(xi-tau)] tau = 4 features = torch.zeros((T - tau, tau)) for i in range(tau): features[:, i] = x[i: T - tau + i] #有996个标签 labels = x[tau:].reshape((-1, 1)) batch_size, n_train = 16, 600 # 只有前n_train个样本用于训练 train_iter = d2l.load_array((features[:n_train], labels[:n_train]), batch_size, is_train=True) # 初始化网络权重的函数 def init_weights(m): if type(m) == nn.Linear: nn.init.xavier_uniform_(m.weight) # 一个简单的多层感知机 def get_net(): net = nn.Sequential(nn.Linear(4, 10), nn.ReLU(), nn.Linear(10, 1)) net.apply(init_weights) return net # 平方损失。注意:MSELoss计算平方误差时不带系数1/2 loss = nn.MSELoss(reduction='none') #训练 def train(net, train_iter, loss, epochs, lr): trainer = torch.optim.Adam(net.parameters(), lr) for epoch in range(epochs): for X, y in train_iter: trainer.zero_grad() l = loss(net(X), y) l.sum().backward() trainer.step() print(f'epoch {epoch + 1}, ' f'loss: {d2l.evaluate_loss(net, train_iter, loss):f}') net = get_net() train(net, train_iter, loss, 5, 0.01)

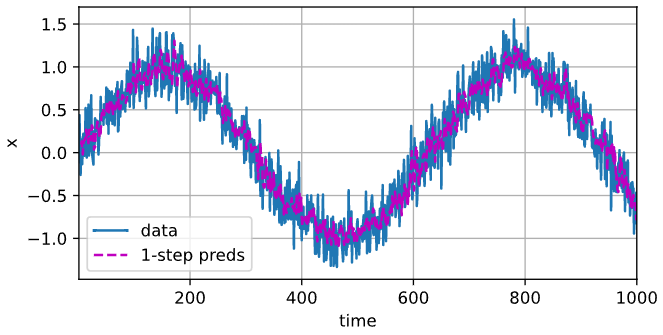

#预测 onestep_preds = net(features) d2l.plot([time, time[tau:]], [x.detach().numpy(), onestep_preds.detach().numpy()], 'time', 'x', legend=['data', '1-step preds'], xlim=[1, 1000], figsize=(6, 3))

K步预测:如已经观察到了x604,其k步预测是x604+k。即我们必须使用我们自己的预测(而不是原始数据)来进行多步预测。

虽然“4步预测”看起来仍然不错,但超过这个跨度的任何预测几乎都是无用的。

“Make my parents proud,and impress the girl I like.”