AI | 强化学习 | Sarsa

AI | 强化学习 | Sarsa

首先感谢莫烦大佬的公开教程。

https://github.com/MorvanZhou/Reinforcement-learning-with-tensorflow

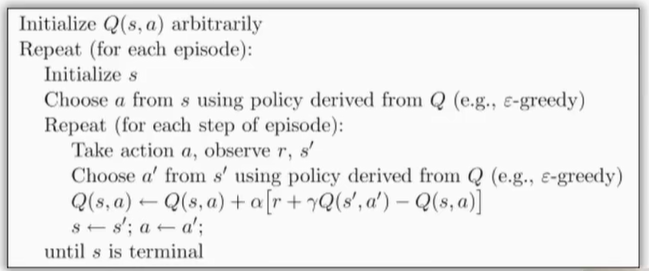

sarsa是强化学习中的一种,属于在线学习。【走到哪一步学哪一步】

和qlearning类似,但是qlearning属于离线学习。

这次实验是三个文件,一个是迷宫环境,一个是强化学习决策类,一个是运行更新的脚本。

RL_brain.py:

"""

This part of code is the Q learning brain, which is a brain of the agent.

All decisions are made in here.

View more on my tutorial page: https://morvanzhou.github.io/tutorials/

"""

import numpy as np

import pandas as pd

# 父类

class RL(object):

def __init__(self, action_space, learning_rate=0.01, reward_decay=0.9, e_greedy=0.9):

self.actions = action_space # a list

self.lr = learning_rate

self.gamma = reward_decay

self.epsilon = e_greedy

self.q_table = pd.DataFrame(columns=self.actions, dtype=np.float64)

# 查看q表中有没有这个state,如果没有就添加上。

def check_state_exist(self, state):

if state not in self.q_table.index:

# append new state to q table

self.q_table = self.q_table.append(

pd.Series(

[0]*len(self.actions),

index=self.q_table.columns,

name=state,

)

)

# 选择决策

# 90%最优解,10%随机采取行动

def choose_action(self, observation):

self.check_state_exist(observation)

# action selection

if np.random.rand() < self.epsilon:

# choose best action

state_action = self.q_table.loc[observation, :]

# some actions may have the same value, randomly choose on in these actions

action = np.random.choice(state_action[state_action == np.max(state_action)].index)

else:

# choose random action

action = np.random.choice(self.actions)

return action

def learn(self, *args):

pass

# off-policy

# Qlearning算法

class QLearningTable(RL):

def __init__(self, actions, learning_rate=0.01, reward_decay=0.9, e_greedy=0.9):

super(QLearningTable, self).__init__(actions, learning_rate, reward_decay, e_greedy)

def learn(self, s, a, r, s_):

self.check_state_exist(s_)

q_predict = self.q_table.loc[s, a]

if s_ != 'terminal':

q_target = r + self.gamma * self.q_table.loc[s_, :].max() # next state is not terminal

else:

q_target = r # next state is terminal

self.q_table.loc[s, a] += self.lr * (q_target - q_predict) # update

# on-policy

class SarsaTable(RL):

def __init__(self, actions, learning_rate=0.01, reward_decay=0.9, e_greedy=0.9):

super(SarsaTable, self).__init__(actions, learning_rate, reward_decay, e_greedy)

# 比Qlearning多了下一个action的参数

def learn(self, s, a, r, s_, a_):

self.check_state_exist(s_) # 检查新的state有没有存在

q_predict = self.q_table.loc[s, a]

if s_ != 'terminal':

q_target = r + self.gamma * self.q_table.loc[s_, a_] # next state is not terminal

else:

q_target = r # next state is terminal

# 更新q表

self.q_table.loc[s, a] += self.lr * (q_target - q_predict) # update

run_this.py:

"""

Sarsa is a online updating method for Reinforcement learning.

Unlike Q learning which is a offline updating method, Sarsa is updating while in the current trajectory.

You will see the sarsa is more coward when punishment is close because it cares about all behaviours,

while q learning is more brave because it only cares about maximum behaviour.

"""

from maze_env import Maze

from RL_brain import SarsaTable

def update():

for episode in range(100):

# initial observation

observation = env.reset()

# RL choose action based on observation

action = RL.choose_action(str(observation))

while True:

# fresh env

env.render()

# RL take action and get next observation and reward

observation_, reward, done = env.step(action)

# RL choose action based on next observation

action_ = RL.choose_action(str(observation_))

# RL learn from this transition (s, a, r, s, a) ==> Sarsa

RL.learn(str(observation), action, reward, str(observation_), action_)

# swap observation and action

observation = observation_

action = action_

# break while loop when end of this episode

if done:

break

print(f'round: {episode}')

print(RL.q_table) # 输出查看q表

# end of game

print('game over')

env.destroy()

if __name__ == "__main__":

env = Maze()

RL = SarsaTable(actions=list(range(env.n_actions)))

env.after(100, update)

env.mainloop()

本文来自博客园,作者:Mz1,转载请注明原文链接:https://www.cnblogs.com/Mz1-rc/p/17014859.html

如果有问题可以在下方评论或者email:mzi_mzi@163.com