Hadoop JobHistory

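JobHistory records the logs of finished MapReduce jobs and stores them in a directory on HDFS. The JobHistory server is not running by default: it has to be configured in mapred-site.xml and started manually.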

1. mapred-site.xml configuration:

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
        <description>Run MapReduce on YARN; this is a fundamental difference from Hadoop 1.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>xfvm03:10020</value>
        <description>IPC address (host:port) of the MR JobHistory Server.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>xfvm03:19888</value>
        <description>Web UI address for browsing the records of completed MapReduce jobs. The JobHistory server must be running for it to work.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.done-dir</name>
        <value>/opt/big/hadoop-2.7.3/mr-history/done</value>
        <description>Directory where the MR JobHistory Server stores the history files it manages. If unset, Hadoop falls back to ${yarn.app.mapreduce.am.staging-dir}/history/done; /mr-history/done is the value suggested in the Hadoop cluster setup guide.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.intermediate-done-dir</name>
        <value>/opt/big/hadoop-2.7.3/mr-history/mapred/tmp</value>
        <description>Directory where MapReduce jobs write their history files. If unset, Hadoop falls back to ${yarn.app.mapreduce.am.staging-dir}/history/done_intermediate; /mr-history/tmp is the value suggested in the Hadoop cluster setup guide.</description>
      </property>
      <property>
        <name>mapreduce.map.memory.mb</name>
        <value>1024</value>
        <description>Physical memory limit for each map task, in MB.</description>
      </property>
      <property>
        <name>mapreduce.reduce.memory.mb</name>
        <value>2048</value>
        <description>Physical memory limit for each reduce task, in MB.</description>
      </property>
      <!--
      <property>
        <name>mapreduce.map.java.opts</name>
        <value>-Xmx1024m</value>
      </property>
      <property>
        <name>mapreduce.reduce.java.opts</name>
        <value>-Xmx2048m</value>
      </property>
      -->
    </configuration>
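
A broken tag in mapred-site.xml generally prevents Hadoop from loading the file, so it can be worth checking that the file is well-formed before starting any daemon. The following is a minimal sketch using only Python's standard library. The helper names `load_properties` and `is_well_formed` and the inline `SAMPLE` are illustrative assumptions, not part of Hadoop.

```python
import xml.etree.ElementTree as ET

# Shortened sample; in practice, read the real mapred-site.xml from disk.
SAMPLE = """<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>xfvm03:19888</value>
  </property>
</configuration>"""


def load_properties(xml_text):
    """Parse a Hadoop-style configuration document into a name -> value dict.

    Raises xml.etree.ElementTree.ParseError if the XML is not well-formed.
    """
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def is_well_formed(xml_text):
    """Return True if the text parses as XML, False otherwise."""
    try:
        ET.fromstring(xml_text)
        return True
    except ET.ParseError:
        return False


if __name__ == "__main__":
    for name, value in load_properties(SAMPLE).items():
        print(f"{name} = {value}")
```

The check only covers XML syntax; it does not know which property names Hadoop actually recognizes.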

2. Start the JobHistory server

[xfvm@xfvm03 ~]$ mr-jobhistory-daemon.sh start historyserver
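
Once the server is up, one way to confirm that it answers requests is to query the JobHistory server's REST endpoint `/ws/v1/history/info` on the web UI address. The sketch below assumes the `xfvm03:19888` address from mapred-site.xml and that the host is reachable from where the script runs. The function names are hypothetical helpers, and the shape of the returned JSON is not assumed here.

```python
import json
import urllib.request


def history_info_url(webapp_address):
    """Build the JobHistory server info URL from a host:port string."""
    return f"http://{webapp_address}/ws/v1/history/info"


def fetch_history_info(webapp_address, timeout=5.0):
    """Return the decoded JSON document served at the info endpoint.

    Raises urllib.error.URLError if the server cannot be reached.
    """
    request = urllib.request.Request(
        history_info_url(webapp_address),
        headers={"Accept": "application/json"},  # ask explicitly for JSON
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # xfvm03:19888 is mapreduce.jobhistory.webapp.address from mapred-site.xml.
    print(fetch_history_info("xfvm03:19888"))
```

A connection error at this point usually means the server did not start or the address in mapred-site.xml does not match the host it runs on.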

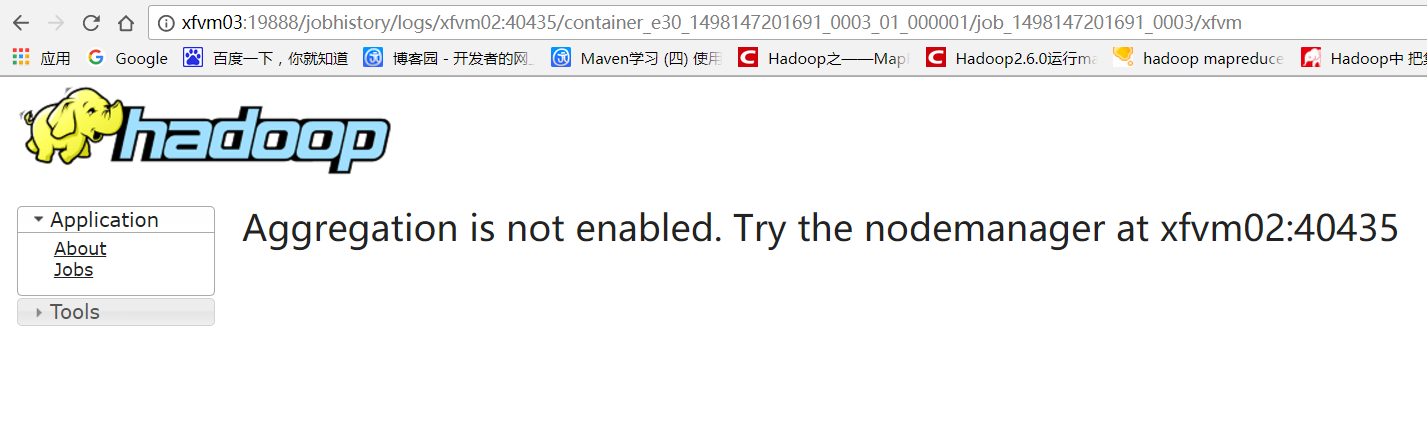

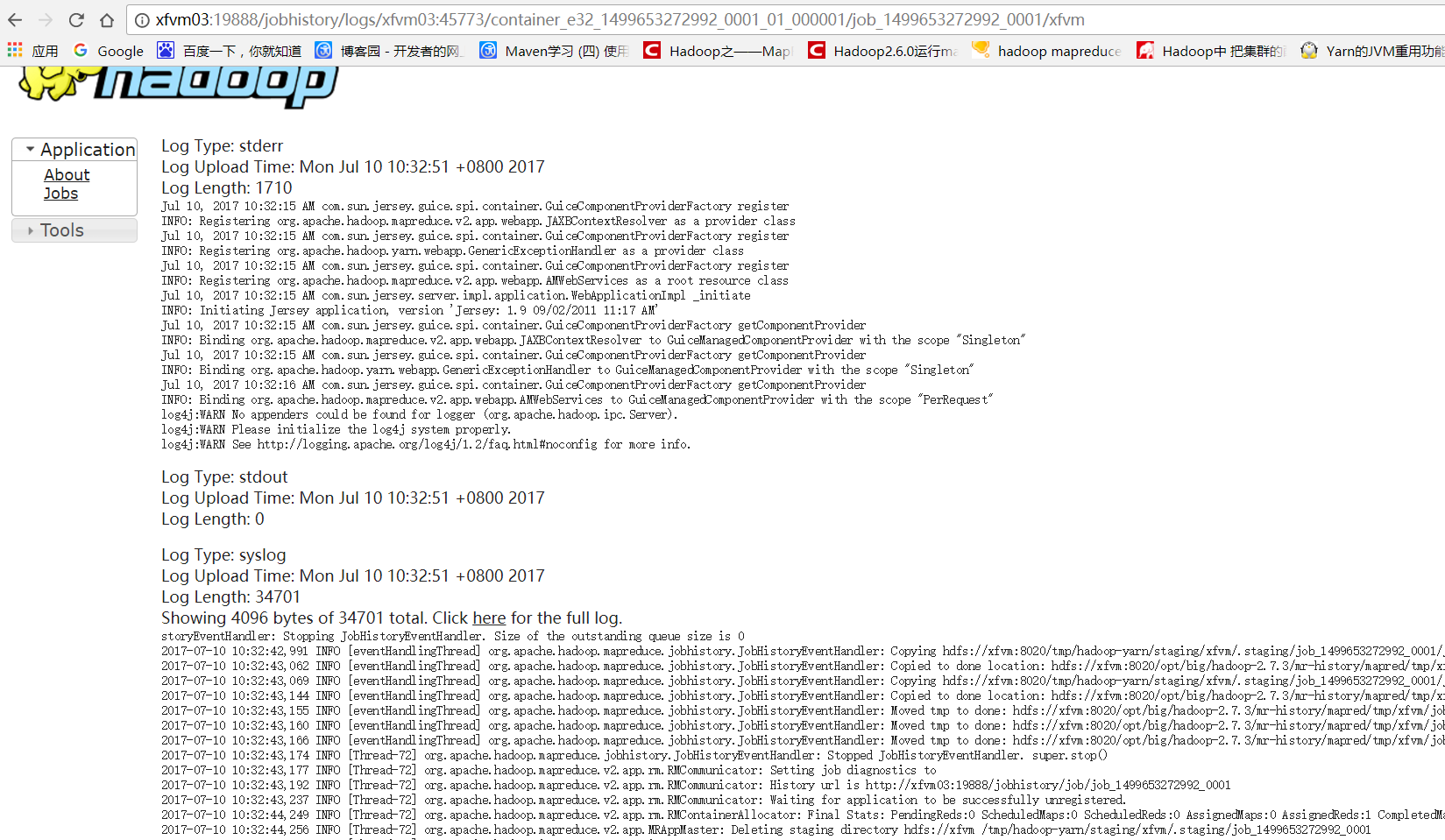

Problem encountered:

3. Solution

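Add the following property to yarn-site.xml and restart the JobHistory server. Log aggregation itself is performed by the NodeManagers, so they need to be restarted as well for the change to take effect.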

    <!-- Enable log aggregation to HDFS -->
    <property>
      <name>yarn.log-aggregation-enable</name>
      <value>true</value>
    </property>

4. The complete yarn-site.xml:

    <?xml version="1.0"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->
    <configuration>
      <!-- Enable ResourceManager high availability -->
      <property>
        <name>yarn.resourcemanager.ha.enabled</name>
        <value>true</value>
      </property>
      <!-- Cluster id of the RMs -->
      <property>
        <name>yarn.resourcemanager.cluster-id</name>
        <value>xfvm-yarn</value>
      </property>
      <!-- Logical ids of the RMs -->
      <property>
        <name>yarn.resourcemanager.ha.rm-ids</name>
        <value>rm1,rm2</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
        <description>The usual value for MapReduce on YARN.</description>
      </property>
      <property>
        <name>yarn.resourcemanager.hostname.rm1</name>
        <value>xfvm01</value>
      </property>
      <property>
        <name>yarn.resourcemanager.hostname.rm2</name>
        <value>xfvm02</value>
      </property>
      <property>
        <name>yarn.resourcemanager.recovery.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.resourcemanager.store.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
      </property>
      <property>
        <name>yarn.resourcemanager.zk-address</name>
        <value>xfvm02:2181,xfvm03:2181,xfvm04:2181</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
      <property>
        <name>yarn.nodemanager.resource.memory-mb</name>
        <value>2048</value>
        <description>If this value is below the scheduler's minimum allocation (1024 MB by default), the NodeManager cannot start and reports: NodeManager from slavenode2 doesn't satisfy minimum allocations, Sending SHUTDOWN signal to the NodeManager.</description>
      </property>
      <property>
        <name>yarn.scheduler.minimum-allocation-mb</name>
        <value>512</value>
        <description>Minimum memory a single task can request; the default is 1024 MB.</description>
      </property>
      <property>
        <name>yarn.scheduler.maximum-allocation-mb</name>
        <value>2048</value>
        <description>Maximum memory a single task can request; the default is 8192 MB.</description>
      </property>
      <!-- Enable log aggregation to HDFS -->
      <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
      </property>
      <!-- How long aggregated logs are kept on HDFS, in seconds (3 days) -->
      <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>259200</value>
      </property>
      <!-- Interval between reconnection attempts after contact with the RM is lost -->
      <property>
        <name>yarn.resourcemanager.connect.retry-interval.ms</name>
        <value>2000</value>
      </property>
      <!-- Enable automatic failover -->
      <property>
        <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>
        <value>true</value>
      </property>
      <!-- Set rm1 on namenode1 and rm2 on namenode2. Note: configuration files are usually copied to the other machines unchanged, but this value must be edited on the other ResourceManager host. -->
      <property>
        <name>yarn.resourcemanager.ha.id</name>
        <value>rm1</value>
        <description>If we want to launch more than one RM in single node, we need this configuration</description>
      </property>
      <!-- ZooKeeper connection address (older property name; Hadoop 2.7 reads yarn.resourcemanager.zk-address, set above) -->
      <property>
        <name>yarn.resourcemanager.zk-state-store.address</name>
        <value>xfvm02:2181,xfvm03:2181,xfvm04:2181</value>
      </property>
      <!-- How long to wait before reconnecting after contact with the scheduler is lost -->
      <property>
        <name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>
        <value>5000</value>
      </property>
      <!-- rm1 addresses -->
      <property>
        <name>yarn.resourcemanager.address.rm1</name>
        <value>xfvm01:8132</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address.rm1</name>
        <value>xfvm01:8130</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address.rm1</name>
        <value>xfvm01:8188</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address.rm1</name>
        <value>xfvm01:8131</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address.rm1</name>
        <value>xfvm01:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.ha.admin.address.rm1</name>
        <value>xfvm01:23142</value>
      </property>
      <!-- rm2 addresses -->
      <property>
        <name>yarn.resourcemanager.address.rm2</name>
        <value>xfvm02:8132</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address.rm2</name>
        <value>xfvm02:8130</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address.rm2</name>
        <value>xfvm02:8188</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address.rm2</name>
        <value>xfvm02:8131</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address.rm2</name>
        <value>xfvm02:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.ha.admin.address.rm2</name>
        <value>xfvm02:23142</value>
      </property>
      <property>
        <name>yarn.nodemanager.local-dirs</name>
        <value>/opt/big/hadoop-2.7.3/yarn/local</value>
      </property>
      <property>
        <name>yarn.nodemanager.log-dirs</name>
        <value>/opt/big/hadoop-2.7.3/yarn/logs</value>
      </property>
      <property>
        <name>mapreduce.shuffle.port</name>
        <value>23080</value>
      </property>
      <!-- Failover proxy provider class -->
      <property>
        <name>yarn.client.failover-proxy-provider</name>
        <value>org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider</value>
      </property>
      <property>
        <name>yarn.resourcemanager.ha.automatic-failover.zk-base-path</name>
        <value>/yarn-leader-election</value>
        <description>Optional setting. The default value is /yarn-leader-election</description>
      </property>
    </configuration>
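
The memory settings in the two files depend on each other, and the retention period is easier to read in days than in seconds. As a rough sanity check, the sketch below takes the values configured in this post and tests two rules that are assumed here: a NodeManager must offer at least the scheduler's minimum allocation (the condition behind the SHUTDOWN message quoted in the configuration), and a task's memory request must not exceed the scheduler's maximum allocation. `check_memory` and `retention_days` are hypothetical helper names, and the rule set is not exhaustive.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# Values taken from the mapred-site.xml and yarn-site.xml in this post (MB).
SETTINGS = {
    "yarn.nodemanager.resource.memory-mb": 2048,
    "yarn.scheduler.minimum-allocation-mb": 512,
    "yarn.scheduler.maximum-allocation-mb": 2048,
    "mapreduce.map.memory.mb": 1024,
    "mapreduce.reduce.memory.mb": 2048,
}


def check_memory(settings):
    """Return a list of human-readable problems found in the memory settings."""
    problems = []
    node_mb = settings["yarn.nodemanager.resource.memory-mb"]
    min_mb = settings["yarn.scheduler.minimum-allocation-mb"]
    max_mb = settings["yarn.scheduler.maximum-allocation-mb"]

    # Assumed rule 1: the NodeManager must offer at least one minimum container.
    if node_mb < min_mb:
        problems.append(
            f"NodeManager memory {node_mb} MB is below the minimum allocation {min_mb} MB"
        )
    if min_mb > max_mb:
        problems.append(
            f"minimum allocation {min_mb} MB exceeds maximum allocation {max_mb} MB"
        )
    # Assumed rule 2: task requests must fit inside the largest allowed container.
    for key in ("mapreduce.map.memory.mb", "mapreduce.reduce.memory.mb"):
        if settings[key] > max_mb:
            problems.append(
                f"{key}={settings[key]} MB exceeds maximum allocation {max_mb} MB"
            )
    return problems


def retention_days(retain_seconds):
    """Convert yarn.log-aggregation.retain-seconds into days."""
    return retain_seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    print(check_memory(SETTINGS) or "memory settings look consistent")
    print(retention_days(259200), "days of aggregated logs retained")
```

With the values above, a reduce task requests exactly the maximum allocation, which is also the full memory of one NodeManager, so each node can run only one reduce container at a time.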
