Java Spark remote submission problem

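When the application is submitted from IntelliJ IDEA, the Spark web UI shows the cluster continually adding and removing executors, and the job never runs properly. The code and screenshots follow: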

Code:

    SparkConf conf = new SparkConf()
            .setSparkHome(sparkHome)
            .setAppName(appName);
    conf.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer");

or

    SparkSession spark = SparkSession.builder()
            .master("spark://server01:7077")
            .appName("HBASEDATA")
            .getOrCreate();

Console output:

    20/07/21 10:29:06 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://DESKTOP-A56927L:4040
    20/07/21 10:29:06 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://server01:7077...
    20/07/21 10:29:06 INFO TransportClientFactory: Successfully created connection to bikini-bottom/192.168.0.91:7077 after 149 ms (0 ms spent in bootstraps)
    ... ...
    20/07/21 10:29:34 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 13
    20/07/21 10:29:34 INFO StandaloneSchedulerBackend: Granted executor ID app-20190721102906-0002/14 on hostPort 192.168.0.91:46381 with 1 core(s), 800.0 MB RAM
    20/07/21 10:29:34 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190721102906-0002/14 is now RUNNING
    20/07/21 10:29:34 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190721102906-0002/12 is now EXITED (Command exited with code 1)
    20/07/21 10:29:34 INFO StandaloneSchedulerBackend: Executor app-20190721102906-0002/12 removed: Command exited with code 1
    20/07/21 10:29:34 INFO BlockManagerMaster: Removal of executor 12 requested

Executor allocation on the Spark cluster:

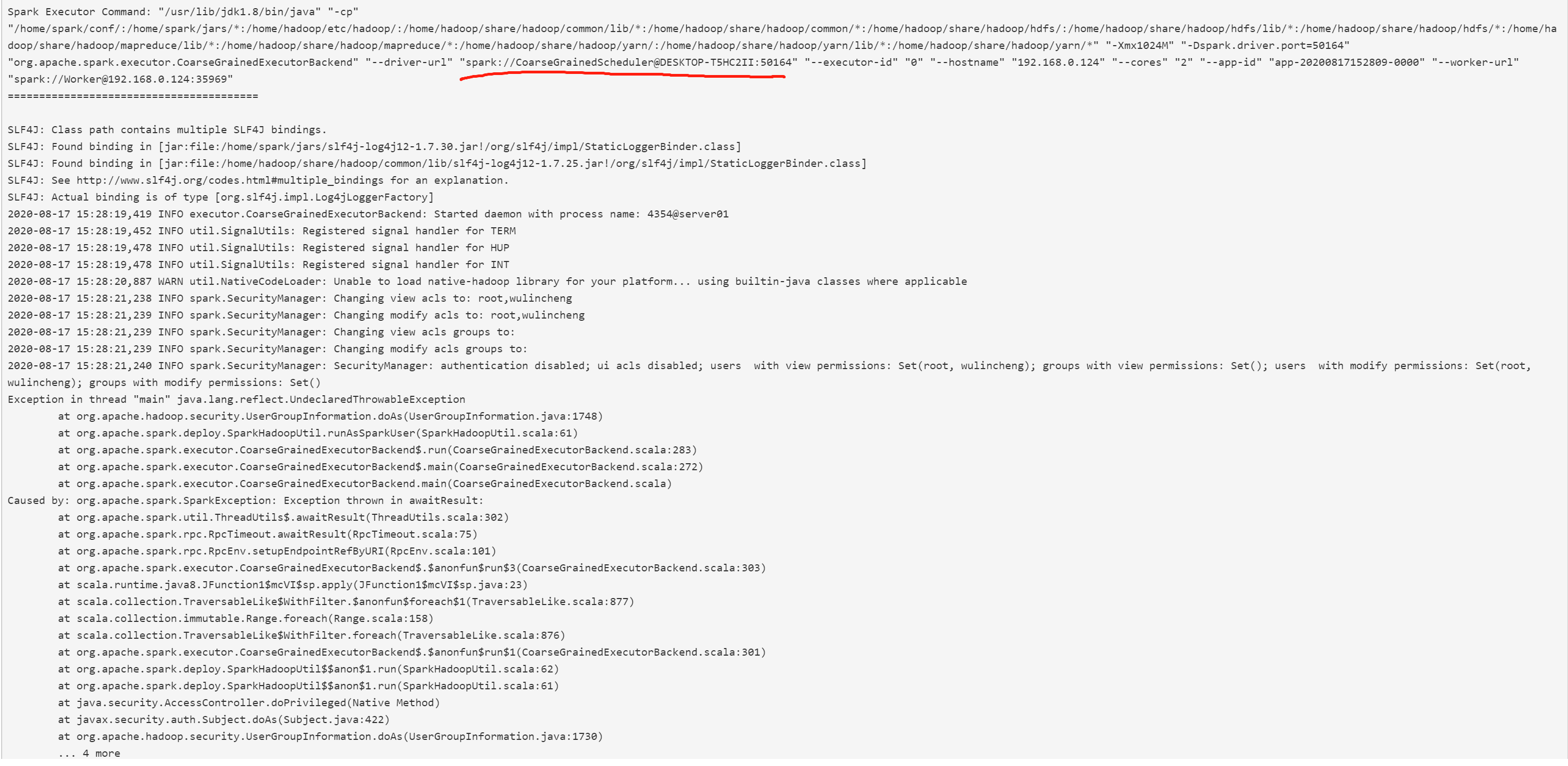

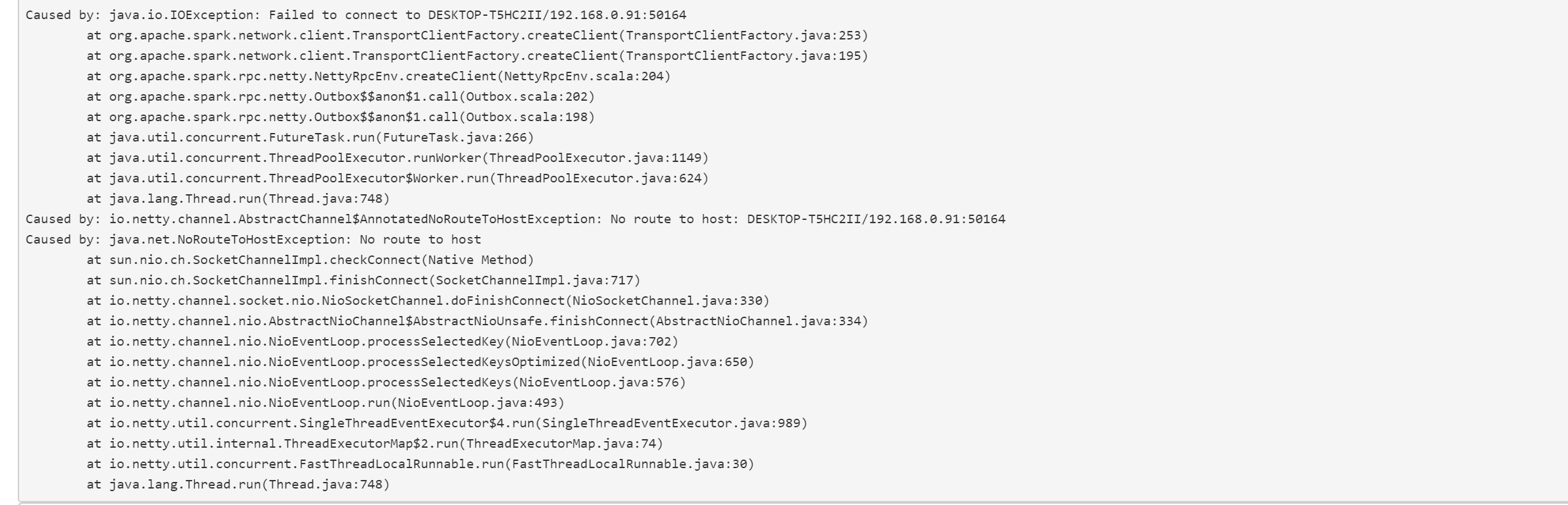

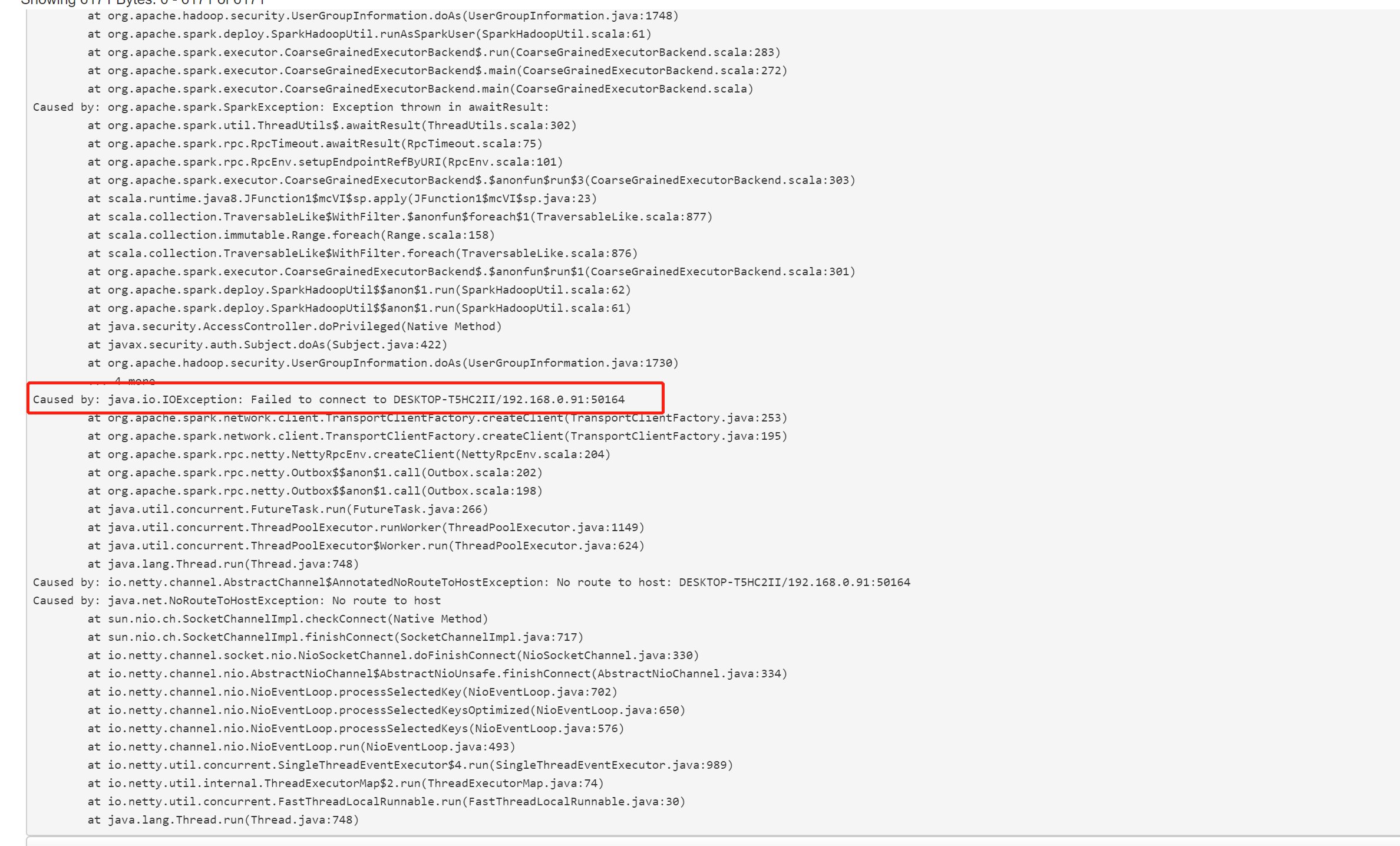

Executor error message:

---------------------------------------------------------------------------------------------------------------------

Solution:

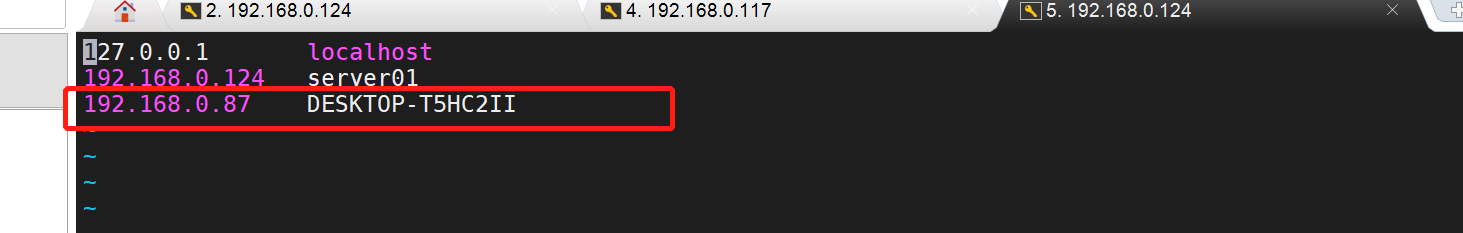

Configure the host:

Modify the code:

    SparkConf conf = new SparkConf()
            .setSparkHome(sparkHome)
            .setAppName(appName)
            // Host name of the driver
            .set("spark.driver.host", "DESKTOP-T5HC2II")
            // Port the driver listens on
            .set("spark.driver.port", "9095")
            // Executor memory
            .set("spark.executor.memory", "800m")
            // Number of CPU cores
            .set("spark.driver.cores", "1")
            .setMaster(master);
    conf.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer");

or

    SparkSession spark = SparkSession.builder()
            .master("spark://server01:7077")
            .appName("HBASEDATA")
            // Host name of the driver
            .config("spark.driver.host", "DESKTOP-T5HC2II")
            // Port the driver listens on
            .config("spark.driver.port", "9092")
            .getOrCreate();
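The post does not say why the executors kept exiting. Because the fix is to pin `spark.driver.host` and `spark.driver.port`, a plausible reading is that the executors could not connect back to the driver running on the IDE machine. Under that assumption, the following minimal sketch can be run on a worker node to check whether the driver's host name resolves and its port accepts TCP connections. The class name `DriverReachability` and its methods are hypothetical helpers, not part of Spark, and it uses only the Java standard library.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical diagnostic helper (not a Spark API): checks whether a TCP
// connection to host:port can be opened from the machine it runs on.
final class DriverReachability {

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    // Returns false if the host name does not resolve, the connection is refused,
    // or the attempt times out.
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults mirror the values from the post; override them on the command line.
        String host = args.length > 0 ? args[0] : "DESKTOP-T5HC2II";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9095;

        boolean reachable = canConnect(host, port, 3000);
        System.out.println(host + ":" + port + (reachable ? " is reachable" : " is NOT reachable"));
    }
}
```

Run it on a worker node while the driver application is running, for example `java DriverReachability.java DESKTOP-T5HC2II 9095`. If it reports the endpoint as not reachable, the host name mapping on the worker or a firewall on the driver machine is worth checking first.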