ELKF Logging System Setup (1) Basics: the ELKF Architecture

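I. Introduction to ELKF

Elasticsearch: searches, analyzes, and stores data.

Logstash: collects logs, formats and filters them (the data-cleaning step), and finally pushes the data to Elasticsearch for storage.

Kibana: visualizes the data.

Beats: a family of single-purpose data shippers that send data from edge machines to Logstash and Elasticsearch. The most widely used is Filebeat, a lightweight log shipper.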

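II. Environment preparation (perform the same steps on all three machines)

Environment:

Prepare three machines:

```text
OS          IP              Hostname  Role                Installed applications
CentOS 7.9  192.168.200.21  elk01     data + master node  Elasticsearch, Logstash, Kibana, Filebeat
CentOS 7.9  192.168.200.22  elk02     data + master node  Elasticsearch, Kibana
CentOS 7.9  192.168.200.23  elk03     data + master node  Elasticsearch, Kibana
```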

1. Disable SELinux

```shell
setenforce 0   # disable SELinux temporarily
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config   # disable SELinux permanently (takes effect after a reboot)
```

2. Raise the maximum number of open files

```shell
cat /etc/security/limits.conf | grep -v "^#" | grep -v "^$"
* soft nproc 65536
* hard nproc 65536
* soft nofile 65536
* hard nofile 65536

cat /etc/sysctl.conf | grep -v "^#"
vm.max_map_count = 655360

# Apply the configuration
sysctl -p

cat /etc/systemd/system.conf | grep -v "^#"
[Manager]
DefaultLimitNOFILE=655360
DefaultLimitNPROC=655360
```
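To confirm that the new limits are in effect, a quick check along the following lines can help. This is a minimal sketch and not part of the original setup: `meets_minimum`, `report`, and `check_host_limits` are hypothetical helper names, and the thresholds are simply the values configured above. `ulimit` reports the limits of the shell it runs in, so log in again after editing `limits.conf` before checking.

```shell
#!/usr/bin/env bash
# Sketch: compare the limits seen by the current shell with the values set above.
# All function names here are hypothetical helpers, not part of any package.

# Succeeds when ACTUAL is "unlimited" or a number >= REQUIRED.
meets_minimum() {
    local actual="$1" required="$2"
    [ "$actual" = "unlimited" ] && return 0
    [ "$actual" -ge "$required" ] 2>/dev/null
}

# Prints one OK/LOW line for a named limit.
report() {
    local name="$1" actual="$2" required="$3"
    if meets_minimum "$actual" "$required"; then
        echo "OK: $name=$actual (required >= $required)"
    else
        echo "LOW: $name=$actual (required >= $required)"
    fi
}

# Reads the live values; call this manually on each node.
check_host_limits() {
    report "nofile" "$(ulimit -n)" 65536
    report "nproc" "$(ulimit -u)" 65536
    report "vm.max_map_count" "$(sysctl -n vm.max_map_count)" 655360
}
```

Running `check_host_limits` on each of the three machines prints one line per setting; any line starting with `LOW` points at a value that has not been applied yet.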

3. Configure the hosts file

```shell
cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.200.21 elk01
192.168.200.22 elk02
192.168.200.23 elk03
```
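Because the Elasticsearch configuration later refers to the nodes by host name, it may be worth checking that every machine really has all three entries. The sketch below assumes the IP-to-name mapping shown above; `hosts_has_entry` and `check_cluster_hosts` are hypothetical helper names introduced only for illustration.

```shell
#!/usr/bin/env bash
# Sketch: verify that a hosts file maps the three cluster names to the expected IPs.
# Function names are hypothetical helpers.

# hosts_has_entry FILE IP NAME
# Succeeds if a non-comment line in FILE starts with IP and lists NAME.
hosts_has_entry() {
    local file="$1" ip="$2" name="$3"
    awk -v ip="$ip" -v name="$name" '
        /^[[:space:]]*#/ { next }
        $1 == ip {
            for (i = 2; i <= NF; i++) if ($i == name) found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "$file"
}

# check_cluster_hosts [FILE]
# Prints one "missing: IP NAME" line for every expected entry that is absent.
check_cluster_hosts() {
    local file="${1:-/etc/hosts}" ip name
    while read -r ip name; do
        hosts_has_entry "$file" "$ip" "$name" || echo "missing: $ip $name"
    done <<'EOF'
192.168.200.21 elk01
192.168.200.22 elk02
192.168.200.23 elk03
EOF
}
```

Calling `check_cluster_hosts` with no argument inspects `/etc/hosts`; no output means all three entries are present.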

4. Install the Java environment

```shell
# List the JDK RPM packages available from the yum repositories
yum list | grep jdk

# Install JDK 11
yum install -y java-11-openjdk*

# Check the Java version
[root@elk01 ~]# java -version
openjdk version "11.0.19" 2023-04-18 LTS
OpenJDK Runtime Environment (Red_Hat-11.0.19.0.7-1.el7_9) (build 11.0.19+7-LTS)
OpenJDK 64-Bit Server VM (Red_Hat-11.0.19.0.7-1.el7_9) (build 11.0.19+7-LTS, mixed mode, sharing)
```

III. Install and configure Elasticsearch (install on all three machines; note: after installation, start the master node named in the configuration file first, and leave the other nodes stopped until they have been enrolled in the cluster)

1. Install Elasticsearch

```shell
rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

[root@elk01 ~]# cat /etc/yum.repos.d/elasticsearch.repo
[elasticsearch]
name=Elasticsearch repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=0
autorefresh=1
type=rpm-md

yum install --enablerepo=elasticsearch elasticsearch -y
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.aliyun.com
Resolving Dependencies
--> Running transaction check
---> Package elasticsearch.x86_64.0.8.9.0-1 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

===============================================================================================
 Package              Arch         Version        Repository           Size
===============================================================================================
Installing:
 elasticsearch        x86_64       8.9.0-1        elasticsearch        578 M

Transaction Summary
===============================================================================================
Install  1 Package

Total download size: 578 M
Installed size: 1.2 G
Downloading packages:
elasticsearch-8.9.0-x86_64.rpm                                        | 578 MB  01:09:19
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
Creating elasticsearch group... OK
Creating elasticsearch user... OK
  Installing : elasticsearch-8.9.0-1.x86_64                                              1/1
--------------------------- Security autoconfiguration information ------------------------------

Authentication and authorization are enabled.
TLS for the transport and HTTP layers is enabled and configured.

The generated password for the elastic built-in superuser is : u9COgfA0okh4LD+fRIRh

If this node should join an existing cluster, you can reconfigure this with
'/usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token '
after creating an enrollment token on your existing cluster.

You can complete the following actions at any time:

Reset the password of the elastic built-in superuser with
'/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic'.

Generate an enrollment token for Kibana instances with
'/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana'.

Generate an enrollment token for Elasticsearch nodes with
'/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s node'.

-------------------------------------------------------------------------------------------------
### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd
 sudo systemctl daemon-reload
 sudo systemctl enable elasticsearch.service
### You can start elasticsearch service by executing
 sudo systemctl start elasticsearch.service
  Verifying  : elasticsearch-8.9.0-1.x86_64                                              1/1

Installed:
  elasticsearch.x86_64 0:8.9.0-1

Complete!
```

2. Configure Elasticsearch

```shell
[root@elk01 ~]# cat /etc/elasticsearch/elasticsearch.yml | grep -v "^#"
# Cluster name
cluster.name: my-elk
# Name of this node; it must be unique within the cluster
node.name: elk01
# Data directory
path.data: /var/lib/elasticsearch
# Log directory
path.logs: /var/log/elasticsearch
# Listen address
network.host: 0.0.0.0
# Listen port
http.port: 9200
# List of cluster nodes
discovery.seed_hosts: ["elk01", "elk02", "elk03"]
# Master node for the first startup; comment this line out on the other nodes
cluster.initial_master_nodes: ["elk01"]
xpack.security.enabled: true
xpack.security.enrollment.enabled: true
xpack.security.http.ssl:
  enabled: true
  keystore.path: certs/http.p12
xpack.security.transport.ssl:
  enabled: true
  verification_mode: certificate
  keystore.path: certs/transport.p12
  truststore.path: certs/transport.p12
http.host: 0.0.0.0

# Open the required firewall ports
firewall-cmd --add-port=9200/tcp --permanent
firewall-cmd --add-port=9300/tcp --permanent
firewall-cmd --reload

# Start Elasticsearch and enable it at boot (note: once all three machines are
# configured, start only the master node named in the configuration file;
# do not start the other nodes yet)
systemctl enable elasticsearch
systemctl start elasticsearch
```

The installer configures Elasticsearch as a single-node cluster by default. To let other nodes join the existing cluster, run the following command on the configured master node to generate an enrollment token.

```shell
[root@server01 ~]# /usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s node
eyJ2ZXIiOiI4LjkuMSIsImFkciI6WyIxOTIuMTY4LjEwMC4yMTo5MjAwIl0sImZnciI6IjhlZDhmMmY2MjExYWM1YzM5NzdjNzllZDdmODY1MDFiY2E5YjlkNDA4ZTgwNDg0NjRiZDViZWFkNzZiZjRmMjIiLCJrZXkiOiJKX0RERG9vQkRPSVRJM0NlWnNIODpINUFMeHo1eFQ2bU5vcUF1cDNxRk1RIn0=
```

Then, on the new Elasticsearch node, pass the enrollment token as an argument to the elasticsearch-reconfigure-node tool:

```shell
[root@server02 ~]# /usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token eyJ2ZXIiOiI4LjkuMSIsImFkciI6WyIxOTIuMTY4LjEwMC4yMTo5MjAwIl0sImZnciI6IjhlZDhmMmY2MjExYWM1YzM5NzdjNzllZDdmODY1MDFiY2E5YjlkNDA4ZTgwNDg0NjRiZDViZWFkNzZiZjRmMjIiLCJrZXkiOiJKX0RERG9vQkRPSVRJM0NlWnNIODpINUFMeHo1eFQ2bU5vcUF1cDNxRk1RIn0=

This node will be reconfigured to join an existing cluster, using the enrollment token that you provided.
This operation will overwrite the existing configuration. Specifically:
  - Security auto configuration will be removed from elasticsearch.yml
  - The [certs] config directory will be removed
  - Security auto configuration related secure settings will be removed from the elasticsearch.keystore
Do you want to continue with the reconfiguration process [y/N]y
```

If there are several nodes, repeat the same steps on each one, then start the elasticsearch service. After startup you can follow the logs with journalctl -f.

```shell
[root@server02 ~]# journalctl
-- Logs begin at Sun 2023-08-20 00:17:50 CST, end at Sun 2023-08-20 03:05:01 CST. --
Aug 20 00:17:50 centos7 systemd-journal[107]: Runtime journal is using 8.0M (max allowed 188.5M
Aug 20 00:17:50 centos7 kernel: Initializing cgroup subsys cpuset
Aug 20 00:17:50 centos7 kernel: Initializing cgroup subsys cpu
Aug 20 00:17:50 centos7 kernel: Initializing cgroup subsys cpuacct
............
```

You can also list only the Elasticsearch log entries with journalctl --unit elasticsearch.

```shell
[root@server02 ~]# journalctl --unit elasticsearch
-- Logs begin at Sun 2023-08-20 00:17:50 CST, end at Sun 2023-08-20 03:05:01 CST. --
Aug 20 01:14:44 elk02 systemd[1]: Starting Elasticsearch...
Aug 20 01:15:30 elk02 systemd[1]: Started Elasticsearch.
Aug 20 01:24:29 elk02 systemd[1]: Stopping Elasticsearch...
Aug 20 01:24:31 elk02 systemd[1]: Stopped Elasticsearch.
```

Check the cluster status and information from any node (elastic is the user name; 086530 is the password).

```shell
# Query cluster health (-k skips certificate verification)
[root@elk01 ~]# curl -kXGET "https://localhost:9200/_cluster/health?pretty=true" -u elastic:086530

# Query the running Elasticsearch instance
[root@elk01 ~]# curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic:086530 "https://localhost:9200"

# Query cluster health and the node list, verifying the certificate against the CA
[root@elk01 ~]# curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic:086530 "https://localhost:9200/_cluster/health?pretty=true"
[root@server02 ~]# curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic:086530 "https://localhost:9200/_cat/nodes"

# Change the default elastic password (changing it on any one node changes it for the whole cluster).
# The -i flag makes the tool prompt for a password instead of generating one.
[root@elk01 ~]# /usr/share/elasticsearch/bin/elasticsearch-reset-password -i -u elastic
This tool will reset the password of the [elastic] user.
You will be prompted to enter the password.
Please confirm that you would like to continue [y/N]y

Enter password for [elastic]:
Re-enter password for [elastic]:
Password for the [elastic] user successfully reset.
```
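Once the other nodes have been enrolled and started, it can be handy to wait until the cluster reports green instead of re-running the health query by hand. The sketch below relies on the `wait_for_status` and `timeout` query parameters of the `_cluster/health` API. The helper names `health_status` and `wait_for_green`, and the `ES_PASSWORD` environment variable, are assumptions made for this example; the CA path is the one used in the commands above.

```shell
#!/usr/bin/env bash
# Sketch: block until the cluster is green (or the server-side timeout expires).
# health_status, wait_for_green and ES_PASSWORD are hypothetical names.

# Reads _cluster/health JSON on stdin and prints the value of "status".
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# wait_for_green [BASE_URL]
# Asks Elasticsearch to hold the request until the status is green or 60s pass,
# then prints the status it ended up with (green, yellow or red).
wait_for_green() {
    local url="${1:-https://localhost:9200}"
    curl -s --cacert /etc/elasticsearch/certs/http_ca.crt \
         -u "elastic:${ES_PASSWORD}" \
         "${url}/_cluster/health?wait_for_status=green&timeout=60s" | health_status
}
```

With the password exported as `ES_PASSWORD`, running `wait_for_green` on any node prints the final status. Reading the password from the environment also keeps it out of the command line, unlike the `-u elastic:...` form.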

IV. Install and configure Kibana (install and configure on all three machines)

1. Install Kibana

```shell
[root@elk8]# cat /etc/yum.repos.d/kibana.repo
[kibana-8.x]
name=Kibana repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

yum install -y kibana
```

2. Configure Kibana

```shell
# vim /etc/kibana/kibana.yml
server.port: 5601
server.host: "0.0.0.0"
server.name: "elk01"

# If server.publicBaseUrl is missing, Kibana warns that it should be configured
# for production use and that some features may not work properly.
# Set it to the address you use to reach Kibana; it must not end with /
server.publicBaseUrl: "http://192.168.200.21:5601"

# To switch the Kibana UI to Chinese, add this line to kibana.yml
i18n.locale: "zh-CN"

# Generate the Kibana encryption keys and add them to kibana.yml on all three machines.
# The three instances share one Elasticsearch cluster, so use the same key values on each.
# xpack.encryptedSavedObjects.encryptionKey: Used to encrypt stored objects such as dashboards and visualizations
# xpack.reporting.encryptionKey: Used to encrypt saved reports
# xpack.security.encryptionKey: Used to encrypt session information
[root@aclab ~]# /usr/share/kibana/bin/kibana-encryption-keys generate
xpack.encryptedSavedObjects.encryptionKey: 5bb5e37c09fd6b05958be5a3edc82cf9
xpack.reporting.encryptionKey: b2b873b52ab8ec55171bd8141095302c
xpack.security.encryptionKey: 30670e386fab78f50b012e25cb284e88

# Open port 5601 in the firewall
firewall-cmd --add-port=5601/tcp --permanent
firewall-cmd --reload

# Enable Kibana at boot
systemctl enable kibana
# Start Kibana
systemctl start kibana

# Generate a Kibana enrollment token
/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana
eyJ2ZXIiOiI4LjAuMSIsImFkciI6WyIxOTIuMTY4LjIxMC4xOTo5MjAwIl0sImZnciI6IjMzYjUwYTkxN2VmYjIwZjhjYzFjMmM0ZjFhMDdlY2Q2MTliZGUxOTU4MzMyOGY2MTJjMzMyODFjNzI0ODQ5NDYiLCJrZXkiOiJBemgtXzRBQnBtQ3lIN2p4MG1VdDpNN0tiNTFMNlM5NnhwU1lTdGpIOUVRIn0=

# Test Kibana: open http://192.168.200.21:5601/ in a browser
# Paste the token generated above into the token field

# Retrieve the verification code on the server
sh /usr/share/kibana/bin/kibana-verification-code

# Enter the Elasticsearch user name and password to log in
# After logging in, you can change the elastic password from the Kibana UI if needed
# (this changes the login password for both Elasticsearch and Kibana)
```

Note: on elk02 and elk03, change server.name and server.publicBaseUrl to that machine's own host name and IP-based URL.
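Since the same `kibana.yml` edits are repeated on three machines, a small check for the encryption settings can catch a node where they were forgotten. This is only a sketch: `missing_encryption_keys` is a hypothetical helper name, and the check looks for the three setting names listed above at the start of a line, so commented-out entries count as missing.

```shell
#!/usr/bin/env bash
# Sketch: report which of the three encryption settings are absent from a kibana.yml.
# missing_encryption_keys is a hypothetical helper name.

# missing_encryption_keys [FILE]
# Prints the name of every expected setting that has no uncommented line in FILE.
missing_encryption_keys() {
    local file="${1:-/etc/kibana/kibana.yml}" key
    for key in xpack.encryptedSavedObjects.encryptionKey \
               xpack.reporting.encryptionKey \
               xpack.security.encryptionKey; do
        grep -q "^${key}:" "$file" || echo "$key"
    done
}
```

Running `missing_encryption_keys` on each node should print nothing once all three keys are in place.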

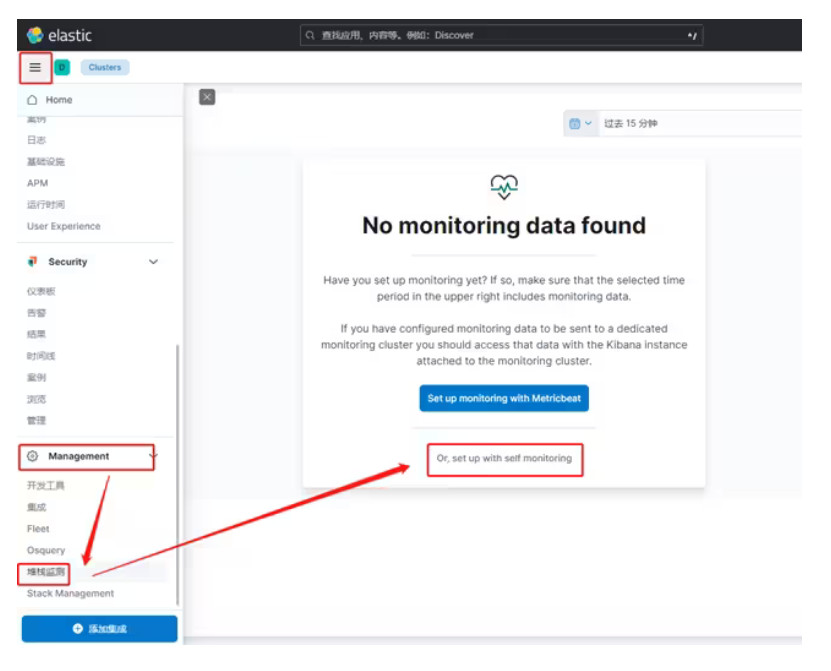

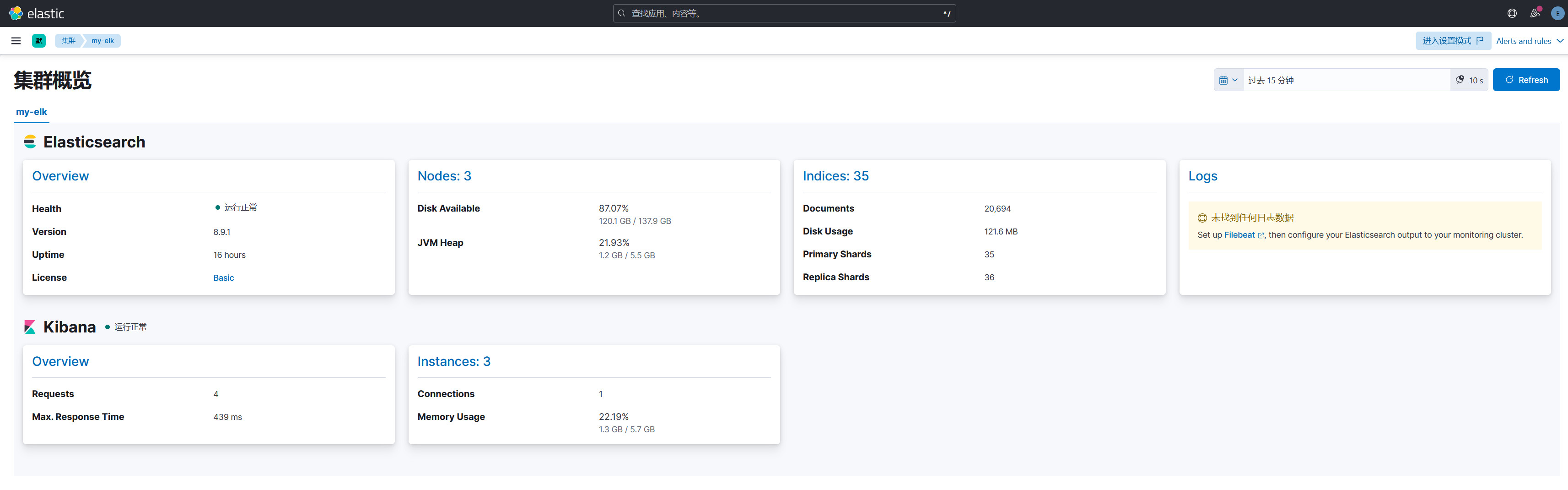

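3. Configure Elasticsearch monitoring and management in Kibana

(1) After logging in to Kibana for the first time, choose "Explore on my own".

(2) Open Management, find "Stack Monitoring", and turn on monitoring for the ES cluster.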

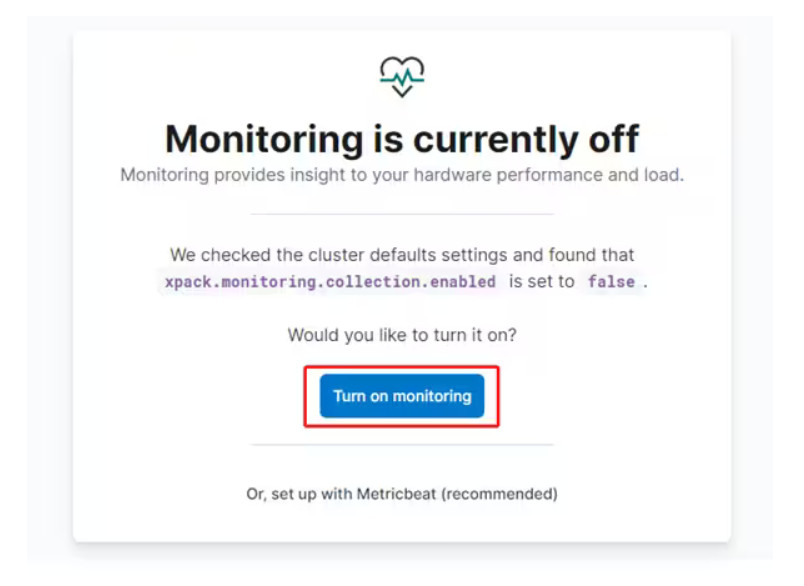

(3) Click to turn on monitoring.

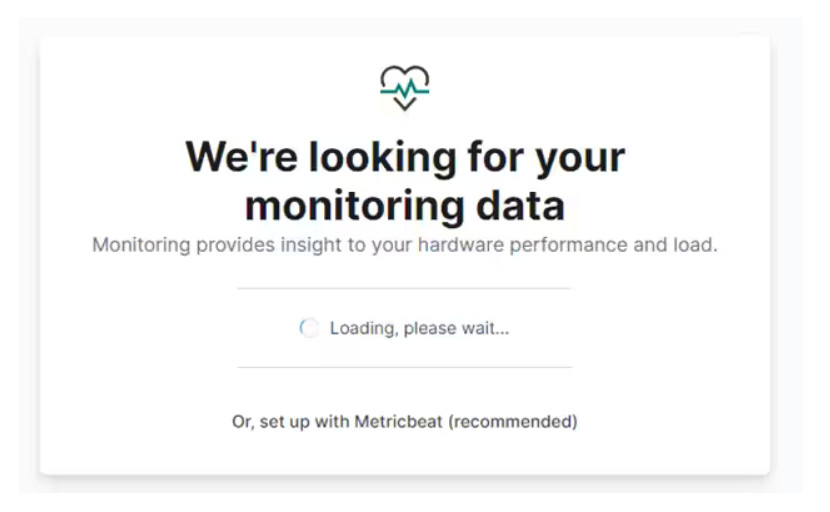

(4) Wait a moment.

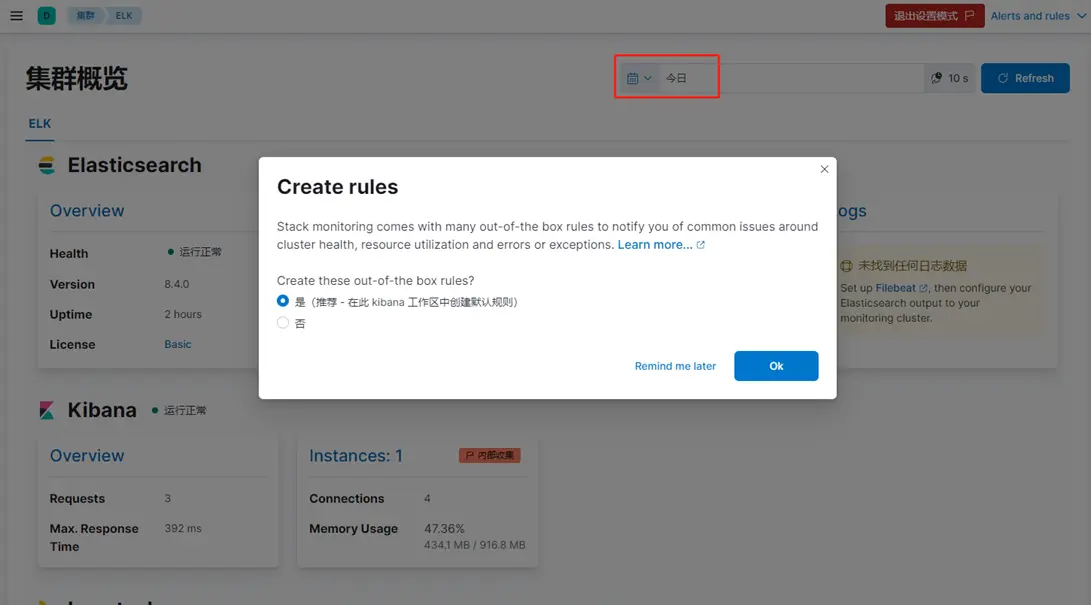

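(5) If the page above keeps loading for a long time without showing anything, use the time picker in the upper-right corner to select today's time range, and the data appears. A create-rules dialog then pops up; accepting the recommended defaults is fine.

(6) The cluster status is now visible.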

V. Install and configure Logstash (install Logstash on elk01 only)

1. Install Logstash

```shell
# Add the yum repository
[root@elk01 ~]# cat /etc/yum.repos.d/logstash.repo
[logstash-8.x]
name=Elastic repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

# Install
yum install -y logstash
```

2. Configure Logstash

```shell
# Copy the ES certificates to the Logstash directory. ES is served over HTTPS,
# so Logstash must verify the certificate when it sends logs to ES.
cp -r /etc/elasticsearch/certs /etc/logstash/

# Create symlinks
ln -s /usr/share/logstash/bin/logstash /bin/
ln -s /usr/share/logstash/bin/logstash-plugin /bin/
ln -s /usr/share/logstash/bin/logstash.lib.sh /usr/bin/

# Collect logs with Logstash. Create a pipeline configuration file in the Logstash
# directory. This server has no applications installed, so the operating system
# log serves as the example.
[root@elk01 ~]# cat /etc/logstash/conf.d/systemlog.conf
input {
  file {
    path => ["/var/log/messages"]
    type => "system"
    start_position => "beginning"
  }
}

output {
  elasticsearch {
    hosts => ["https://192.168.200.21:9200", "https://192.168.200.22:9200", "https://192.168.200.23:9200"]
    index => "192.168.200.21-syslog-%{+YYYY.MM}"
    user => "elastic"
    password => "086530qwe"
    ssl => "true"
    cacert => "/etc/logstash/certs/http_ca.crt"
  }
}

# Validate the configuration file; Logstash can only start normally if the output ends with OK
[root@elk01 ~]# logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/systemlog.conf --config.test_and_exit

# Start the service
systemctl enable logstash
systemctl start logstash

# Open the required firewall port
firewall-cmd --add-port=9600/tcp --permanent
firewall-cmd --reload

# Test Logstash
[root@elk01 ~]# logstash -e 'input { stdin { } } output { stdout {} }'
Using bundled JDK: /usr/share/logstash/jdk
............
ipelines=>[:main], :non_running_pipelines=>[]}
# Type some text and press Enter
hello word
{
      "@version" => "1",
       "message" => "hello word",
          "host" => {
        "hostname" => "elk01"
    },
    "@timestamp" => 2023-08-20T16:54:10.531323818Z,
         "event" => {
        "original" => "hello word"
    }
}
```

Explanation: by default the stdout output plugin uses the rubydebug codec, so the output includes the version, timestamp, and similar metadata. The message field holds the text typed on the command line.

@timestamp: the time at which the event occurred
host: the host on which the event occurred
@version: the version of the event format

```shell
# Collect logs: run the pipeline to start collecting, then view the logs.
logstash -f /etc/logstash/conf.d/systemlog.conf &
```
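Before switching to Kibana, it may help to confirm from the command line that the monthly index was created. The sketch below builds the index name that the `index => "192.168.200.21-syslog-%{+YYYY.MM}"` setting would produce for the current month (Logstash formats the date from the event's UTC `@timestamp`) and looks it up with the `_cat/indices` API. `monthly_index`, `show_index`, and the `ES_PASSWORD` environment variable are hypothetical names chosen for this example.

```shell
#!/usr/bin/env bash
# Sketch: compute the expected monthly index name and look it up in Elasticsearch.
# monthly_index, show_index and ES_PASSWORD are hypothetical names.

# monthly_index PREFIX [YYYY.MM]
# Prints PREFIX-YYYY.MM, defaulting to the current month in UTC.
monthly_index() {
    local prefix="$1"
    local month="${2:-$(date -u +%Y.%m)}"
    echo "${prefix}-${month}"
}

# show_index INDEX_NAME
# Lists the index (health, document count, size) using the CA copied for Logstash.
show_index() {
    curl -s --cacert /etc/logstash/certs/http_ca.crt \
         -u "elastic:${ES_PASSWORD}" \
         "https://192.168.200.21:9200/_cat/indices/$1?v"
}
```

For example, `show_index "$(monthly_index 192.168.200.21-syslog)"` should print one row for this month's index once Logstash has shipped its first events.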

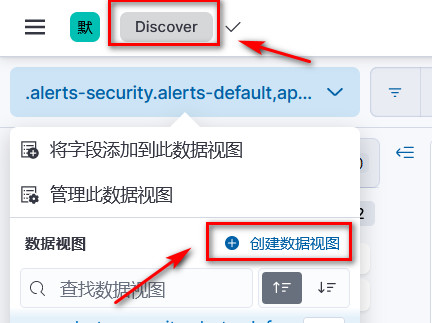

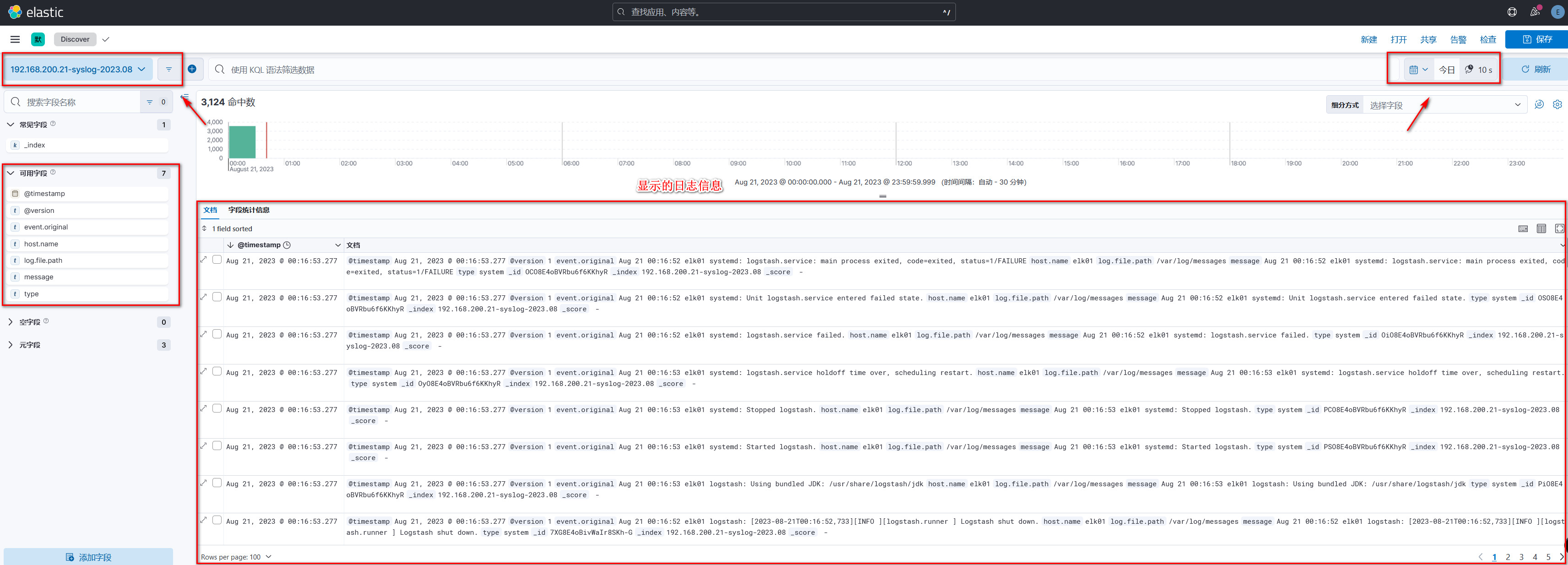

View the logs in Kibana. Open the Kibana page, click Discover in the left-hand menu, and create a data view as shown below.

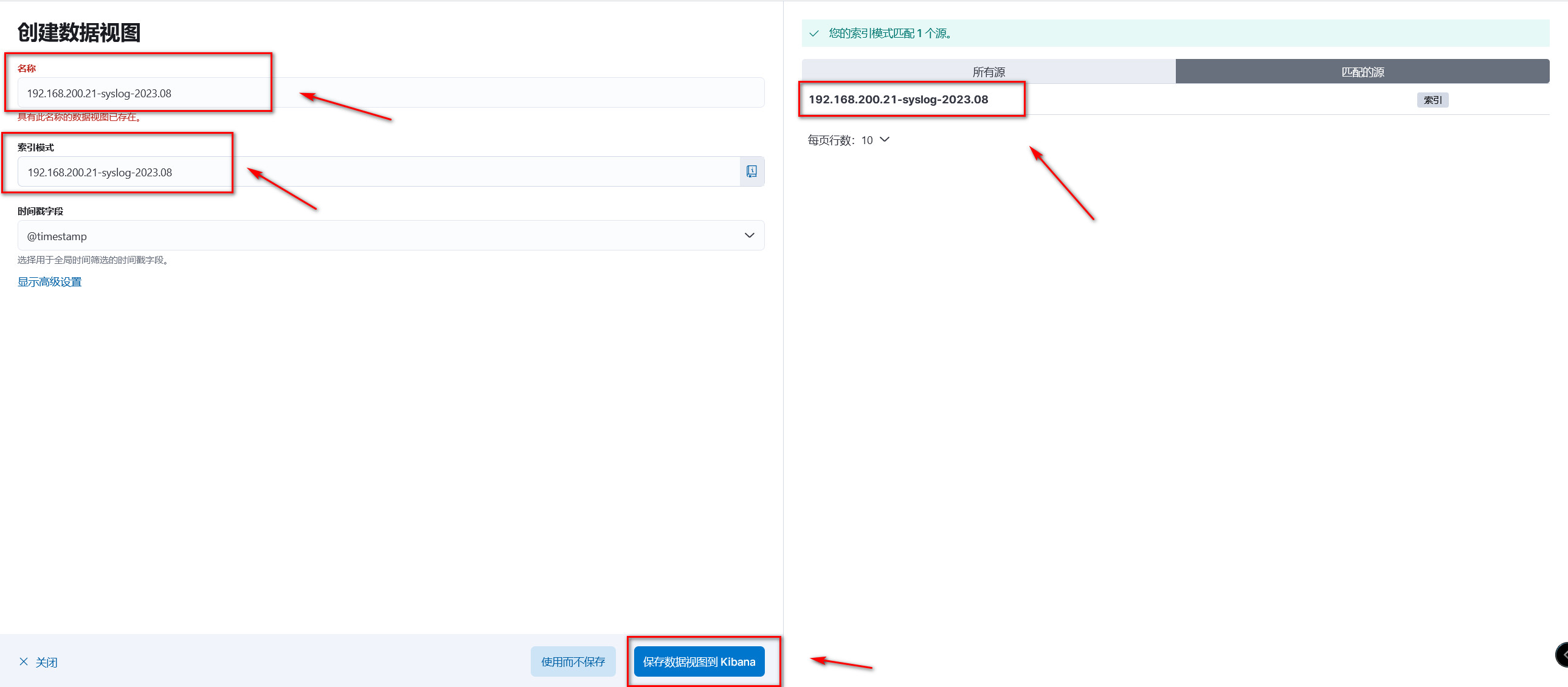

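Copy and paste the index name into the data view, then save it; you can give it any name you like. (I had already created one here, so Kibana reports that the data view already exists.)

The logs are now visible. The left side offers filters for the log fields, and the time range can be selected on the right.

That completes a simple end-to-end demonstration of log collection with ELK: Logstash collects the logs and sends them to ES, and Kibana reads the data from ES to display and query the logs.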

VI. Install and configure Filebeat to collect logs

1. Install Filebeat

```shell
# Configure the yum repository
[root@elk01 ~]# cat /etc/yum.repos.d/elastic.repo
[elastic-8.x]
name=Elastic repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

# Install
yum install -y filebeat
```

2. Configure Filebeat to collect logs

```shell
# As with Logstash, certificate verification is required, so copy the ES certificates to the Filebeat directory.
cp -r /etc/elasticsearch/certs /etc/filebeat/
```

Edit the Filebeat configuration file, filebeat.yml, after making a backup of it. To keep things simple, clear the original contents and write only our own log collection settings. This example collects the Kibana log, which is written in JSON format.

```shell
[root@elk01 ~]# cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/kibana/kibana.log
  json.keys_under_root: true
  json.overwrite_keys: true

output.elasticsearch:
  hosts: ["192.168.200.21:9200", "192.168.200.22:9200", "192.168.200.23:9200"]
  index: "filebeat-kibanalog-%{+yyyy.MM}"
  protocol: "https"
  username: "elastic"
  password: "086530qwe"
  ssl.certificate_authorities:
    - /etc/filebeat/certs/http_ca.crt

setup.template.name: "filebeat"
setup.template.pattern: "filebeat-*"
setup.template.enabled: false
setup.template.overwrite: true

# Start Filebeat to collect logs. The configuration is YAML, whose syntax is strict,
# so check the configuration file for syntax errors before starting Filebeat.
[root@elk01 ~]# filebeat test config -c /etc/filebeat/filebeat.yml
Config OK

# Start the service
systemctl enable filebeat.service
systemctl start filebeat.service
```
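Besides the syntax check, Filebeat also ships a `test output` subcommand that tries to connect to the configured Elasticsearch hosts, which is a convenient way to catch certificate or password problems before starting the service. The wrapper below is a sketch only: `preflight_filebeat` and the `FILEBEAT_BIN` override are hypothetical names, introduced so that both checks run in order and stop at the first failure.

```shell
#!/usr/bin/env bash
# Sketch: run both Filebeat self-tests and stop at the first failure.
# preflight_filebeat and FILEBEAT_BIN are hypothetical names.

# preflight_filebeat [CONFIG]
# Runs "filebeat test config" and then "filebeat test output" against CONFIG.
preflight_filebeat() {
    local cfg="${1:-/etc/filebeat/filebeat.yml}"
    local bin="${FILEBEAT_BIN:-filebeat}"
    "$bin" test config -c "$cfg" || { echo "config check failed" >&2; return 1; }
    "$bin" test output -c "$cfg" || { echo "output check failed" >&2; return 1; }
}
```

A typical use is `preflight_filebeat && systemctl start filebeat.service`, so the service is started only when both checks pass.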

As with Logstash, open Kibana, create a data view for the index, and view the logs.
