Containerized Installation of Milvus 1.1

Prerequisites:

● Kubernetes 1.10+

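● Helm >= 2.12.0 (note: the commands in this guide, such as helm pull and helm install with a positional release name, use Helm 3 syntax)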

Installing the Chart:

1. Add the stable repository and the Milvus repository

helm repo add stable https://charts.helm.sh/stable

helm repo add milvus https://milvus-io.github.io/milvus-helm/

2. Update the chart repositories

[root@test:/data/test ]# helm repo update

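3. Because of network problems, installing Milvus online may fail, so it is recommended to download the chart package and install from the local copy. Chart version 1.1.6 corresponds to Milvus 1.1.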

[root@love.k8s-dev-worker2.154-116:/data/test ]# helm pull milvus/milvus --version 1.1.6

Unpack the downloaded package milvus-1.1.6.tgz:

[root@love.k8s-dev-worker2.154-116:/data/test ]# tar -xzvf milvus-1.1.6.tgz

Directory structure:

[root@love.k8s-dev-worker2.154-116:/data/test ]# tree milvus

    milvus
    ├── charts
    │   └── mysql
    │       ├── Chart.yaml
    │       ├── README.md
    │       ├── templates
    │       │   ├── configurationFiles-configmap.yaml
    │       │   ├── deployment.yaml
    │       │   ├── _helpers.tpl
    │       │   ├── initializationFiles-configmap.yaml
    │       │   ├── NOTES.txt
    │       │   ├── pvc.yaml
    │       │   ├── secrets.yaml
    │       │   ├── serviceaccount.yaml
    │       │   ├── servicemonitor.yaml
    │       │   ├── svc.yaml
    │       │   └── tests
    │       │       ├── test-configmap.yaml
    │       │       └── test.yaml
    │       └── values.yaml        (main file to edit for MySQL)
    ├── Chart.yaml
    ├── README.md
    ├── requirements.lock
    ├── requirements.yaml
    ├── templates
    │   ├── admin-deployment.yaml
    │   ├── admin-svc.yaml
    │   ├── config.yaml
    │   ├── _helpers.tpl
    │   ├── _mishards_config.tpl
    │   ├── mishards-deployment.yaml
    │   ├── mishards-rbac.yaml
    │   ├── mishards-svc.yaml
    │   ├── NOTES.txt
    │   ├── pvc.yaml
    │   ├── readonly-deployment.yaml
    │   ├── _readonly_server_config.tpl
    │   ├── readonly-svc.yaml
    │   ├── _server_config.tpl
    │   ├── writable-deployment.yaml
    │   └── writable-svc.yaml
    └── values.yaml                (main file to edit for Milvus)

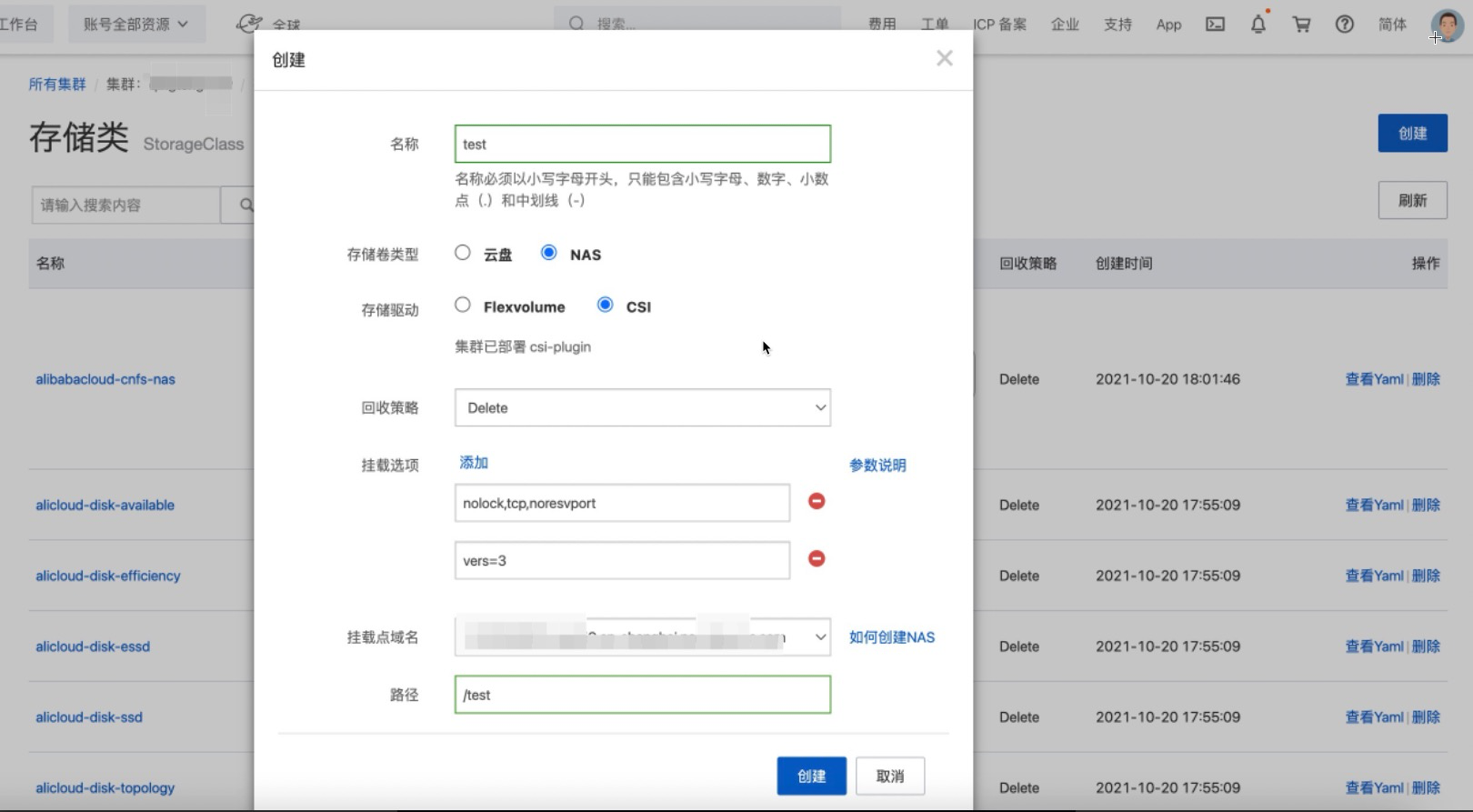

4. Configure persistence

4.1 Create StorageClasses that use NAS as the storage type. Two are needed: one for MySQL storage and one for Milvus storage.
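Step 4.1 does not show a manifest, so here is a minimal sketch of what the two StorageClass objects might look like. It assumes an ACK cluster with the Alibaba Cloud CSI NAS plugin; the provisioner name, the parameters, and the NAS mount target are assumptions and placeholders, not values taken from the original setup. Only the names milvus-mysql and milvus-data-sc come from the values.yaml edits in step 5.

```shell
#!/usr/bin/env bash
# Sketch: write two NAS-backed StorageClass manifests to a local file.
# ASSUMPTIONS: provisioner, parameters and NAS_SERVER are placeholders for an
# ACK cluster with the CSI NAS plugin; replace them with your own values.
set -eu

NAS_SERVER="${NAS_SERVER:-your-nas-mount-target.example:/milvus/}"   # placeholder

: > milvus-storageclass.yaml
for name in milvus-mysql milvus-data-sc; do
  cat >> milvus-storageclass.yaml <<EOF
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
provisioner: nasplugin.csi.alibabacloud.com   # assumed CSI NAS provisioner
parameters:
  volumeAs: subpath
  server: "${NAS_SERVER}"
reclaimPolicy: Retain
EOF
done

echo "Wrote milvus-storageclass.yaml. Review it, then run:"
echo "  kubectl apply -f milvus-storageclass.yaml"
```

The script only writes the file; applying it is left as a manual step so the placeholder mount target can be checked first.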

5. Customize the Milvus chart files

5.1 Add the MySQL storage configuration

Change into the MySQL subchart directory inside the milvus folder:

cd milvus/charts/mysql

Edit values.yaml and set the name of the StorageClass you created:

vim values.yaml

    persistence:
      enabled: true
      ## database data Persistent Volume Storage Class
      ## If defined, storageClassName: <storageClass>
      ## If set to "-", storageClassName: "", which disables dynamic provisioning
      ## If undefined (the default) or set to null, no storageClassName spec is
      ##   set, choosing the default provisioner.  (gp2 on AWS, standard on
      ##   GKE, AWS & OpenStack)
      ##
      storageClass: "milvus-mysql"   # uncomment this line and fill in your StorageClass name
      accessMode: ReadWriteOnce
      size: 8Gi
      annotations: {}

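5.2 Modify the Milvus configuration

In the top-level milvus/values.yaml, adjust the insert buffer size and the amount of CPU memory used for caching:

    cache:
      insertBufferSize: 5GB   # maximum insert buffer size allowed (GB)
      cacheSize: 40GB         # CPU memory used for caching (GB)

Enable Prometheus monitoring:

    metrics:
      enabled: true                            # enable metrics; the default is false
      address: pushgateway-svc.arms-prom.svc   # Pushgateway address; Pushgateway is not installed by default and must be installed separately
      port: 9091                               # Pushgateway port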

Configure Milvus storage

Add storage for the Milvus DB:

    persistence:
      mountPath: "/var/lib/milvus/db"
      ## If true, alertmanager will create/use a Persistent Volume Claim
      ## If false, use emptyDir
      ##
      enabled: true   # use a persistent volume to store the data
      annotations: {}
      #  helm.sh/resource-policy: keep
      persistentVolumeClaim:
        existingClaim: ""
        ## milvus data Persistent Volume Storage Class
        ## If defined, storageClassName: <storageClass>
        ## If set to "-", storageClassName: "", which disables dynamic provisioning
        ## If undefined (the default) or set to null, no storageClassName spec is
        ##   set, choosing the default provisioner.
        ##
        storageClass: milvus-data-sc   # name of the StorageClass created earlier
        accessModes: ReadWriteMany
        size: 50Gi
        subPath: ""

Add storage for the Milvus logs:

    logsPersistence:
      mountPath: "/var/lib/milvus/logs"
      ## If true, alertmanager will create/use a Persistent Volume Claim
      ## If false, use emptyDir
      ##
      enabled: false   # enable log persistence only if you need it
      annotations: {}
      #  helm.sh/resource-policy: keep
      persistentVolumeClaim:
        existingClaim: ""
        ## milvus logs Persistent Volume Storage Class
        ## If defined, storageClassName: <storageClass>
        ## If set to "-", storageClassName: "", which disables dynamic provisioning
        ## If undefined (the default) or set to null, no storageClassName spec is
        ##   set, choosing the default provisioner.
        ##
        storageClass:   # name of the StorageClass created earlier (only needed when enabled is true)
        accessModes: ReadWriteMany
        size: 5Gi
        subPath: ""

Modify the Milvus resource limits:

    image:
      repository: milvusdb/milvus
      tag: 1.1.1-cpu-d061621-330cc6
      pullPolicy: IfNotPresent
      ## Optionally specify an array of imagePullSecrets.
      ## Secrets must be manually created in the namespace.
      ## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/
      ##
      # pullSecrets:
      #   - myRegistryKeySecretName
      #resources: {}
      resources:   # CPU and memory requests/limits for the Milvus container
        limits:
          memory: "50Gi"
          cpu: "20.0"
        requests:
          memory: "50Gi"
          cpu: "20.0"
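Before installing, it can help to confirm that the edited values still render. The sketch below uses helm lint and helm template, both of which work locally without touching the cluster. The run helper and the DRY_RUN switch are illustrative additions, and the namespace milvus-1-1 is taken from the output shown later in this guide.

```shell
#!/usr/bin/env bash
# Sketch: validate the edited chart locally before installing it.
# DRY_RUN=1 (the default here) only prints the commands; DRY_RUN=0 executes them.
set -eu

CHART_DIR="./milvus"
RELEASE="milvus"
NAMESPACE="milvus-1-1"

# Hypothetical helper: print a command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

check_chart() {
  # Static checks on the chart structure and values.
  run helm lint "$CHART_DIR"
  # Render the manifests locally so the storage and resource settings can be inspected.
  run helm template "$RELEASE" "$CHART_DIR" --namespace "$NAMESPACE"
}

check_chart
```

With DRY_RUN=0, piping the rendered output through grep for storageClassName or resources is a quick way to see whether the values from step 5 took effect.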

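6. Run the installation

Go back to the top-level directory (the one that contains the milvus folder) and install the chart into the namespace used in the rest of this guide (create the namespace first if it does not exist):

helm install milvus ./milvus -n milvus-1-1

The output below was captured after later upgrades of the same release (note REVISION: 4), which is why it reports the release as upgraded rather than installed: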

Release "milvus" has been upgraded. Happy Helming!

NAME: milvus

LAST DEPLOYED: Mon Mar 7 19:33:13 2022

NAMESPACE: milvus-1-1

STATUS: deployed

REVISION: 4

TEST SUITE: None

NOTES:

The Milvus server can be accessed via port 19530 on the following DNS name from within your cluster:

milvus.milvus-1-1.svc.cluster.local

Get the Milvus server URL by running these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace milvus-1-1 -l "app.kubernetes.io/name=milvus,app.kubernetes.io/instance=milvus,component=standalone" -o jsonpath="{.items[0].metadata.name}")

kubectl --namespace milvus-1-1 port-forward $POD_NAME 19530 19121

For more information on running Milvus, visit:

https://milvus.io/

7. Wait for the services to be created

Once both pods are in the Running state, the service has started normally:

[root@test:/data/test ]# kubectl get all -n milvus-1-1

NAME READY STATUS RESTARTS AGE

pod/milvus-mysql-6bcd5f9bcd-hvgcc 1/1 Running 0 4h11m

pod/milvus-writable-5dcc958d9c-t8mgh 1/1 Running 0 95s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/milvus ClusterIP 192.168.88.153 <none> 19530/TCP,19121/TCP 2d18h

service/milvus-mysql ClusterIP 192.168.5.145 <none> 3306/TCP 2d18h

service/milvus-proxy LoadBalancer 192.168.5.134 47.100.78.138 19530:30051/TCP 8h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/milvus-mysql 1/1 1 1 2d18h

deployment.apps/milvus-writable 1/1 1 1 2d18h

NAME DESIRED CURRENT READY AGE

replicaset.apps/milvus-mysql-5d6f76758c 0 0 0 2d18h

replicaset.apps/milvus-mysql-5f85dbc785 0 0 0 4h11m

replicaset.apps/milvus-mysql-6bcd5f9bcd 1 1 1 4h11m

replicaset.apps/milvus-writable-56998fdb66 0 0 0 6h26m

replicaset.apps/milvus-writable-575d74dd98 0 0 0 8h

replicaset.apps/milvus-writable-5c5f4887c 0 0 0 2d18h

replicaset.apps/milvus-writable-5dcc958d9c 1 1 1 95s

replicaset.apps/milvus-writable-658546dbd8 0 0 0 2d18h

replicaset.apps/milvus-writable-759bd5967c 0 0 0 7h46m

replicaset.apps/milvus-writable-75f69c9488 0 0 0 144m

replicaset.apps/milvus-writable-8464c866f9 0 0 0 7h47m

replicaset.apps/milvus-writable-8474f5d754 0 0 0 89m

replicaset.apps/milvus-writable-fc8d5c54b 0 0 0 2d18h

NAME AGE

containernetworkfilesystem.storage.alibabacloud.com/default-cnfs-nas-2dcf9ba-20211020180121 138d
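Instead of polling kubectl get all by hand, the wait can be scripted. This is a minimal sketch that assumes the deployment names milvus-mysql and milvus-writable and the namespace milvus-1-1 from the output above; the run helper, the DRY_RUN switch, and the 300-second timeout are illustrative choices.

```shell
#!/usr/bin/env bash
# Sketch: block until both deployments from the listing above are rolled out.
# DRY_RUN=1 (the default here) only prints the commands; DRY_RUN=0 executes them.
set -eu

NAMESPACE="milvus-1-1"

# Hypothetical helper: print a command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

wait_for_milvus() {
  for deployment in milvus-mysql milvus-writable; do
    # rollout status returns non-zero if the rollout does not finish in time.
    run kubectl rollout status "deployment/$deployment" -n "$NAMESPACE" --timeout=300s
  done
}

wait_for_milvus
```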

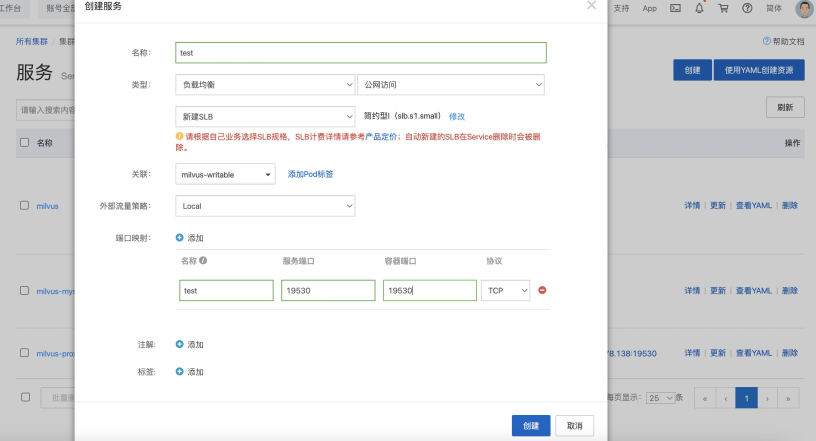

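8. (Optional) Configure external access. The test environment needs to call the Milvus service directly from outside the cluster, so the service is exposed through a layer-4 SLB proxy.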
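The listing in step 7 shows a LoadBalancer service named milvus-proxy on port 19530, but its manifest is not given. The sketch below is one way such a Service could be written. The selector labels are an assumption based on the labels printed in the Helm NOTES, so check them against your pods with kubectl get pods --show-labels before applying.

```shell
#!/usr/bin/env bash
# Sketch: write a LoadBalancer Service that exposes the Milvus gRPC port.
# ASSUMPTION: the selector labels match the Milvus pods in your release.
set -eu

cat > milvus-proxy-svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: milvus-proxy
  namespace: milvus-1-1
spec:
  type: LoadBalancer        # on ACK this typically provisions an SLB instance
  selector:                 # assumed labels; verify before applying
    app.kubernetes.io/name: milvus
    app.kubernetes.io/instance: milvus
  ports:
    - name: milvus
      protocol: TCP
      port: 19530
      targetPort: 19530
EOF

echo "Wrote milvus-proxy-svc.yaml. Review it, then run:"
echo "  kubectl apply -f milvus-proxy-svc.yaml"
```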

Installation complete.

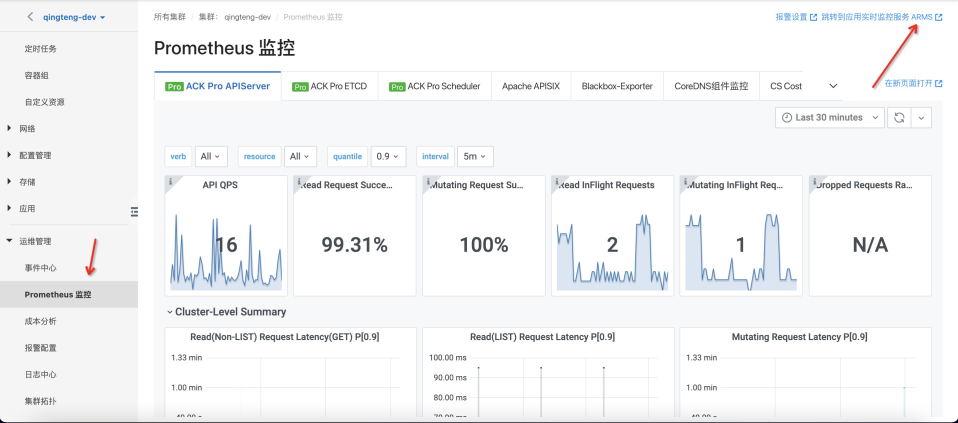

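Integrating Monitoring

1. Install Pushgateway. Milvus pushes its metrics to a Pushgateway, and Alibaba Cloud ACK does not ship one, so you need to install and configure it yourself.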

pushgateway.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: arms-prom
      name: pushgateway
      labels:
        app: pushgateway
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9091"   # must match the container port below
    spec:
      replicas: 1
      revisionHistoryLimit: 0
      selector:
        matchLabels:
          app: pushgateway
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: "25%"
          maxUnavailable: "25%"
      template:
        metadata:
          name: pushgateway
          labels:
            app: pushgateway
        spec:
          containers:
            - name: pushgateway
              image: prom/pushgateway:v0.7.0
              imagePullPolicy: IfNotPresent
              livenessProbe:
                initialDelaySeconds: 600
                periodSeconds: 10
                successThreshold: 1
                failureThreshold: 10
                httpGet:
                  path: /
                  port: 9091
              ports:
                - name: "app-port"
                  containerPort: 9091
              resources:
                limits:
                  memory: "1000Mi"
                  cpu: 1
                requests:
                  memory: "1000Mi"
                  cpu: 1
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: pushgateway-svc
      namespace: arms-prom
      labels:
        app: pushgateway
    spec:
      selector:
        app: pushgateway
      ports:
        - name: pushgateway
          port: 9091
          targetPort: 9091

1.1 Create the service

## Create the Pushgateway service

kubectl apply -f pushgateway.yaml

## Check whether the service has started

[root@test:/data/test ]# kubectl get pod -n arms-prom | grep pushgateway

pushgateway-6c796fb64b-92qk4 1/1 Running 2 6h50m
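A running pod does not prove that pushes are accepted, so a quick smoke test can help. The sketch below posts one sample to the Pushgateway push endpoint (/metrics/job/<job>). It assumes the service has been made reachable locally, for example with kubectl -n arms-prom port-forward svc/pushgateway-svc 9091:9091; the metric name, the job name, and the RUN_ON_CLUSTER switch are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: push one test sample to the Pushgateway to confirm it accepts data.
# ASSUMPTION: the Pushgateway is reachable at PUSHGATEWAY_URL (e.g. via port-forward).
set -eu

PUSHGATEWAY_URL="${PUSHGATEWAY_URL:-http://127.0.0.1:9091}"

# Build the push endpoint for a job name, tolerating a trailing slash on the base URL.
push_url() {
  printf '%s/metrics/job/%s' "${PUSHGATEWAY_URL%/}" "$1"
}

# Send a single sample in the Prometheus text exposition format.
push_test_metric() {
  printf 'milvus_guide_smoke_test 1\n' |
    curl --silent --show-error --fail --data-binary @- "$(push_url "$1")"
}

if [ "${RUN_ON_CLUSTER:-0}" = "1" ]; then
  push_test_metric guide_smoke_test
  echo "pushed test sample to $(push_url guide_smoke_test)"
else
  echo "set RUN_ON_CLUSTER=1 to push a test sample to $(push_url guide_smoke_test)"
fi
```

The test group can be removed afterwards by sending an HTTP DELETE request to the same URL.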

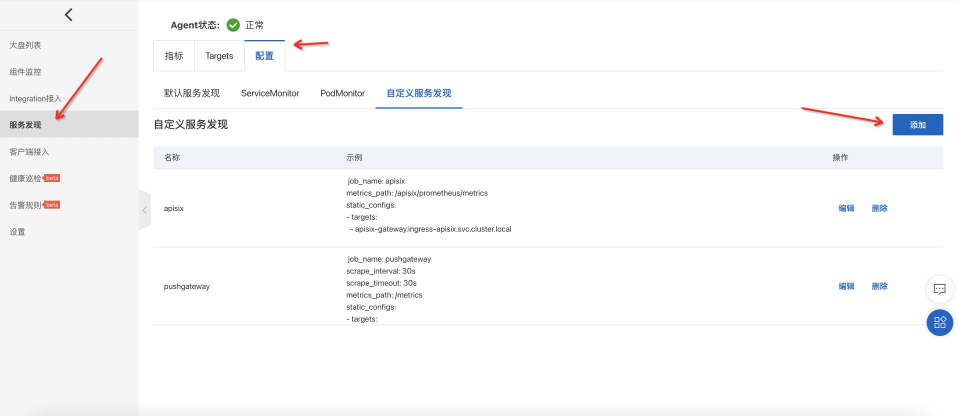

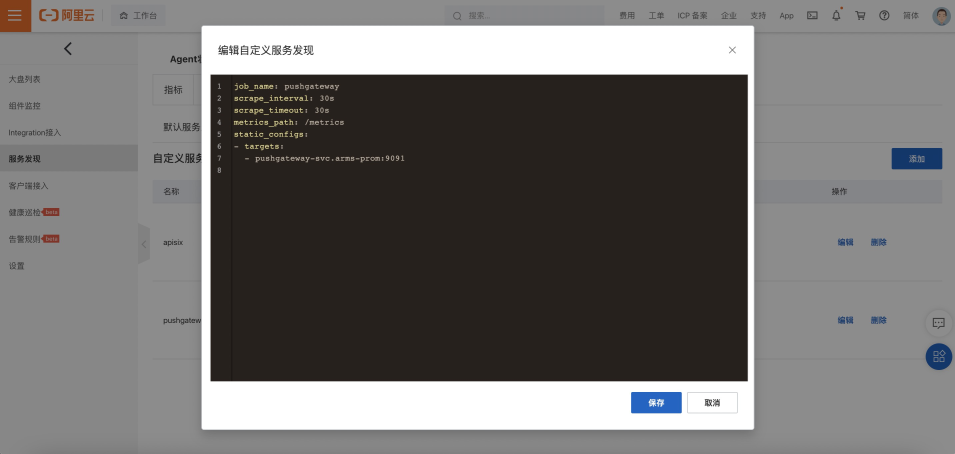

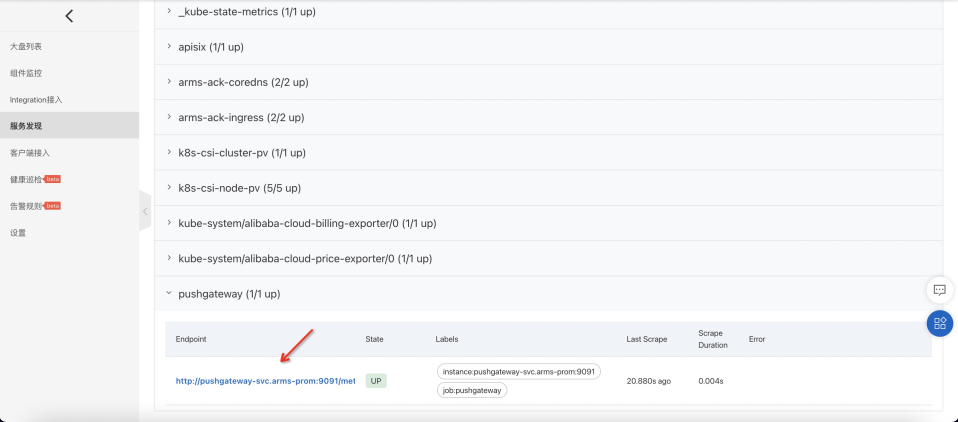

2. Configure Prometheus custom service discovery

    job_name: pushgateway        # job name
    scrape_interval: 30s         # scrape interval
    scrape_timeout: 30s          # scrape timeout
    metrics_path: /metrics       # metrics path
    static_configs:
      - targets:
          - pushgateway-svc.arms-prom:9091   # Pushgateway address
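For a self-managed Prometheus rather than the managed console, the same job would sit under scrape_configs. The sketch below writes such a fragment to a file. The honor_labels setting is an addition not present in the original configuration; the Pushgateway documentation recommends it so that the job and instance labels of pushed metrics are preserved. The file name is arbitrary.

```shell
#!/usr/bin/env bash
# Sketch: the Pushgateway scrape job in standard prometheus.yml form.
# ASSUMPTION: honor_labels is added here; it is not part of the original config.
set -eu

cat > pushgateway-scrape.yaml <<'EOF'
scrape_configs:
  - job_name: pushgateway
    honor_labels: true          # keep the labels attached by the pushing client
    scrape_interval: 30s
    scrape_timeout: 30s         # must not exceed scrape_interval
    metrics_path: /metrics
    static_configs:
      - targets:
          - pushgateway-svc.arms-prom:9091
EOF

echo "Wrote pushgateway-scrape.yaml"
```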

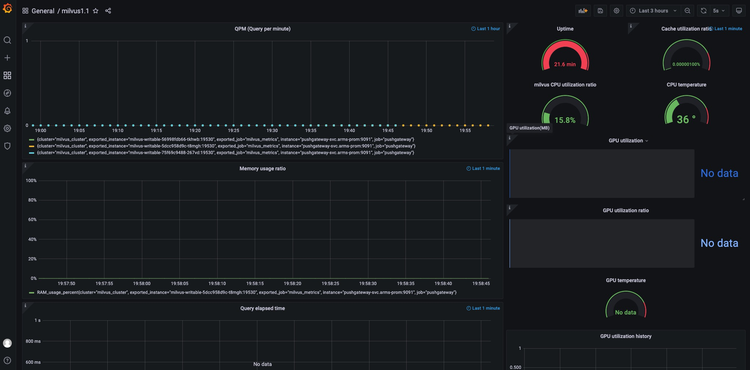

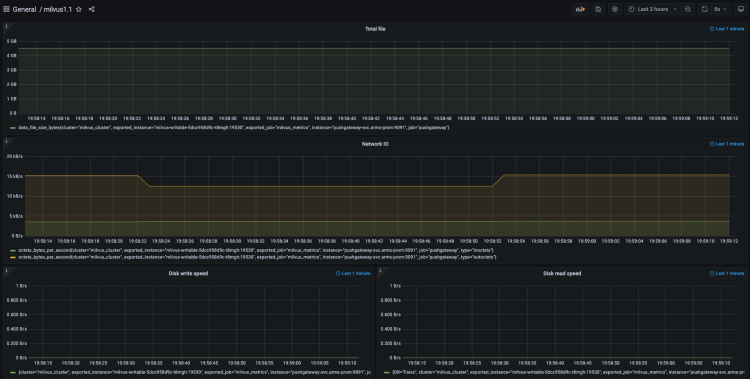

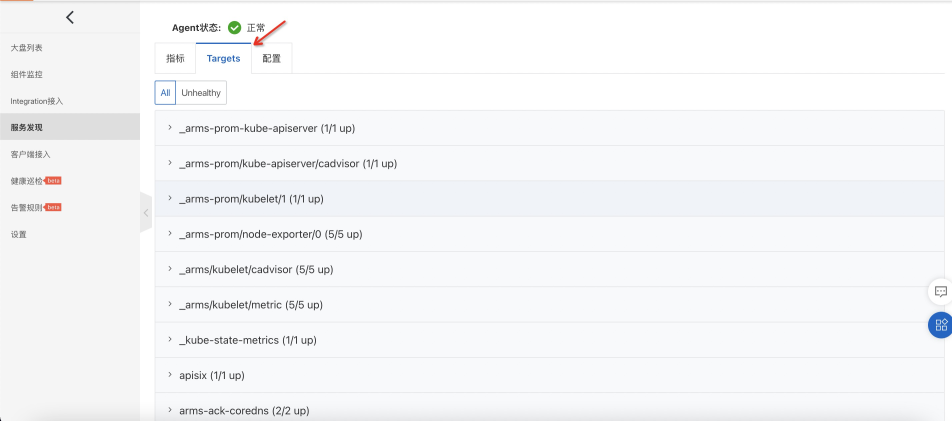

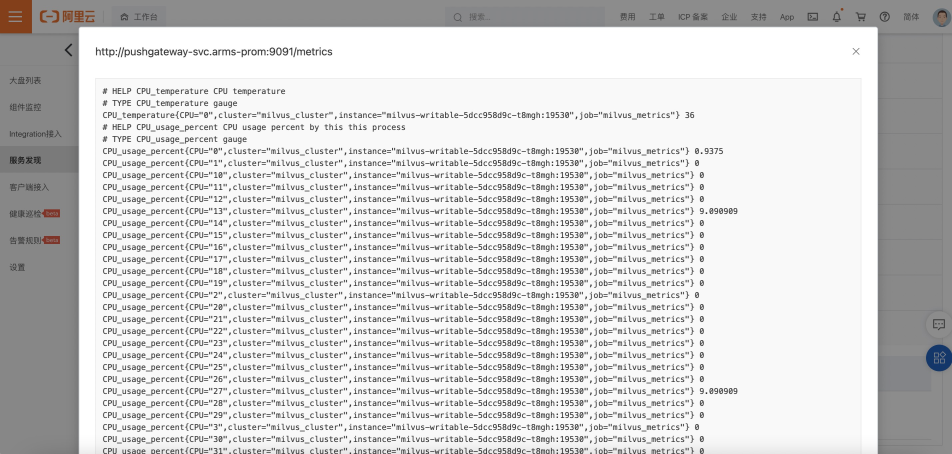

3. Verify the data
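One way to check that data is arriving is to read the Pushgateway's own /metrics page and look for Milvus samples. The sketch below assumes the Pushgateway is reachable at PUSHGATEWAY_URL (for example through a port-forward) and that the pushed series mention "milvus" in their name or labels; that search pattern is a guess and may need adjusting for your deployment.

```shell
#!/usr/bin/env bash
# Sketch: list samples on the Pushgateway that look like they came from Milvus.
# ASSUMPTIONS: PUSHGATEWAY_URL is reachable; PATTERN matches the pushed series.
set -eu

PUSHGATEWAY_URL="${PUSHGATEWAY_URL:-http://127.0.0.1:9091}"
PATTERN="${PATTERN:-milvus}"   # assumed search pattern

# Keep only sample lines (drop "# HELP" / "# TYPE" comments) that match the pattern.
filter_samples() {
  grep -v '^#' | grep -i -- "$1" || true
}

if [ "${RUN_ON_CLUSTER:-0}" = "1" ]; then
  curl --silent --show-error --fail "${PUSHGATEWAY_URL%/}/metrics" |
    filter_samples "$PATTERN" | head -n 20
else
  echo "set RUN_ON_CLUSTER=1 to query ${PUSHGATEWAY_URL%/}/metrics"
fi
```

An empty result means either that Milvus has not pushed anything yet or that the pattern does not match the series names it uses.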

4. Add Grafana dashboards

Grafana configuration file

Import the file into Grafana.