[Machine Learning] Logistic Regression (Binary Classification)


Types of perceptrons

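- Discrete perceptron: the predicted output is only ever 0 or 1.

- Continuous perceptron (logistic classifier): the predicted output can be any number between 0 and 1. Ideally, points labeled 0 receive outputs close to 0 and points labeled 1 receive outputs close to 1.

- Logistic regression algorithm: the algorithm used to train a logistic classifier.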
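The two kinds of perceptron differ only in the activation applied to the score \(z = \vec{w} \cdot \vec{x} + b\). The minimal sketch below is an illustration, not part of the original source code. It assumes NumPy and a step activation for the discrete perceptron. The function names `step` and `sigmoid` and the sample scores are hypothetical.

```python
import numpy as np

def step(z):
    # discrete perceptron (assumed step activation): the output is exactly 0 or 1
    return np.where(z >= 0, 1, 0)

def sigmoid(z):
    # continuous perceptron (logistic classifier): the output lies strictly between 0 and 1
    return 1 / (1 + np.exp(-z))

scores = np.array([-4.0, -0.5, 0.0, 0.5, 4.0])  # hypothetical scores z = w·x + b
discrete = step(scores)
continuous = sigmoid(scores)
print(discrete)                 # [0 0 1 1 1]
print(np.round(continuous, 3))  # values between 0 and 1 that increase with z
```

The step output discards how far a point is from the boundary. The sigmoid output keeps that information, which is what lets it be read as a probability.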

The sigmoid (logistic) function

- Sigmoid function:

\[\begin{aligned}
& g(z) = \frac{1}{1 + e^{-z}},\ z \in (-\infty, +\infty),\ 0 < g(z) < 1 \\
& \text{when } z \in (-\infty, 0),\ 0 < g(z) < 0.5 \\
& \text{when } z \in [0, +\infty),\ 0.5 \leq g(z) < 1
\end{aligned}\]

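- Decision boundary: the set of points where \(z = 0\), i.e. where \(g(z) = 0.5\):

\[\begin{aligned}
& \text{Linear decision boundary: } z = \vec{w} \cdot \vec{x} + b = 0 \\
& \text{Nonlinear decision boundary (example): } z = x_1^2 + x_2^2 - 1 = 0
\end{aligned}\]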

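- Combining the sigmoid function with the linear decision-boundary function \(z = \vec{w} \cdot \vec{x} + b\) gives the model:

\[\begin{aligned}
& g(z) = \frac{1}{1 + e^{-z}} \\
& f_{\vec{w}, b}(\vec{x}) = \frac{1}{1 + e^{-(\vec{w} \cdot \vec{x} + b)}}
\end{aligned}\]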

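- Decision rule (\(\hat{y}\) denotes the predicted label): the model output \(a_1\) is read as the probability that the label is 1. The prediction is \(\hat{y} = 1\) if \(a_1 \geq 0.5\) (equivalently \(z \geq 0\)), and \(\hat{y} = 0\) otherwise:

\[\text{Probabilities: }
\begin{cases}
a_1 = f_{\vec{w}, b}(\vec{x}) = P(y = 1 \mid \vec{x}) \\
a_2 = 1 - a_1 = P(y = 0 \mid \vec{x})
\end{cases}\]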
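The four bullet items above (sigmoid, decision boundary, composed model, decision rule) can be tied together in one small sketch. It assumes NumPy and stacks samples as rows of a matrix. The helper names `sigmoid`, `f_wb` and `predict`, and the parameters `w = [1, 1]`, `b = -3.5`, are hypothetical values chosen by hand, not learned. Writing the sigmoid through \(e^{-|z|}\) is an extra implementation choice made here so that `np.exp` never overflows.

```python
import numpy as np

def sigmoid(z):
    # g(z) = 1 / (1 + e^{-z}); e^{-|z|} lies in (0, 1], so np.exp never overflows
    z = np.asarray(z, dtype=float)
    ez = np.exp(-np.abs(z))
    return np.where(z >= 0, 1 / (1 + ez), ez / (1 + ez))

def f_wb(X, w, b):
    # f_{w,b}(x) = g(w·x + b) for every row x of X
    return sigmoid(X @ w + b)

def predict(X, w, b):
    # a1 = P(y = 1 | x), a2 = 1 - a1 = P(y = 0 | x); predict 1 when a1 >= 0.5
    a1 = f_wb(X, w, b)
    return a1, 1 - a1, (a1 >= 0.5).astype(int)

# sigmoid properties: g(z) < 0.5 for z < 0, g(z) >= 0.5 for z >= 0, no overflow
z = np.array([-1000.0, -2.0, 0.0, 2.0, 1000.0])
g = sigmoid(z)
print(g)

# decision rule with hypothetical parameters (linear boundary x1 + x2 = 3.5)
X = np.array([[0.0, 0.0], [3.0, 3.0], [1.0, 2.0]])
w, b = np.array([1.0, 1.0]), -3.5
a1, a2, y_hat = predict(X, w, b)
print(y_hat)  # [0 1 0]

# nonlinear boundary example: z = x1^2 + x2^2 - 1 >= 0 means on or outside the unit circle
P = np.array([[0.2, 0.3], [1.5, 0.0]])
outside = (sigmoid(P[:, 0] ** 2 + P[:, 1] ** 2 - 1) >= 0.5).astype(int)
print(outside)  # [0 1]
```

Thresholding \(a_1\) at 0.5 gives exactly the same labels as checking the sign of \(z\). The decision boundary is therefore wherever \(z = 0\).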

Cost/loss function: the log loss function

- One training sample: \(\vec{x}^{(i)} = (x_1^{(i)}, x_2^{(i)}, \dots, x_n^{(i)})\) with label \(y^{(i)}\)

- Total number of training samples: \(m\)

- Log loss function:

\[\begin{aligned}
L(f_{\vec{w}, b}(\vec{x}^{(i)}), y^{(i)}) &=
\begin{cases}
-\ln [f_{\vec{w}, b}(\vec{x}^{(i)})], & y^{(i)} = 1 \\
-\ln [1 - f_{\vec{w}, b}(\vec{x}^{(i)})], & y^{(i)} = 0
\end{cases} \\
&= -y^{(i)} \ln [f_{\vec{w}, b}(\vec{x}^{(i)})] - [1 - y^{(i)}] \ln [1 - f_{\vec{w}, b}(\vec{x}^{(i)})] \\
&= -y^{(i)} \ln a_1^{(i)} - [1 - y^{(i)}] \ln a_2^{(i)}
\end{aligned}\]

- Cost function:

\[\begin{aligned}
J(\vec{w}, b) &= \frac{1}{m} \sum_{i=1}^{m} L(f_{\vec{w}, b}(\vec{x}^{(i)}), y^{(i)}) \\
&= -\frac{1}{m} \sum_{i=1}^{m} \bigg(y^{(i)} \ln [f_{\vec{w}, b}(\vec{x}^{(i)})] + [1 - y^{(i)}] \ln [1 - f_{\vec{w}, b}(\vec{x}^{(i)})] \bigg) \\
&= -\frac{1}{m} \sum_{i=1}^{m} \bigg(y^{(i)} \ln a_1^{(i)} + [1 - y^{(i)}] \ln a_2^{(i)} \bigg)
\end{aligned}\]
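The sketch below checks that the piecewise and single-expression forms of the log loss agree. It also evaluates the cost \(J(\vec{w}, b)\) in vectorized form on the training set used in this article's code implementation. It assumes NumPy; the helper names and the hand-picked parameters `w = [1, 1]`, `b = -3.5` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def loss_cases(f, y):
    # piecewise form: -ln f if y = 1, -ln(1 - f) if y = 0
    return -np.log(f) if y == 1 else -np.log(1 - f)

def loss_combined(f, y):
    # single-expression form: -y ln f - (1 - y) ln(1 - f)
    return -y * np.log(f) - (1 - y) * np.log(1 - f)

def cost(X, y, w, b):
    # J(w, b) = -(1/m) * sum( y ln a1 + (1 - y) ln a2 ), with a1 = f_{w,b}(x), a2 = 1 - a1
    m = X.shape[0]
    a1 = sigmoid(X @ w + b)
    a2 = 1 - a1
    return -np.sum(y * np.log(a1) + (1 - y) * np.log(a2)) / m

# the two loss forms agree for both labels
for f in (0.1, 0.5, 0.9):
    for label in (0, 1):
        assert np.isclose(loss_cases(f, label), loss_combined(f, label))

# training set from the code implementation in this article
X = np.array([[1, 0], [0, 2], [1, 1], [1, 2], [1, 3], [2, 2], [2, 3], [3, 2]], dtype=float)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)

# at w = 0, b = 0 every a1 is 0.5, so J = ln 2 ≈ 0.693
print(cost(X, y, np.zeros(2), 0.0))
# hypothetical parameters that separate the two classes give a lower cost
print(cost(X, y, np.array([1.0, 1.0]), -3.5))
```

A confident wrong prediction is penalized heavily: with \(f = 0.9\), the loss is about 0.105 when \(y = 1\) but about 2.303 when \(y = 0\).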

Gradient descent algorithm

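- \(\alpha\): the learning rate. It controls the step size of each gradient-descent update as the parameters move toward the minimum of the cost function.

- Gradient descent for logistic regression (the term multiplied by \(\alpha\) is the partial derivative \(\partial J / \partial w_j\) or \(\partial J / \partial b\)):

\[\begin{aligned}
& \text{repeat } \{ \\
& \quad tmp\_w_1 = w_1 - \alpha \frac{1}{m} \sum^{m}_{i=1} [f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}] x_1^{(i)} \\
& \quad tmp\_w_2 = w_2 - \alpha \frac{1}{m} \sum^{m}_{i=1} [f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}] x_2^{(i)} \\
& \quad \dots \\
& \quad tmp\_w_n = w_n - \alpha \frac{1}{m} \sum^{m}_{i=1} [f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}] x_n^{(i)} \\
& \quad tmp\_b = b - \alpha \frac{1}{m} \sum^{m}_{i=1} [f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}] \\
& \quad \text{simultaneously update all parameters: } w_j = tmp\_w_j \ (j = 1, \dots, n),\ b = tmp\_b \\
& \} \text{ until convergence}
\end{aligned}\]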
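The update rule above can be sketched in vectorized NumPy form. The sketch also checks the analytic partial derivatives against central finite differences of \(J\), a standard way to confirm a gradient formula. The helper names, the evaluation point, `alpha = 0.1` and `iters = 1000` are assumptions for illustration. The training set is the one used in this article's code implementation.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(X, y, w, b):
    # log-loss cost J(w, b), averaged over the m samples
    a1 = sigmoid(X @ w + b)
    return -np.mean(y * np.log(a1) + (1 - y) * np.log(1 - a1))

def gradient(X, y, w, b):
    # dJ/dw_j = (1/m) * sum_i (f(x_i) - y_i) * x_ij,  dJ/db = (1/m) * sum_i (f(x_i) - y_i)
    m = X.shape[0]
    err = sigmoid(X @ w + b) - y
    return X.T @ err / m, np.sum(err) / m

def gradient_descent(X, y, w, b, alpha=0.1, iters=1000):
    for _ in range(iters):
        dj_dw, dj_db = gradient(X, y, w, b)          # both computed from the old (w, b)
        w, b = w - alpha * dj_dw, b - alpha * dj_db  # simultaneous update
    return w, b

X = np.array([[1, 0], [0, 2], [1, 1], [1, 2], [1, 3], [2, 2], [2, 3], [3, 2]], dtype=float)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)

# compare the analytic gradient with central finite differences at an arbitrary point
w0, b0, h = np.array([0.3, -0.2]), 0.1, 1e-6
dj_dw, dj_db = gradient(X, y, w0, b0)
num_dw = np.array([(cost(X, y, w0 + h * e, b0) - cost(X, y, w0 - h * e, b0)) / (2 * h)
                   for e in np.eye(2)])
num_db = (cost(X, y, w0, b0 + h) - cost(X, y, w0, b0 - h)) / (2 * h)
print(np.allclose(dj_dw, num_dw, atol=1e-6), np.isclose(dj_db, num_db, atol=1e-6))  # True True

w, b = gradient_descent(X, y, np.zeros(2), 0.0)
print(w, b, cost(X, y, w, b))
```

`X.T @ err / m` computes all \(n\) sums \(\frac{1}{m}\sum_i [f - y] x_j^{(i)}\) at once. It replaces a loop over samples and features.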

Regularized logistic regression

- Purpose of regularization: to reduce overfitting (which can also be mitigated by collecting more training data).

- Cost function (only \(w\) is regularized; \(b\) is not):

\[\begin{aligned}
J(\vec{w}, b) &= -\frac{1}{m} \sum_{i=1}^{m} \bigg(y^{(i)} \ln [f_{\vec{w}, b}(\vec{x}^{(i)})] + [1 - y^{(i)}] \ln [1 - f_{\vec{w}, b}(\vec{x}^{(i)})] \bigg) + \frac{\lambda}{2m} \sum^{n}_{j=1} w_j^2
\end{aligned}\]

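Here the second term is the regularization term. It penalizes large \(w_j\) and so pushes them toward smaller values. The larger \(\lambda\) is set, the smaller the learned \(w_j\) tend to be.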
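A minimal NumPy sketch of the regularized cost, assuming samples are stacked as rows of `X`. The function name `cost_reg` and the hand-picked `w`, `b` are hypothetical. The sketch makes two points from the text checkable: the regularization term grows with \(\lambda\), and it does not depend on \(b\).

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost_reg(X, y, w, b, lam):
    m = X.shape[0]
    a1 = sigmoid(X @ w + b)
    # log-loss part, identical to the unregularized cost
    data_term = -np.sum(y * np.log(a1) + (1 - y) * np.log(1 - a1)) / m
    # regularization term (lambda / 2m) * sum_j w_j^2; b is deliberately left out
    reg_term = lam / (2 * m) * np.sum(w ** 2)
    return data_term + reg_term

X = np.array([[1, 0], [0, 2], [1, 1], [1, 2], [1, 3], [2, 2], [2, 3], [3, 2]], dtype=float)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)
w, b = np.array([1.0, 1.0]), -3.5  # hypothetical parameters

for lam in (0.0, 1.0, 10.0):
    print(f"lambda = {lam:4.1f}: J = {cost_reg(X, y, w, b, lam):.4f}")
```

With \(m = 8\) and \(\sum_j w_j^2 = 2\), each unit of \(\lambda\) adds exactly \(2/16 = 0.125\) to \(J\), whatever the value of \(b\).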

- Gradient descent (differentiating the regularization term adds \(\frac{\lambda}{m} w_j\) to \(\partial J / \partial w_j\); the update for \(b\) is unchanged):

\[\begin{aligned}
& \text{repeat } \{ \\
& \quad tmp\_w_1 = w_1 - \alpha \bigg[\frac{1}{m} \sum^{m}_{i=1} [f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}] x_1^{(i)} + \frac{\lambda}{m} w_1\bigg] \\
& \quad tmp\_w_2 = w_2 - \alpha \bigg[\frac{1}{m} \sum^{m}_{i=1} [f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}] x_2^{(i)} + \frac{\lambda}{m} w_2\bigg] \\
& \quad \dots \\
& \quad tmp\_w_n = w_n - \alpha \bigg[\frac{1}{m} \sum^{m}_{i=1} [f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}] x_n^{(i)} + \frac{\lambda}{m} w_n\bigg] \\
& \quad tmp\_b = b - \alpha \frac{1}{m} \sum^{m}_{i=1} [f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}] \\
& \quad \text{simultaneously update all parameters: } w_j = tmp\_w_j \ (j = 1, \dots, n),\ b = tmp\_b \\
& \} \text{ until convergence}
\end{aligned}\]
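The regularized update can be sketched as below, again assuming NumPy and this article's training set. The names `train_reg`, `alpha = 0.1` and `iters = 5000` are assumptions for illustration. Training with several values of \(\lambda\) shows the effect described above: a larger \(\lambda\) yields a smaller \(\|\vec{w}\|\).

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def train_reg(X, y, lam, alpha=0.1, iters=5000):
    m, n = X.shape
    w, b = np.zeros(n), 0.0
    for _ in range(iters):
        err = sigmoid(X @ w + b) - y
        dj_dw = X.T @ err / m + (lam / m) * w        # regularization adds (lambda / m) * w_j
        dj_db = np.sum(err) / m                      # b is not regularized
        w, b = w - alpha * dj_dw, b - alpha * dj_db  # simultaneous update
    return w, b

X = np.array([[1, 0], [0, 2], [1, 1], [1, 2], [1, 3], [2, 2], [2, 3], [3, 2]], dtype=float)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)

norms = {}
for lam in (0.0, 1.0, 10.0):
    w, b = train_reg(X, y, lam)
    norms[lam] = np.linalg.norm(w)
    print(f"lambda = {lam:4.1f}: w = {np.round(w, 3)}, b = {b:.3f}, ||w|| = {norms[lam]:.3f}")
```

This training set is linearly separable. As a result, the unregularized weights (\(\lambda = 0\)) keep growing the longer training runs, while any \(\lambda > 0\) keeps them bounded.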

Code implementation

import numpy as np

import matplotlib.pyplot as plt

# sigmoid 函数 f = 1/(1+e^(-x))

def sigmoid(x):

return np.exp(x) / (1 + np.exp(x))

# 计算分数 z = w*x+b

def score(x, w, b):

return np.dot(w, x) + b

# 预测值 f_pred = sigmoid(z)

def prediction(x, w, b):

return sigmoid(score(x, w, b))

# 对数损失函数 f = -y*ln(a)-(1-y)*ln(1-a)

# 训练样本: (vec{X[i]}, y[i])

def log_loss(X_i, y_i, w, b):

pred = prediction(X_i, w, b)

return - y_i * np.log(pred) - (1-y_i) * np.log(1-pred)

# 计算损失函数 J(w, b)

# 训练样本: (vec{X[i]}, y[i])

def cost_function(X, y, w, b):

cost_sum = 0

m = X.shape[0]

for i in range(m):

cost_sum += log_loss(X[i], y[i], w, b)

return cost_sum / m

# 计算梯度值 dJ/dw, dJ/db

def compute_gradient(X, y, w, b):

m = X.shape[0] # 训练集的数据样本数(矩阵行数)

n = X.shape[1] # 每个数据样本的维度(矩阵列数,即特征个数)

dj_dw = np.zeros((n,))

dj_db = 0.0

for i in range(m): # 每个数据样本

pred = prediction(X[i], w, b)

for j in range(n): # 每个数据样本的维度

dj_dw[j] += (pred - y[i]) * X[i, j]

dj_db += (pred - y[i])

dj_dw = dj_dw / m

dj_db = dj_db / m

return dj_dw, dj_db

# 梯度下降算法,以得到决策边界(decision boundary)方程

def logistic_function(X, y, w, b, learning_rate=0.01, epochs=1000):

J_history = []

for epoch in range(epochs):

dj_dw, dj_db = compute_gradient(X, y, w, b)

# w 和 b 需同步更新

w = w - learning_rate * dj_dw

b = b - learning_rate * dj_db

J_history.append(cost_function(X, y, w, b)) # 记录每次迭代产生的误差值

return w, b, J_history

# 绘制线性方程的图像

def draw_line(w, b, xmin, xmax, title):

x = np.linspace(xmin, xmax)

y = w * x + b

plt.xlabel("feature-0", size=15)

plt.ylabel("feature-1", size=15)

plt.title(title, size=20)

plt.plot(x, y)

# 绘制散点图

def draw_scatter(x, y, title):

plt.xlabel("epoch", size=15)

plt.ylabel("error", size=15)

plt.title(title, size=20)

plt.scatter(x, y)

# 从这里开始执行

if __name__ == '__main__':

# 加载训练集

X_train = np.array([[1, 0], [0, 2], [1, 1], [1, 2], [1, 3], [2, 2], [2, 3], [3, 2]])

y_train = np.array([0, 0, 0, 0, 1, 1, 1, 1])

w = np.zeros((X_train.shape[1],)) # 权重

b = 0.0 # 偏置

learning_rate = 0.01 # 学习率

epochs = 10000 # 迭代次数

J_history = [] # 记录每次迭代产生的误差值

# 逻辑回归建立模型

w, b, J_history = logistic_function(X_train, y_train, w, b, learning_rate, epochs)

print(f"result: w = {np.round(w, 4)}, b = {b:0.4f}") # 打印结果

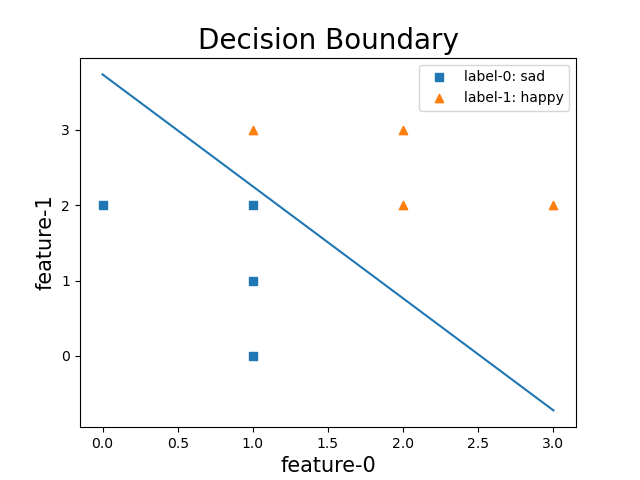

# 绘制迭代计算得到的决策边界(decision boundary)方程

# w[0] * x_feature0 + w[1] * x_feature1 + b = 0

# --> x_feature1 = -w[0]/w[1] * x_feature0 - b/w[1]

plt.figure(1)

draw_line(-w[0]/w[1], -b/w[1], 0.0, 3.0, "Decision Boundary")

plt.scatter(X_train[0:4, 0], X_train[0:4, 1], label="label-0: sad", marker='s') # 将训练集也表示在图中

plt.scatter(X_train[4:8, 0], X_train[4:8, 1], label="label-1: happy", marker='^') # 将训练集也表示在图中

plt.legend()

plt.show()

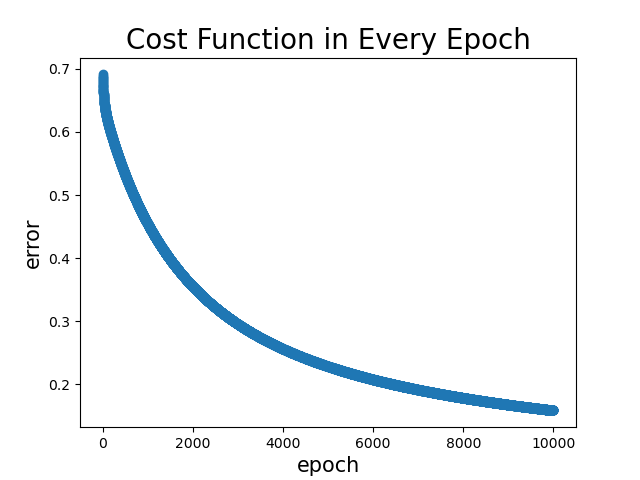

# 绘制误差值的散点图

plt.figure(2)

x_axis = list(range(0, epochs))

draw_scatter(x_axis, J_history, "Cost Function in Every Epoch")

plt.show()

运行结果

浙公网安备 33010602011771号
