from selenium import webdriver

from selenium.webdriver.firefox.options import Options

import datetime

import openpyxl

import re

import time

import os

def get_connect():

firefox_options = Options()

# 设置无头

firefox_options.headless = True

browser = webdriver.Firefox(firefox_options=firefox_options)

browser.get("https://www.dongchedi.com/auto/library/x-x-x-x-x-x-x-x-x-x-x")

browser.implicitly_wait(5)

return browser

def parse_car_data():

browser = get_connect()

# 汽车数据存储

car_data = []

# 品牌id

car_brand_id = 1

# 车系id

car_bank_id = 1

# 解析第一个ul里的li A B C... 并除去 不限和热门两个

lis = browser.find_elements_by_xpath("//div[@class='wrap tw-bg-white']//"

"div[@class='jsx-1042301898 item-wrap']//"

"div[@class='jsx-1042301898 item-list']//"

"ul[@class='jsx-975855502 tw-flex md:tw-flex-none']//"

"li")[2:]

# 获取汽车类型 轿车 SUV MPV

car_type_spans = browser.find_elements_by_xpath("//div[@class='wrap tw-bg-white']//"

"section//"

"div[@class='jsx-964070570 tw-flex']//"

"ul[@class='jsx-964070570 tw-flex-1']//"

"li//"

"a[@class='jsx-964070570']//"

"span[@class='jsx-964070570 series-type_car-name__3pZLx']")

index = 1

for li in lis:

li.click()

# 获取 A B C...下的所有品牌

brand_lis = browser.find_elements_by_xpath("//div[@class='wrap tw-bg-white']//"

"div[@class='jsx-1042301898 item-wrap']//"

"div[@class='jsx-1042301898 item-list']//"

"div[@class='jsx-1207899626 more-list-wrap']//"

"ul[" + str(index) + "]//li")

index += 1

for brand_li in brand_lis:

brand_li.click()

brand_name = brand_li.text

print("{}品牌数据开始爬取---------->".format(brand_name))

for car_type_span in car_type_spans:

car_type_span.click()

# 解决加载不全 1 拖动滚动条 2 窗口放大

browser.set_window_size(1000, 30000)

time.sleep(3)

car_type = car_type_span.text

# 获取车系数据

car_bank_lis = browser.find_elements_by_xpath("//div[@class='wrap tw-bg-white']//"

"section//"

"div[@class='jsx-3448462877 list-wrap']//"

"ul[@class='jsx-3448462877 list tw-grid tw-grid-cols-12 tw-gap-x-12 tw-gap-y-16']//"

"li")

car_bank_lis_len = len(car_bank_lis)

if car_bank_lis_len == 0:

continue

else:

for car_bank_li in range(1, car_bank_lis_len + 1):

print("第{}个车系数据开始爬取---------->".format(car_bank_id))

bank_name = browser.find_element_by_xpath("//div[@class='wrap tw-bg-white']//"

"section//"

"div[@class='jsx-3448462877 list-wrap']//"

"ul[@class='jsx-3448462877 list tw-grid tw-grid-cols-12 tw-gap-x-12 tw-gap-y-16']//"

"li[" + str(car_bank_li) + "]//"

"a[@class='jsx-2744368201 item-link']//"

"p[@class='jsx-2744368201 car-name']").text

car_price = browser.find_element_by_xpath("//div[@class='wrap tw-bg-white']//"

"section//"

"div[@class='jsx-3448462877 list-wrap']//"

"ul[@class='jsx-3448462877 list tw-grid tw-grid-cols-12 tw-gap-x-12 tw-gap-y-16']//"

"li[" + str(car_bank_li) + "]//"

"a[@class='jsx-2744368201 item-link']//"

"p[@class='jsx-2744368201 price']").text

car_image_src = browser.find_element_by_xpath("//div[@class='wrap tw-bg-white']//"

"section//"

"div[@class='jsx-3448462877 list-wrap']//"

"ul[@class='jsx-3448462877 list tw-grid tw-grid-cols-12 tw-gap-x-12 tw-gap-y-16']//"

"li[" + str(car_bank_li) + "]//"

"div[@class='jsx-2682525847 button-wrap tw-grid tw-grid-cols-12 tw-gap-x-3']//"

"a[2]").get_attribute("href")

car_data.append([car_brand_id, car_bank_id, brand_name, bank_name, car_type, car_price, car_image_src,get_time()])

car_bank_id += 1

print("{}品牌数据爬取结束---------->".format(brand_name))

car_brand_id += 1

print("数据开始保存---------->")

save_car_data(car_data)

print("数据保存成功---------->")

def format_car_data(data):

new_data = data.replace(" ", "")

return re.sub(u"\\(.*?\\)|\\{.*?}|\\[.*?]", "", new_data)

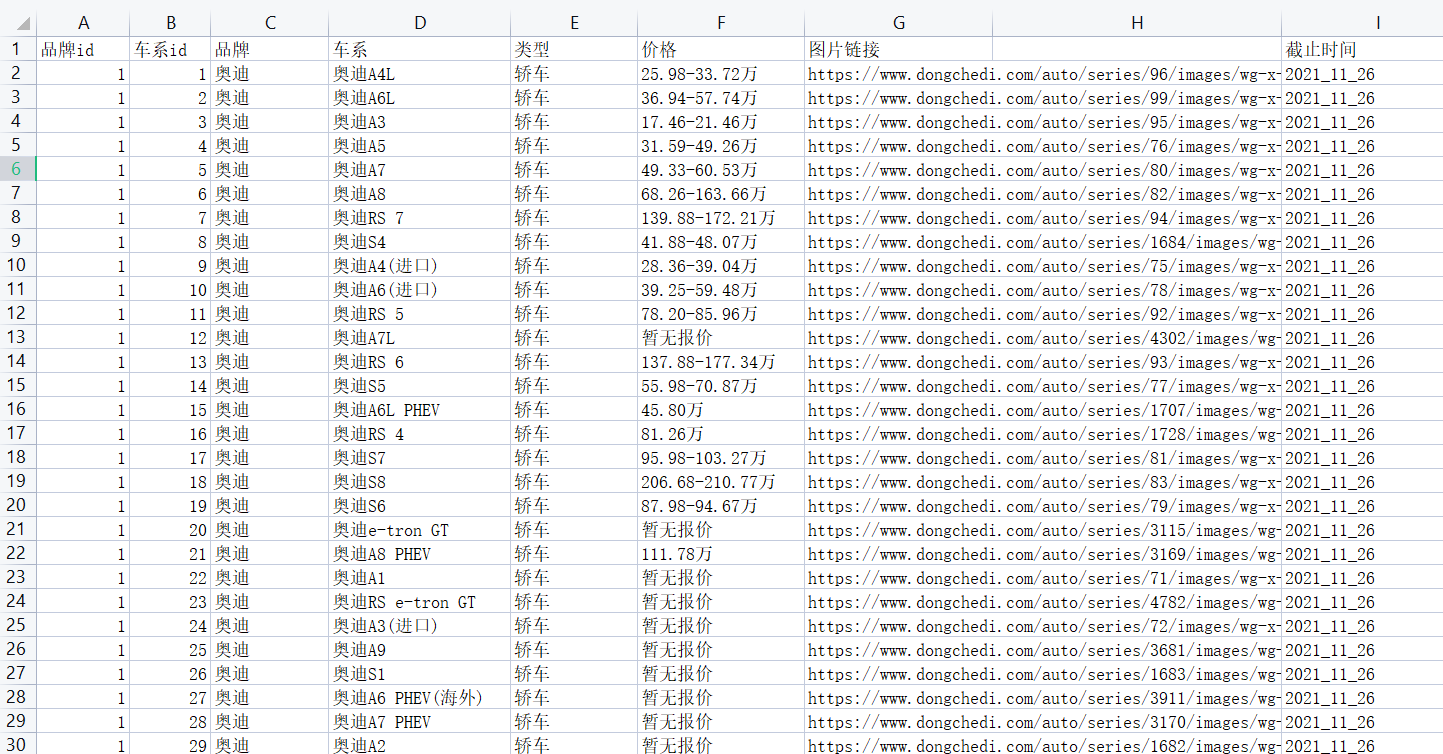

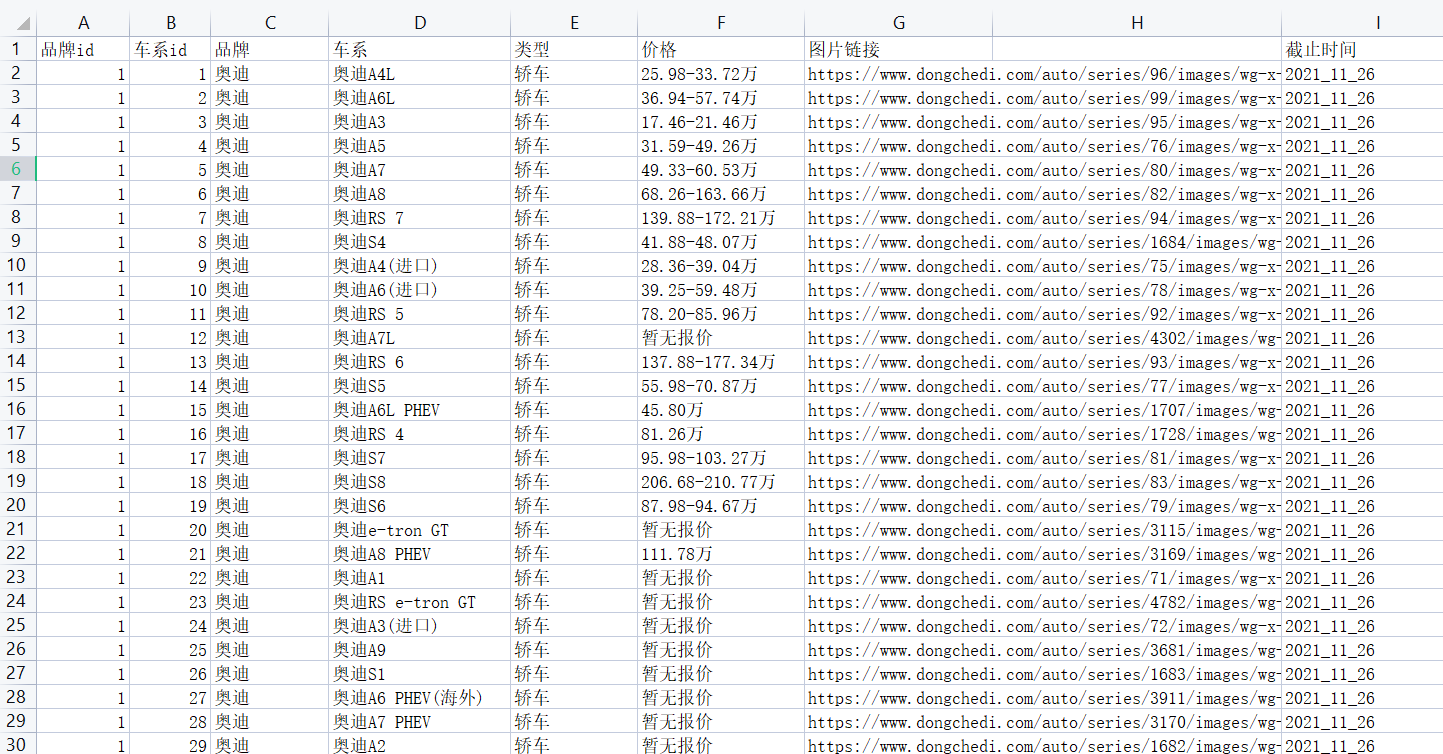

def save_car_data(car_data):

path = "../dataset/" + get_time() + "_car_data.xlsx"

if os.path.exists(path) is False:

wk = openpyxl.Workbook()

sheet = wk.active

header ='品牌id', '车系id', '品牌', '车系', '类型', '价格', '图片链接', '截止时间'

sheet.append(header)

wk.save(path)

if len(car_data) != 0:

wk = openpyxl.load_workbook(path)

sheet = wk.active

for item in car_data:

sheet.append(item)

wk.save(path)

def get_time():

return datetime.datetime.now().strftime("%Y_%m_%d")

def start():

parse_car_data()

if __name__ == '__main__':

start()