Scraping Real-Time World Epidemic Data with Python

I. Scraping Real-Time World Epidemic Data with Python

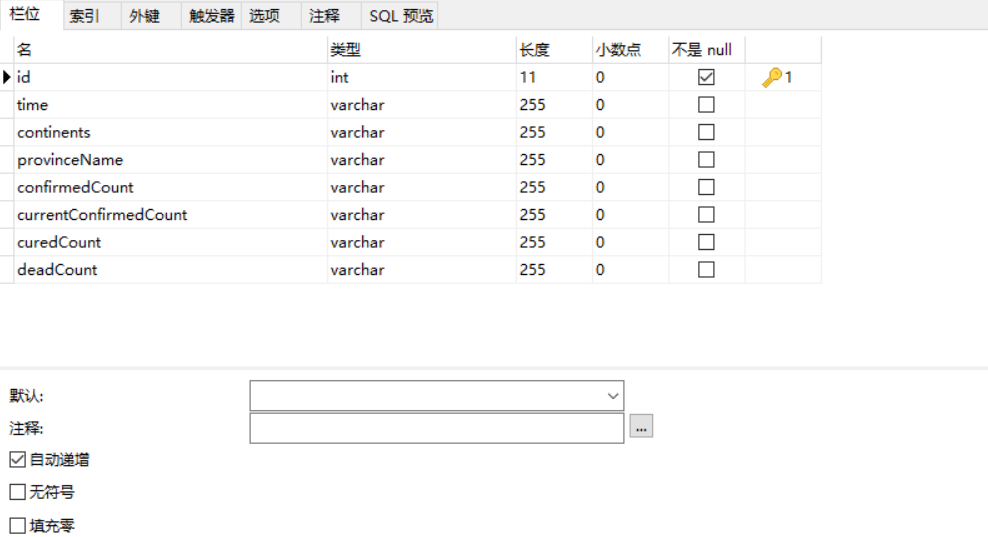

1. Table structure (MySQL)
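The table definition is not reproduced in the text. Judging from the INSERT statement in the code section, the `world` table holds an auto-increment key followed by seven data columns. The following is a minimal sketch under that assumption: the column names, column types, and the helper `create_world_table` are hypothetical and chosen only to match the order of the inserted values.

```python
# Hypothetical DDL for the `world` table. Only the table name and the
# number and order of the columns come from the INSERT statement;
# all column names and types are assumptions.
WORLD_TABLE_DDL = """
CREATE TABLE IF NOT EXISTS world (
    id INT AUTO_INCREMENT PRIMARY KEY,
    update_time DATETIME,
    continents VARCHAR(50),
    province_name VARCHAR(100),
    confirmed_count INT,
    current_confirmed_count INT,
    cured_count INT,
    dead_count INT
) DEFAULT CHARSET=utf8
"""


def create_world_table(db):
    """Create the table through an already opened DB-API connection."""
    cursor = db.cursor()
    try:
        cursor.execute(WORLD_TABLE_DDL)
        db.commit()
    finally:
        cursor.close()
```

With an `AUTO_INCREMENT` key, inserting `0` into `id` (as the script does) lets MySQL assign the next value, provided the `NO_AUTO_VALUE_ON_ZERO` SQL mode is not enabled.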

2. Code (data source: DXY, 丁香医生)

    import json
    import time

    import pymysql
    import requests
    from bs4 import BeautifulSoup


    def mes():
        # Request URL
        url = 'https://ncov.dxy.cn/ncovh5/view/pneumonia?from=timeline&isappinstalled=0'
        # Request headers (browser user agent)
        headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.87 Safari/537.36 SLBrowser/6.0.1.6181'}
        resp = requests.get(url, headers=headers)  # send the HTTP request
        content = resp.content.decode('utf-8')
        soup = BeautifulSoup(content, 'html.parser')
        listA = soup.find_all(name='script', attrs={"id": "getListByCountryTypeService2true"})
        account = str(listA)
        # Strip the JavaScript wrapper so that only the JSON array remains
        raw = account.replace('[<script id="getListByCountryTypeService2true">try { window.getListByCountryTypeService2true = ', '')
        raw = raw.replace('}catch(e){}</script>]', '')
        # raw = account[95:-21]
        messages_json = json.loads(raw)
        times = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time()))
        worldList = []
        for item in messages_json:
            continents = item['continents']
            provinceName = item['provinceName']
            confirmedCount = item['confirmedCount']
            currentConfirmedCount = item['currentConfirmedCount']
            curedCount = item['curedCount']
            deadCount = item['deadCount']
            worldList.append((times, continents, provinceName, confirmedCount,
                              currentConfirmedCount, curedCount, deadCount))
        insert(worldList)


    def insert(worldList):
        # Uses the module-level connection `db` created in the main block
        worldTuple = tuple(worldList)
        cursor = db.cursor()
        # The leading 0 fills the id column; the seven %s placeholders take one row each
        sql = "insert into world values (0,%s,%s,%s,%s,%s,%s,%s) "
        try:
            cursor.executemany(sql, worldTuple)
            print("Insert succeeded")
            db.commit()
        except Exception as e:
            print(e)
            db.rollback()
        cursor.close()


    # Connect to the database
    def connectDB():
        try:
            db = pymysql.connect(host='localhost', port=3306, user='root',
                                 password='123456', database='yiqing', charset='utf8')
            print("Database connection succeeded")
            return db
        except Exception as e:
            print(e)
            return None  # Python has no NULL; signal failure with None


    if __name__ == '__main__':
        db = connectDB()
        if db is not None:  # only scrape when the connection succeeded
            mes()
            db.close()
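The script above recovers the JSON by deleting an exact prefix and suffix from the `<script>` tag, which breaks as soon as the page changes its whitespace or wrapper. One possible alternative, shown here only as a sketch, locates the `window.getListByCountryTypeService2true =` assignment with a regular expression and lets `json.JSONDecoder.raw_decode` read the array that follows. The helper names `extract_country_list` and `to_rows` and the sample HTML are hypothetical, not part of the original script.

```python
import json
import re

# Matches the JavaScript assignment that precedes the JSON array.
MARKER = re.compile(r"window\.getListByCountryTypeService2true\s*=\s*")


def extract_country_list(html):
    """Return the list of country records embedded in the page source."""
    match = MARKER.search(html)
    if match is None:
        raise ValueError("country data script not found in page")
    # raw_decode parses one JSON value starting at the given index and
    # ignores whatever follows it (here: "}catch(e){}</script>").
    data, _end = json.JSONDecoder().raw_decode(html, match.end())
    return data


def to_rows(items, timestamp):
    """Build the 7-value tuples expected by the INSERT statement."""
    return [
        (timestamp, item["continents"], item["provinceName"],
         item["confirmedCount"], item["currentConfirmedCount"],
         item["curedCount"], item["deadCount"])
        for item in items
    ]


if __name__ == "__main__":
    # Made-up sample that mimics the structure of the real page.
    sample = ('<script id="getListByCountryTypeService2true">try { '
              'window.getListByCountryTypeService2true = [{"continents": "Asia", '
              '"provinceName": "Japan", "confirmedCount": 10, '
              '"currentConfirmedCount": 5, "curedCount": 4, "deadCount": 1}]'
              '}catch(e){}</script>')
    print(to_rows(extract_country_list(sample), "2020-01-01 00:00:00"))
```

The rows returned by `to_rows` have the same shape as `worldList`, so they could be passed to `insert` unchanged.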

3. Results